O11y Guide, Cloud-Native Observability Pitfalls: Sneaky, Sprawling Mess

Explore the sneaky, sprawling tooling mess in this final article of the series examining the common pitfalls of cloud-native observability.

Are you looking at your organization's efforts to enter or expand into the cloud-native landscape and feeling a bit daunted by the vast expanse of information surrounding cloud-native observability? When you're moving this fast with agile practices across your DevOps, SRE, and platform engineering teams, it's no wonder this can seem confusing.

Unfortunately, these choices have such a great impact on your business, your budgets, and the ultimate success of your cloud-native initiatives that hasty decisions up front quickly lead to big headaches down the road.

In the previous article, we looked at the pain we face when we are led down proprietary paths for our cloud-native observability solutions. In this final article in my series, I'll address the sneaky, sprawling mess that often develops in our cloud-native observability tooling landscape. By sharing these common pitfalls, I hope we can all learn from them.

It's always going to be a challenge to make the right decisions when solving the cloud-native observability stack puzzle for our organization. There are so many choices to consider: open versus proprietary, pillars versus phases, cost, and more.

All too often, this leads to a cloud-native landscape overflowing with observability tooling, along with the creeping feeling that we have lost control of our own destiny.

How Many Does It Take?

This story tells itself when we look at some of the recently published research on observability tooling. One report, published by the analyst firm ESG (a division of TechTarget), surveyed 357 IT, DevOps, and application development professionals responsible for infrastructure in their organizations. All of the respondents had some form of observability practice in place, and their answers revealed interesting insights into how organizations are being swamped by their tooling choices.

It's a given that cloud-native environments produce data at a scale that many organizations find hard to handle: the report shows that 71% of respondents find their observability data growing at an alarming rate.

This growth raises concerns such as a lack of visibility into an increasingly disaggregated landscape, which in turn creates security concerns. These concerns have triggered a knee-jerk reaction: 44% say they are buying more observability tools, and 65% are turning to third-party tooling in the hope of regaining control over their observability. The real kicker is that 66% of these organizations use more than 10 (yes, you read that right) different observability tools.

Where does this take us in our quest to get back control of our observability data?

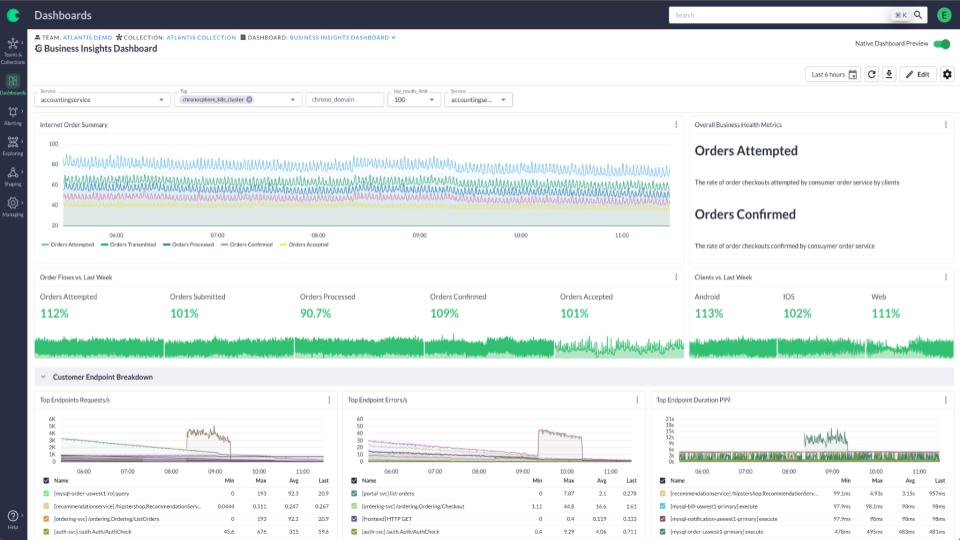

As a result, on-call engineers have to dig through disparate reporting tools and disconnected dashboards, ending up with more stress than is healthy for a job that should not be this hard. We want to approach our cloud-native observability by considering its phases, not by adding a new tool for each new observability data problem we encounter.

The best way to approach this problem and reduce the sneaky sprawl is to give our on-call engineers just the right amount of information, displayed in just the way they need it, so they have a real chance to solve the observability issues that arise in our cloud-native landscape. That means picking tools that don't just patch the problem in front of us, but that fit into our overall plan for addressing our data and infrastructure needs and that help our on-call engineers succeed.

The road to cloud-native success has many pitfalls, and understanding how to avoid fixating on the pillars, focusing instead on solutions for the phases of observability, will save a lot of wasted time and energy.

More Ideas?

This concludes my series on cloud-native observability pitfalls, but there are certainly more to discuss. If you feel I've skipped something important, please comment or reach out to me, as I'm happy to discuss it. There's a good chance I will ask you to co-author another article in this series if you bring an idea, because sharing pitfalls is how we help others avoid wreaking havoc on their cloud-native observability efforts.

Below are the links to the other articles in this Cloud-Native Observability Pitfalls series:

- Introduction

- Controlling Costs

- Focusing on the Pillars

- Underestimating Cardinality

- Ignoring Existing Landscape

- The Protocol Jungle

- Sneaky Sprawling Mess (this article)

Published at DZone with permission of Eric D. Schabell, DZone MVB. See the original article here.