O11y Guide, Cloud-Native Observability Pitfalls: Controlling Costs

In part 2 of this series, take a look at how to control the costs and the broken cost models encountered with cloud-native observability.

Are you looking at your organization's efforts to enter or expand into the cloud-native landscape and feeling a bit daunted by the vast expanse of information surrounding cloud-native observability? When you're moving so fast with Agile practices across your DevOps, SRE, and platform engineering teams, it's no wonder this can seem a bit confusing. Unfortunately, the choices being made have such a great impact on your business, your budgets, and the ultimate success of your cloud-native initiatives that hasty decisions upfront lead to big headaches very quickly down the road.

In the previous introduction, the first article in this series, we looked at the problem facing everyone with cloud-native observability. In this article, you'll find the first pitfall discussion, covering a common mistake organizations make. By sharing common pitfalls in this series, the hope is that we can learn from them.

After laying the groundwork in the previous article, it's time to tackle the first pitfall, where we need to look at how to control the costs and the broken cost models we encounter with cloud-native observability.

O11y Costs Broken

One of the biggest topics of the last year has been how broken the cost models are for cloud-native observability. I previously wrote about why cloud-native observability needs phases, detailing how the second generation of observability tooling suffers from this broken model.

"The second generation consisted of application performance monitoring (APM) with the infrastructure using virtual machines and later cloud platforms. These second-generation monitoring tools have been unable to keep up with the data volume and massive scale that cloud-native architectures..."

They store all of our cloud-native observability data and charge for this, and as our business finds success, scaling data volumes means expensive observability tooling, degraded visualization performance, and slow data queries (rules, alerts, dashboards, etc.).

Organizations would not care how much data is being stored or what it costs if they had better outcomes, happier customers, higher levels of availability, faster remediation of issues, and, above all, more revenue. Unfortunately, as pointed out on The New Stack,

"It's remarkable how common this situation is, where an organization is paying more for their observability data than they do for their production infrastructure."

The issue quickly revolves around the answer to the question, "Do we need to store all our observability data?" The quick and dirty answer is, of course not! There has been almost no incentive for any tooling vendors to provide insights into which of the data we are ingesting is actually being used and which is not.

It turns out that when you take a good look at the data coming in and are able to filter out, at ingestion, everything that is not touched by any user (not queried ad hoc, not part of any dashboard, not part of any rule, and not used for any alert), it makes quite a difference in data costs.

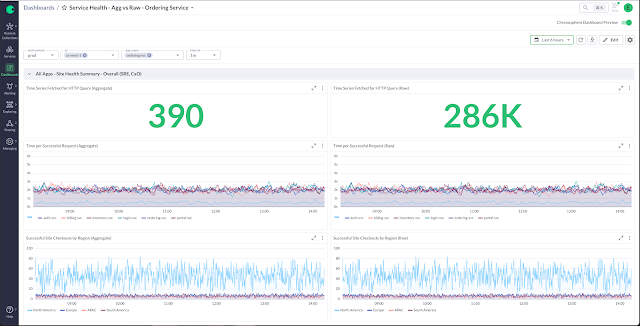

In the example above, we designed a dashboard for a service status overview while initially ingesting over 280K data points. Once we could inspect the data and confirm that a lot of these data points were not used in the organization, the same ingestion flow was reduced to just 390 data points being stored. The actual cost reduction depends on your vendor's pricing, but with the effect shown here, it's obviously going to be a dramatic cost control tool.
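To put that reduction in perspective, here is a small arithmetic sketch. It only compares data point counts and says nothing about the bill itself, since pricing differs per vendor. The figure of 280,000 is an assumption standing in for "over 280K," and the function name `reduction_percent` is made up for this illustration.

```python
def reduction_percent(before: int, after: int) -> float:
    """Percentage of data points no longer stored after filtering."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Assumption: "over 280K" is rounded down to 280,000 here,
# so the real reduction would be slightly higher.
ingested = 280_000
stored = 390

print(f"{reduction_percent(ingested, stored):.2f}% fewer data points stored")
# prints "99.86% fewer data points stored"
```

In other words, roughly 1 in every 700 ingested data points ends up in storage in this example.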

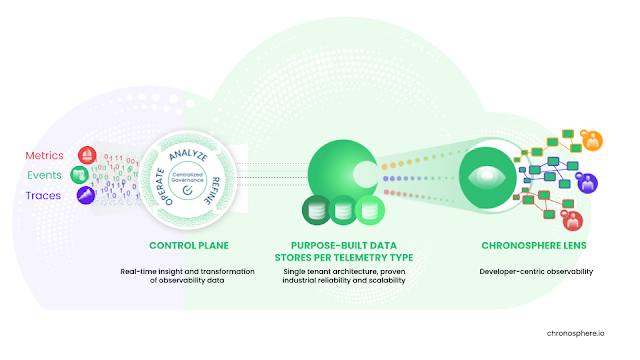

It's important to understand that we need to ingest what we can collect, but we really only want to store what we are actually going to use for queries, rules, alerts, and visualizations. The architectural view accompanying this section shows how we are assisted by having control plane functionality and tooling between our data ingestion and data storage. Any data we are not storing can later be passed through to storage should a future project require it.
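As a rough illustration of the idea, the following sketch models a control plane step that sits between ingestion and storage. It is not any vendor's API: the `DataPoint` and `ControlPlane` classes, their method names, and the metric names are all hypothetical, and a real system would derive the set of used metrics from its dashboards, rules, alerts, and query logs.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataPoint:
    """Hypothetical ingested sample: a metric name and its value."""
    metric: str
    value: float


@dataclass
class ControlPlane:
    """Hypothetical filter between data ingestion and data storage."""
    # Metric names referenced by dashboards, rules, alerts, or ad-hoc queries.
    used_metrics: set[str] = field(default_factory=set)
    stored: list[DataPoint] = field(default_factory=list)
    dropped_count: int = 0

    def ingest(self, point: DataPoint) -> bool:
        """Store the point only if something in the organization uses it."""
        if point.metric in self.used_metrics:
            self.stored.append(point)
            return True
        self.dropped_count += 1
        return False

    def allow(self, metric: str) -> None:
        """Start passing a metric through to storage, e.g. for a new project."""
        self.used_metrics.add(metric)


plane = ControlPlane(used_metrics={"http_requests_total"})
incoming = [
    DataPoint("http_requests_total", 120.0),
    DataPoint("jvm_gc_pause_seconds", 0.02),  # not used anywhere yet
    DataPoint("http_requests_total", 125.0),
]
for point in incoming:
    plane.ingest(point)
print(len(plane.stored), plane.dropped_count)  # prints "2 1"

# A future project needs the GC metric, so it is passed through from now on.
plane.allow("jvm_gc_pause_seconds")
plane.ingest(DataPoint("jvm_gc_pause_seconds", 0.03))
print(len(plane.stored), plane.dropped_count)  # prints "3 1"
```

Everything is still ingested; the decision is only about what gets stored. Because that decision lives in one place, changing it later is a one-line change, not a re-instrumentation effort.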

Finally, without standards and ownership of the cost-controlling processes in an organization, there is little hope of controlling costs. To this end, the FinOps role has become critical to many organizations, and the entire field started a community in 2019 known as the FinOps Foundation. It's very important that cloud-native observability vendors join these efforts moving forward, and this should be a point of interest when evaluating new tooling. Today, 90% of the Fortune 50 have FinOps teams.

The road to cloud-native success has many pitfalls, and understanding how to avoid the pillars and focusing instead on solutions for the phases of observability will save much wasted time and energy.

Coming Up Next

Another pitfall is when organizations focus on The Pillars in their observability solutions. In the next article in this series, I'll share why this is a pitfall and how we can avoid it wreaking havoc on our cloud-native observability efforts.

Published at DZone with permission of Eric D. Schabell, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.
