Neural Networks: From Perceptrons to Deep Learning

Explore in depth the technical journey of neural networks, from the basic perceptron to the advanced deep learning architectures driving AI innovations today.

From their origins as models inspired by the human brain to today's sophisticated systems, neural networks have come a long way. This post traces their technical journey, from the basic perceptron to the advanced deep learning architectures driving AI innovations today.

The Human System

![Human brain neuron]()

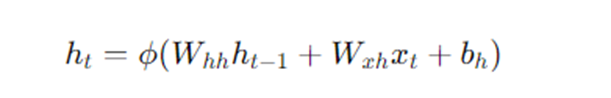

The human brain contains an estimated 86 billion neurons, interconnected via synapses. Each neuron receives signals through its dendrites, processes them in the soma, and sends its output down the axon to post-synaptic neurons. This network is how the brain processes vast amounts of information and performs exceedingly complex tasks.

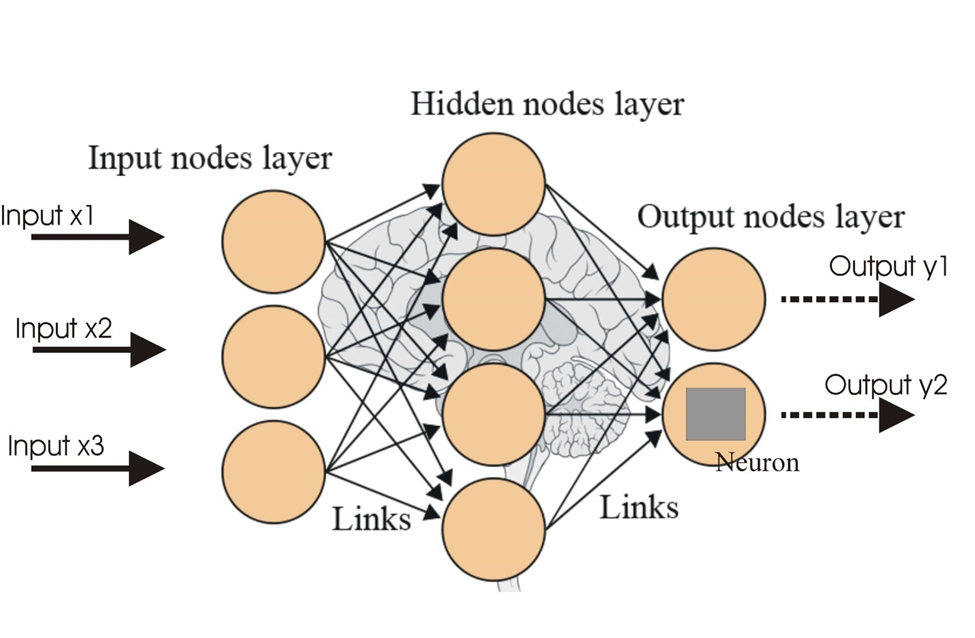

Artificial neural networks loosely replicate this structure. Interconnected artificial neurons, or nodes, process and transmit information, and they form the basic components that let a machine learning model learn from data and make predictions or decisions.

The Rise of Neural Networks in Deep Learning

Deep learning is a subset of machine learning that uses neural networks with multiple layers (hence, deep neural networks) to model complex patterns in data. The evolution from relatively simple neural models to deep architectures has been driven by increases in computational power, data availability, and algorithmic innovation.

The Perceptron: Foundation to Deep Learning

The simplest neural network is the perceptron, proposed by Frank Rosenblatt in 1957. It serves as the basic building block for more complex architectures. A perceptron is a linear classifier that maps an input x to an output y in two steps:

1. Weighted Sum

Compute the weighted sum of the inputs.

$$z = w^{T}x + b$$

Where w is the weight vector, x is the input vector, and b is the bias.

2. Activation Function

Apply an activation function to the weighted sum to produce the output.

$$y = \phi(z)$$

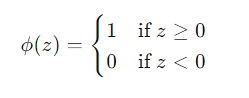

The activation function ϕ is typically a step function for binary classification: it outputs one class when z reaches a threshold and the other class otherwise.
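The two steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the threshold at zero, the 0/1 output encoding, and the hand-picked weights `w_and` and `b_and` are assumptions chosen so that the perceptron computes a logical AND.

```python
def step(z):
    """Step activation: 1 if z is non-negative, else 0 (threshold at 0 is an assumption)."""
    return 1 if z >= 0 else 0


def perceptron(x, w, b):
    """Return y = step(w . x + b) for an input list x, weight list w, and bias b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # step 1: weighted sum
    return step(z)                                 # step 2: activation


# Hand-picked (illustrative) weights that make the perceptron compute logical AND.
w_and, b_and = [1.0, 1.0], -1.5
and_outputs = [perceptron([a, c], w_and, b_and) for a in (0, 1) for c in (0, 1)]
print(and_outputs)  # [0, 0, 0, 1]
```

Only the input (1, 1) pushes the weighted sum above zero, so the decision boundary is a single straight line in the input plane.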

Types of Perceptrons

1. Single-Layer Perceptron

A single-layer perceptron consists of a single layer of output nodes directly connected to the input nodes. It can only solve linearly separable problems.

2. Multi-Layer Perceptron (MLP)

A multi-layer perceptron extends the single-layer perceptron by adding one or more hidden layers between the input and output layers. Each layer contains multiple neurons, and the activation functions can be nonlinear (e.g., sigmoid, ReLU).
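To make the difference between the two types concrete, consider XOR, a classic problem that is not linearly separable, so no single-layer perceptron can solve it. The sketch below shows that one hidden layer is enough. The weights are hand-picked for illustration rather than learned, and the names `neuron` and `xor_mlp` are hypothetical helpers, not part of any library.

```python
def step(z):
    """Step activation with an assumed threshold at 0."""
    return 1 if z >= 0 else 0


def neuron(x, w, b):
    """A single perceptron unit: step(w . x + b)."""
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


def xor_mlp(x1, x2):
    """Two-layer network with hand-picked weights that computes XOR."""
    # Hidden layer: one unit behaves like OR, the other like NAND.
    h_or = neuron([x1, x2], [1.0, 1.0], -0.5)
    h_nand = neuron([x1, x2], [-1.0, -1.0], 1.5)
    # Output layer: AND of the two hidden activations yields XOR.
    return neuron([h_or, h_nand], [1.0, 1.0], -1.5)


xor_outputs = [xor_mlp(a, c) for a in (0, 1) for c in (0, 1)]
print(xor_outputs)  # [0, 1, 1, 0]
```

Each hidden unit draws one line in the input plane, and the output unit combines the two half-planes, which is something a single linear boundary cannot do.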

Multi-Layer Perceptron and Backpropagation

The introduction of hidden layers in MLPs enables the modeling of complex, non-linear relationships. Training an MLP involves adjusting the weights and biases to minimize the error between the predicted output and the actual target. This is achieved through the backpropagation algorithm:

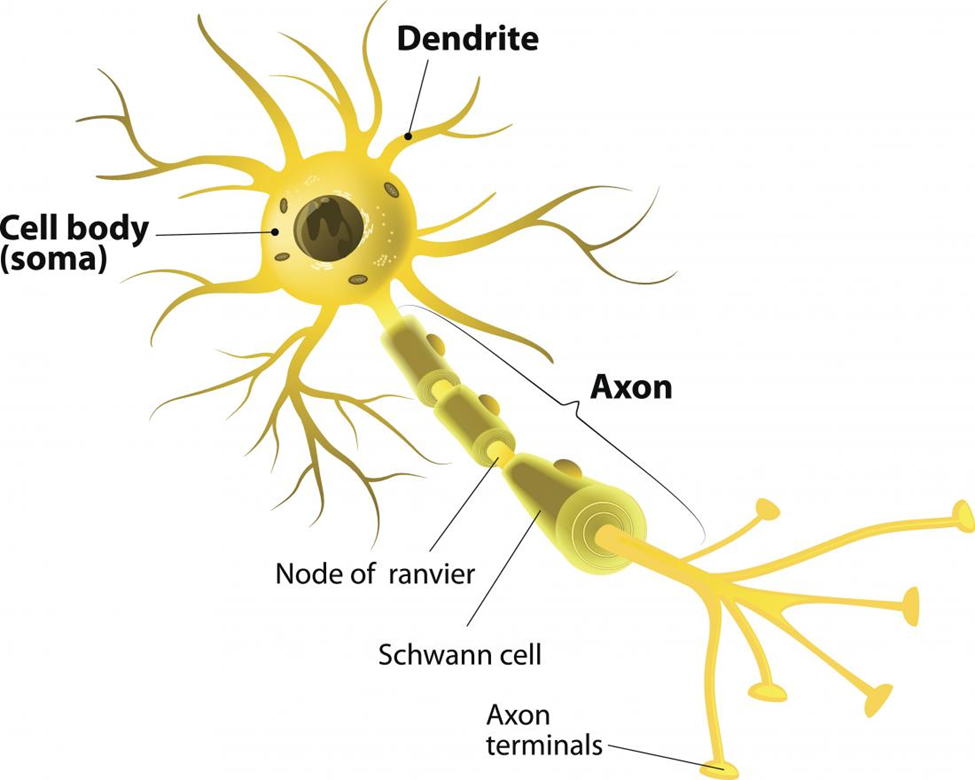

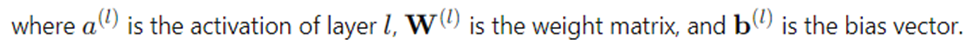

- Forward pass: Compute the output of the network by propagating the input through the layers.

![Forward pass formula]()

![Explanation of a1]()

- Loss calculation: Compute the loss function L (e.g., mean squared error, cross-entropy) to measure the discrepancy between the predicted and actual outputs.

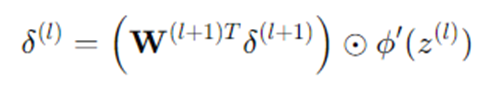

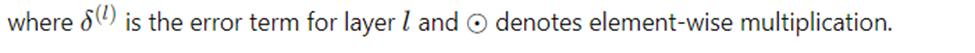

- Backward pass: Compute the gradients of the loss with respect to the weights and biases using the chain rule.

![Backward pass formula]()

![Error term for layer l explanation]()

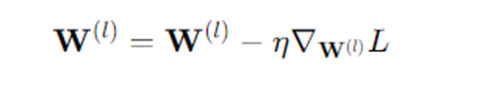

- Weight update: Adjust the weights and biases using gradient descent.

![Weight update formula]()

Where η is the learning rate.
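The four stages above (forward pass, loss calculation, backward pass, weight update) can be put together in a short, dependency-free sketch. Everything specific here is an assumption made for illustration: sigmoid activations, a squared-error loss, full-batch gradient descent, the XOR dataset, three hidden units, and the hypothetical function name `train_xor`. It is meant to show where each stage sits in a training loop, not to be an efficient implementation.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(epochs=2000, lr=0.5, hidden=3, seed=0):
    """Train a 2-hidden-1 sigmoid MLP on XOR; return the mean loss per epoch."""
    rng = random.Random(seed)
    # W1[j][i]: weight from input i to hidden unit j; W2[j]: hidden unit j to output.
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    count = len(data)
    losses = []
    for _ in range(epochs):
        # Gradient accumulators for one full-batch step.
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        total = 0.0
        for x, t in data:
            # Forward pass: propagate the input through both layers.
            a1 = [sigmoid(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j])
                  for j in range(hidden)]
            y = sigmoid(sum(w * a for w, a in zip(W2, a1)) + b2)
            # Loss calculation: squared error for this example.
            total += 0.5 * (y - t) ** 2
            # Backward pass: error terms obtained with the chain rule.
            delta2 = (y - t) * y * (1 - y)
            delta1 = [delta2 * W2[j] * a1[j] * (1 - a1[j]) for j in range(hidden)]
            for j in range(hidden):
                gW2[j] += delta2 * a1[j]
                gb1[j] += delta1[j]
                for i in range(2):
                    gW1[j][i] += delta1[j] * x[i]
            gb2 += delta2
        # Weight update: gradient descent on the averaged gradients.
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / count
            b1[j] -= lr * gb1[j] / count
            for i in range(2):
                W1[j][i] -= lr * gW1[j][i] / count
        b2 -= lr * gb2 / count
        losses.append(total / count)
    return losses


losses = train_xor()
print(f"first epoch loss: {losses[0]:.4f}, last epoch loss: {losses[-1]:.4f}")
```

How far the loss falls depends on the random initialization and the learning rate, so the printed values are best read as a trend rather than a benchmark.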

Deep Learning Architectures

Deep learning has given rise to specialized architectures, each tailored for specific tasks:

1. Convolutional Neural Networks (CNNs)

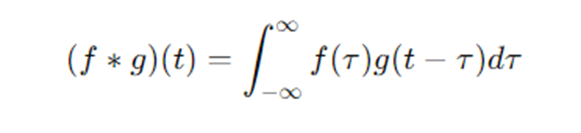

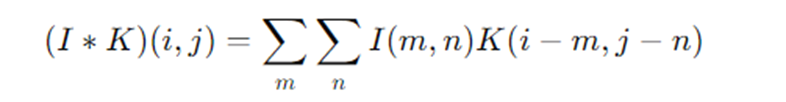

Designed for image processing, CNNs use convolutional layers to extract spatial features from input images. The mathematical operation of convolution is defined, in discrete form for images, as:

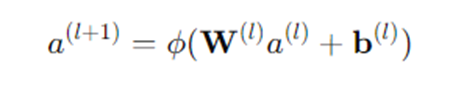
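As a rough illustration of the discrete operation, the sketch below slides a small kernel over an image stored as nested lists. It assumes no padding and a stride of 1, and the function name `conv2d` and the sample values are illustrative. It flips the kernel, which is what the mathematical definition of convolution requires; many deep learning libraries skip the flip and compute cross-correlation instead, which makes no practical difference when the kernel is learned.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution of nested lists: flipped kernel, no padding, stride 1."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            acc = 0
            for m in range(kh):
                for n in range(kw):
                    # Reversing the kernel indices is what separates
                    # convolution from cross-correlation.
                    acc += image[i + m][j + n] * kernel[kh - 1 - m][kw - 1 - n]
            row.append(acc)
        out.append(row)
    return out


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[4, 4], [4, 4]]
```

The same small kernel is reused at every position, which is why a convolutional layer needs far fewer weights than a fully connected layer over the same image.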

2. Recurrent Neural Networks (RNNs)

Suitable for sequential data, RNNs maintain a hidden state that captures information from previous time steps. At each step, the hidden state h_t is updated from the current input and the previous hidden state h_{t-1}.
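One common form of this update, assumed here because the formula is not reproduced in the text, is h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The sketch below applies it to a short sequence using plain lists. The weight names, the sizes (one input feature, two hidden units), and the tanh activation are illustrative choices.

```python
import math


def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One update h_t = tanh(W_xh x_t + W_hh h_prev + b_h) on plain lists."""
    h_t = []
    for j in range(len(h_prev)):
        z = b_h[j]
        z += sum(W_xh[j][i] * x_t[i] for i in range(len(x_t)))        # input term
        z += sum(W_hh[j][k] * h_prev[k] for k in range(len(h_prev)))  # recurrent term
        h_t.append(math.tanh(z))
    return h_t


# Illustrative sizes and weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.5]]
W_hh = [[0.1, 0.0], [0.0, 0.1]]
b_h = [0.0, 0.0]

h = [0.0, 0.0]                      # initial hidden state
for x_t in ([1.0], [0.0], [-1.0]):  # a short input sequence
    h = rnn_step(x_t, h, W_xh, W_hh, b_h)
    print(h)
```

Because the same weights are applied at every time step, the second input of zero still produces a nonzero hidden state: the recurrent term carries information forward from the first step.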

Applications and Impact

Deep learning models are making a difference in many areas of work:

- Computer vision: Applications include image classification, object detection, and facial recognition.

- Natural Language Processing (NLP): Driving a sea change in tasks like language translation, sentiment analysis, and chatbots.

- Healthcare: Improving disease diagnosis, drug discovery, and personalized medicine.

- Finance: Improving fraud detection, algorithmic trading, and risk assessment.

Conclusion

The evolution from perceptrons to deep learning unlocked the latent ability of neural networks to tackle problems once considered out of reach, and it set the pace for innovation across many sectors of the economy. As research and technology continue to advance, neural networks are likely to gain even greater capabilities and find wider application.

Opinions expressed by DZone contributors are their own.
