Must-Have Tool for Anonymous Virtual Livestreams

Virtual characters allow live streamers to interact anonymously within an app. Learn how live streamers can incorporate the human body occlusion capability.

Influencers have become increasingly important as more consumers shop online, whether on Amazon, Taobao, or one of the many other prominent e-commerce platforms. Brands and merchants spend heavily on finding influencers to promote their products through live streams and consumer interactions, and many purchases are made on the recommendation of a trusted influencer.

However, employing a public-facing influencer can be costly and risky. Many brands and merchants have opted instead to host live streams with their own virtual characters. This gives them more freedom to showcase their products and widens the pool of potential on-camera talent. For consumers, virtual characters can add fun and whimsy to the shopping experience.

E-commerce platforms have begun to accommodate the preference for anonymous live streaming by offering a range of capabilities: automatic identification, real-time motion tracking based on skeleton points, recognition of facial expressions and gestures, copying of traits to virtual characters, a selection of virtual character models for users to choose from, and natural real-world interactions.

Building these capabilities comes with its share of challenges. For example, after finally building a model that translates the streamer's every gesture, expression, and movement into real-time parameters and applies them to the virtual character, you may find that real bodies cannot block the virtual character during the live stream, which gives it a fake, ghost-like look. I ran into this problem when developing my own e-commerce app, because I had not handled occlusion between the virtual character and the real bodies in front of and behind it. Fortunately, I found an SDK that solved the problem: HMS Core AR Engine.

This toolkit provides a range of capabilities that make it easy to incorporate AR-powered features into apps. From hit testing and motion tracking to environment mesh and image tracking, it's got just about everything you need. The human body occlusion capability was exactly what I needed at the time.

Now I'll show you how I integrated this toolkit into my app and how helpful it's been.

First, I registered for an account on the HUAWEI Developers website, downloaded the AR Engine SDK, and followed the step-by-step development guide to integrate it. The integration was simple and did not take long. Once it was done, I ran the demo on a test phone and was amazed by how well it worked. During live streams, my app recognized and tracked the areas of the image where I was located, with an accuracy of up to 90%, and provided depth information for those areas. Better yet, it identified and tracked the profiles of up to two people and output the occlusion information and skeleton points corresponding to the body profiles in real time.

With this capability, I was able to implement a lot of engaging features, such as changing backgrounds, hiding virtual characters behind real people, and letting the audience interact with the virtual character through special effects. These features have made my app more immersive and interactive, and therefore more attractive to potential shoppers.
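To make the occlusion idea concrete, here is a minimal sketch in plain Java of how a per-pixel body mask and depth values could decide whether a virtual character pixel is hidden behind a real person. It does not call AR Engine. The class name `OcclusionSketch`, the 0.5 confidence threshold, and the flat array layout are my own assumptions for illustration, not part of the SDK.

```java
import java.util.Arrays;

public class OcclusionSketch {
    // Hypothetical threshold: a pixel counts as "body" at or above this confidence.
    public static final float BODY_CONFIDENCE_THRESHOLD = 0.5f;

    /**
     * Decides, per pixel, whether the virtual character should be drawn.
     *
     * @param maskConfidence confidence (0..1) that each pixel belongs to a person
     * @param bodyDepth      distance from the camera to the real scene at each pixel
     * @param characterDepth distance from the camera to the virtual character
     * @return true where the character is visible, false where a body occludes it
     */
    public static boolean[] visibleCharacterPixels(
            float[] maskConfidence, float[] bodyDepth, float characterDepth) {
        if (maskConfidence.length != bodyDepth.length) {
            throw new IllegalArgumentException("mask and depth must have the same length");
        }
        boolean[] visible = new boolean[maskConfidence.length];
        for (int i = 0; i < visible.length; i++) {
            boolean isBody = maskConfidence[i] >= BODY_CONFIDENCE_THRESHOLD;
            boolean bodyInFront = bodyDepth[i] < characterDepth;
            // The character is hidden only where a real body is closer to the camera.
            visible[i] = !(isBody && bodyInFront);
        }
        return visible;
    }

    public static void main(String[] args) {
        float[] confidence = {0.9f, 0.9f, 0.1f};
        float[] depth = {1.0f, 3.0f, 1.0f};
        // Character stands 2.0 units from the camera.
        boolean[] visible = visibleCharacterPixels(confidence, depth, 2.0f);
        System.out.println(Arrays.toString(visible)); // prints [false, true, true]
    }
}
```

In the example, the first pixel belongs to a person standing in front of the character, so the character is hidden there. The second pixel belongs to a person behind the character, and the third is not a person at all, so the character is drawn in both.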

How To Develop

Preparations

Registering as a Developer

Before getting started, you will need to register as a Huawei developer and complete identity verification on HUAWEI Developers. The HUAWEI Developers website describes the detailed registration and identity verification procedure.

Creating an App

Create a project and create an app under the project. Pay attention to the following parameter settings:

- Platform: Select Android.

- Device: Select Mobile phone.

- App category: Select App or Game.

Integrating the AR Engine SDK

Before development, integrate the AR Engine SDK via the Maven repository into your development environment.

Configuring the Maven Repository Address for the AR Engine SDK

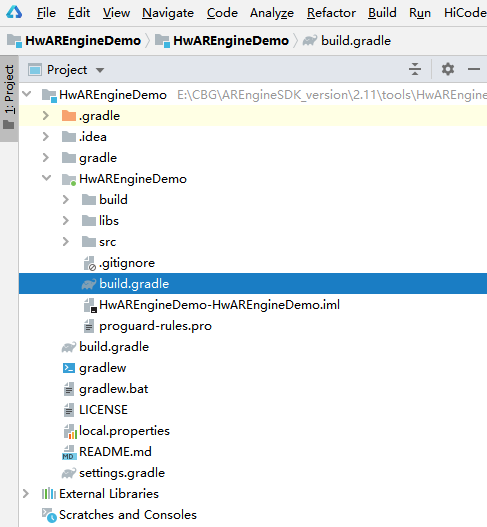

The procedure for configuring the Maven repository address in Android Studio differs for Gradle plugin versions earlier than 7.0, version 7.0, and versions 7.1 or later. Configure it according to the Gradle plugin version you are using.

Adding Build Dependencies

Open the build.gradle file in the app directory of your project.

Add a build dependency in the dependencies block.

    dependencies {
        implementation 'com.huawei.hms:arenginesdk:{version}'
    }

Open the modified build.gradle file again. You will find a Sync Now link in the upper right corner of the page. Click Sync Now and wait until synchronization is complete.

Developing Your App

Checking the Availability

Check whether AR Engine has been installed on the current device. If so, the app can run properly. If not, the app prompts the user to install AR Engine, for example, by redirecting the user to AppGallery. The code is as follows:

    boolean isInstallArEngineApk = AREnginesApk.isAREngineApkReady(this);
    if (!isInstallArEngineApk) {
        // ConnectAppMarketActivity.class is the activity for redirecting users to AppGallery.
        startActivity(new Intent(this, com.huawei.arengine.demos.common.ConnectAppMarketActivity.class));
        isRemindInstall = true;
    }

Create a BodyActivity class that displays the body skeleton and outputs the human body features recognized by AR Engine.

    public class BodyActivity extends BaseActivity {
        private BodyRendererManager mBodyRendererManager;

        protected void onCreate() {
            // Initialize surfaceView.
            mSurfaceView = findViewById();
            // Preserve the EGL context on pause so that OpenGL ES keeps running.
            mSurfaceView.setPreserveEGLContextOnPause(true);
            // Set the OpenGL ES version.
            mSurfaceView.setEGLContextClientVersion(2);
            // Set the EGL configuration chooser, including the number of color buffer bits and depth bits.
            mSurfaceView.setEGLConfigChooser(……);
            mBodyRendererManager = new BodyRendererManager(this);
            mSurfaceView.setRenderer(mBodyRendererManager);
            mSurfaceView.setRenderMode(GLSurfaceView.RENDERMODE_CONTINUOUSLY);
        }

        protected void onResume() {
            // Initialize ARSession to manage the entire running status of AR Engine.
            if (mArSession == null) {
                mArSession = new ARSession(this.getApplicationContext());
                mArConfigBase = new ARBodyTrackingConfig(mArSession);
                // Enable both depth and body mask output.
                mArConfigBase.setEnableItem(ARConfigBase.ENABLE_DEPTH | ARConfigBase.ENABLE_MASK);
                mArConfigBase.setFocusMode(ARConfigBase.FocusMode.AUTO_FOCUS);
                mArSession.configure(mArConfigBase);
            }
            // Draw the body mask only if the mask is enabled in the configuration and in the app.
            mBodyRendererManager.setBodyMask(((mArConfigBase.getEnableItem() & ARConfigBase.ENABLE_MASK) != 0) && mIsBodyMaskEnable);
            sessionResume(mBodyRendererManager);
        }
    }
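The `setEnableItem` and `getEnableItem` calls above rely on bit flags: several options are combined into one integer with `|`, and a single option is tested with `&`. The sketch below shows that pattern in isolation. The numeric values of `ENABLE_DEPTH` and `ENABLE_MASK` here are hypothetical placeholders I picked for the example; the real constants are defined by `ARConfigBase`, and the class name `EnableItemFlags` is also my own.

```java
public class EnableItemFlags {
    // Hypothetical values for illustration only; the SDK defines the real ones.
    public static final int ENABLE_DEPTH = 1;      // binary 01
    public static final int ENABLE_MASK = 1 << 1;  // binary 10

    /** Returns true if the given flag bit is set in enableItem. */
    public static boolean isEnabled(int enableItem, int flag) {
        return (enableItem & flag) != 0;
    }

    /** Mirrors the setBodyMask argument: the mask must be configured and switched on in the app. */
    public static boolean shouldDrawBodyMask(int enableItem, boolean appToggle) {
        return isEnabled(enableItem, ENABLE_MASK) && appToggle;
    }

    public static void main(String[] args) {
        int both = ENABLE_DEPTH | ENABLE_MASK;
        System.out.println(shouldDrawBodyMask(both, true));         // prints true
        System.out.println(shouldDrawBodyMask(ENABLE_DEPTH, true)); // prints false
        System.out.println(shouldDrawBodyMask(both, false));        // prints false
    }
}
```

Because each option occupies its own bit, enabling depth never affects the mask check, and the app-level toggle can still switch mask rendering off without reconfiguring the session.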

Create a BodyRendererManager object to render the personal data obtained by AR Engine.

    public class BodyRendererManager extends BaseRendererManager {
        public void drawFrame() {
            try {
                // Obtain the set of all trackable objects of the specified type.
                Collection<ARBody> bodies = mSession.getAllTrackables(ARBody.class);
                // Keep the last body that is currently being tracked.
                boolean hasBodyTracking = false;
                for (ARBody body : bodies) {
                    if (body.getTrackingState() != ARTrackable.TrackingState.TRACKING) {
                        continue;
                    }
                    mBody = body;
                    hasBodyTracking = true;
                }
                // Update the body recognition information displayed on the screen.
                StringBuilder sb = new StringBuilder();
                updateMessageData(sb, mBody);
                Size textureSize = mSession.getCameraConfig().getTextureDimensions();
                // Draw the camera preview with the body mask when mask data is available.
                if (mIsWithMaskData && hasBodyTracking && mBackgroundDisplay instanceof BodyMaskDisplay) {
                    ((BodyMaskDisplay) mBackgroundDisplay).onDrawFrame(mArFrame, mBody.getMaskConfidence(),
                        textureSize.getWidth(), textureSize.getHeight());
                }
                // Display the updated body information on the screen.
                mTextDisplay.onDrawFrame(sb.toString());
                for (BodyRelatedDisplay bodyRelatedDisplay : mBodyRelatedDisplays) {
                    bodyRelatedDisplay.onDrawFrame(bodies, mProjectionMatrix);
                }
            } catch (ArDemoRuntimeException e) {
                LogUtil.error(TAG, "Exception on the ArDemoRuntimeException!");
            } catch (ARFatalException | IllegalArgumentException | ARDeadlineExceededException |
                ARUnavailableServiceApkTooOldException t) {
                Log(…);
            }
        }

        // Update body-related data for display.
        private void updateMessageData(StringBuilder sb, ARBody body) {
            if (body == null) {
                return;
            }
            float fpsResult = doFpsCalculate();
            sb.append("FPS=").append(fpsResult).append(System.lineSeparator());
            int bodyAction = body.getBodyAction();
            sb.append("bodyAction=").append(bodyAction).append(System.lineSeparator());
        }
    }
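The listing calls `doFpsCalculate()` without showing it. As a rough idea of what such a helper might do, here is a self-contained sketch of a frame rate counter that averages over a fixed time window. The class name `FpsCounter`, the `onFrame` method, and the windowing approach are all my assumptions; the demo's actual implementation may differ.

```java
public class FpsCounter {
    private final long windowMillis;
    private boolean started = false;
    private long windowStart;
    private int frames = 0;
    private float lastFps = 0f;

    /** @param windowMillis length of the averaging window in milliseconds */
    public FpsCounter(long windowMillis) {
        if (windowMillis <= 0) {
            throw new IllegalArgumentException("windowMillis must be positive");
        }
        this.windowMillis = windowMillis;
    }

    /**
     * Call once per rendered frame with the current time in milliseconds.
     * Returns the most recently computed frames per second (0 until the first window ends).
     */
    public float onFrame(long nowMillis) {
        if (!started) {
            // The first frame only starts the clock; it does not count as an interval.
            started = true;
            windowStart = nowMillis;
            return lastFps;
        }
        frames++;
        long elapsed = nowMillis - windowStart;
        if (elapsed >= windowMillis) {
            lastFps = frames * 1000f / elapsed;
            frames = 0;
            windowStart = nowMillis;
        }
        return lastFps;
    }

    public static void main(String[] args) {
        FpsCounter counter = new FpsCounter(1000);
        float fps = 0f;
        // Simulate one frame every 100 ms for one second.
        for (long t = 0; t <= 1000; t += 100) {
            fps = counter.onFrame(t);
        }
        System.out.println(fps); // prints 10.0
    }
}
```

Passing the timestamp in as a parameter keeps the counter easy to test. In a renderer, you would pass the current clock value on every `drawFrame` call.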

Customize the camera preview class, which is used to implement human body drawing based on certain confidence.

    public class BodyMaskDisplay implements BaseBackGroundDisplay {}

Obtain skeleton data and pass it to OpenGL ES, which renders the data and displays it on the screen.

    public class BodySkeletonDisplay implements BodyRelatedDisplay {}

Obtain skeleton point connection data and pass it to OpenGL ES, which renders the data and displays it on the screen.

    public class BodySkeletonLineDisplay implements BodyRelatedDisplay {}

Conclusion

True-to-life AR live streaming is now an essential feature in e-commerce apps, but developing this capability from scratch can be costly and time-consuming. The AR Engine SDK is the best and most convenient SDK I've encountered, and it's done wonders for my app by recognizing individuals within images with an accuracy of up to 90% and providing the detailed information required to support immersive, real-world interactions. Try it out in your own app to add powerful and interactive features that will have your users clamoring to shop more!

Published at DZone with permission of Jackson Jiang. See the original article here.
