Monitoring Audit Logs With auditd and Auditbeat

Learn how to configure rules to generate audit logs with auditd and Auditbeat for application performance monitoring.


The Linux Audit framework is a kernel feature (paired with userspace tools) that can log system calls, such as opening a file, killing a process, or creating a network connection. These audit logs can be used to monitor systems for suspicious activity.

In this post, we will configure rules to generate audit logs. First, we will use auditd to write logs to flat files. Then we'll use Auditbeat to collect the same events and ship them through the Elasticsearch API, either to a local cluster or to Sematext Logs (aka Logsene, our logging SaaS). Along the way, we will search through the generated logs, create alerts, and generate reports.

Define Your Objectives

Audit logs help track suspicious activity, but what does "suspicious" actually mean? As with all logging, the answer depends on both what we need and how much it costs. For example, if you're hosting websites, you may be interested in changes to document files. You may also be interested in access outside the document root or in privilege escalation. The list can always grow, but so will the cost: intercepting more system calls adds CPU overhead, and storing more events for the same retention period costs more.

To strike a good balance, first figure out what is needed, then think about how to implement it cost-effectively. For this post, let's say we want to track user management and changes to the system time. To implement this, we'll track access to /etc/passwd and the system calls that change the time.

Set Up Audit Rules

The go-to application for tapping into the Linux Audit framework is its userspace component: the Linux Audit daemon (auditd). auditd can subscribe to events from the kernel based on rules.

To create a rule for watching /etc/passwd, we'll run this command as root:

auditctl -w /etc/passwd -p wra -k passwd

Let's break it down:

- auditctl defines and lists audit rules. It has a really nice man page that you can use as a reference, but we'll call out the important bits here.

- -w /etc/passwd starts a watcher on a file. When the file is accessed, the watcher will generate events.

- -p wra specifies the access types to watch for: w (write), r (read), and a (attribute change).

- -k passwd is an optional key. Later on, we could search for this (arbitrary) string to identify events tagged with this key.
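A watch is really a syscall rule on that path: the auditctl man page gives -w /etc/shadow -p wa and -a always,exit -F path=/etc/shadow -F perm=wa as two ways to express the same watch. As a minimal sketch, the hypothetical watch_to_rule helper below (not part of the audit package) prints that long form for a given watch, using this post's exit,always spelling (auditctl accepts the list and action in either order). It assumes bash, and that the path exists locally so it can choose -F dir= for directories and -F path= for files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the syscall-rule form of
# "auditctl -w PATH -p PERMS -k KEY".
watch_to_rule() {
  local path=$1 perms=$2 key=$3 field
  # Only r (read), w (write), x (execute), and a (attribute change) are valid.
  if [[ ! $perms =~ ^[rwxa]+$ ]]; then
    echo "invalid permissions: $perms" >&2
    return 1
  fi
  # Directories are watched recursively with -F dir=, files with -F path=.
  if [[ -d $path ]]; then
    field=dir
  else
    field=path
  fi
  # "--" stops printf from reading the leading "-a" as an option.
  printf -- '-a exit,always -F %s=%s -F perm=%s -k %s\n' "$field" "$path" "$perms" "$key"
}
```

For example, watch_to_rule /etc/passwd wra passwd prints -a exit,always -F path=/etc/passwd -F perm=wra -k passwd, which has the same shape as the clock_settime rule later in this post.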

Now that we've defined a rule, we can list the current rules to double-check that it was stored:

$ auditctl -l

LIST_RULES: exit,always watch=/etc/passwd perm=rwa key=passwd

Sure enough, our rule is there. At this point, if we do

cat /etc/passwd

we will see events in /var/log/audit/audit.log (you can change the default location in /etc/audit/auditd.conf):

type=SYSCALL msg=audit(1522927552.749:917): arch=c000003e syscall=2 success=yes exit=3 a0=7ffe2ce05793 a1=0 a2=1fffffffffff0000 a3=7ffe2ce043a0 items=1 ppid=2906 pid=4668 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts4 ses=1 comm="cat" exe="/bin/cat" key="passwd"

type=CWD msg=audit(1522927552.749:917): cwd="/root"

type=PATH msg=audit(1522927552.749:917): item=0 name="/etc/passwd" inode=3147443 dev=08:01 mode=0100644 ouid=0 ogid=0 rdev=00:00 nametype=NORMAL

type=UNKNOWN[1327] msg=audit(1522927552.749:917): proctitle=636174002F6574632F706173737764

auditctl lets you define rules to watch file changes and other system calls. The same rule syntax is used in Auditbeat's config file.
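Notice that the four records above belong to one event: they share the msg=audit(1522927552.749:917) identifier (timestamp and serial number), and only the SYSCALL record carries key="passwd". The ausearch tool covered in the next section handles this grouping for you. Purely to illustrate the record layout, here is a rough bash/awk sketch (records_for_key is a hypothetical name, and it is not how ausearch is implemented) that prints every record of the events tagged with a given key.

```shell
#!/usr/bin/env bash
# Hypothetical helper, a rough stand-in for "ausearch -k KEY":
# print every record of the audit events whose records carry key="KEY".
# Reads raw audit.log lines on stdin.
records_for_key() {
  local key=$1
  awk -v key="$key" '
    # All records of one event share msg=audit(TIMESTAMP:SERIAL).
    match($0, /msg=audit\([0-9.]+:[0-9]+\)/) {
      id = substr($0, RSTART + 10, RLENGTH - 11)   # keep TIMESTAMP:SERIAL
      if (!(id in lines)) order[++n] = id          # remember first-seen order
      lines[id] = lines[id] $0 "\n"
      # Usually only the SYSCALL (or CONFIG_CHANGE) record carries the key.
      if (index($0, "key=\"" key "\"")) matched[id] = 1
    }
    END {
      for (i = 1; i <= n; i++)
        if (order[i] in matched) printf "%s", lines[order[i]]
    }
  '
}
```

Run as root, records_for_key passwd < /var/log/audit/audit.log should print the SYSCALL, CWD, PATH, and proctitle records of each matching event together.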

Let's set up another rule for tracking changes to the system time:

auditctl -a exit,always -F arch=b64 -S clock_settime -k changetime

This command looks a bit different because it tracks a specific system call (-S clock_settime). Let's break it down as well:

- -k changetime is the same optional tag for identifying the rule.

- -S clock_settime specifies the system call. You can find the full list, along with the kernel version where each call was introduced, in the syscalls(2) man page; each system call also has its own man page. You can specify multiple -S parameters to watch several system calls in one rule, which lowers the audit overhead compared to one rule per call.

- -a exit,always specifies the list to add the rule to and the action. The exit,always combination is the most widely used. Rules in the exit list produce an event when the system call exits. Other lists are task (when a task/process is created), user (to filter events that originate in userspace), and exclude (for filtering out events). The always action timestamps and writes the record. This contrasts with never, which suppresses the record and is used to override other rules. Note that rules are evaluated in order, so never rules meant to exclude events should be on top.

- -F arch=b64 specifies a filter, defined by a field (arch), a value (b64), and an operator (=). This one matches only 64-bit system calls. We need it because system call numbers differ between the 32-bit and 64-bit ABIs, so the kernel needs the architecture to identify the clock_settime we want. You can also filter by user ID, use greater-than (>) or less-than (<) operators, and much more; the full list is in auditctl's man page. As with system calls (-S), you can specify multiple -F arguments to combine filters.
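Because the syscall table depends on the ABI, a rule limited to arch=b64 won't see a 32-bit program changing the clock on the same machine. As a minimal sketch, assuming an x86_64 host with 32-bit compatibility enabled, the hypothetical helpers below emit one exit,always rule per ABI, each combining several -S flags (clock_settime, settimeofday, and adjtimex are example choices), and pass each rule to auditctl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one exit,always rule per ABI for the given
# syscalls. Several -S flags share one rule, which is cheaper than one rule each.
# Assumes an x86_64 kernel with 32-bit (b32) compatibility support.
time_change_rules() {
  local key=$1 arch sc rule
  shift
  for arch in b64 b32; do
    rule="-a exit,always -F arch=$arch"
    for sc in "$@"; do
      rule+=" -S $sc"
    done
    printf '%s -k %s\n' "$rule" "$key"
  done
}

# Hypothetical helper: pass each rule read from stdin to auditctl (run as root).
load_rules() {
  local line
  local -a args
  while read -r line; do
    [[ -z $line ]] && continue
    # Split the rule into separate arguments for auditctl.
    read -ra args <<< "$line"
    auditctl "${args[@]}"
  done
}
```

As root, time_change_rules changetime clock_settime settimeofday adjtimex | load_rules would load both rules; running auditctl -l afterwards shows what the kernel accepted.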

With the above rule defined, if we change the system clock:

date --set "$NEWDATE"

we'll see new entries in audit.log:

type=SYSCALL msg=audit(1522928030.508:2940): arch=c000003e syscall=227 success=yes exit=0 a0=0 a1=7ffe530e4db0 a2=1 a3=7ffe530e4b10 items=0 ppid=2906 pid=4745 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts4 ses=1 comm="date" exe="/bin/date" key="changetime"

type=UNKNOWN[1327] msg=audit(1522928030.508:2940): proctitle=64617465002D2D736574003330204D415220323031382031323A30303A3030

In these events, we don't see the name of the system call (clock_settime), only its number (syscall=227). To see the mapping between system call numbers and their names, run ausyscall --dump. On this particular system, one of the lines was 227 clock_settime.
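The proctitle field is hex-encoded because the raw command line separates its arguments with NUL bytes. ausearch -i (--interpret) decodes it for you; to show what the encoding is, here is a minimal bash sketch (decode_proctitle is a hypothetical name) that turns the hex back into a readable command line, replacing each NUL separator with a space. It assumes the value is in hex form; auditd writes a plain quoted string instead when the value needs no escaping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: decode the hex proctitle=... value from an audit record.
# Arguments are NUL-separated in the raw value; they are joined with spaces here.
decode_proctitle() {
  local hex=$1 out="" byte i
  for ((i = 0; i < ${#hex}; i += 2)); do
    byte=${hex:i:2}
    if [[ $byte == 00 ]]; then
      out+=" "
    else
      # printf turns the \xHH escape into the byte with that hex value.
      out+=$(printf "\\x$byte")
    fi
  done
  printf '%s\n' "$out"
}
```

Applied to the two proctitle values above, it yields cat /etc/passwd and date --set 30 MAR 2018 12:00:00, which shows the exact date string that was passed to date.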

Searching and Analyzing Audit Logs

Now that we have some audit logs, let's go ahead and analyze them. We can always use the likes of grep, but the Linux Audit System comes with a few handy binaries that already parse audit logs. For example, ausearch can easily filter logs by the event key we defined with -k in our rules. Below you can see traces of when the changetime rule was added and when it was triggered by the date command.

$ ausearch -k changetime

----

time->Thu Apr 5 11:32:16 2018

type=CONFIG_CHANGE msg=audit(1522927936.343:2600): auid=1000 ses=1 op="add_rule" key="changetime" list=4 res=1

----

time->Thu Apr 5 11:33:50 2018

type=UNKNOWN[1327] msg=audit(1522928030.508:2940): proctitle=64617465002D2D736574003330204D415220323031382031323A30303A3030

type=SYSCALL msg=audit(1522928030.508:2940): arch=c000003e syscall=227 success=yes exit=0 a0=0 a1=7ffe530e4db0 a2=1 a3=7ffe530e4b10 items=0 ppid=2906 pid=4745 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts4 ses=1 comm="date" exe="/bin/date" key="changetime"

For a more condensed view of audit events, aureport comes in handy. For example, we can use -f to filter only the file-related events:

$ aureport -f

File Report

===============================================

# date time file syscall success exe auid event

===============================================

1. 05.04.2018 11:25:52 /etc/passwd 2 yes /bin/cat 1000 917

2. 30.03.2018 12:14:34 /etc/passwd 2 yes /usr/bin/vim.basic 1000 2941

3. 30.03.2018 12:14:34 /etc/passwd 2 yes /usr/bin/vim.basic 1000 2942

4. 30.03.2018 12:14:34 /etc/passwd 89 no /usr/bin/vim.basic 1000 2943

5. 30.03.2018 12:14:34 /etc/passwd 2 yes /usr/bin/vim.basic 1000 2944

Centralizing Audit Logs With Elasticsearch/Logsene

Using the standard tools of the Linux Audit System is usually enough for monitoring a single system. Larger deployments will benefit from centralizing audit logs (as they do for all logs). By shipping audit logs to Elasticsearch, or to Logsene, our logging SaaS exposing the Elasticsearch API, we are able to get a better overview of all hosts. Searches and aggregations will also scale better with the volume of audit logs.

For shipping Linux Audit System logs to Elasticsearch, Auditbeat is the tool of choice. It replaces auditd as the recipient of audit events (though we'll use the same rules) and pushes data to Elasticsearch/Logsene instead of writing it to a local file.

To install Auditbeat, we'll use Elastic's package repositories. For example, on Ubuntu:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

sudo apt-get install apt-transport-https

echo "deb https://artifacts.elastic.co/packages/6.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-6.x.list

sudo apt-get update && sudo apt-get install auditbeat

Next, we'll edit /etc/auditbeat/auditbeat.yml to configure Auditbeat. Here's an example config file that uses the same rules for watching /etc/passwd and changing system time:

auditbeat.modules:
- module: auditd
  audit_rules: |
    -a exit,always -F arch=b64 -S clock_settime -k changetime
    -w /etc/passwd -p wra -k passwd

setup.template.enabled: false

output.elasticsearch:
  hosts: ["https://logsene-receiver.sematext.com:443"]
  index: "LOGSENE-APP-TOKEN-GOES-HERE"

Rules previously set with auditctl now go under the audit_rules key of Auditbeat's auditd module. Like with Filebeat, to send data to Logsene instead of a local Elasticsearch, you'd use logsene-receiver.sematext.com as the endpoint and your Sematext Logs App token as the index name.
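If you already keep auditd rules in a file, they can be copied into audit_rules mostly as-is. A minimal sketch, assuming bash and a rules file in auditctl syntax: the hypothetical rules_to_yaml helper below prints the auditbeat.modules header and indents each rule under the audit_rules block scalar. It keeps only rule lines (-a, -A, -w) and drops comments, blank lines, and control options such as -D, -b, or -e, on the assumption that only rule definitions belong in audit_rules.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn auditctl-syntax rules (read on stdin) into the
# auditd-module section of auditbeat.yml.
rules_to_yaml() {
  printf '%s\n' 'auditbeat.modules:' '- module: auditd' '  audit_rules: |'
  local line
  # "|| [[ -n $line ]]" also handles a last line without a trailing newline.
  while read -r line || [[ -n $line ]]; do
    # Keep rule definitions; skip comments, blank lines, and control options.
    case $line in
      -a\ * | -A\ * | -w\ *) printf '    %s\n' "$line" ;;
    esac
  done
}
```

For example, rules_to_yaml < /etc/audit/rules.d/audit.rules (the path is only an example) prints a block you can paste above the output section of auditbeat.yml.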

Since Auditbeat replaces auditd, we'll have to stop/disable auditd before starting Auditbeat:

service auditd stop

service auditbeat restart

An audit event (such as the previous cat /etc/passwd) will send a JSON document to Elasticsearch/Logsene that looks like this:

{
  "@timestamp": "2018-03-30T12:36:07.710Z",
  "user": {
    "sgid": "0",
    "fsuid": "0",
    "gid": "0",
    "name_map": {
      "egid": "root",
      "sgid": "root",
      "suid": "root",
      "uid": "root",
      "auid": "radu",
      "fsgid": "root",
      "fsuid": "root",
      "gid": "root",
      "euid": "root"
    },
    "fsgid": "0",
    "uid": "0",
    "egid": "0",
    "auid": "1000",
    "suid": "0",
    "euid": "0"
  },
  "process": {
    "ppid": "2906",
    "title": "cat /etc/passwd",
    "name": "cat",
    "exe": "/bin/cat",
    "cwd": "/root",
    "pid": "5373"
  },
  "file": {
    "device": "00:00",
    "inode": "3147443",
    "mode": "0644",
    "owner": "root",
    "path": "/etc/passwd",
    "uid": "0",
    "gid": "0",
    "group": "root"
  },
  "beat": {
    "version": "6.2.3",
    "name": "radu-laptop",
    "hostname": "radu-laptop"
  },
  "tags": [
    "passwd"
  ],
  "auditd": {
    "summary": {
      "actor": {
        "primary": "radu",
        "secondary": "root"
      },
      "object": {
        "primary": "/etc/passwd",
        "type": "file"
      },
      "how": "/bin/cat"
    },
    "paths": [
      {
        "dev": "08:01",
        "nametype": "NORMAL",
        "rdev": "00:00",
        "inode": "3147443",
        "item": "0",
        "mode": "0100644",
        "name": "/etc/passwd",
        "ogid": "0",
        "ouid": "0"
      }
    ],
    "sequence": 3029,
    "result": "success",
    "session": "1",
    "data": {
      "a0": "7ffea3df0793",
      "tty": "pts4",
      "a3": "7ffea3dee890",
      "syscall": "open",
      "a1": "0",
      "exit": "3",
      "arch": "x86_64",
      "a2": "1fffffffffff0000"
    }
  },
  "event": {
    "category": "audit-rule",
    "type": "syscall",
    "action": "opened-file",
    "module": "auditd"
  }
}

ausearch and aureport allow you to explore logs collected via auditd. Auditbeat can collect the same events and push them to Elasticsearch/Logsene.

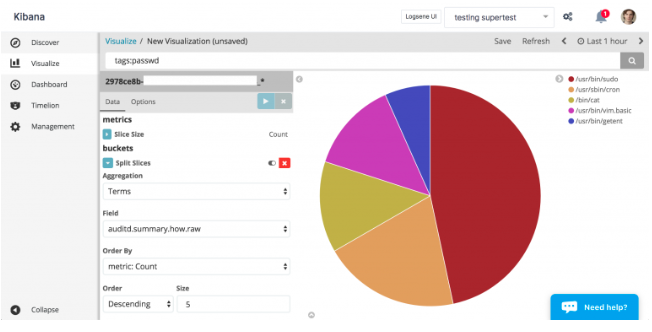

Once data is in, we can search it or set up Kibana visualizations, for example one showing which commands generated audit events:


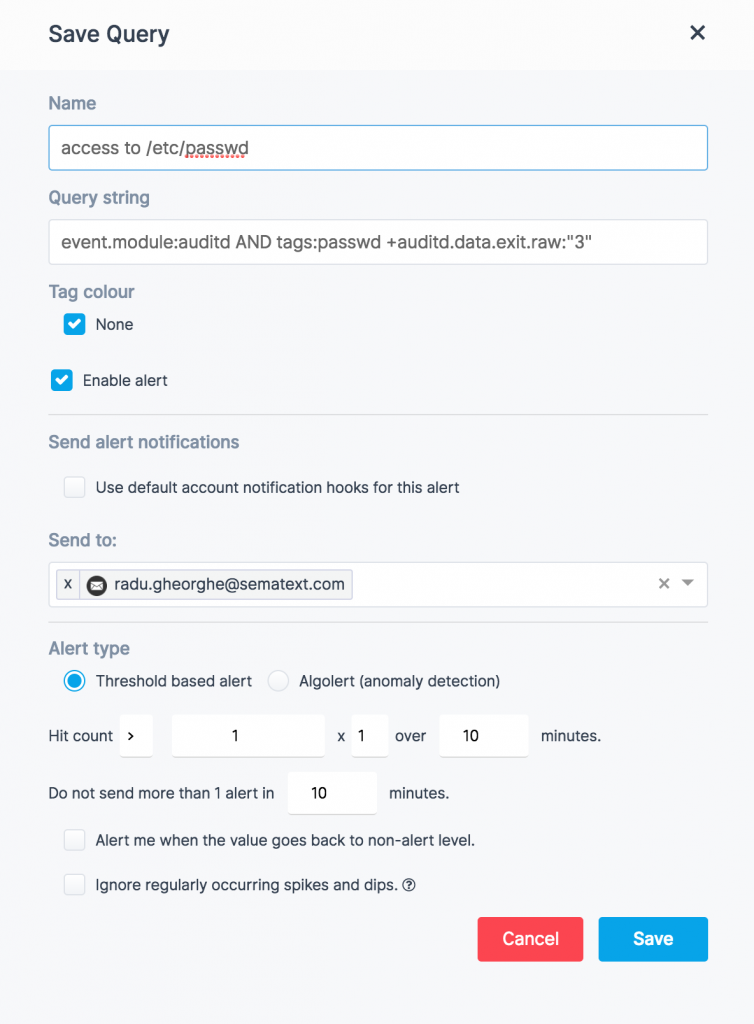
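Besides Kibana, the same documents can be queried through the Elasticsearch search API. As a minimal sketch, assuming a local Elasticsearch at http://localhost:9200 and Auditbeat's default auditbeat-* index names (both assumptions; override them with the illustrative ES_URL and ES_INDEX variables), the hypothetical search_by_tag helper below uses curl to find events whose tags contain a rule key such as passwd.

```shell
#!/usr/bin/env bash
# Hypothetical helper: search audit events tagged with a rule key (-k value).
# Assumes Elasticsearch at ES_URL (default http://localhost:9200) and indices
# matching ES_INDEX (default auditbeat-*); both defaults are assumptions.
search_by_tag() {
  local tag=$1
  local es_url="${ES_URL:-http://localhost:9200}"
  local index="${ES_INDEX:-auditbeat-*}"
  # The tag is inserted into the JSON body as-is, so keep it a simple word.
  curl -s -H 'Content-Type: application/json' \
    "$es_url/$index/_search" \
    -d "{\"query\": {\"match\": {\"tags\": \"$tag\"}}}"
}
```

For example, search_by_tag changetime returns the matching documents, including fields such as auditd.summary.actor.primary and process.exe shown in the JSON above.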

With Logsene, you can also set up alerts when saving a query.

Summary

If you followed all the way down here, congratulations! Either way, let's summarize:

- the Linux Audit System has rich audit capabilities, letting you track all system calls right from the kernel.

- auditd can listen to and log all audit events based on a set of rules defined via auditctl. You can use ausearch and aureport to drill through the local audit log.

- Auditbeat can replace auditd and listen to the same events, following rules defined in the same auditctl format. It will convert these events into JSON and push them to Elasticsearch/Logsene. There, you can run searches, create alerts and reports based on data from multiple hosts.

That being said, the Linux Audit System isn't the only way to audit... systems. Here are some complementary frameworks:

- Auditbeat itself has a file integrity module. You can use it to set up file watchers, much like we did here with the auditd module. Being a higher-level feature, it's often less efficient. For example, it has to scan for new files on startup. The upside is that it will work on Windows and OSX as well, not only on Linux.

- Bro is a framework geared towards network analysis, aiming to bridge the gap between academia and operations. Read more about Bro and how to ship Bro Logs to Elasticsearch/Logsene.

- Falco is, roughly speaking, the userspace equivalent of auditd. It can give you richer information, but it's going to be easier to disable. Of course, there's much more to it than that, so if you're curious, read more on Falco and how it compares to auditd, as well as to enforcing frameworks such as SELinux and AppArmor.

- Wazuh and Moloch are also IDS frameworks, focused on file integrity and network monitoring respectively. Both are integrated with Elasticsearch, so you can ship this information to Logsene as well, using the same API. Stay tuned for more tutorials!

Published at DZone with permission of Radu Gheorghe. See the original article here.

Opinions expressed by DZone contributors are their own.
