Modern Application Performance: Gaining Insight Into Telemetry and Observability

Learn how telemetry and observability can make application performance management more efficient and effective over time.

This is an article from DZone's 2023 Observability and Application Performance Trend Report.

In today's digital landscape, monitoring and managing application performance matters more than ever. As businesses rely on increasingly complex applications and systems to run their operations, ensuring optimal performance has become a top priority, and effective application performance management can mean the difference between business success and failure. Two key components have emerged to help teams understand and manage these sophisticated systems: telemetry and observability.

Telemetry, at its core, is the practice of gathering data from remote or inaccessible sources and transmitting it to equipment that monitors it. In IT systems, telemetry means collecting metrics, events, logs, and traces from software applications and infrastructure. This data is invaluable because it shows how the system behaves, helping teams identify trends, diagnose problems, and make informed decisions. Put simply, telemetry is the heartbeat monitor of your application, providing continuous, real-time updates about its health.
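To make the four signal types concrete, here is a minimal Python sketch of an in-process buffer that records metrics, events, logs, and trace timings as structured records and exports them as JSON lines. `TelemetryBuffer` and its method names are hypothetical, not the API of any particular library; a real system would hand these records to an agent or SDK for transmission.

```python
import json
import time
from dataclasses import dataclass, field, asdict
from typing import Any, Dict, List


@dataclass
class TelemetryRecord:
    kind: str  # "metric", "event", "log", or "trace"
    name: str
    timestamp: float  # seconds since the epoch
    attributes: Dict[str, Any] = field(default_factory=dict)


class TelemetryBuffer:
    """Hypothetical in-process buffer; exports records as JSON lines."""

    def __init__(self, service: str) -> None:
        self.service = service
        self.records: List[TelemetryRecord] = []

    def _add(self, kind: str, name: str, attributes: Dict[str, Any]) -> None:
        attributes["service"] = self.service  # tag every record with its source
        self.records.append(TelemetryRecord(kind, name, time.time(), attributes))

    def metric(self, name: str, value: float, unit: str) -> None:
        self._add("metric", name, {"value": value, "unit": unit})

    def event(self, name: str, **details: Any) -> None:
        self._add("event", name, dict(details))

    def log(self, level: str, message: str) -> None:
        self._add("log", level, {"message": message})

    def trace(self, operation: str, trace_id: str, duration_ms: float) -> None:
        self._add("trace", operation, {"trace_id": trace_id, "duration_ms": duration_ms})

    def export_jsonl(self) -> str:
        return "\n".join(json.dumps(asdict(record)) for record in self.records)


if __name__ == "__main__":
    buf = TelemetryBuffer("checkout")
    buf.metric("latency", 182.0, "Milliseconds")
    buf.event("deploy", version="1.4.2")
    buf.log("ERROR", "payment gateway timeout")
    buf.trace("POST /orders", trace_id="abc123", duration_ms=240.5)
    print(buf.export_jsonl())
```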

Observability takes this concept one step further. Although it shares some similarities with traditional monitoring, the two differ in important ways. Traditional monitoring checks predefined metrics or logs for anomalies. Observability is a more holistic approach: it involves not only gathering data but also understanding the "why" behind system behavior. By inferring a system's internal state from its external outputs, observability gives teams a comprehensive view of the system's overall health and helps them detect anomalies and troubleshoot issues, including problems that no predefined check anticipated.

Simply put, if telemetry tells you what is happening in your system, observability explains why it's happening.
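A small, hypothetical illustration of that distinction: latency measurements say which requests were slow (the "what"), and joining them with log lines on a shared trace ID surfaces context that helps explain why. The tuple layouts and the `explain_slow_requests` function are assumptions made for this sketch.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def explain_slow_requests(
    requests: Iterable[Tuple[str, float]],
    logs: Iterable[Tuple[str, str]],
    threshold_ms: float,
) -> Dict[str, List[str]]:
    """Map each slow request's trace ID to the log messages sharing that ID.

    `requests` holds (trace_id, latency_ms) pairs: the "what".
    `logs` holds (trace_id, message) pairs: context that helps explain "why".
    """
    slow = {trace_id for trace_id, latency in requests if latency > threshold_ms}
    context: Dict[str, List[str]] = defaultdict(list)
    for trace_id, message in logs:
        if trace_id in slow:
            context[trace_id].append(message)
    # Slow requests with no matching logs still appear, with an empty list.
    return {trace_id: context.get(trace_id, []) for trace_id in sorted(slow)}


if __name__ == "__main__":
    requests = [("t1", 120.0), ("t2", 950.0), ("t3", 80.0), ("t4", 1200.0)]
    logs = [("t2", "cache miss for user profile"), ("t2", "db query took 870 ms"), ("t3", "ok")]
    print(explain_slow_requests(requests, logs, threshold_ms=500))
```

A slow request with no correlated logs (`t4` in the usage example) is itself a useful signal: it points to a gap in instrumentation.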

The Emergence of Telemetry and Observability in Application Performance

In the early days of information systems, understanding what a system was doing at any given moment was a challenge, and the advent of telemetry played a significant role in addressing it. The word telemetry is derived from the Greek roots tele (remote) and metron (measure), and the practice is fundamentally about measuring data remotely. It was used extensively in fields such as meteorology, aerospace, and healthcare long before it was applied to information technology.

As systems grew more complex, so did the need for a more nuanced understanding of their behavior. This is where observability, a term borrowed from control theory, entered the picture. In control theory, a system is observable if its internal state can be inferred from its external outputs; in IT, observability likewise goes beyond collecting metrics, logs, and traces to making sense of that data so that teams can understand the system's internal state. These concepts were initially applied within individual software or hardware components, but as distributed systems evolved and brought new challenges, telemetry and observability came to be applied across entire systems.

Nowadays, telemetry and observability are integral parts of modern information systems, helping operators and developers understand, debug, and optimize their systems. They provide the necessary visibility into system performance, usage patterns, and potential bottlenecks, enabling proactive issue detection and resolution.

Emerging Trends and Innovations

With cloud computing taking center stage in many organizations' digital transformation, providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud have integrated telemetry and observability into their services. They offer suites of tools that let users collect, analyze, and visualize telemetry data from workloads running in the cloud.

These tools don't just focus on raw data collection but also provide features for advanced analytics, anomaly detection, and automated responses. This allows users to transform the collected data into actionable insights.
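As a rough illustration of automated anomaly detection, the sketch below flags a data point when it deviates from the mean of a trailing window by more than a chosen number of standard deviations. This rolling z-score is a deliberately simple stand-in, not the model any cloud provider actually uses; the window size and threshold are assumed values you would tune.

```python
from collections import deque
from statistics import mean, pstdev
from typing import List, Sequence


def detect_anomalies(values: Sequence[float], window: int = 10, threshold: float = 3.0) -> List[int]:
    """Return indexes of points that sit more than `threshold` standard
    deviations away from the mean of the preceding `window` points."""
    history: deque = deque(maxlen=window)
    anomalies: List[int] = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # A perfectly flat window has no spread to compare against,
            # so this sketch skips it rather than dividing by zero.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(i)
        history.append(value)  # anomalies also enter the baseline here
    return anomalies


if __name__ == "__main__":
    latency_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 250, 100]
    print(detect_anomalies(latency_ms))  # -> [10]
```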

Another industry trend is the adoption of open-source tools and standards for observability, such as OpenTelemetry, a vendor-neutral project that provides a set of APIs, libraries, agents, and instrumentation for generating and exporting telemetry data.

The landscape of telemetry and observability has come a long way since its inception and continues to evolve with technological advancements and changing business needs. By building these capabilities into services from providers such as AWS and Azure, the cloud platforms have made it easier for organizations to gain insight into application performance and, in turn, deliver better user experiences.
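One concrete piece of the open standards behind OpenTelemetry is trace-context propagation. OpenTelemetry's default propagator uses the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`), which lets each service in a request path attach its spans to the same trace. The stdlib-only sketch below generates, parses, and forwards that header to show its structure; it only handles version `00` and the sampled flag, and in practice the OpenTelemetry SDK does this for you.

```python
import re
import secrets
from typing import Dict, Union

# version "00" - 32 hex trace-id - 16 hex parent-id - 2 hex trace-flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace: random trace-id and parent-id."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    parent_id = secrets.token_hex(8)  # 8 random bytes -> 16 hex chars
    return f"00-{trace_id}-{parent_id}-{'01' if sampled else '00'}"


def parse_traceparent(header: str) -> Dict[str, Union[str, bool]]:
    match = TRACEPARENT_RE.match(header.strip())
    if not match:
        raise ValueError(f"malformed traceparent: {header!r}")
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        raise ValueError("all-zero trace-id or parent-id is invalid")
    return {"trace_id": trace_id, "parent_id": parent_id, "sampled": (int(flags, 16) & 0x01) == 1}


def child_traceparent(incoming: str) -> str:
    """Header a service sends downstream: same trace-id, its own span id."""
    ctx = parse_traceparent(incoming)
    flags = "01" if ctx["sampled"] else "00"
    return f"00-{ctx['trace_id']}-{secrets.token_hex(8)}-{flags}"


if __name__ == "__main__":
    incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
    print(parse_traceparent(incoming))
    print(child_traceparent(incoming))  # same trace-id, new parent-id
```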

The Benefits of Telemetry and Observability

The adoption of telemetry and observability has brought a paradigm shift to application performance management. This section examines the advantages these emerging technologies provide.

Enhanced Understanding of System Behavior

Together, telemetry and observability form the backbone of understanding system behavior. Telemetry, the automatic recording and transmission of data from remote or inaccessible parts of an application, provides a wealth of information about the system's operations. Observability then derives meaningful insights from this data, allowing teams to comprehend the system's internal state from its external outputs. This combination enables teams to proactively identify anomalies, trends, and potential areas for improvement.

Improved Fault Detection and Resolution

Another significant advantage of telemetry and observability is a stronger ability to detect and resolve faults. Many monitoring tools let users collect and track metrics, collect and monitor log files, set alarms, and automatically react to configuration changes. This level of visibility speeds up the detection of operational issues, enabling quicker resolution and reducing system downtime.
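The log-monitoring part of this can be sketched locally: the snippet below turns a stream of log lines into a per-minute error-count metric and reports the minutes that exceed a threshold. Managed services do this server-side (CloudWatch Logs metric filters, for instance); the log line format and the function names here are assumptions for illustration.

```python
import re
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable, List

# Assumed format: "<ISO-8601 timestamp> <LEVEL> <message>"
LOG_LINE = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<msg>.*)$")


def error_counts_per_minute(lines: Iterable[str]) -> Dict[datetime, int]:
    """Turn a stream of log lines into an errors-per-minute metric."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or match["level"] != "ERROR":
            continue  # skip non-error and unparseable lines
        try:
            ts = datetime.fromisoformat(match["ts"])
        except ValueError:
            continue  # timestamp not in ISO-8601 form
        counts[ts.replace(second=0, microsecond=0)] += 1
    return dict(sorted(counts.items()))


def breaching_minutes(counts: Dict[datetime, int], threshold: int) -> List[datetime]:
    """Minutes whose error count is above the alarm threshold."""
    return [minute for minute, n in counts.items() if n > threshold]


if __name__ == "__main__":
    lines = [
        "2023-05-01T12:00:05 INFO request ok",
        "2023-05-01T12:00:17 ERROR upstream timeout",
        "2023-05-01T12:00:42 ERROR upstream timeout",
        "2023-05-01T12:01:03 ERROR db connection reset",
    ]
    counts = error_counts_per_minute(lines)
    print(counts)
    print(breaching_minutes(counts, threshold=1))
```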

Optimized Resource Utilization

These modern application performance techniques also help optimize resource utilization. By understanding how resources are used and where inefficiencies lie, teams can make data-driven decisions about resource allocation. Auto-scaling, which adjusts capacity to maintain steady, predictable performance at the lowest possible cost, is a prime example of this benefit.
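Target-tracking auto-scaling is often explained with a proportional rule: if per-instance utilization is 50% above target, add roughly 50% more capacity. The sketch below uses the formula documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * metric / target)`, clamped to a minimum and maximum size. Cloud auto-scaling services apply their own algorithms with cooldowns and tolerances, so treat this as an approximation; `desired_capacity` is a hypothetical helper.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int = 1, max_size: int = 20) -> int:
    """Proportional scaling toward a per-instance metric target.

    desired = ceil(current * metric / target), clamped to [min_size, max_size].
    Real autoscalers add tolerances and cooldowns, omitted here.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    desired = math.ceil(current * metric / target)
    return max(min_size, min(max_size, desired))


if __name__ == "__main__":
    # 4 instances at 90% CPU with a 60% target -> scale out to 6.
    print(desired_capacity(4, 90.0, 60.0))
```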

Challenges in Implementing Telemetry and Observability

Implementing telemetry and observability in existing systems is not a straightforward task. The challenges range from the complexity of modern applications to the sheer volume of data that must be managed. Let's look at these potential pitfalls and roadblocks.

Potential Difficulties and Roadblocks

The first hurdle is the complexity of modern applications. They are typically distributed across multiple environments — cloud, on-premises, hybrid, and even multi-cloud setups. This distribution makes it harder to understand system behavior, as the data collected could be disparate and disconnected, complicating telemetry efforts.

Another challenge is the sheer volume, speed, and variety of data. Modern applications generate massive amounts of telemetry data. Collecting, storing, processing, and analyzing this data in real time can be daunting. It requires robust infrastructure and efficient algorithms to handle the load and provide actionable insights.
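One widely used technique for keeping trace volume manageable is sampling. The sketch below makes a deterministic keep/drop decision from a hash of the trace ID, so every service that sees the same trace reaches the same decision and sampled traces remain complete. It is similar in spirit to OpenTelemetry's `TraceIdRatioBased` sampler, but the SHA-256 bucketing here is an assumption of this sketch, not that sampler's implementation.

```python
import hashlib


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Deterministic head sampling keyed on the trace ID.

    Every service that hashes the same trace_id gets the same bucket, so a
    trace is either kept everywhere or dropped everywhere.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Top 53 bits of the digest -> an exactly representable float in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < sample_rate


if __name__ == "__main__":
    ids = [f"trace-{i}" for i in range(10_000)]
    kept = sum(keep_trace(t, 0.1) for t in ids)
    print(f"kept {kept} of {len(ids)} traces")  # roughly 1,000
```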

Also, integrating telemetry and observability into legacy systems can be difficult. These older systems may not be designed with telemetry and observability in mind, making it challenging to retrofit them without impacting performance.
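One low-touch way to retrofit timing into legacy code, assuming it is written in Python, is to wrap existing functions instead of editing their bodies. The decorator below records each call's duration in a module-level dictionary; `instrumented`, `TIMINGS`, and `legacy_compute_invoice` are hypothetical names. The wrapper itself adds a small overhead per call, which is worth measuring on hot paths.

```python
import functools
import time
from collections import defaultdict
from typing import Callable, DefaultDict, List, TypeVar

F = TypeVar("F", bound=Callable)
TIMINGS: DefaultDict[str, List[float]] = defaultdict(list)  # function -> durations in ms


def instrumented(func: F) -> F:
    """Record the wall-clock duration of every call without editing func."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Runs on success and on exceptions, so failures are timed too.
            TIMINGS[func.__qualname__].append((time.perf_counter() - start) * 1000)
    return wrapper  # type: ignore[return-value]


# A stand-in for existing legacy code; its body stays untouched.
def legacy_compute_invoice(total: float, tax_rate: float) -> float:
    return round(total * (1 + tax_rate), 2)


# Retrofit by rebinding the name instead of rewriting the function.
legacy_compute_invoice = instrumented(legacy_compute_invoice)

if __name__ == "__main__":
    legacy_compute_invoice(100.0, 0.2)
    print(dict(TIMINGS))
```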

Strategies To Mitigate Challenges

Despite these challenges, there are ways to overcome them. For the complexity and diversity of modern applications, adopting a unified approach to telemetry can help. This involves using a single platform that can collect, correlate, and analyze data from different environments. To tackle the issue of data volume, implementing automated analytics and machine learning algorithms can be beneficial. These technologies can process large datasets in real time, identifying patterns and providing valuable insights.
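At the heart of a unified approach is mapping data from different environments onto one shared schema so it can be correlated. The sketch below normalizes a hypothetical cloud log record and a hypothetical on-premises syslog-style record (all field names are assumptions) into common fields with UTC timestamps, then merges them into a single timeline.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, List

# Syslog numeric severities (RFC 5424) mapped to shared labels; partial list.
SYSLOG_SEVERITY = {"3": "ERROR", "4": "WARNING", "6": "INFO"}


def normalize(record: Dict[str, Any], source: str) -> Dict[str, Any]:
    """Map a source-specific record onto one shared schema (field names assumed)."""
    if source == "cloud":
        return {
            "timestamp": datetime.fromtimestamp(record["epoch_ms"] / 1000, tz=timezone.utc),
            "host": record["instance_id"],
            "severity": record["level"].upper(),
            "message": record["msg"],
            "source": source,
        }
    if source == "on_prem":
        return {
            # Expects a UTC offset in the string; naive values would be read as local time.
            "timestamp": datetime.fromisoformat(record["time"]).astimezone(timezone.utc),
            "host": record["hostname"],
            "severity": SYSLOG_SEVERITY.get(record["syslog_severity"], "UNKNOWN"),
            "message": record["text"],
            "source": source,
        }
    raise ValueError(f"unknown source: {source}")


def unified_timeline(cloud: Iterable[Dict[str, Any]],
                     on_prem: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Normalize both feeds and merge them into one time-ordered list."""
    events = [normalize(r, "cloud") for r in cloud] + [normalize(r, "on_prem") for r in on_prem]
    return sorted(events, key=lambda e: e["timestamp"])


if __name__ == "__main__":
    cloud = [{"epoch_ms": 1682942400000, "instance_id": "i-0abc", "level": "error", "msg": "timeout"}]
    on_prem = [{"time": "2023-05-01T13:59:30+02:00", "hostname": "db01",
                "syslog_severity": "4", "text": "slow query"}]
    for event in unified_timeline(cloud, on_prem):
        print(event["timestamp"].isoformat(), event["host"], event["severity"], event["message"])
```

With both feeds on one clock, the on-premises database warning lands just before the cloud timeout, which is exactly the kind of cross-environment correlation a unified approach is meant to enable.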

For legacy system integration issues, it may be worthwhile to invest in modernizing these systems, for example by refactoring the application or adopting technology stacks that are more conducive to telemetry and observability. Finally, investing in training and upskilling teams on tools and best practices can be immensely beneficial.

Practical Steps for Gaining Insights

Both telemetry and observability have become integral parts of modern application performance management. They offer in-depth insights into our systems and applications, enabling us to detect and resolve issues before they impact end-users. Importantly, these concepts are not just theoretical — they're put into practice every day across services provided by leading cloud providers such as AWS and Google Cloud.

In this section, we'll walk through a step-by-step guide to harnessing the power of telemetry and observability. I will also share some best practices to maximize the value you gain from these insights.

Step-By-Step Guide

The following steps implement performance management for a modern application on AWS using telemetry and observability; the same approach can be implemented with other cloud providers:

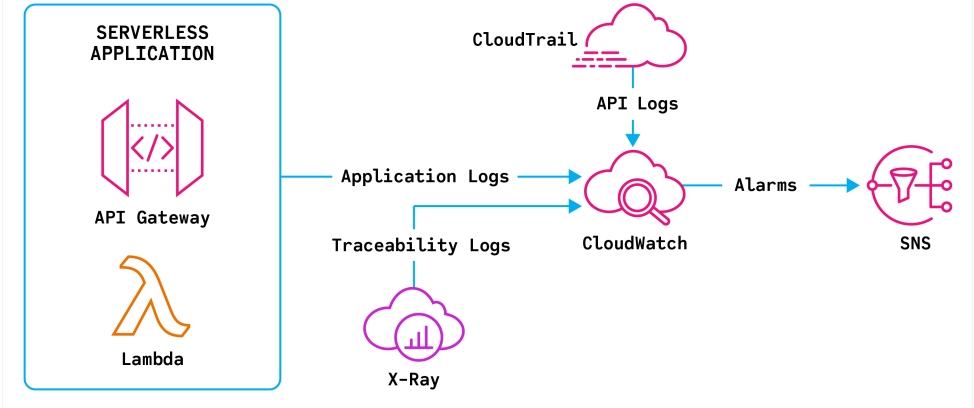

Step 1 – Start by setting up Amazon CloudWatch. CloudWatch collects monitoring and operational data in the form of logs, metrics, and events, giving you a unified view of your AWS resources, applications, and services.
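One way to get custom application metrics into CloudWatch is the CloudWatch Embedded Metric Format (EMF): a JSON log line whose `_aws` key tells CloudWatch which fields to extract as metrics when the line is written to CloudWatch Logs, for example from a Lambda function's standard output. The helper below builds such a line; the `MyApp` namespace, the `Service` dimension, and the `Latency` metric are illustrative assumptions.

```python
import json
import time
from typing import Any, Dict, Tuple


def emf_record(namespace: str, dimensions: Dict[str, str],
               metrics: Dict[str, Tuple[float, str]], **properties: Any) -> str:
    """Build one CloudWatch Embedded Metric Format (EMF) log line.

    `metrics` maps a metric name to (value, unit); extra keyword arguments
    become plain properties that are logged but not extracted as metrics.
    """
    document = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [list(dimensions)],  # a single dimension set
                "Metrics": [{"Name": name, "Unit": unit} for name, (_, unit) in metrics.items()],
            }],
        },
        **dimensions,  # dimension values live at the top level
        **{name: value for name, (value, _) in metrics.items()},
        **properties,
    }
    return json.dumps(document)


if __name__ == "__main__":
    # In a Lambda function, printing this line sends it to CloudWatch Logs.
    print(emf_record("MyApp", {"Service": "checkout"},
                     {"Latency": (182.0, "Milliseconds")}, requestId="req-42"))
```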

Step 2 – Use AWS X-Ray for analyzing and debugging your applications. This service provides an end-to-end view of requests as they travel through your application, showing a map of your application's underlying components.
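A service map can be thought of as edges between calling and called components. The sketch below derives caller-to-callee edge counts from a list of simplified segments, each with an `id`, a service `name`, and an optional `parent_id`. These fields are loosely modeled on X-Ray segment documents, but the data shape is simplified for illustration and is not the X-Ray API.

```python
from collections import Counter
from typing import Dict, List


def service_map(segments: List[Dict[str, str]]) -> Counter:
    """Count (caller, callee) service edges across recorded segments."""
    by_id = {seg["id"]: seg for seg in segments}
    edges: Counter = Counter()
    for seg in segments:
        parent = by_id.get(seg.get("parent_id"))
        # Calls within the same service are not edges on the map.
        if parent and parent["name"] != seg["name"]:
            edges[(parent["name"], seg["name"])] += 1
    return edges


if __name__ == "__main__":
    segments = [
        {"id": "a1", "name": "api-gateway"},
        {"id": "b1", "name": "orders", "parent_id": "a1"},
        {"id": "c1", "name": "payments", "parent_id": "b1"},
        {"id": "c2", "name": "payments", "parent_id": "b1"},
    ]
    for (caller, callee), calls in service_map(segments).items():
        print(f"{caller} -> {callee}: {calls} call(s)")
```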

Step 3 – Enable AWS CloudTrail to keep track of user activity and API usage. CloudTrail enhances visibility into user and resource activity by recording AWS Management Console actions and API calls. You can identify which users and accounts called AWS, the source IP address from which the calls were made, and when the calls occurred.
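CloudTrail delivers log files as JSON objects with a top-level `Records` array, where each record includes fields such as `eventTime`, `eventSource`, `eventName`, `sourceIPAddress`, and `userIdentity`. The sketch below reads such a document and lists who called which API, from where, and when; the sample records in the usage section are made up.

```python
import json
from collections import Counter
from typing import List, Tuple

Row = Tuple[str, str, str, str]  # (eventTime, caller, service:API, source IP)


def summarize_cloudtrail(log_json: str) -> List[Row]:
    """List who called which API, from where, and when, oldest first."""
    rows: List[Row] = []
    for rec in json.loads(log_json)["Records"]:
        identity = rec.get("userIdentity", {})
        caller = identity.get("arn") or identity.get("type", "unknown")
        api_call = f"{rec['eventSource']}:{rec['eventName']}"
        rows.append((rec["eventTime"], caller, api_call, rec.get("sourceIPAddress", "")))
    # Timestamps in the same ISO-8601 UTC format sort correctly as strings.
    return sorted(rows)


def calls_per_caller(rows: List[Row]) -> Counter:
    return Counter(caller for _, caller, _, _ in rows)


if __name__ == "__main__":
    # Made-up sample records for illustration.
    sample = json.dumps({"Records": [
        {"eventTime": "2023-05-01T12:03:00Z", "eventSource": "ec2.amazonaws.com",
         "eventName": "StopInstances", "sourceIPAddress": "203.0.113.10",
         "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"}},
        {"eventTime": "2023-05-01T12:01:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "DeleteBucket", "sourceIPAddress": "198.51.100.7",
         "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/bob"}},
    ]})
    for row in summarize_cloudtrail(sample):
        print(row)
```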

Step 4 – Don't forget to set up alerts and notifications. Amazon SNS (Simple Notification Service) can send you alerts when CloudWatch alarms defined on your metrics change state.
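As a sketch, the dictionary below holds keyword arguments for CloudWatch's `PutMetricAlarm` API, as accepted by boto3's `put_metric_alarm`: alarm when average latency exceeds a threshold in 3 of the last 5 one-minute periods, and notify an SNS topic on both ALARM and OK transitions. The namespace, metric, and topic ARN are placeholders, and `would_alarm` is a simplified local model of the M-of-N rule that ignores missing-data handling.

```python
from typing import Any, Dict, Sequence


def alarm_params(service: str, topic_arn: str, threshold_ms: float) -> Dict[str, Any]:
    """Keyword arguments for CloudWatch PutMetricAlarm (boto3 put_metric_alarm)."""
    return {
        "AlarmName": f"{service}-high-latency",
        "Namespace": "MyApp",  # placeholder custom namespace
        "MetricName": "Latency",
        "Dimensions": [{"Name": "Service", "Value": service}],
        "Statistic": "Average",
        "Period": 60,  # seconds per datapoint
        "EvaluationPeriods": 5,  # look at the last 5 datapoints...
        "DatapointsToAlarm": 3,  # ...and alarm if 3 of them breach
        "Threshold": threshold_ms,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [topic_arn],  # SNS topic notified on entering ALARM
        "OKActions": [topic_arn],  # and again when the alarm recovers
    }


def would_alarm(datapoints: Sequence[float], threshold: float,
                evaluation_periods: int = 5, datapoints_to_alarm: int = 3) -> bool:
    """Simplified local model of the M-of-N rule (ignores missing data)."""
    recent = datapoints[-evaluation_periods:]
    return sum(1 for v in recent if v > threshold) >= datapoints_to_alarm


if __name__ == "__main__":
    params = alarm_params("checkout", "arn:aws:sns:us-east-1:123456789012:ops-alerts", 500.0)
    print(params)
    # With AWS credentials configured, the same dict could be applied via boto3:
    #   import boto3
    #   boto3.client("cloudwatch").put_metric_alarm(**params)
    print(would_alarm([400, 620, 700, 480, 650], threshold=500.0))  # -> True
```

Requiring 3 of 5 breaching datapoints, rather than a single one, is a common way to avoid paging on one noisy minute.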

Figure 1: An example of observability on AWS

Best Practices

Now that we've covered the basics of setting up the tools and services for telemetry and observability, let's shift our focus to some best practices that will help you derive maximum value from these insights:

- Establish clear objectives – Understand what you want to achieve with your telemetry data — whether it's improving system performance, troubleshooting issues faster, or strengthening security measures.

- Ensure adequate training – Make sure your team is adequately trained in using the tools and interpreting the data provided. Remember, the tools are only as effective as the people who wield them.

- Be proactive rather than reactive – Use the insights gained from telemetry and observability to predict potential problems before they happen instead of merely responding to them after they've occurred.

- Conduct regular reviews and assessments – Make it a point to regularly review and update your telemetry and observability strategies as your systems evolve. This will help you stay ahead of the curve and maintain optimal application performance.
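To make the "be proactive" practice concrete: fitting a straight line to recent samples of a metric such as disk usage gives a rough estimate of how long until it crosses a limit, so you can act before it does. The sketch assumes growth is roughly linear, which real workloads often are not, and `hours_until_threshold` is a hypothetical helper.

```python
from typing import Optional, Sequence, Tuple


def hours_until_threshold(samples: Sequence[Tuple[float, float]],
                          threshold: float) -> Optional[float]:
    """Least-squares line through time-ordered (hour, value) samples, extrapolated forward.

    Returns the estimated hours after the last sample until the fitted line
    reaches `threshold`, or None if the trend is flat or falling.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    if sxx == 0:
        raise ValueError("samples need at least two distinct timestamps")
    slope = sum((x - mean_x) * (y - mean_y) for x, y in samples) / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing = (threshold - intercept) / slope  # hour at which the line hits threshold
    return max(0.0, crossing - samples[-1][0])  # 0.0: the fitted line is already past it


if __name__ == "__main__":
    disk_used_pct = [(0, 60.0), (1, 62.0), (2, 64.0), (3, 66.0)]
    print(hours_until_threshold(disk_used_pct, threshold=90.0))  # -> 12.0
```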

Conclusion

The rise of telemetry and observability signifies a paradigm shift in how we approach application performance. With these tools, teams are no longer just solving problems — they are anticipating and preventing them. In the complex landscape of modern applications, telemetry and observability are not just nice-to-haves; they are essentials that empower businesses to deliver high-performing, reliable, and user-friendly applications.

As applications continue to evolve, so will the tools that manage their performance. We can anticipate more advanced telemetry and observability solutions equipped with AI and machine learning capabilities for predictive analytics and automated anomaly detection. These advancements will further streamline application performance management, making it more efficient and effective over time.
