Mitigating DevOps Repository Risks

Docker Hub is in the news for two reasons: image retention limits and download throttling. Let's discuss both and look at the alternatives.


Docker Hub: In the News

Docker Hub is a cloud-based repository where popular Docker images are published and from which end users pull them for their cloud-native infrastructure and deployments. Docker images are lightweight and portable, so they can be moved easily between systems. Anybody can create a set of standard images, store them in a repository, and share them throughout the organization or, through Docker Hub, with the wider community.

Docker Hub was recently in the news for the following two reasons:

Image retention limits

Images stored on Docker Hub under free accounts (very common for both open-source projects and automated builds) will be subject to a six-month image retention policy: images that stay inactive for six months will be deleted.

Download throttling

Docker introduced a download rate limit of 100 pulls per six hours for anonymous users and 200 pulls per six hours for free accounts.
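To get a feel for what these limits mean for a build farm, here is a minimal sketch in Python. It assumes a rolling six-hour window, which may not match how Docker actually counts pulls; the class and function names and the tier labels are made up for this illustration, and only the 100 and 200 figures come from the policy described above.

```python
from collections import deque

SIX_HOURS = 6 * 60 * 60  # window length in seconds

# Limits quoted above; the tier labels are names chosen for this sketch.
PULL_LIMITS = {"anonymous": 100, "free": 200}


class PullWindow:
    """Tracks pulls for one client in a rolling six-hour window (assumed model)."""

    def __init__(self, tier):
        self.limit = PULL_LIMITS[tier]
        self.timestamps = deque()

    def try_pull(self, now):
        """Record a pull at time `now` (seconds). Return False if it would be throttled."""
        # Forget pulls that have aged out of the window.
        while self.timestamps and now - self.timestamps[0] >= SIX_HOURS:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True


def window_demand(pulls_per_build, builds_per_hour):
    """Number of pulls a CI setup issues in six hours at a steady rate."""
    return pulls_per_build * builds_per_hour * 6
```

For example, a pipeline that runs five builds an hour and pulls four images per build issues `window_demand(4, 5)` = 120 pulls in six hours, which is already over the anonymous limit.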

This is not the first time Docker Hub has made the news. In 2018, 17 malicious Docker images were found on Docker Hub, allowing hackers to make $90,000 in cryptojacking profits.

It is shocking how much of the internet blindly trusts and pulls images from Docker Hub. Because accounts and projects within Docker Hub are interconnected, tracking down exactly which assets were impacted by a security breach is a huge task with severe implications for your organization. It slows down everything from development to operations to deployment.

After a breach, the DevOps team has to spend many hours looking through image repositories and autobuilds for suspicious activity in their accounts and projects. They also need to reset passwords, remove and replace all the images from compromised accounts, and redo work that has already been done.

Docker Acquisition: End of an Era?

Docker provided what other packaging tools didn't: free image hosting with no strings attached. That was a significant factor in attracting the developer community.

However, Docker’s fate as a technology and a company was transformed by Google's open-source Kubernetes platform, which democratized container technology. In 2019, Mirantis acquired Docker's enterprise business, further clouding Docker’s future as a company.

No doubt, Docker helped to pioneer the use of containers in software development at a time when heavyweight virtual machines were the only way to package and ship code at scale. Docker also improved the flexibility and portability of applications but struggled to find a sustainable business model, especially as Kubernetes continued to grow in popularity.

After selling its enterprise business, the original Docker, Inc. is left with only two platforms, Docker Desktop and Docker Hub. It has therefore pivoted its business toward the developer-to-Kubernetes pipeline, with an emphasis on those two platforms.

While Docker has been an innovator in the containerization space, it has not done the best job of protecting its business interests. For example, it miscalculated the power of open source and standards by trying to compete with Kubernetes. It was also unable to properly integrate its enterprise business with its community, which resulted in the sale of its enterprise assets to a lesser-known company, Mirantis.

Looking at Docker's most recent decisions to change the image retention policy and the throttling limit, you may also ask yourself whether it has underestimated the value of its community and the business impact on its enterprise customers.

Docker License Challenges

Docker images are composed of a variety of software and operating system packages that have disparate license terms and compliance obligations. To understand the risk of a container that you are adopting in your enterprise, you need to know all of those dependencies so that you can evaluate compliance with your corporate security guidelines and open-source policies.

To properly understand the license terms of images downloaded from Docker Hub, you need automated tooling that can drill down into the image and identify all of the operating system packages, bundled applications, dependent libraries, and the aggregate licenses of all of these layers in order to identify non-compliant packages. Complying with license terms and requirements is crucial because it minimizes business and legal risk.

JFrog offers a security scanning solution called Xray that can drill into Docker images to identify security vulnerabilities, check license compliance, and flag new vulnerabilities as they are discovered by security researchers.
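Independent of any particular product, the core of a license check is small. The sketch below is a hypothetical illustration in Python, not how Xray or any other scanner works: it assumes some other tool has already extracted a package inventory from the image layers, and the `Package` type, the allowlist, and the sample inventory are all invented for this example.

```python
from dataclasses import dataclass

# Hypothetical corporate policy: the licenses approved for use.
ALLOWED_LICENSES = frozenset({"MIT", "Apache-2.0", "BSD-3-Clause"})


@dataclass(frozen=True)
class Package:
    name: str
    layer: str    # e.g. "os", "application", "library"
    license: str  # SPDX-style identifier


def aggregate_licenses(packages):
    """Return the distinct licenses found across all layers, sorted."""
    return sorted({p.license for p in packages})


def non_compliant(packages, allowed=ALLOWED_LICENSES):
    """Return the packages whose license is not on the allowlist."""
    return [p for p in packages if p.license not in allowed]


# Made-up inventory for illustration only.
inventory = [
    Package("base-files", "os", "GPL-3.0-only"),
    Package("web-app", "application", "Apache-2.0"),
    Package("json-helper", "library", "MIT"),
]

for pkg in non_compliant(inventory):
    print(f"{pkg.name} ({pkg.layer}): {pkg.license} is not approved")
```

The hard part in practice is producing an accurate inventory for every layer, which is why the automated tooling matters.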

Mitigate Risk: Time to Act Soon

Two groups are likely to be affected by the new policies established by Docker: the open-source contributors who create Docker images and the DevOps enthusiasts who consume them.

The open-source group will have image retention issues for less-used but important images. DevOps enthusiasts will have challenges using Docker Hub as a system of record, because images might disappear without warning and break builds, and anonymous or free-account builds can fail when image pulls are throttled.
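A simple audit can show which images are exposed to the retention policy. The Python sketch below is only an illustration under stated assumptions: it treats "six months" as 180 days, treats "activity" as the most recent push or pull, and expects you to supply the last-activity dates yourself. The function name and the input format are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: six months approximated as 180 days.
RETENTION = timedelta(days=180)


def images_at_risk(last_activity, now, retention=RETENTION):
    """Return the image names whose last activity is older than the retention period.

    `last_activity` maps an image name to the datetime of its most recent
    push or pull (how you obtain these dates is outside this sketch).
    """
    return sorted(
        name for name, seen in last_activity.items() if now - seen > retention
    )
```

Any image this returns would need to be pulled, pushed again, or copied somewhere you control before the policy removes it.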

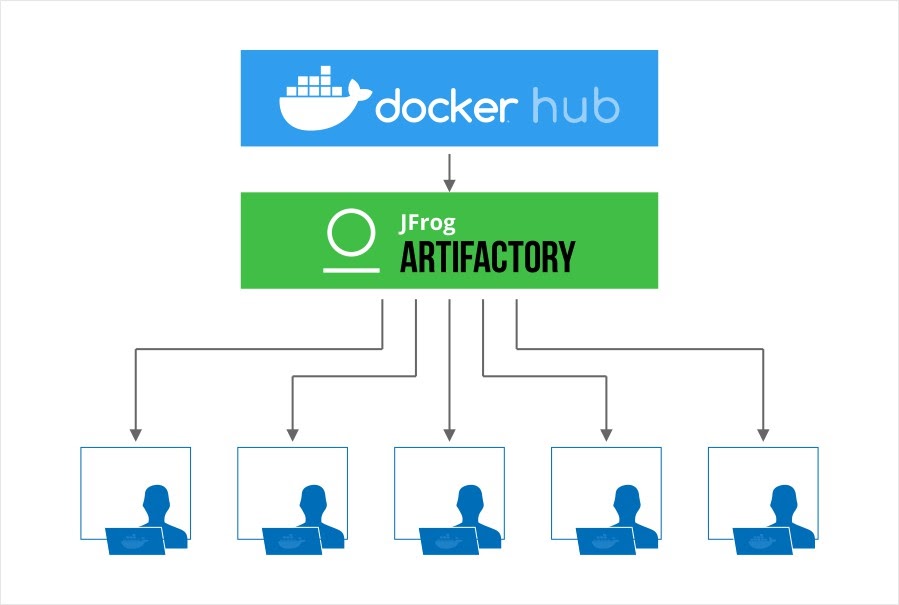

With the latest image retention and throttling policies by Docker, free-tier users and open-source enthusiasts are going to be affected. For these communities, the strongest option is to move to a full repository manager such as Artifactory:

Artifactory caches images, so you are not affected by upstream removal.

Artifactory serves from the cache, so each image is pulled from upstream only once, which avoids throttling.

Only a single Docker Hub license is required for all developers and build machines in an organization.

Artifactory allows you to create multiple Docker registries per instance. You can make use of a local repository as a private Docker registry to share Docker images across the organization with fine-grained access control.

You can store and retrieve any type of artifact, including the secure Docker images that your development teams produce. Having these artifacts stored in a central, managed location makes Artifactory an essential part of any software delivery lifecycle.
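The caching behavior described above can be sketched as a pull-through cache. This is a toy model in Python, not Artifactory's implementation: the `PullThroughCache` class and the `fetch_upstream` callback are hypothetical names, and a real registry proxy also handles tags that move, authentication, and cache expiry.

```python
class PullThroughCache:
    """Toy model of a caching proxy that sits in front of an upstream registry."""

    def __init__(self, fetch_upstream):
        # `fetch_upstream` is a hypothetical callable: image name -> image bytes.
        self._fetch = fetch_upstream
        self._store = {}
        self.upstream_pulls = 0

    def pull(self, image):
        """Serve from the local cache, going upstream only on the first request."""
        if image not in self._store:
            self._store[image] = self._fetch(image)
            self.upstream_pulls += 1
        return self._store[image]
```

However many developers and build machines call `pull` for the same image, the upstream registry sees a single request, and the cached copy stays available even if the upstream image is later removed.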

Best Alternatives and Offerings by JFrog

JFrog Artifactory:

Free tier with 2 GB storage and 10 GB transfer

Commercial tiers for larger organizations

Artifactory is a service for hosting and distributing container images seamlessly. It allows you to control the content and information that goes into a Docker image because it grants you the ability to store all the binaries in a single location – a Docker registry.

Artifactory also provides widespread integration, high availability, high security, and massively scalable storage, and it is updated continuously to support the latest Docker client version and APIs.

JFrog Container Registry:

Self-hosted: Free and unlimited container registry

Cloud SaaS: 2 GB storage and 10 GB transfer for free

BYOL on your cloud: Free on your own infrastructure

JFrog Container Registry comes with all the features to manage Docker images in the environments where they are required. Also, complete compatibility with Docker enables native operation with all the tools the team needs to use.

JFrog Container Registry (JCR) can be used as our single access point to manage all our Docker images. It gives secure, reliable, consistent, and efficient access to remote Docker container registries with integration to our build ecosystem.

We can maintain as many Docker registries as we need, public and private, with fine-grained permission controls that help administrators manage who can access what. It also stores our containers with rich metadata, which provides unique, comprehensive views of the layers in our containers so we can know what is in them.

Building a Sustainable Container Ecosystem

Having a thriving container ecosystem is critical for the success of all companies deploying with cloud-native technologies like Docker and Kubernetes. Docker has done an admirable job of supporting the community with free resources like Docker Hub, but this era is coming to an end as Docker finds it needs to monetize its user base to pay for the large amount of storage and data transfer required to run a free service.

By deploying local repository technologies like JFrog Artifactory and JFrog Container Registry within your enterprise to support build, test, and deployment scenarios, you reduce the load on shared community infrastructure like Docker Hub and on a variety of other free repositories for Maven, npm, Go, Conan, and other ecosystems that are run by the community. This makes it possible for them to focus on the quality and security of the packages rather than only investing in scaling infrastructure.

At the same time, you are also mitigating risk for your organization by having a persistent copy of images used by your teams and infrastructure even if the upstream container images are removed or if the service has throttling or outages that would impact your business. Therefore, deploying an artifact management system should be an essential part of any end-to-end software delivery platform.

