Microservices on Kubernetes

Learn how to get started with microservices on Kubernetes, including automating your environment setup and building a CI/CD pipeline.

Kubernetes is an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications. In this tutorial, you will learn how to get started with microservices on Kubernetes. I will cover the following topics:

- How does Kubernetes help to build scalable microservices?

- Overview of Kubernetes architecture

- Creating a local development environment for Kubernetes using Minikube

- Creating a Kubernetes cluster and deploying your microservices on Kubernetes

- Automating your Kubernetes environment setup

- Setting up a CI/CD pipeline to deploy a containerized application to Kubernetes

Immutable Infrastructure

Containers and Kubernetes have made it possible to run pre-built and configured components as part of every release. Users do not make any manual configuration changes. With every deployment, a new container is deployed.

Self Healing Systems

Kubernetes continuously works to keep the actual state of the cluster in sync with the desired state. It monitors the health of the cluster and replaces failed components, which makes the system self-healing. This is a huge benefit in case of application failures and increases the reliability of the system.
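The desired-versus-actual comparison can be pictured as one step of a control loop. The function below is only a toy sketch in shell: the name `reconcile` and the printed messages are made up for illustration, and the real controllers run inside Kubernetes rather than as scripts.

```shell
# Toy reconciliation step: compare the desired and actual replica counts
# and print the action a controller would take. Illustrative only.
reconcile() {
  desired=$1
  actual=$2
  if [ "$actual" -lt "$desired" ]; then
    echo "start $((desired - actual)) pod(s)"
  elif [ "$actual" -gt "$desired" ]; then
    echo "stop $((actual - desired)) pod(s)"
  else
    echo "in sync"
  fi
}

# Three replicas are wanted but only one is running.
reconcile 3 1
```

Running the same step repeatedly is what makes the system converge: once the counts match, the step reports that nothing needs to change.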

Declarative Configuration

We provide the API server with manifest files (YAML or JSON) describing how we want the Kubernetes cluster to look. These manifests form the basis of the cluster's desired state.
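As a rough illustration of what such a manifest looks like, the sketch below wraps a minimal Deployment in a shell function. The name `hello-web`, the label, the replica count, and the `nginx:1.25` image are placeholders chosen for this example, not values from the tutorial.

```shell
# Print a minimal Deployment manifest to stdout.
# "hello-web" and the nginx image are placeholder values.
deployment_manifest() {
  cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-web
  template:
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
EOF
}

# With a running cluster, the desired state would be submitted like this:
# deployment_manifest | kubectl apply -f -
```

The manifest states only the outcome (three replicas of one container); how to get there is left to Kubernetes.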

Horizontal Autoscaling

One of the cool features of Kubernetes is that it can monitor your workload and scale it up or down based on CPU utilization or memory consumption. This automatic scaling is great for applications that see spikes in load and usage for a period of time. You have two options:

- Vertical Scaling - Increasing the amount of CPU the pods can use

- Horizontal Scaling - Increasing the number of pods

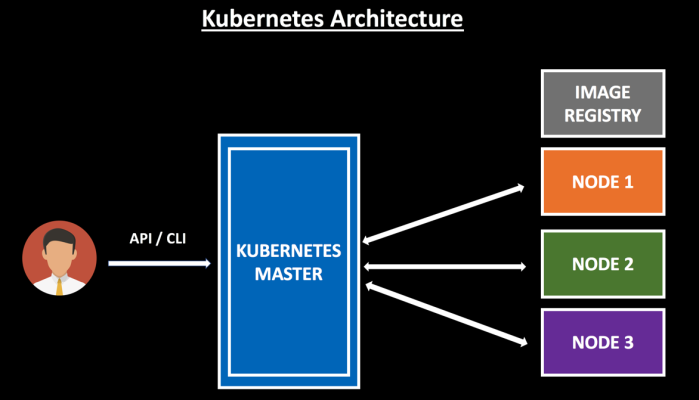
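For horizontal scaling, the Horizontal Pod Autoscaler documentation gives the target replica count as ceil(currentReplicas × currentMetric / targetMetric). The helper below is a small sketch of that arithmetic in shell; the function name `desired_replicas` is an assumption for illustration, and it ignores details such as the autoscaler's tolerance band and min/max limits.

```shell
# desired = ceil(current_replicas * current_metric / target_metric)
# Integer-only arithmetic; metrics are given as whole percentages.
desired_replicas() {
  current_replicas=$1
  current_metric=$2
  target_metric=$3
  echo $(( (current_replicas * current_metric + target_metric - 1) / target_metric ))
}

# 4 pods averaging 90% CPU against a 50% target: prints 8
desired_replicas 4 90 50
```

On a real cluster, an autoscaler for an existing deployment can be created with a command such as `kubectl autoscale deployment hello-minikube --cpu-percent=50 --min=1 --max=10`.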

Kubernetes Architecture

Let us now discuss the Kubernetes architecture and look at the various components of the Master and Worker nodes.

At a high level, K8s (Kubernetes) architecture has two main components: Master and Worker nodes.

The Master is the entry point and is responsible for managing the entire Kubernetes cluster. It takes care of scheduling, provisioning and exposing the API to the client. You can communicate with the K8s master via the K8s CLI or via the API.

K8s objects are created, updated, and deleted declaratively using configuration files that are submitted to the Master. Container images for your application are stored in a registry, which can be either a private registry or Docker Hub.

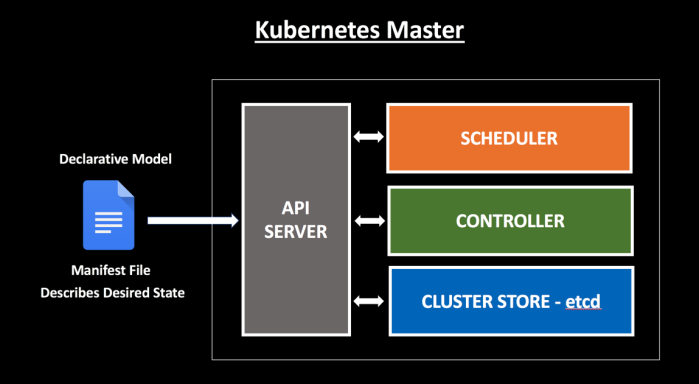

The Master node has four components:

- API Server

- Scheduler

- Controller Manager

- Etcd

API Server

The front end of the K8s control plane that exposes the K8s API and consumes the manifest file provided by the user. It is the gatekeeper for the entire cluster and all the administrative tasks are performed by the API Server.

All user requests pass through the API Server. After a request is processed, the resulting cluster state is stored in the distributed key-value store (Etcd).
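Because every request is an HTTP call against the API Server, it can help to see the REST paths involved. The sketch below builds the path for a few namespaced resources; the function name `api_path` is hypothetical, and only the three resource types shown are handled.

```shell
# Build the REST path the API Server exposes for a namespaced resource.
# Core resources (pods, services) live under /api/v1; Deployments belong
# to the "apps" API group and live under /apis/apps/v1.
api_path() {
  resource=$1
  namespace=${2:-default}
  case "$resource" in
    pods|services) echo "/api/v1/namespaces/$namespace/$resource" ;;
    deployments)   echo "/apis/apps/v1/namespaces/$namespace/$resource" ;;
    *)             echo "unsupported resource: $resource" >&2; return 1 ;;
  esac
}

api_path pods
```

With a running cluster, `kubectl get --raw "$(api_path pods)"` sends that request through the API Server and prints the raw JSON response.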

Etcd

A distributed key-value store that holds the current state of the K8s cluster, along with the configuration details. It is treated as the single source of truth of the K8s cluster.

Worker nodes do all the heavy lifting. They run the applications using pods and are controlled by the Master node. Users connect to the worker nodes to access the applications.

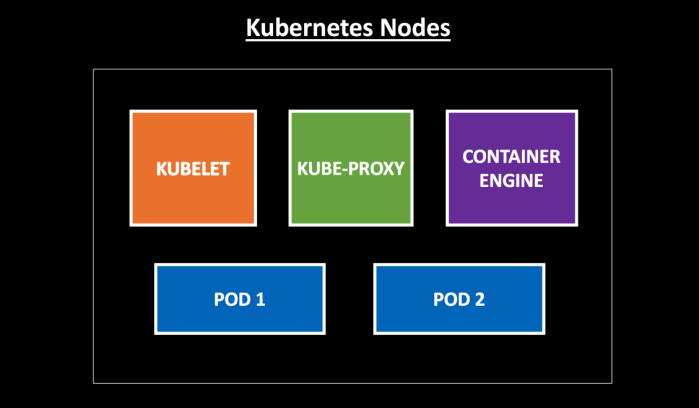

Worker nodes have the following components:

- Kubelet

- Kube-Proxy

- Container Engine

- Pods

Kubelet

- The main Kubernetes agent, which runs on each node.

- Reports the health of the node to the Kubernetes master through the API Server, which persists that state in Etcd.

- Fetches the pod definition from the manifest file and instantiates the pods.

- Manages the pods and reports back to the master.

Pods

The smallest deployable unit that can be created and managed in Kubernetes. Pods are atomic and can also be defined as a group of one or more containers that run in a shared context. It is important to note that each Pod gets a unique IP.

Containers inside a pod can communicate with each other over localhost and share the pod environment, such as its network namespace and any volumes it defines.
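To make the shared context concrete, here is a sketch of a pod with two containers that share one volume. Everything in it is a placeholder for illustration: the pod name `web-with-sidecar`, the container names, the images, and the mount paths.

```shell
# Print a Pod manifest with two containers sharing an emptyDir volume.
# All names and images are placeholder values.
pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar
spec:
  volumes:
    - name: shared-data
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.25
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
    - name: content-writer
      image: busybox:1.36
      command: ["sh", "-c", "while true; do date > /data/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF
}

# With a running cluster: pod_manifest | kubectl apply -f -
```

The second container writes a file that the first one serves, which only works because both mount the same volume and are scheduled together as one unit.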

Creating a Local Development Environment for Kubernetes Using Minikube

I have created a video tutorial showing how to set up a local development environment for K8s using Minikube. I will walk you through how to perform the required installations to create a running K8s cluster locally. We will then deploy an app using the K8s CLI. Once done, we will explore the deployed application and environments.

Minikube is a tool that allows you to run a single-node K8s cluster locally. Minikube starts a VM (or a container, depending on the driver you choose) and runs the necessary K8s components inside it. Once the K8s cluster is up, you can interact with it using the K8s CLI, kubectl.

Kubectl is used for running commands to deploy and manage components against your K8s instance. Minikube also configures kubectl for you.
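Since Minikube configures kubectl for you, a quick sanity check is to confirm which context kubectl is using. The sketch below assumes the default Minikube profile, whose context is named `minikube`; the function name `using_minikube_context` is made up for this example.

```shell
# Succeeds when kubectl's active context is the one Minikube creates.
# Assumes the default profile, whose context is named "minikube".
using_minikube_context() {
  [ "$(kubectl config current-context 2>/dev/null)" = "minikube" ]
}

if using_minikube_context; then
  echo "kubectl is pointed at Minikube"
else
  echo "kubectl is not pointed at Minikube (try: minikube start)"
fi
```

A check like this is handy before running commands that create or delete resources, so that they do not land on a different cluster by accident.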

We will do the required installations and create a running Kubernetes cluster.

We will deploy our app on Kubernetes using the K8s Command Line Interface.

We will explore the deployed application and environments.

The commands used in the demonstration are listed below:

# Install Minikube

brew install minikube

# Verify that Minikube is properly installed

minikube version

# Install the Kubernetes command-line utility, kubectl

brew install kubectl

# Start Minikube

minikube start

# Check Minikube status

minikube status

# View the Kubernetes Dashboard

minikube dashboard

# View Kubernetes cluster information

kubectl cluster-info

# View the nodes that can be used to host your applications

kubectl get nodes

# Create a new deployment

kubectl create deployment hello-minikube --image=k8s.gcr.io/echoserver:1.4

# List the deployments

kubectl get deployments

# Expose the deployment outside the cluster through a NodePort service

kubectl expose deployment hello-minikube --type=NodePort --port=8080

# View the K8s services

kubectl get services

# View the URL that you can hit via browser

minikube service hello-minikube --url

# View the K8s pods in the cluster

kubectl get pods

# View the details of a specific resource

kubectl describe svc hello-minikube

# Delete the service and deployment

kubectl delete service,deployment hello-minikube

# Shut down the Minikube cluster

minikube stop

Automate Your Kubernetes Environment Setup

I have created a script that installs Minikube and kubectl, so that you can run it and have a functional development environment set up for you.

This script is targeted at developers using a Mac, and it relies on Homebrew.

Homebrew is a free package manager for macOS that helps you manage the software on your Mac. You can install the software you need from the command line in no time. There is no need to download DMG files and drag them to your Applications folder. It makes installing and uninstalling software much easier and faster.

The script below installs Homebrew, Minikube, and the Kubernetes command-line interface (kubectl):

# Check for Homebrew and install it if we don't have it

if ! command -v brew >/dev/null 2>&1; then

  echo "Installing Homebrew..."

  /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

fi

# Make sure we are using the latest Homebrew

brew update

# Check for Minikube and install it if we don't have it

if ! command -v minikube >/dev/null 2>&1; then

  echo "Installing Minikube to run a single-node Kubernetes cluster locally..."

  brew install minikube

  echo "Minikube installation complete"

fi

# Check for the Kubernetes command-line utility and install it if we don't have it

if ! command -v kubectl >/dev/null 2>&1; then

  echo "Installing kubectl to manage components in your Kubernetes cluster..."

  brew install kubectl

  echo "kubectl installation complete"

fi

CI/CD Pipeline to Deploy Microservices on Kubernetes

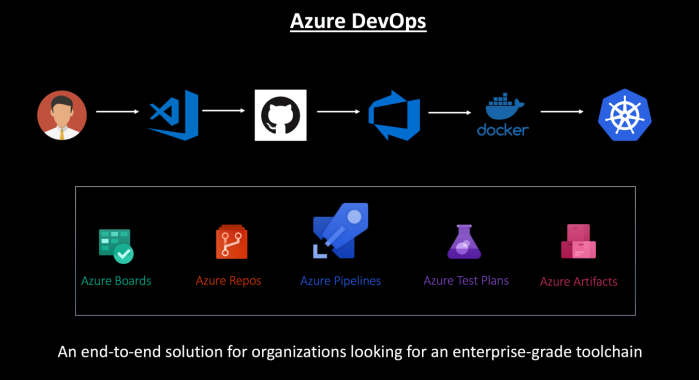

In a microservice ecosystem, containers make it easy for you to build and deploy services. You can orchestrate the deployments of these containers using managed Kubernetes on Azure: Azure Kubernetes Service (AKS), the successor to Azure Container Service.

On September 10th, 2018, Microsoft released Azure DevOps, with which you can set up a continuous build to produce your container images and reliably deploy them to a managed Kubernetes service.

Azure DevOps marks the evolution of Visual Studio Team Services (VSTS). VSTS users are upgraded into Azure DevOps projects automatically. Azure Pipelines lets you continuously build, test, and deploy to any platform (Linux, macOS, Windows) and cloud (Azure, AWS, and Google). At launch, Azure Pipelines offered free CI/CD with unlimited minutes and 10 parallel jobs for every open source project. With cloud-hosted Linux, macOS, and Windows pools, Azure Pipelines is great for all types of projects.

- In the CI/CD pipeline shown above, which leverages Azure DevOps, a developer modifies the code and checks it into GitHub.

- Using Azure DevOps, continuous integration triggers the application build: the container image is built and the unit tests are executed.

- The container image is pushed to Azure Container Registry.

- Continuous deployment triggers the application deployment into managed Kubernetes on Azure (Azure Kubernetes Service, the successor to Azure Container Service).
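The stages listed above can be sketched as plain shell commands. This is not how Azure Pipelines is configured (it uses its own pipeline definitions); it is only a rough picture of what each stage does. The registry `myregistry.azurecr.io`, the app name `orders-service`, the `make test` target, and the assumption that the deployment and its container share the app's name are all hypothetical. By default the sketch only prints the commands instead of running them.

```shell
# Print commands instead of running them unless DRY_RUN is set to 0.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# pipeline APP TAG: build, test, push, deploy. Stops at the first failure.
pipeline() {
  app=$1
  tag=$2
  image="myregistry.azurecr.io/$app:$tag"   # placeholder registry name

  # CI: build the container image
  run docker build -t "$image" . &&
  # CI: run the unit tests (hypothetical make target)
  run make test &&
  # Push the image to the container registry
  run docker push "$image" &&
  # CD: roll the new image out to the existing deployment
  run kubectl set image "deployment/$app" "$app=$image"
}

pipeline orders-service 42
```

Chaining the stages with `&&` mirrors the pipeline's behavior: a failed build or test run prevents the image from being pushed or deployed.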

I hope this tutorial was useful for getting started with Kubernetes on your microservice journey. If you have any questions, please add a comment below and I would be happy to discuss them.

Published at DZone with permission of Samir Behara, DZone MVB. See the original article here.

