Mastering Scalability and Performance: A Deep Dive Into Azure Load Balancing Options

Efficient and scalable load balancing is pivotal for ensuring optimal performance and high availability. Explore an overview of Azure's load-balancing options.

As organizations increasingly migrate their applications to the cloud, efficient and scalable load balancing becomes pivotal for ensuring optimal performance and high availability. This article provides an overview of Azure's load balancing options, encompassing Azure Load Balancer, Azure Application Gateway, Azure Front Door Service, and Azure Traffic Manager. Each of these services addresses specific use cases, offering diverse functionalities to meet the demands of modern applications. Understanding the strengths and applications of these load-balancing services is crucial for architects and administrators seeking to design resilient and responsive solutions in the Azure cloud environment.

What Is Load Balancing?

Load balancing is a critical component in cloud architectures for various reasons. Firstly, it ensures optimized resource utilization by evenly distributing workloads across multiple servers or resources, preventing any single server from becoming a performance bottleneck. Secondly, load balancing facilitates scalability in cloud environments, allowing resources to be scaled based on demand by evenly distributing incoming traffic among available resources. Additionally, load balancers enhance high availability and reliability by redirecting traffic to healthy servers in the event of a server failure, minimizing downtime, and ensuring accessibility.

From a security perspective, load balancers can terminate SSL/TLS, shield backend servers from direct exposure to the internet, and help mitigate DDoS attacks. Layer 7 offerings add threat detection and protection through a Web Application Firewall.

Furthermore, efficient load balancing promotes cost efficiency by optimizing resource allocation, reducing the need to provision excess server capacity for peak loads. Finally, dynamic traffic management across regions or geographic locations allows load balancers to adapt to changing traffic patterns, intelligently distributing traffic during high-demand periods and allowing resources to be scaled down during low-demand periods, leading to overall cost savings.

Overview of Azure’s Load Balancing Options

Azure Load Balancer: Unleashing Layer 4 Power

Azure Load Balancer is a Layer 4 (TCP, UDP) load balancer that distributes incoming network traffic across multiple virtual machines or virtual machine scale sets so that no single server is overwhelmed. There are two options: a public load balancer, used primarily for internet-facing traffic and also able to provide outbound connectivity, and an internal (private) load balancer, which balances traffic within a virtual network. By default, the load balancer maps each flow to a backend instance using a hash of the five-tuple (source IP, source port, destination IP, destination port, protocol).
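To make the five-tuple idea concrete, here is a minimal Python sketch of hash-based flow distribution. It is illustrative only: the names `FiveTuple` and `pick_backend` are made up for this example, and SHA-256 is an arbitrary stand-in, since the hash Azure uses internally is not described in this article.

```python
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    """The five fields that identify a flow."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def pick_backend(flow: FiveTuple, backends: Sequence[str]) -> str:
    """Map a flow to a backend by hashing its five-tuple.

    Assumption: SHA-256 is used here only for illustration; it is not
    the hash function the Azure service uses.
    """
    if not backends:
        raise ValueError("backend pool is empty")
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    # Reduce the first 8 bytes of the digest to an index into the pool.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


flow = FiveTuple("198.51.100.7", 50123, "203.0.113.10", 443, "TCP")
print(pick_backend(flow, ["vm-0", "vm-1", "vm-2"]))
```

Because the source port is part of the key, a new connection from the same client can land on a different backend, which is why session persistence is a separate setting.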

Features

- High availability and redundancy: Azure Load Balancer efficiently distributes incoming traffic across multiple virtual machines or instances in a web application deployment, ensuring high availability, redundancy, and even distribution, thereby preventing any single server from becoming a bottleneck. In the event of a server failure, the load balancer redirects traffic to healthy servers.

- Provide outbound connectivity: The frontend IPs of a public load balancer can be used to provide outbound connectivity to the internet for backend servers and VMs. This configuration uses source network address translation (SNAT) to translate the virtual machine's private IP into the load balancer's public IP address, thus preventing outside sources from having a direct address to the backend instances.

- Internal load balancing: Distribute traffic across internal servers within a Virtual Network (VNet) so that each service receives an appropriate share of the traffic.

- Cross-region load balancing: Azure Load Balancer facilitates the distribution of traffic among virtual machines deployed in different Azure regions, optimizing performance and ensuring low-latency access for users of global applications or services with a user base spanning multiple geographic regions.

- Health probing and failover: Azure Load Balancer monitors the health of backend instances continuously, automatically redirecting traffic away from unhealthy instances, such as those experiencing application errors or server failures, to ensure seamless failover.

- Port-level load balancing: For services running on different ports within the same server, Azure Load Balancer can distribute traffic based on the specified port numbers. This is useful for applications with multiple services running on the same set of servers.

- Multiple front ends: Azure Load Balancer allows you to load balance services on multiple ports, multiple IP addresses, or both. You can use a public or internal load balancer to load balance traffic across a set of services like virtual machine scale sets or virtual machines (VMs).

- High Availability (HA) ports: An HA ports rule on an internal Standard Load Balancer load balances all TCP and UDP flows on all ports at once, so a single rule can front a pool of network virtual appliances or other services that listen on many ports. Combined with health probes, this removes a single point of failure without requiring one rule per port.

Configuration and Optimization Strategies

- Define a well-organized backend pool, incorporating healthy and properly configured virtual machines (VMs) or instances, and consider leveraging availability sets or availability zones to enhance fault tolerance and availability.

- Define load balancing rules to specify how incoming traffic should be distributed. Consider factors such as protocol, port, and backend pool association.

- Use session persistence settings when necessary to ensure that requests from the same client are directed to the same backend instance.

- Configure health probes to regularly check the status of backend instances. Adjust probe settings, such as probing intervals and thresholds, based on the application's characteristics.

- Choose between the Standard SKU and the Basic SKU based on the feature set required for your application. Implement frontend IP configurations to define how the load balancer should handle incoming network traffic.

- Implement Azure Monitor to collect and analyze telemetry data, set up alerts based on performance thresholds for proactive issue resolution, and enable diagnostics logging to capture detailed information about the load balancer's operations.

- Adjust the idle timeout settings to optimize the connection timeout for your application. This is especially important for applications with long-lived connections.

- Enable accelerated networking on virtual machines to take advantage of high-performance networking features, which can enhance the overall efficiency of the load-balanced application.
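The interplay between probe results and thresholds mentioned above can be sketched in a few lines of Python. This is a simplified model, not the service's actual algorithm: `ProbeState`, `unhealthy_threshold`, and the rule that a single success restores an instance are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProbeState:
    """Tracks probe results for one backend instance (hypothetical model)."""
    unhealthy_threshold: int = 2  # assumed: consecutive failures before marking down
    healthy: bool = True
    consecutive_failures: int = 0

    def record(self, probe_succeeded: bool) -> bool:
        """Record one probe result and return the resulting health state."""
        if probe_succeeded:
            # Simplification: one success immediately restores the instance.
            self.consecutive_failures = 0
            self.healthy = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy


def healthy_backends(states: dict[str, ProbeState]) -> list[str]:
    """Return the names of instances that should still receive new flows."""
    return [name for name, state in states.items() if state.healthy]
```

A higher threshold tolerates transient failures at the cost of slower failover; a lower one reacts faster but may eject instances during brief hiccups.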

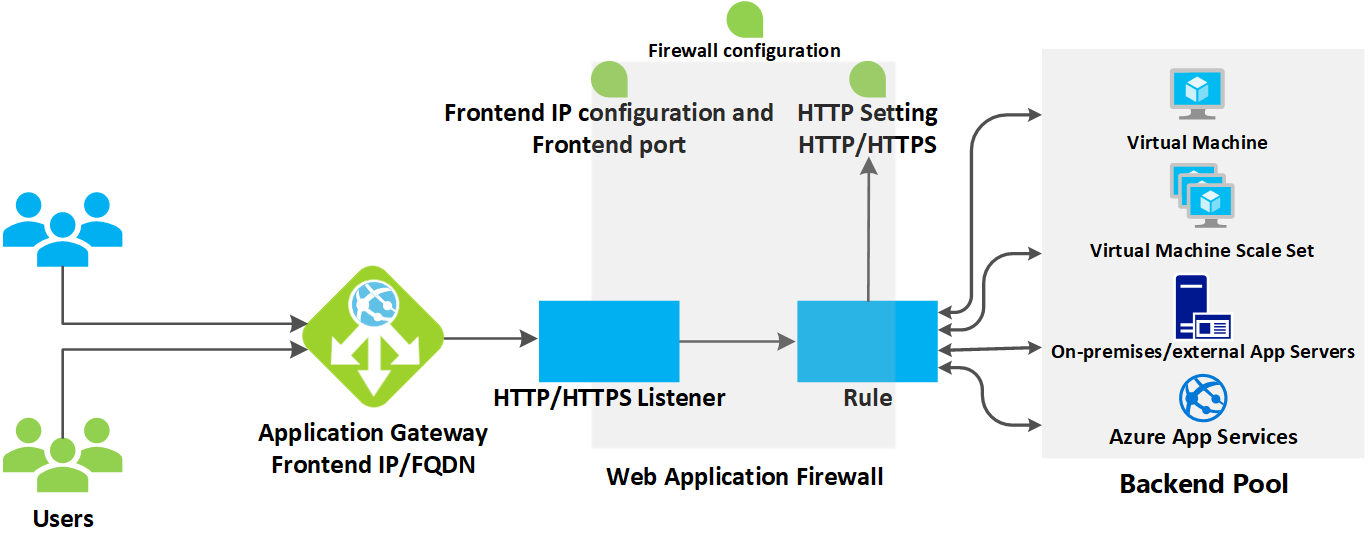

Azure Application Gateway: Elevating To Layer 7

Azure Application Gateway is a Layer 7 load balancer that provides advanced traffic distribution and web application firewall (WAF) capabilities for web applications.

Features

- Web application routing: Azure Application Gateway allows for the routing of requests to different backend pools based on specific URL paths or host headers. This is beneficial for hosting multiple applications on the same set of servers.

- SSL termination and offloading: Improve the performance of backend servers by transferring the resource-intensive task of SSL decryption to the Application Gateway and relieving backend servers of the decryption workload.

- Session affinity: For applications that rely on session state, Azure Application Gateway supports session affinity, ensuring that subsequent requests from a client are directed to the same backend server for a consistent user experience.

- Web Application Firewall (WAF): Implement a robust security layer by integrating the Azure Web Application Firewall with the Application Gateway. This helps safeguard applications from threats such as SQL injection, cross-site scripting (XSS), and other OWASP Top Ten vulnerabilities. You can define your own WAF custom firewall rules as well.

- Auto-scaling: Application Gateway can automatically scale the number of instances to handle increased traffic and scale down during periods of lower demand, optimizing resource utilization.

- Rewriting HTTP headers: Modify HTTP headers for requests and responses, as adjusting these headers is essential for reasons including adding security measures, altering caching behavior, or tailoring responses to meet client-specific requirements.

- Ingress Controller for AKS: The Application Gateway Ingress Controller (AGIC) enables the utilization of Application Gateway as the ingress for an Azure Kubernetes Service (AKS) cluster.

- WebSocket and HTTP/2 traffic: Application Gateway provides native support for the WebSocket and HTTP/2 protocols.

- Connection draining: This feature allows backend pool members to be removed gracefully during planned service updates or when backend health issues occur. Existing connections are allowed to complete while no new requests are sent to the member being removed, which avoids disrupting users during the transition.
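As a rough illustration of the host- and path-based routing described in the feature list, the Python sketch below picks a backend pool from a small rule table. The hostnames, pool names, and the longest-prefix tie-break are assumptions for this example and do not reflect how Application Gateway evaluates its own rules.

```python
from typing import Optional

# Hypothetical rules: (host, path prefix, backend pool name)
RULES = [
    ("shop.example.com", "/images/", "image-pool"),
    ("shop.example.com", "/api/", "api-pool"),
    ("shop.example.com", "/", "web-pool"),
]


def route(host: str, path: str, rules=RULES) -> Optional[str]:
    """Return the pool whose rule matches the host and the longest path prefix."""
    matches = [
        (prefix, pool)
        for rule_host, prefix, pool in rules
        if rule_host == host.lower() and path.startswith(prefix)
    ]
    if not matches:
        return None  # no rule for this host
    # Assumed tie-break: the most specific (longest) prefix wins.
    return max(matches, key=lambda match: len(match[0]))[1]
```

With these rules, a request for `/api/orders` on `shop.example.com` resolves to `api-pool`, while `/index.html` falls through to `web-pool`.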

Configuration and Optimization Strategies

- Deploy the instances in a zone-aware configuration, where available.

- Use Application Gateway with Web Application Firewall (WAF) within a virtual network to protect inbound HTTP/S traffic from the Internet.

- Review the impact of the interval and threshold settings on health probes. Probing more frequently (a shorter interval) puts a higher load on your service. Each Application Gateway instance sends its own health probes, so 100 instances probing every 30 seconds means 100 requests per 30 seconds.

- Use Application Gateway for TLS termination. This improves backend server utilization because the servers don't have to perform TLS processing, and it simplifies certificate management because the certificate only needs to be installed on Application Gateway.

- When WAF is enabled, every request gets buffered until it fully arrives, and then it gets validated against the ruleset. For large file uploads or large requests, this can result in significant latency. The recommendation is to enable WAF with proper testing and validation.

- Having appropriate DNS and certificate management for backend pools is crucial for improved performance.

- Application Gateway is not billed while it is in a stopped state, so stop it in dev/test environments when it is not in use.

- Take advantage of autoscaling for both performance and cost: set the scale-in and scale-out instance limits based on the workload so that you don't pay for idle capacity.

- Use Azure Monitor Network Insights to get a comprehensive view of health and metrics, crucial in troubleshooting issues.
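The health probe arithmetic in the list above (every gateway instance probes independently) can be checked with a small helper. The function name is invented for this sketch, and it counts only the probe traffic reaching a single backend.

```python
def probe_requests_per_second(instances: int, interval_seconds: float) -> float:
    """Probe load on one backend when every gateway instance probes it
    once per interval."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return instances / interval_seconds


# 100 instances probing every 30 seconds: 100 requests per 30 s.
print(round(probe_requests_per_second(100, 30), 2))
# Shortening the interval to 10 seconds triples that load.
print(probe_requests_per_second(100, 10))
```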

Azure Front Door Service: Global-Scale Entry Management

Azure Front Door is a comprehensive content delivery network (CDN) and global application accelerator service that covers a range of use cases to enhance the performance, security, and availability of web applications. Azure Front Door supports four traffic routing methods (latency, priority, weighted, and session affinity) that determine how your HTTP/HTTPS traffic is distributed between different origins.
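Two of these routing methods, priority and weighted, combine naturally and can be sketched as follows. This is a simplified model under stated assumptions: the `Origin` class and `choose_origin` function are invented for the example, a lower priority value is treated as more preferred, and latency-based selection and session affinity are left out.

```python
import random
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Origin:
    name: str
    priority: int  # assumed: lower value = more preferred
    weight: int    # relative share of traffic within a priority tier
    healthy: bool = True


def choose_origin(origins: Sequence[Origin],
                  rng: Optional[random.Random] = None) -> Origin:
    """Pick a healthy origin: best priority tier first, then weighted random."""
    rng = rng or random.Random()
    candidates = [origin for origin in origins if origin.healthy]
    if not candidates:
        raise RuntimeError("no healthy origins")
    best = min(origin.priority for origin in candidates)
    tier = [origin for origin in candidates if origin.priority == best]
    return rng.choices(tier, weights=[origin.weight for origin in tier], k=1)[0]
```

If every origin in the preferred tier is unhealthy, the selection falls through to the next priority value, which is the failover behavior that priority routing is meant to provide.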

Features

- Global content delivery and acceleration: Azure Front Door leverages a global network of edge locations, employing caching mechanisms, compressing data, and utilizing smart routing algorithms to deliver content closer to end-users, thereby reducing latency and enhancing overall responsiveness for an improved user experience.

- Web Application Firewall (WAF): Azure Front Door integrates with Azure Web Application Firewall, providing a robust security layer to safeguard applications from common web vulnerabilities, such as SQL injection and cross-site scripting (XSS).

- Geo filtering: In Azure Front Door WAF you can define a policy by using custom access rules for a specific path on your endpoint to allow or block access from specified countries or regions.

- Caching: In Azure Front Door, caching plays a pivotal role in optimizing content delivery and enhancing overall performance. By storing frequently requested content at edge locations closer to end users, Azure Front Door reduces latency, accelerates the delivery of web applications, and conserves resources across the content delivery network by reducing requests to the origin.

- Web application routing: Azure Front Door supports path-based routing, URL redirect/rewrite, and rule sets. These help to intelligently direct user requests to the most suitable backend based on various factors such as geographic location, health of backend servers, and application-defined routing rules.

- Custom domain and SSL support: Front Door supports custom domain configurations, allowing organizations to use their own domain names and SSL certificates for secure and branded application access.
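The geo-filtering idea from the feature list can be pictured as a per-path allow list of country codes. The Python sketch below is a hypothetical model, not the WAF policy format: `is_allowed`, the path prefixes, and the default of allowing unlisted paths are assumptions for illustration.

```python
def is_allowed(path: str, country_code: str,
               allow_lists: dict[str, frozenset[str]]) -> bool:
    """Allow a request under a restricted path only from listed countries.

    Assumption: paths that match no prefix are unrestricted.
    """
    for prefix, allowed_countries in allow_lists.items():
        if path.startswith(prefix):
            return country_code.upper() in allowed_countries
    return True


# Hypothetical policy: only US and CA clients may reach /checkout/.
policy = {"/checkout/": frozenset({"US", "CA"})}
print(is_allowed("/checkout/cart", "us", policy))
print(is_allowed("/checkout/cart", "DE", policy))
```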

Configuration and Optimization Strategies

- Use WAF policies to provide global protection across Azure regions for inbound HTTP/S connections to a landing zone.

- Create a rule to block access to the health endpoint from the internet.

- Ensure that the connection to the back end is re-encrypted, as Front Door does not support SSL passthrough.

- Consider using geo-filtering in Azure Front Door.

- Avoid combining Traffic Manager and Front Door as they are used for different use cases.

- Configure logs and metrics in Azure Front Door and enable WAF logs for debugging issues.

- Leverage managed TLS certificates to streamline the costs and renewal process associated with certificates. Azure Front Door service issues and rotates these managed certificates, ensuring a seamless and automated approach to certificate management, thereby enhancing security while minimizing operational overhead.

- Use the same domain name on Front Door and your origin to avoid any issues related to request cookies or URL redirections.

- Disable health probes when there's only one origin in an origin group, since there is nowhere to fail over to. When probes are enabled, point them at a webpage or location that you specifically designed for health monitoring.

- Regularly monitor and adjust the instance count and scaling settings to align with actual demand, preventing overprovisioning and optimizing costs.

Azure Traffic Manager: DNS-Based Traffic Distribution

Azure Traffic Manager is a global DNS-based traffic load balancer that enhances the availability and performance of applications by directing user traffic to the most optimal endpoint.

Features

- Global load balancing: Distribute user traffic across multiple global endpoints to enhance application responsiveness and fault tolerance.

- Fault tolerance and high availability: Ensure continuous availability of applications by automatically rerouting traffic to healthy endpoints in the event of failures.

- Routing: Traffic Manager supports several routing methods. Performance routing directs traffic to the endpoint with the lowest latency, geographic routing selects an endpoint based on the end user's location, and priority and weighted routing are also available.

- Endpoint monitoring: Regularly check the health of endpoints using configurable health probes, ensuring traffic is directed only to operational and healthy endpoints.

- Service maintenance: You can have planned maintenance done on your applications without downtime. Traffic Manager can direct traffic to alternative endpoints while the maintenance is in progress.

- Subnet traffic routing: Define custom routing policies based on IP address ranges, providing flexibility in directing traffic according to specific network configurations.
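Subnet-based routing, the last feature in the list above, amounts to mapping source address ranges to endpoints. Here is a minimal sketch using Python's standard `ipaddress` module; the subnets, endpoint names, and the `endpoint_for` helper are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical mapping of source ranges to endpoint names.
SUBNET_MAP = [
    (ipaddress.ip_network("10.0.0.0/8"), "internal-endpoint"),
    (ipaddress.ip_network("203.0.113.0/24"), "partner-endpoint"),
]


def endpoint_for(client_ip: str,
                 default: Optional[str] = "default-endpoint") -> Optional[str]:
    """Return the endpoint mapped to the first subnet containing client_ip."""
    address = ipaddress.ip_address(client_ip)
    for network, endpoint in SUBNET_MAP:
        if address in network:
            return endpoint
    return default  # no range matched


print(endpoint_for("10.1.2.3"))
print(endpoint_for("198.51.100.9"))
```

Keep in mind that with DNS-based routing the address being matched is typically the one seen by the DNS service, which may be the client's resolver rather than the client itself.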

Configuration and Optimization Strategies

- Enable automatic failover to healthy endpoints in case of endpoint failures, ensuring continuous availability and minimizing disruptions.

- Utilize appropriate traffic routing methods, such as Priority, Weighted, Performance, Geographic, and Multi-value, to tailor traffic distribution based on specific application requirements.

- Implement a custom page to use as a health check for your Traffic Manager.

- Clients and resolvers cache DNS responses for the record's Time to Live (TTL), so a long TTL delays failover. If failover is too slow, consider lowering the DNS record TTL or adjusting the health probe timing.

- Consider nested Traffic Manager profiles. Nested profiles allow you to override the default Traffic Manager behavior to support larger, more complex application deployments.

- Integrate with Azure Monitor for real-time monitoring and logging, gaining insights into the performance and health of Traffic Manager and endpoints.
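To see why the TTL and probe timing in the list above matter together, here is a back-of-the-envelope estimate in Python. The model is an assumption made for illustration: detection is taken to need one more failed probe than the tolerated number of failures, and clients are assumed to keep using a cached DNS answer for up to one full TTL. Probe timeouts and resolver behavior are ignored.

```python
def estimated_failover_seconds(probe_interval_s: float,
                               tolerated_failures: int,
                               dns_ttl_s: float) -> float:
    """Rough worst-case time before clients reach a healthy endpoint.

    Assumed model: detection time plus one full DNS TTL.
    """
    detection = probe_interval_s * (tolerated_failures + 1)
    return detection + dns_ttl_s


# 30 s probes, 3 tolerated failures, 60 s TTL.
print(estimated_failover_seconds(30, 3, 60))
# 10 s probes, 0 tolerated failures, 5 s TTL.
print(estimated_failover_seconds(10, 0, 5))
```

Shorter intervals and TTLs speed up failover but increase probe traffic and DNS query volume, so the right values depend on the application.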

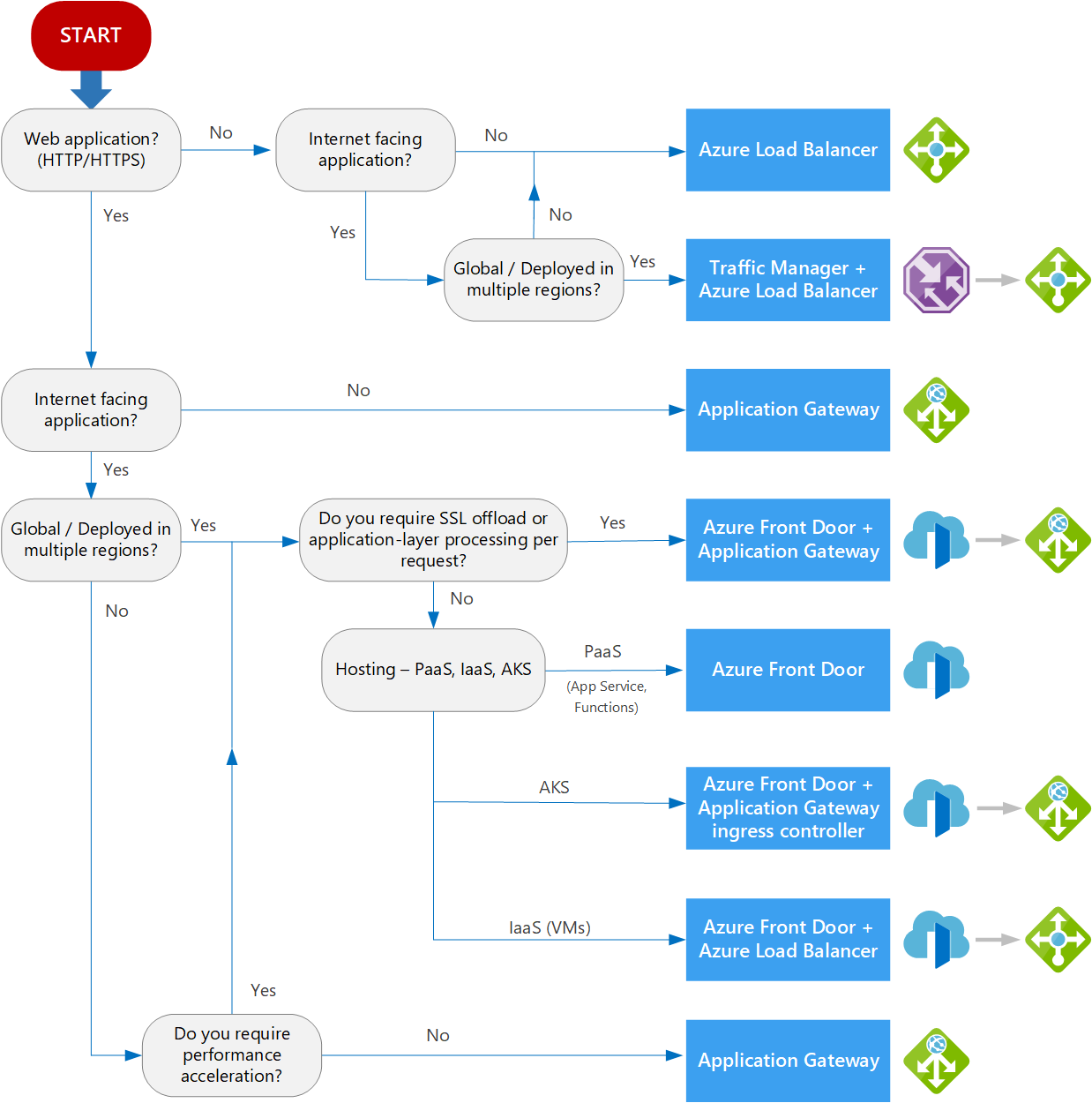

How To Choose

When selecting a load balancing option in Azure, it is crucial to first understand the specific requirements of your application, including whether it necessitates layer 4 or layer 7 load balancing, SSL termination, and web application firewall capabilities. For applications requiring global distribution, options like Azure Traffic Manager or Azure Front Door are worth considering to efficiently achieve global load balancing. Additionally, it's essential to evaluate the advanced features provided by each load balancing option, such as SSL termination, URL-based routing, and application acceleration. Scalability and performance considerations should also be taken into account, as different load balancing options may vary in terms of throughput, latency, and scaling capabilities. Cost is a key factor, and it's important to compare pricing models to align with budget constraints. Lastly, assess how well the chosen load balancing option integrates with other Azure services and tools within your overall application architecture. This comprehensive approach ensures that the selected load balancing solution aligns with the unique needs and constraints of your application.

| Service | Global/Regional | Recommended traffic |
|---|---|---|
| Azure Front Door | Global | HTTP(S) |
| Azure Traffic Manager | Global | Non-HTTP(S) and HTTPS |
| Azure Application Gateway | Regional | HTTP(S) |
| Azure Load Balancer | Regional or Global | Non-HTTP(S) and HTTPS |
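The table can also be read as a small lookup. The Python sketch below encodes it as data and returns the services that fit a given scope and traffic type. It assumes that "Non-HTTP(S) and HTTPS" means the service handles both kinds of traffic, and it only produces a shortlist; cost, features, and integration still need to be weighed separately.

```python
# Each entry: (service, supported scopes, supported traffic kinds),
# transcribed from the table under the assumption stated above.
SERVICES = [
    ("Azure Front Door", {"global"}, {"http"}),
    ("Azure Traffic Manager", {"global"}, {"http", "non-http"}),
    ("Azure Application Gateway", {"regional"}, {"http"}),
    ("Azure Load Balancer", {"regional", "global"}, {"http", "non-http"}),
]


def candidates(scope: str, traffic: str) -> list[str]:
    """Return services matching scope ('global' or 'regional')
    and traffic ('http' or 'non-http')."""
    if scope not in {"global", "regional"}:
        raise ValueError("scope must be 'global' or 'regional'")
    if traffic not in {"http", "non-http"}:
        raise ValueError("traffic must be 'http' or 'non-http'")
    return [
        name
        for name, scopes, kinds in SERVICES
        if scope in scopes and traffic in kinds
    ]


print(candidates("regional", "non-http"))
```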

Here is the decision tree for load balancing from Azure.

Source: Azure
