Managing Software Engineering Teams of Artificial Intelligence Developers

Learn the importance of focusing on continuous learning by observing the latest AI development best practices and integrating them with the engineering team.

Regardless of industry, nearly every organization has an AI solution, is working on AI integration, or has one on its roadmap. While developers are being trained in the various technological skills needed for development, senior leadership must focus on strategies to integrate and align these efforts with the broader organization. In this article, let's take a step back to examine the entire AI product landscape. We will identify the areas where the organization can add significant customer value, develop the necessary skills among developers, utilize modern AI development tools, and structure teams for greater efficiency.

Generative AI Product Landscape

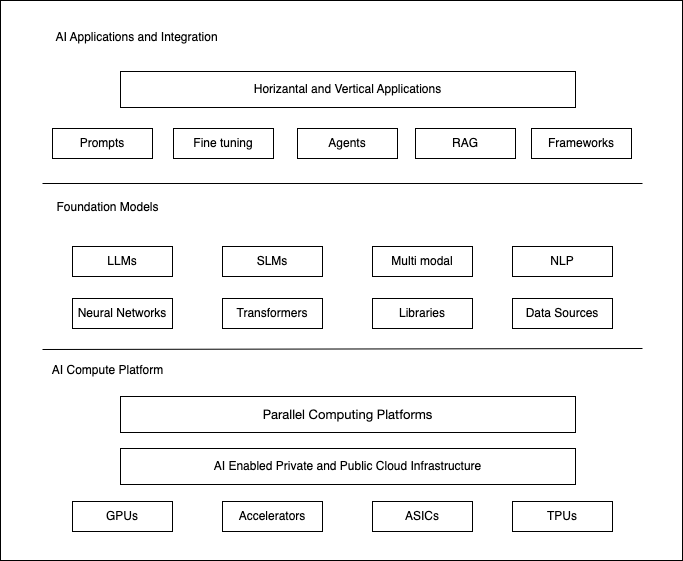

The diagram illustrates how the Generative AI development landscape can be broadly divided into three layers.

The AI compute platform layer has seen an exponential increase in computational needs, leading to the development of AI accelerators, specialized chips designed to speed up AI workloads. Initially created for graphics-related tasks, Graphics Processing Units (GPUs) have become essential in meeting the substantial parallel computation requirements for building AI models. Additionally, numerous specialized AI accelerators built as Application-Specific Integrated Circuits (ASICs), such as Tensor Processing Units (TPUs), Language Processing Units (LPUs), and Neural Processing Units (NPUs), have significantly transformed training and inference workloads. On top of the physical layer, public and private cloud infrastructure virtualizes the hardware and provides abstractions for various computational needs. Parallel computing platforms like CUDA and OpenCL are essential for effectively utilizing physical and virtual hardware. Together, these form the foundational infrastructure required to build Generative AI models.

Foundational models are at the core of Generative AI and are based on neural networks that use the transformer architecture. Libraries such as PyTorch and TensorFlow are primarily used for training and inference of neural networks, including for computer vision and natural language processing. These foundational models are trained on large datasets using semi-supervised and self-supervised learning to capture the statistical relationships between words and phrases. Additionally, large language models (LLMs) are evolving to become multi-modal, enabling them to address various application needs.
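To make the idea of self-supervised learning concrete, here is a minimal sketch that learns which word tends to follow another from raw text alone. It is a toy bigram counter, not a transformer, and the corpus and the function names `train_bigram_model` and `predict_next` are hypothetical, chosen only for illustration. The point it shows is that the training targets (the next words) come from the text itself, with no human labeling.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word follows another.

    The targets (next words) are taken from the text itself, which is
    the sense in which this training is self-supervised.
    """
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, for illustration only.
corpus = [
    "the model predicts the next word",
    "the model learns from text",
    "the team trains the model",
]
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints "model"
```

Real foundational models replace the frequency table with a neural network and train on vastly larger datasets, but the training signal is derived from the data in the same spirit.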

AI applications build on foundational models through an abstraction layer. This layer extracts valuable, customized information using prompts, frameworks, retrieval-augmented generation (RAG), agents, or fine-tuning. Both generic horizontal and specialized vertical applications can use these models and their abstraction layers to create highly intelligent applications.
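As a rough illustration of what a retrieval-augmented generation layer does, the sketch below picks the document most similar to a question and places it in a prompt. It is a simplified stand-in under stated assumptions: production systems typically use learned embeddings and a vector database rather than word counts, and the documents, prompt wording, and function names here are hypothetical. The call to an actual model is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    shared = set(a) & set(b)
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Assemble a prompt that grounds the question in retrieved context."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base, for illustration only.
documents = [
    "Refunds are processed within five business days.",
    "Support is available on weekdays from 9 to 5.",
    "Premium plans include priority support.",
]
prompt = build_prompt("How long do refunds take?", documents)
print(prompt)
```

The resulting prompt would then be sent to a foundational model. Keeping retrieval and prompt assembly in separate functions is one way to let the application swap in a different retriever without touching the rest of the code.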

The entire ecosystem supports each layer and fosters overall innovation.

Customer Value

Recognize the business environment in which your organization generates customer value. Each layer of the AI technology stack provides value to different customer segments. Only a few large companies, such as Nvidia, Google, Amazon, and Microsoft, operate across all three layers of the technology stack.

Customer value can typically be categorized as either utilitarian or hedonic. Utilitarian value is the functional value of a product or service, such as its usefulness, quality, and value for money. Utilitarian products are often practical, effective, and necessary, while hedonic products provide fun, playfulness, and enjoyment. Hedonic products are often exciting, delightful, and thrilling, and they generate more intense emotional responses than utilitarian products.

Investing in AI alone will not guarantee success for the company. Avoid making investment decisions solely based on the Fear of Missing Out (FOMO). For the business to thrive in the long run, it must focus on value creation through AI integration. Follow standard processes and conduct thorough due diligence to identify where AI can effectively drive value for your product.

Collaborate closely with the product, business, and engineering teams to define the scope of work and develop a strategic vision that ensures alignment within the team. It is also crucial to achieve stakeholder alignment, especially given the complexity of the projects, while setting realistic expectations.

Developer Roles and Skills

Each of the layers mentioned above requires developers with specialized skill sets. The landscape changes quickly, with new tools and services announced almost daily. As an engineering leader, invest in the right skills required for the project and empower the team to make the best decisions. Build strong expertise in the teams and provide learning opportunities by allowing them to attend learning sessions, conferences, hackathons, and similar events.

This is not an exhaustive list, but a glimpse of different roles and necessary skill sets.

- AI compute platform:

  - IC Design Engineer: Design and verify GPUs or ASICs.

  - AI Cloud Platform Engineer: Build infrastructure, tools, and platforms for AI in hyperscalers.

- Foundational models:

  - AI Trainer, Data Annotator: Review and validate learning, especially in semi-supervised scenarios.

  - AI/Machine Learning Engineer: Develop, train, and optimize foundational models.

  - AI Researcher, Research Scientist: Design transformer neural network algorithms.

  - Robotics Engineer: Assemble physical parts and connect them with software and sensors.

- AI applications:

  - Prompt Engineer: Develop AI integrations using coding assistants and AI developer tools.

  - No-code and low-code developers: Build solutions using AI services.

  - AI Software Developer, AI Agent Developer: Build or integrate agent frameworks, vector databases, or RAG.

  - AI Engineer: Fine-tune foundational models based on organization requirements.

Development Tools

Generative AI can deliver human-like responses, making AI assistants a revolutionary application. Specialized versions of these assistants have evolved into coding assistants and tools for developers.

AI services like Flowise and AutoGen offer no-code and low-code ways to build AI solutions and can cover much of the development cycle.

GitHub Copilot, Codium, Tabnine, Claude.ai, Cursor, Visual Studio Code, Amazon CodeWhisperer, Cody, and JetBrains AI are among the most popular development tools. Many of them integrate with the IDE and help with various phases of the engineering development cycle: designing UI, developing code, auto-completing and summarizing code, debugging and fixing code, generating tests, and deploying and monitoring the solution.

Depending on the cloud computing platform used, Amazon SageMaker, Google Cloud Vertex AI, and Microsoft Azure Machine Learning provide service integrations for the entire machine learning lifecycle.

Engineering leadership should analyze and invest in the right developer tools for the team to enhance developers' productivity.

Organization Structure

After successfully developing an AI prototype, leadership often faces the challenge of integrating AI development into the core engineering teams. Whether the team is building an AI solution or integrating AI, engineering management must make the right decision.

When a team is developing an AI solution, it is essential to have a dedicated group of engineers with specific roles and skill sets. This process typically requires extensive research and development in AI, and these engineers should be integrated as part of the core engineering team.

The team building an AI integration will likely develop an AI application and an integration layer. They typically have an existing application into which they plan to incorporate AI model responses. In this scenario, a subsystem team should focus on creating the Retrieval-Augmented Generation (RAG) and agent subsystems, which will be integrated into the application. This subsystem team can be part of either the core engineering team or the platform engineering team.

Platform engineering should lead in developing reusable GenAI components by creating AI developer platforms for infrastructure, tools, libraries, and more.

Teams should ideally be structured with 6 to 8 developers who are co-located or available within similar time zones for effective communication and collaboration.

Regardless of team structure, Agile development practices offer the best approach for an AI development team to adopt an iterative, introspective, and adaptive methodology.

Final Thoughts

The Artificial Intelligence domain is still fairly early in its development. We will see many more applications and integrations that were previously unimaginable. Beyond understanding the product landscape, identifying the right customer value, organizing the team for efficiency, and investing in developer skills and tools, leadership must focus on continuous learning by observing the latest AI development best practices and integrating them with the engineering team.

Opinions expressed by DZone contributors are their own.
