Manage Redis on AWS From Kubernetes

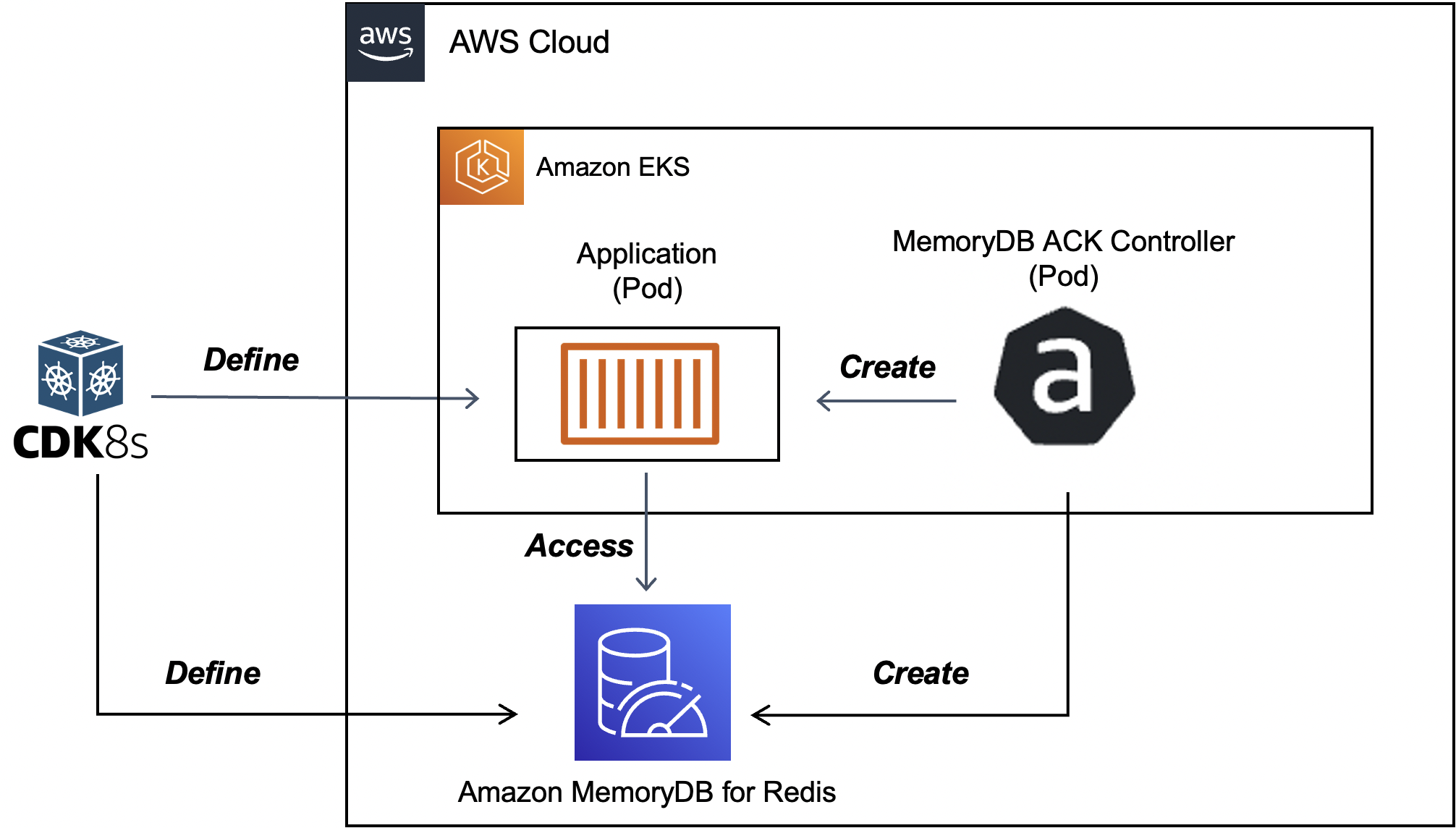

In this blog post, you will learn how to use ACK with Amazon EKS for creating a Redis cluster on AWS (with Amazon MemoryDB).


AWS Controllers for Kubernetes (also known as ACK) leverages Kubernetes Custom Resources and Custom Resource Definitions (CRDs), allowing you to manage and use AWS services directly from Kubernetes without needing to define resources outside of the cluster. It supports many AWS services, including Amazon S3, DynamoDB, and MemoryDB.

Normally, you would define custom resources in ACK using YAML. However, in this case, we will leverage cdk8s (Cloud Development Kit for Kubernetes), an open-source framework (part of CNCF) that allows you to define your Kubernetes applications using regular programming languages instead of YAML. Thanks to cdk8s support for Kubernetes Custom Resource Definitions, we will import the MemoryDB ACK CRDs as APIs and then define a cluster using code (I will be using Go for this).

That's not all! In addition to the infrastructure, we will also take care of the application that connects to the MemoryDB cluster. To do this, we will use the cdk8s-plus library to define a Kubernetes Deployment (and a Service to expose it), thereby building an end-to-end solution. In the process, you will learn about some of the other nuances of ACK, such as FieldExport.

I have written a few blog posts around cdk8s and Go that you may find useful.

Prerequisites

To follow along step-by-step, in addition to an AWS account, you will need the AWS CLI, cdk8s CLI, kubectl, Helm, Docker, and the Go programming language installed.

There are a variety of ways in which you can create an Amazon EKS cluster. I prefer using eksctl CLI because of the convenience it offers!

Set up the MemoryDB Controller

Most of the steps below are adapted from the ACK documentation (Install an ACK Controller).

Install it using Helm:

export SERVICE=memorydb

export RELEASE_VERSION=`curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4`

export ACK_SYSTEM_NAMESPACE=ack-system

# you can change the region as required

export AWS_REGION=us-east-1

aws ecr-public get-login-password --region us-east-1 | helm registry login --username AWS --password-stdin public.ecr.aws

helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller \
oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION --set=aws.region=$AWS_REGION
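The RELEASE_VERSION line above queries the GitHub "latest release" API and uses grep and cut to pull out the tag_name field. As a minimal Go sketch of the same extraction, assuming a JSON payload shaped like that API response (only tag_name is read; the sample payload and the helper name latestTag are illustrative):

```go
package main

import (
	"encoding/json"
	"fmt"
)

// release models only the field we need from the GitHub "latest release" response.
type release struct {
	TagName string `json:"tag_name"`
}

// latestTag extracts tag_name from a release JSON document,
// the equivalent of: grep '"tag_name":' | cut -d'"' -f4
func latestTag(body []byte) (string, error) {
	var r release
	if err := json.Unmarshal(body, &r); err != nil {
		return "", err
	}
	if r.TagName == "" {
		return "", fmt.Errorf("tag_name not found in release payload")
	}
	return r.TagName, nil
}

func main() {
	// Illustrative payload; a real API response contains many more fields.
	sample := []byte(`{"tag_name": "v0.0.2", "name": "v0.0.2", "draft": false}`)
	tag, err := latestTag(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(tag) // v0.0.2
}
```

Decoding the named field is a little sturdier than splitting on quote characters, since it does not depend on how the JSON happens to be formatted.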

To confirm, run:

kubectl get crd

# output (multiple CRDs)

NAME CREATED AT

acls.memorydb.services.k8s.aws 2022-08-13T19:15:46Z

adoptedresources.services.k8s.aws 2022-08-13T19:15:53Z

clusters.memorydb.services.k8s.aws 2022-08-13T19:15:47Z

eniconfigs.crd.k8s.amazonaws.com 2022-08-13T19:02:10Z

fieldexports.services.k8s.aws 2022-08-13T19:15:56Z

parametergroups.memorydb.services.k8s.aws 2022-08-13T19:15:48Z

securitygrouppolicies.vpcresources.k8s.aws 2022-08-13T19:02:12Z

snapshots.memorydb.services.k8s.aws 2022-08-13T19:15:51Z

subnetgroups.memorydb.services.k8s.aws 2022-08-13T19:15:52Z

users.memorydb.services.k8s.aws 2022-08-13T19:15:53Z

Since the controller has to interact with AWS Services (make API calls), we need to configure IAM Roles for Service Accounts (also known as IRSA).

Refer to Configure IAM Permissions for details.
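Under IRSA, EKS injects two environment variables, AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE, into Pods whose service account carries the eks.amazonaws.com/role-arn annotation, and the AWS SDK inside the controller uses them to assume the IAM role. A small Go sketch of a sanity check you could run in such a Pod, assuming only the standard role ARN layout arn:aws:iam::<account-id>:role/<role-name> (roleName and checkIRSA are hypothetical helpers, not part of ACK):

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// roleName returns the role name from an IAM role ARN of the form
// arn:aws:iam::<account-id>:role/<role-name>.
func roleName(arn string) (string, error) {
	parts := strings.SplitN(arn, ":", 6)
	if len(parts) != 6 || parts[0] != "arn" || parts[2] != "iam" {
		return "", fmt.Errorf("not an IAM ARN: %q", arn)
	}
	resource := parts[5] // e.g. "role/ack-memorydb-controller-role"
	if !strings.HasPrefix(resource, "role/") {
		return "", fmt.Errorf("not a role ARN: %q", arn)
	}
	// Roles may have a path (role/path/name); the name is the last segment.
	segs := strings.Split(resource, "/")
	return segs[len(segs)-1], nil
}

// checkIRSA (hypothetical) verifies that both IRSA variables are present
// and returns the name of the role the Pod will assume.
func checkIRSA(getenv func(string) string) (string, error) {
	arn := getenv("AWS_ROLE_ARN")
	token := getenv("AWS_WEB_IDENTITY_TOKEN_FILE")
	if arn == "" || token == "" {
		return "", fmt.Errorf("IRSA variables not injected into this Pod")
	}
	return roleName(arn)
}

func main() {
	name, err := checkIRSA(os.Getenv)
	if err != nil {
		fmt.Println("IRSA check failed:", err)
		return
	}
	fmt.Println("running with IAM role:", name)
}
```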

IRSA Configuration

First, create an OIDC identity provider for your cluster.

export EKS_CLUSTER_NAME=<name of your EKS cluster>

export AWS_REGION=<cluster region>

eksctl utils associate-iam-oidc-provider --cluster $EKS_CLUSTER_NAME --region $AWS_REGION --approve

The goal is to create an IAM role and attach the appropriate permissions via policies. We then create a Kubernetes Service Account and associate the IAM role with it, so that the controller Pod can make AWS API calls. Note that we are granting all MemoryDB permissions to the controller via the arn:aws:iam::aws:policy/AmazonMemoryDBFullAccess policy.

Thanks to eksctl, this can be done with a single line!

export SERVICE=memorydb

export ACK_K8S_SERVICE_ACCOUNT_NAME=ack-$SERVICE-controller

# recommend using the same name

export ACK_SYSTEM_NAMESPACE=ack-system

export EKS_CLUSTER_NAME=<enter EKS cluster name>

export POLICY_ARN=arn:aws:iam::aws:policy/AmazonMemoryDBFullAccess

# IAM role has a format - do not change it. you can't use any arbitrary name

export IAM_ROLE_NAME=ack-$SERVICE-controller-role

eksctl create iamserviceaccount \
--name $ACK_K8S_SERVICE_ACCOUNT_NAME \
--namespace $ACK_SYSTEM_NAMESPACE \
--cluster $EKS_CLUSTER_NAME \
--role-name $IAM_ROLE_NAME \
--attach-policy-arn $POLICY_ARN \
--approve \
--override-existing-serviceaccounts

The AmazonMemoryDBFullAccess policy is chosen as per the recommended policy for the MemoryDB controller.

To confirm, you can check whether the IAM role was created and also introspect the Kubernetes service account:

aws iam get-role --role-name=$IAM_ROLE_NAME --query Role.Arn --output text

kubectl describe serviceaccount/$ACK_K8S_SERVICE_ACCOUNT_NAME -n $ACK_SYSTEM_NAMESPACE

# you will see similar output

Name: ack-memorydb-controller

Namespace: ack-system

Labels: app.kubernetes.io/instance=ack-memorydb-controller

app.kubernetes.io/managed-by=eksctl

app.kubernetes.io/name=memorydb-chart

app.kubernetes.io/version=v0.0.2

helm.sh/chart=memorydb-chart-v0.0.2

k8s-app=memorydb-chart

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::568863012249:role/ack-memorydb-controller-role

meta.helm.sh/release-name: ack-memorydb-controller

meta.helm.sh/release-namespace: ack-system

Image pull secrets: <none>

Mountable secrets: ack-memorydb-controller-token-2cmmx

Tokens: ack-memorydb-controller-token-2cmmx

Events: <none>

For IRSA to take effect, you need to restart the ACK Deployment:

# note the Deployment name of the ACK service controller from the following command

kubectl get deployments -n $ACK_SYSTEM_NAMESPACE

kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deployment ack-memorydb-controller-memorydb-chart

Confirm that the controller Pod has restarted (status Running) and that IRSA is properly configured:

kubectl get pods -n $ACK_SYSTEM_NAMESPACE

# replace the Pod name below with the one returned by the previous command

kubectl describe pod -n $ACK_SYSTEM_NAMESPACE ack-memorydb-controller-memorydb-chart-5975b8d757-k6x9k | grep "^\s*AWS_"

# the output should contain the following two lines:

AWS_ROLE_ARN=arn:aws:iam::<AWS_ACCOUNT_ID>:role/<IAM_ROLE_NAME>

AWS_WEB_IDENTITY_TOKEN_FILE=/var/run/secrets/eks.amazonaws.com/serviceaccount/token

Now that we're done with the configuration, it's time for...

Cdk8s in Action!

We will go step by step:

- Build and push the application Docker image to a private registry in Amazon ECR.

- Deploy MemoryDB along with the application and the required configuration.

- Test the application.

Build Docker Image and Push to ECR

Create ECR Private Repository

Log in to ECR:

aws ecr get-login-password --region <enter region> | docker login --username AWS --password-stdin <enter aws_account_id>.dkr.ecr.<enter region>.amazonaws.com

Create a Private Repository:

aws ecr create-repository \
--repository-name memorydb-app \
--region <enter region>

Build the Image and Push to ECR:

# if you're on Mac M1

#export DOCKER_DEFAULT_PLATFORM=linux/amd64

docker build -t memorydb-app .

docker tag memorydb-app:latest <enter aws_account_id>.dkr.ecr.<enter region>.amazonaws.com/memorydb-app:latest

docker push <enter aws_account_id>.dkr.ecr.<enter region>.amazonaws.com/memorydb-app:latest

Use cdk8s and kubectl To Deploy MemoryDB and the Application

This is a ready-to-use cdk8s project. The entire logic is in the main.go file; I will dive into the nitty-gritty of the code in a later section.

Clone the project from GitHub and change to its directory:

git clone https://github.com/abhirockzz/memorydb-ack-cdk8s-go.git

cd memorydb-ack-cdk8s-go

Generate and Deploy Manifests

Use cdk8s synth to generate the manifests for MemoryDB, the application, and the required configuration. We can then apply them using kubectl. First, set the following environment variables:

export SUBNET_ID_LIST=<enter comma-separated list of subnet IDs. should be same as your EKS cluster>

# for example:

# export SUBNET_ID_LIST=subnet-086c4a45ec9a206e1,subnet-0d9a9c6d2ca7a24df,subnet-028ca54bb859a4994

export SECURITY_GROUP_ID=<enter security group ID>

# for example

# export SECURITY_GROUP_ID=sg-06b6535ee64980616

export DOCKER_IMAGE=<enter the ECR image URI that you pushed earlier>

# example

# export DOCKER_IMAGE=123456789012.dkr.ecr.us-east-1.amazonaws.com/memorydb-app:latest

You can also set the optional environment variables MEMORYDB_CLUSTER_NAME, MEMORYDB_USERNAME, and MEMORYDB_PASSWORD. They default to memorydb-cluster-ack-cdk8s, demouser, and Password123456789, respectively.
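The cdk8s app reads these settings from the environment. As a rough sketch of that pattern (the config struct and the helpers getEnvOrDefault, splitIDs, and loadConfig are hypothetical names, not taken from the project), the optional variables fall back to the defaults listed above, and SUBNET_ID_LIST is split on commas, similar to the strings.Split call in the subnet group code, with whitespace trimming and empty-entry filtering added as an extra safeguard:

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// config is a hypothetical container for the settings the cdk8s app needs.
type config struct {
	ClusterName     string
	Username        string
	Password        string
	SubnetIDs       []string
	SecurityGroupID string
	DockerImage     string
}

// getEnvOrDefault returns the value of key, or def when it is unset or empty.
func getEnvOrDefault(getenv func(string) string, key, def string) string {
	if v := getenv(key); v != "" {
		return v
	}
	return def
}

// splitIDs turns "a, b,,c" into [a b c].
func splitIDs(list string) []string {
	var ids []string
	for _, id := range strings.Split(list, ",") {
		if id = strings.TrimSpace(id); id != "" {
			ids = append(ids, id)
		}
	}
	return ids
}

func loadConfig(getenv func(string) string) (config, error) {
	c := config{
		ClusterName:     getEnvOrDefault(getenv, "MEMORYDB_CLUSTER_NAME", "memorydb-cluster-ack-cdk8s"),
		Username:        getEnvOrDefault(getenv, "MEMORYDB_USERNAME", "demouser"),
		Password:        getEnvOrDefault(getenv, "MEMORYDB_PASSWORD", "Password123456789"),
		SubnetIDs:       splitIDs(getenv("SUBNET_ID_LIST")),
		SecurityGroupID: getenv("SECURITY_GROUP_ID"),
		DockerImage:     getenv("DOCKER_IMAGE"),
	}
	// These three have no defaults, so fail early if any is missing.
	if len(c.SubnetIDs) == 0 || c.SecurityGroupID == "" || c.DockerImage == "" {
		return c, fmt.Errorf("SUBNET_ID_LIST, SECURITY_GROUP_ID and DOCKER_IMAGE must be set")
	}
	return c, nil
}

func main() {
	c, err := loadConfig(os.Getenv)
	if err != nil {
		fmt.Println("config error:", err)
		os.Exit(1)
	}
	fmt.Printf("cluster=%s user=%s subnets=%v\n", c.ClusterName, c.Username, c.SubnetIDs)
}
```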

To generate the manifests:

cdk8s synth

# check the "dist" folder - you should see these files:

0000-memorydb.k8s.yaml

0001-config.k8s.yaml

0002-deployment.k8s.yaml

Let's deploy them one by one, starting with the one that creates the MemoryDB cluster. In addition to the cluster, it also creates the supporting components: ACL, User, and Subnet Group.

kubectl apply -f dist/0000-memorydb.k8s.yaml

#output

secret/memdb-secret created

users.memorydb.services.k8s.aws/demouser created

acls.memorydb.services.k8s.aws/demo-acl created

subnetgroups.memorydb.services.k8s.aws/demo-subnet-group created

clusters.memorydb.services.k8s.aws/memorydb-cluster-ack-cdk8s created

The Kubernetes Secret holds the password for the MemoryDB cluster user.

This initiates cluster creation. You can check the status using the AWS console. Once the cluster is available, you can test connectivity with redis-cli:

# run this from an EC2 instance in the same subnet as the cluster

export REDIS=<enter cluster endpoint>

# example

# export REDIS=clustercfg.memorydb-cluster-ack-cdk8s.smtjf4.memorydb.us-east-1.amazonaws.com

redis-cli -h $REDIS -c --user demouser --pass Password123456789 --tls --insecure

Let's apply the second manifest. This creates configuration-related components, i.e., a ConfigMap and FieldExports, which are required by our application (to be deployed next).

kubectl apply -f dist/0001-config.k8s.yaml

#output

configmap/export-memorydb-info created

fieldexports.services.k8s.aws/export-memorydb-endpoint created

fieldexports.services.k8s.aws/export-memorydb-username created

Here, we create two FieldExports that extract data from the Cluster (.status.clusterEndpoint.address) and User (.spec.name) resources we created earlier and seed it into a ConfigMap.

ConfigMap and FieldExport:

FieldExport is an ACK component that can "export any spec or status field from an ACK resource into a Kubernetes ConfigMap or Secret". You can read up on the details in the ACK docs along with some examples.
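Conceptually, a FieldExport resolves a path such as .status.clusterEndpoint.address on its source resource and writes the value into the target ConfigMap (or Secret). Judging by the ConfigMap output further down, the key is the FieldExport's namespace and name joined by a dot (default.export-memorydb-endpoint). The following Go sketch models that behavior on a decoded resource; lookupPath and exportKey are hypothetical helpers for illustration, not ACK's implementation:

```go
package main

import (
	"fmt"
	"strings"
)

// lookupPath walks a decoded resource (as produced by encoding/json) along a
// path such as ".status.clusterEndpoint.address" and returns the string there.
func lookupPath(obj map[string]any, path string) (string, error) {
	var cur any = obj
	for _, field := range strings.Split(strings.TrimPrefix(path, "."), ".") {
		m, ok := cur.(map[string]any)
		if !ok {
			return "", fmt.Errorf("path %s: cannot read %q from a non-object", path, field)
		}
		if cur, ok = m[field]; !ok {
			return "", fmt.Errorf("path %s: field %q not found", path, field)
		}
	}
	s, ok := cur.(string)
	if !ok {
		return "", fmt.Errorf("path %s: value is not a string", path)
	}
	return s, nil
}

// exportKey mirrors the "<namespace>.<fieldexport-name>" key format seen in
// the ConfigMap output.
func exportKey(namespace, name string) string {
	return namespace + "." + name
}

func main() {
	// A trimmed-down, illustrative Cluster resource.
	cluster := map[string]any{
		"spec": map[string]any{"name": "memorydb-cluster-ack-cdk8s"},
		"status": map[string]any{
			"clusterEndpoint": map[string]any{"address": "clustercfg.example.memorydb.us-east-1.amazonaws.com"},
		},
	}
	data := map[string]string{} // stands in for the ConfigMap's data field
	v, err := lookupPath(cluster, ".status.clusterEndpoint.address")
	if err != nil {
		panic(err)
	}
	data[exportKey("default", "export-memorydb-endpoint")] = v
	fmt.Println(data)
}
```

This is also why the application code later reads the ConfigMap with the key "default." plus the FieldExport name.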

You should be able to confirm by checking the FieldExport and ConfigMap:

kubectl get fieldexport

#output

NAME AGE

export-memorydb-endpoint 20s

export-memorydb-username 20s

kubectl get configmap/export-memorydb-info -o yaml

We started out with a blank ConfigMap, but ACK magically populated it with the required attributes:

apiVersion: v1

data:

default.export-memorydb-endpoint: clustercfg.memorydb-cluster-ack-cdk8s.smtjf4.memorydb.us-east-1.amazonaws.com

default.export-memorydb-username: demouser

immutable: false

kind: ConfigMap

#....omitted

Let's create the application resources - Deployment and the Service.

kubectl apply -f dist/0002-deployment.k8s.yaml

#output

deployment.apps/memorydb-app created

service/memorydb-app-service configured

Since the Service type is LoadBalancer, an appropriate AWS Load Balancer will be provisioned to allow for external access.

Check Pod and Service:

kubectl get pods

kubectl get service/memorydb-app-service

# to get the load balancer hostname

APP_URL=$(kubectl get service/memorydb-app-service -o jsonpath="{.status.loadBalancer.ingress[0].hostname}")

echo $APP_URL

# output example

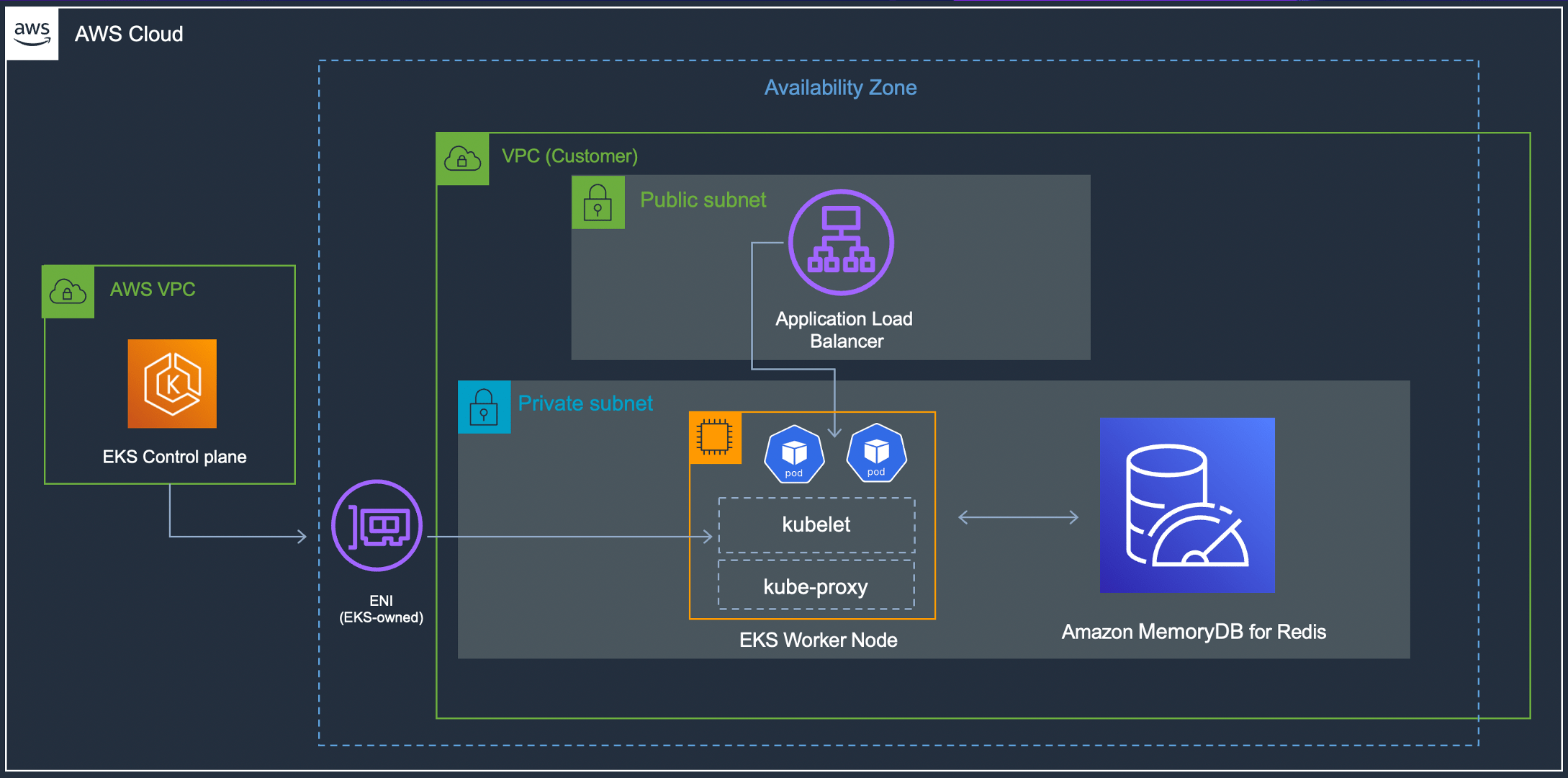

a0042d5b5b0ad40abba9c6c42e6342a2-879424587.us-east-1.elb.amazonaws.comYou have deployed the application and know the endpoint over which it's publicly accessible. Here is a high-level view of the current architecture:

Now You Can Access the Application

It's quite simple - it exposes a couple of HTTP endpoints to write and read data from Redis (you can check it on GitHub):

# create a couple of users - each one is stored as a `HASH` in Redis

curl -i -X POST -d '{"email":"user1@foo.com", "name":"user1"}' http://$APP_URL:9090/

curl -i -X POST -d '{"email":"user2@foo.com", "name":"user2"}' http://$APP_URL:9090/

HTTP/1.1 200 OK

Content-Length: 0

# search for user via email

curl -i http://$APP_URL:9090/user2@foo.com

HTTP/1.1 200 OK

Content-Length: 41

Content-Type: text/plain; charset=utf-8

{"email":"user2@foo.com","name":"user2"}

If you get a "Could not resolve host" error while accessing the load balancer URL, wait for a minute or so and retry.

Once you're done...

... Don’t Forget To Delete Resources

# delete MemoryDB cluster, configuration and the application

kubectl delete -f dist/

# to uninstall the ACK controller

export SERVICE=memorydb

helm uninstall -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller

# delete the EKS cluster (if it was created using eksctl):

eksctl delete cluster --name <enter name of eks cluster>

So far, you deployed the ACK controller for MemoryDB, set up a cluster and an application that connects to it, and tested the end-to-end solution. Great!

Now let's look at the cdk8s code that makes it all happen. The logic is divided into three Charts. I will only focus on critical sections of the code and the rest will be omitted for brevity.

You can refer to the complete code on GitHub.
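The three Charts depend on each other: the config Chart exports fields from the Cluster and User, and the deployment Chart consumes the Secret and the ConfigMap. The main function at the end of this walkthrough records these links with AddDependency, and cdk8s synthesizes Charts in dependency order, which appears to be where the 0000-, 0001-, and 0002- prefixes in the dist folder come from. The Go sketch below illustrates that ordering with a plain depth-first topological sort; it is not the cdk8s implementation, and only the chart IDs come from main.go:

```go
package main

import (
	"fmt"
	"sort"
)

// order returns chart IDs so that every chart comes after its dependencies
// (depth-first topological sort). deps maps a chart to the charts it depends on.
func order(deps map[string][]string) ([]string, error) {
	const (
		unvisited = iota
		visiting
		done
	)
	state := map[string]int{}
	var out []string
	var visit func(string) error
	visit = func(id string) error {
		switch state[id] {
		case done:
			return nil
		case visiting:
			return fmt.Errorf("dependency cycle at %q", id)
		}
		state[id] = visiting
		for _, d := range deps[id] {
			if err := visit(d); err != nil {
				return err
			}
		}
		state[id] = done
		out = append(out, id)
		return nil
	}
	// Sort the keys so the result is deterministic (map iteration order is random).
	ids := make([]string, 0, len(deps))
	for id := range deps {
		ids = append(ids, id)
	}
	sort.Strings(ids)
	for _, id := range ids {
		if err := visit(id); err != nil {
			return nil, err
		}
	}
	return out, nil
}

func main() {
	// Mirrors the AddDependency calls in main.go.
	deps := map[string][]string{
		"memorydb":   nil,
		"config":     {"memorydb"},
		"deployment": {"memorydb", "config"},
	}
	ids, err := order(deps)
	if err != nil {
		panic(err)
	}
	for i, id := range ids {
		fmt.Printf("%04d-%s.k8s.yaml\n", i, id)
	}
}
```

Running it prints 0000-memorydb.k8s.yaml, 0001-config.k8s.yaml, and 0002-deployment.k8s.yaml, the same order in which the manifests are applied above.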

Code Walkthrough

MemoryDB and Related Components

We start by defining the MemoryDB cluster along with the required components - ACL, User, and Subnet Group.

func NewMemoryDBChart(scope constructs.Construct, id string, props *MyChartProps) cdk8s.Chart {

//...

secret = cdk8splus22.NewSecret(chart, jsii.String("password"), &cdk8splus22.SecretProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(secretName)},

StringData: &map[string]*string{"password": jsii.String(memoryDBPassword)},

})

user = users_memorydbservicesk8saws.NewUser(chart, jsii.String("user"), &users_memorydbservicesk8saws.UserProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(memoryDBUsername)},

Spec: &users_memorydbservicesk8saws.UserSpec{

Name: jsii.String(memoryDBUsername),

AccessString: jsii.String(memoryDBUserAccessString),

AuthenticationMode: &users_memorydbservicesk8saws.UserSpecAuthenticationMode{

Type: jsii.String("Password"),

Passwords: &[]*users_memorydbservicesk8saws.UserSpecAuthenticationModePasswords{

{Name: secret.Name(), Key: jsii.String(secretKeyName)},

},

},

},

})

ACL references the User defined above:

acl := acl_memorydbservicesk8saws.NewAcl(chart, jsii.String("acl"),

&acl_memorydbservicesk8saws.AclProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(memoryDBACLName)},

Spec: &acl_memorydbservicesk8saws.AclSpec{

Name: jsii.String(memoryDBACLName),

UserNames: jsii.Strings(*user.Name()),

},

})

The subnet IDs (for the subnet group), as well as the security group ID for the cluster, are read from environment variables.

subnetGroup := subnetgroups_memorydbservicesk8saws.NewSubnetGroup(chart, jsii.String("sg"),

&subnetgroups_memorydbservicesk8saws.SubnetGroupProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(memoryDBSubnetGroup)},

Spec: &subnetgroups_memorydbservicesk8saws.SubnetGroupSpec{

Name: jsii.String(memoryDBSubnetGroup),

SubnetIDs: jsii.Strings(strings.Split(subnetIDs, ",")...), //same as EKS cluster

},

})

Finally, the MemoryDB cluster is defined. It references all the resources created above (that part of the spec has been omitted on purpose):

memoryDBCluster = memorydbservicesk8saws.NewCluster(chart, jsii.String("memorydb-ack-cdk8s"),

&memorydbservicesk8saws.ClusterProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(memoryDBClusterName)},

Spec: &memorydbservicesk8saws.ClusterSpec{

Name: jsii.String(memoryDBClusterName),

//omitted

},

})

return chart

}

Configuration

Then we move on to the next chart, which handles the configuration-related aspects. It defines a ConfigMap (initially empty) and two FieldExports, one each for the MemoryDB cluster endpoint and username (the password is read from the Secret).

As soon as these are created, ACK populates the ConfigMap with the required data, according to the from and to configuration of each FieldExport.

func NewConfigChart(scope constructs.Construct, id string, props *MyChartProps) cdk8s.Chart {

//...

cfgMap = cdk8splus22.NewConfigMap(chart, jsii.String("config-map"),

&cdk8splus22.ConfigMapProps{

Metadata: &cdk8s.ApiObjectMetadata{

Name: jsii.String(configMapName)}})

fieldExportForClusterEndpoint = servicesk8saws.NewFieldExport(chart, jsii.String("fexp-cluster"), &servicesk8saws.FieldExportProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(fieldExportNameForClusterEndpoint)},

Spec: &servicesk8saws.FieldExportSpec{

From: &servicesk8saws.FieldExportSpecFrom{Path: jsii.String(".status.clusterEndpoint.address"),

Resource: &servicesk8saws.FieldExportSpecFromResource{

Group: jsii.String("memorydb.services.k8s.aws"),

Kind: jsii.String("Cluster"),

Name: memoryDBCluster.Name()}},

To: &servicesk8saws.FieldExportSpecTo{

Name: cfgMap.Name(),

Kind: servicesk8saws.FieldExportSpecToKind_CONFIGMAP}}})

fieldExportForUsername = servicesk8saws.NewFieldExport(chart, jsii.String("fexp-username"), &servicesk8saws.FieldExportProps{

Metadata: &cdk8s.ApiObjectMetadata{Name: jsii.String(fieldExportNameForUsername)},

Spec: &servicesk8saws.FieldExportSpec{

From: &servicesk8saws.FieldExportSpecFrom{Path: jsii.String(".spec.name"),

Resource: &servicesk8saws.FieldExportSpecFromResource{

Group: jsii.String("memorydb.services.k8s.aws"),

Kind: jsii.String("User"),

Name: user.Name()}},

To: &servicesk8saws.FieldExportSpecTo{

Name: cfgMap.Name(),

Kind: servicesk8saws.FieldExportSpecToKind_CONFIGMAP}}})

return chart

}

The Application Chart

Finally, we deal with the Deployment (in its dedicated Chart) - it makes use of the configuration objects we defined in the earlier chart:

func NewDeploymentChart(scope constructs.Construct, id string, props *MyChartProps) cdk8s.Chart {

//....

dep := cdk8splus22.NewDeployment(chart, jsii.String("memorydb-app-deployment"), &cdk8splus22.DeploymentProps{

Metadata: &cdk8s.ApiObjectMetadata{

Name: jsii.String("memorydb-app")}})

The next important part is the container and its configuration. We specify the ECR image repository along with the environment variables - they reference the ConfigMap we defined in the previous chart (everything is connected!):

//...

container := dep.AddContainer(

&cdk8splus22.ContainerProps{

Name: jsii.String("memorydb-app-container"),

Image: jsii.String(appDockerImage),

Port: jsii.Number(appPort)})

container.Env().AddVariable(jsii.String("MEMORYDB_CLUSTER_ENDPOINT"),

cdk8splus22.EnvValue_FromConfigMap(

cfgMap,

jsii.String("default."+*fieldExportForClusterEndpoint.Name()),

&cdk8splus22.EnvValueFromConfigMapOptions{Optional: jsii.Bool(false)}))

container.Env().AddVariable(jsii.String("MEMORYDB_USERNAME"),

cdk8splus22.EnvValue_FromConfigMap(

cfgMap,

jsii.String("default."+*fieldExportForUsername.Name()),

&cdk8splus22.EnvValueFromConfigMapOptions{Optional: jsii.Bool(false)}))

container.Env().AddVariable(jsii.String("MEMORYDB_PASSWORD"),

cdk8splus22.EnvValue_FromSecretValue(

&cdk8splus22.SecretValue{

Secret: secret,

Key: jsii.String("password")},

&cdk8splus22.EnvValueFromSecretOptions{}))

Finally, we define the Service (type LoadBalancer), which enables external access to the application, and tie it all together in the main function:

//...

dep.ExposeViaService(

&cdk8splus22.DeploymentExposeViaServiceOptions{

Name: jsii.String("memorydb-app-service"),

ServiceType: cdk8splus22.ServiceType_LOAD_BALANCER,

Ports: &[]*cdk8splus22.ServicePort{

{Protocol: cdk8splus22.Protocol_TCP,

Port: jsii.Number(lbPort),

TargetPort: jsii.Number(appPort)}}})

//...

func main() {

app := cdk8s.NewApp(nil)

memorydb := NewMemoryDBChart(app, "memorydb", nil)

config := NewConfigChart(app, "config", nil)

config.AddDependency(memorydb)

deployment := NewDeploymentChart(app, "deployment", nil)

deployment.AddDependency(memorydb, config)

app.Synth()

}

That's all for now!

Wrap Up

Combining AWS Controllers for Kubernetes and cdk8s can prove useful if you want to manage AWS services as well as Kubernetes applications using code (not YAML). In this blog, you saw how to do this in the context of MemoryDB and an application composed of a Deployment and a Service. I encourage you to try out other AWS services as well - here is a complete list.

Until then, Happy Building!

Published at DZone with permission of Abhishek Gupta, DZone MVB. See the original article here.
