Making Elasticsearch in Docker Swarm Truly Elastic

Running a truly elastic Elasticsearch cluster on Docker Swarm is hard. Here's how to get past Elasticsearch and Docker's pitfalls with IP addresses, networking, and more.

Running Elasticsearch on Docker sounds like a natural fit: both technologies promise elasticity. However, running a truly elastic Elasticsearch cluster on Docker Swarm became somewhat difficult with Docker 1.12 Swarm mode. Why? Since Elasticsearch gave up on multicast discovery (by moving multicast node discovery into a plugin and not including it by default), one has to specify the IP addresses of all master nodes for a new node to join the cluster. Unfortunately, this creates a chicken-and-egg problem: these IP addresses are not known in advance when you start Elasticsearch as a Swarm service! It would be easy if we could use the shared Docker bridge or host network and simply specify the Docker host IP addresses, as we are used to doing with the “docker run” command. However, “docker service create” rejects the use of the bridge or host network. Thus, the question remains: How can we deploy Elasticsearch in a Docker Swarm cluster?

Luckily, with a few tricks it is possible to create an Elasticsearch cluster on Docker Swarm and have it automatically start an additional Elasticsearch node on each Docker Swarm node as it joins the Swarm cluster!

We assume you already have a working Docker Swarm cluster. If not, simply run “docker swarm init” on the manager node, and then run “docker swarm join” on the worker nodes.

Two problems stand in the way, one on the Elasticsearch side and one on the Docker side. Let’s see how we can resolve them.

Elasticsearch Problem: Node Discovery Without Explicit IP Addresses for Zen Discovery

We use an overlay network (a virtual network between containers, available on all Swarm nodes) with a DNS round robin setup. We also specify the Elasticsearch service name “escluster” as the unicast host for Zen discovery. This means each new Elasticsearch container will ask the “Elasticsearch node” named “escluster” to discover other Elasticsearch nodes. With the Swarm DNS round robin setup, the IP address returned for that name belongs to a different Elasticsearch node from one lookup to the next. Using this trick, we enable Elasticsearch nodes to eventually discover all other nodes and form the cluster.
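One way to see what Zen discovery sees is to resolve the service name from inside a container attached to the overlay network. The following is a minimal sketch and not part of the original setup: `distinct_ips` is a hypothetical helper, and it assumes `getent`, `awk`, and `sort` are available in the container image. Depending on the resolver, a single lookup may return one task address or several, so repeating it a few times gives a better picture.

```shell
#!/bin/sh
# Hypothetical helper: reduce `getent ahostsv4` output
# (one "ADDRESS SOCKTYPE [NAME]" line per record) to the distinct addresses.
distinct_ips() {
  awk '{ print $1 }' | sort -u
}

# Assumed usage, from a shell inside any container on the overlay network:
#   getent ahostsv4 escluster | distinct_ips

# Offline demonstration with made-up sample records:
printf '%s\n' '10.0.0.4 STREAM escluster' '10.0.0.4 DGRAM' '10.0.0.6 STREAM' | distinct_ips
```

If the list of addresses grows as Swarm nodes join, new Elasticsearch containers have a way to reach existing ones through the service name alone.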

Docker Problem: Connecting Closed Overlay Network With Outside Network

This gets us over one hurdle, but it leaves us facing the next Docker networking problem: Docker does not allow outside connections into overlay networks with a DNS round robin setup. This is by design, though some see it as a bug (see Docker SwarmKit issue #1693, which may simplify the network setup in the future).

However you see it, this means that while the Elasticsearch nodes can find each other in the overlay network thanks to the DNS round robin trick, we now face a second problem: it is not possible to connect to port 9200 and talk to Elasticsearch from any other network.

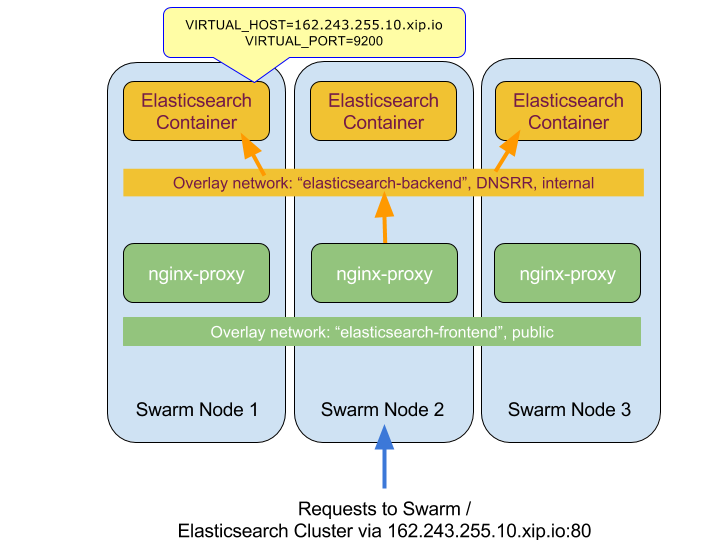

This Elasticsearch cluster can be used only from containers that share the overlay network! To solve this issue, we need a proxy service to connect the outside network to our Elasticsearch network. With such a proxy, we can reach the Elasticsearch cluster from the “outside” world. We use jwilder’s wildly popular jwilder/nginx-proxy, which can discover the target service by using VIRTUAL_HOST and VIRTUAL_PORT tags, which we have to set as environment variables in the Elasticsearch Docker Swarm service. Because we had no DNS configured during this setup, we simply used a public wildcard DNS (see xip.io for more info) for the virtual hostname. The following diagram shows how everything is connected.

Elasticsearch on Docker Swarm: Setting Up

Now that we’ve described the problems and our solutions for them, let’s actually set up Elasticsearch on Docker Swarm.

First, let’s create the networks by executing these commands on a Swarm manager node:

docker network create -d overlay elasticsearch-backend
docker network create -d overlay elasticsearch-frontend

Next, we’ll deploy the NGINX proxy:

docker service create --mode global \
  --name proxy -p 80:80 \
  --network elasticsearch-frontend \
  --network elasticsearch-backend \
  --mount type=bind,src=/var/run/docker.sock,target=/tmp/docker.sock,readonly \
  jwilder/nginx-proxy

Now we can create the global Elasticsearch service. The NGINX proxy will automatically discover every Elasticsearch container created by this service:

docker service create \
  --name escluster \
  --network elasticsearch-backend \
  --mode global \
  --endpoint-mode dnsrr \
  --update-parallelism 1 \
  --update-delay 60s \
  -e VIRTUAL_HOST=162.243.255.10.xip.io \
  -e VIRTUAL_PORT=9200 \
  --mount type=bind,source=/tmp,target=/data \
  elasticsearch:2.4 \
  elasticsearch \
  -Des.discovery.zen.ping.multicast.enabled=false \
  -Des.discovery.zen.ping.unicast.hosts=escluster \
  -Des.gateway.expected_nodes=3 \
  -Des.discovery.zen.minimum_master_nodes=2 \
  -Des.gateway.recover_after_nodes=2 \
  -Des.network.bind=_eth0:ipv4_

Let’s check on the status of our Elasticsearch cluster:

> docker service ps escluster
ID                         NAME           IMAGE              NODE                DESIRED STATE  CURRENT STATE          ERROR
30uq0ru7hc0suj500hiu9ojw1  escluster      elasticsearch:2.4  docker-1gb-nyc2-02  Running        Running 6 seconds ago
7v55bb2l2g5f2gbzqn58nzr2o   \_ escluster  elasticsearch:2.4  docker-1gb-nyc2-03  Running        Running 6 seconds ago
72le8cee81d03p2u211k7n8m7   \_ escluster  elasticsearch:2.4  docker-1gb-nyc2-04  Running        Running 6 seconds ago
14mbvsx4su038mw9y3apv4k31   \_ escluster  elasticsearch:2.4  docker-1gb-nyc2-01  Running        Running 6 seconds ago

Now we can query the list of nodes from Elasticsearch and use Elasticsearch from any other client:

> curl http://162.243.255.10.xip.io/_cat/nodes
10.0.0.6 10.0.0.6 6 21 0.30 d * Tethlam
10.0.0.4 10.0.0.4 8 21 0.01 d m Thornn
10.0.0.7 10.0.0.7 6 21 0.90 d m Sphinx
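In Elasticsearch 2.x, the default columns of this output are host, ip, heap.percent, ram.percent, load, node.role, master, and name, and the `*` in the master column marks the elected master. The following sketch, with hypothetical helper names that are not part of the original setup, summarizes such output. It assumes the default column order and node names without spaces.

```shell
#!/bin/sh
# Hypothetical helpers that read `_cat/nodes` output on stdin.
# Assumes the default 2.x column order: the master marker is column 7
# and the node name is column 8 (only its first word if it has spaces).
count_nodes() {
  grep -c .
}

elected_master() {
  awk '$7 == "*" { print $8 }'
}

# Sample input copied from the output above; against a live cluster one
# would pipe `curl -s http://162.243.255.10.xip.io/_cat/nodes` instead.
sample='10.0.0.6 10.0.0.6 6 21 0.30 d * Tethlam
10.0.0.4 10.0.0.4 8 21 0.01 d m Thornn
10.0.0.7 10.0.0.7 6 21 0.90 d m Sphinx'

echo "nodes:  $(printf '%s\n' "$sample" | count_nodes)"
echo "master: $(printf '%s\n' "$sample" | elected_master)"
```

Comparing the node count with the number of Swarm nodes is a quick way to check that every container has joined the cluster.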

Elasticity in Action

Now let’s get back to the original problem — making Elasticsearch clusters deployed in Docker Swarm truly elastic. With everything we’ve done so far, when we need additional Elasticsearch nodes we can simply create a new server and have it join the Swarm cluster:

> ssh root@51.15.46.117 docker swarm join \
  --token SWMTKN-1-54ld5e3nz31wloghribbwt8m0px4z5a1qeg17iazm7p2j7g7ke-6zccu9643j0dj7bhwmhqtwh46 162.243.255.10:2377
This node joined a swarm as a worker.

At this point, the new Elasticsearch node and the nginx-proxy are automatically deployed to the new Swarm node, and the new Elasticsearch node automatically joins the Elasticsearch cluster:

> ssh root@51.15.46.117 docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2fc12ed5f1db jwilder/nginx-proxy:latest "/app/docker-entrypoi" 3 seconds ago Up Less than a second 80/tcp, 443/tcp proxy.0.3gw46kczaiugioc6gdo0414ws

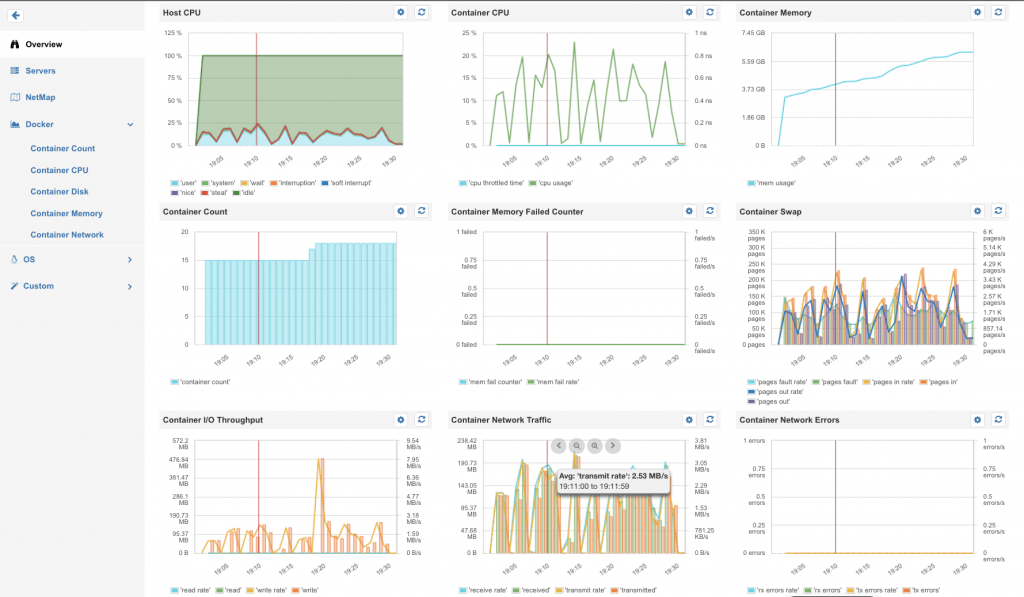

4b4999a6c7b4 elasticsearch:2.4 "/docker-entrypoint.s" 3 seconds ago Up 1 seconds 9200/tcp, 9300/tcpTo see the Elasticsearch cluster in action, we deployed Sematext Docker Agent to collect container metrics in the Swarm cluster and then we indexed large log files in short batch jobs using Logagent:

> cat test.log | logagent --elasticsearchUrl http://162.243.255.10.xip.io --index logs

In our little setup, Logagent was able to ship up to 60,000 events per second with up to 150 HTTP sockets to the five-node cluster without indicating any network problem or request rejections from Elasticsearch.
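Growing the cluster has one consequence worth checking: the service was started with discovery.zen.minimum_master_nodes=2, which is a majority of three master-eligible nodes but not of five. The usual Elasticsearch guidance is (master-eligible nodes / 2) + 1, and in 2.x this setting can be changed at runtime through the cluster settings API. The sketch below is not from the original setup; the function names are hypothetical, and it assumes every node is master-eligible, as in the service definition used here.

```shell
#!/bin/sh
# Majority of master-eligible nodes: floor(n / 2) + 1.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Build the request body for the cluster settings API
# from the number of master-eligible nodes.
settings_body() {
  printf '{"persistent":{"discovery.zen.minimum_master_nodes":%d}}\n' "$(quorum "$1")"
}

# Print the body for a five-node cluster.
settings_body 5

# Assumed usage against the proxy hostname used in this article:
#   curl -XPUT http://162.243.255.10.xip.io/_cluster/settings -d "$(settings_body 5)"
```

Whether to automate this step depends on how often nodes come and go; for occasional scaling, running it by hand after the new nodes have joined may be enough.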

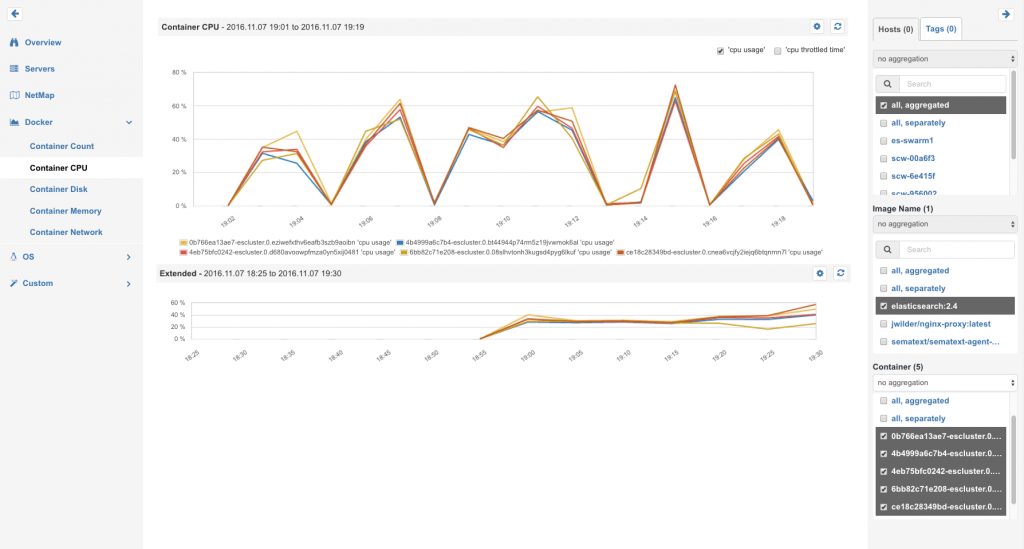

The following chart shows that the load of indexing got evenly distributed to all Elasticsearch containers:

Balanced CPU load on Elasticsearch nodes during indexing jobs

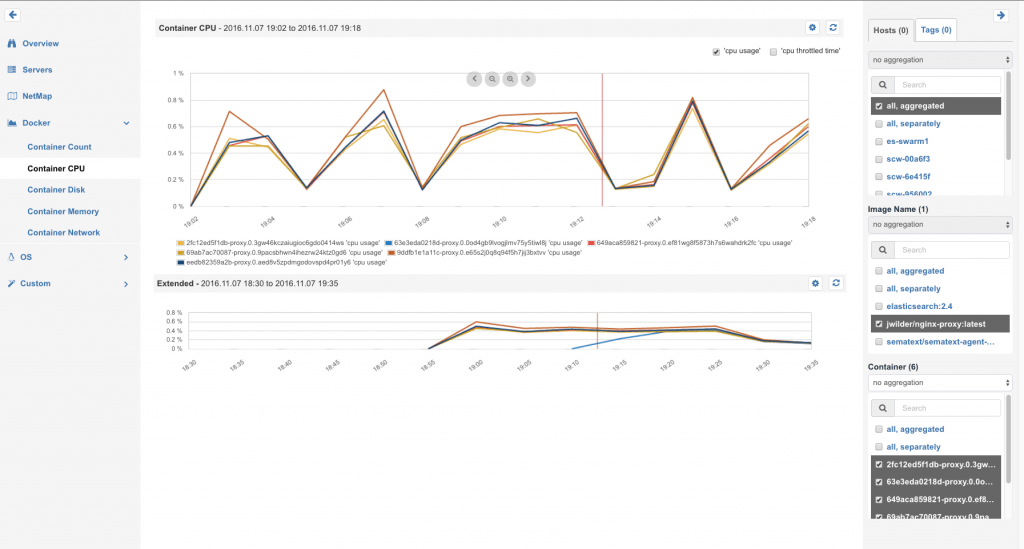

The nginx-proxy showed small CPU spikes at the same time because it simply forwarded the indexing requests from the “elasticsearch-frontend” network to the “elasticsearch-backend” network, and the load was distributed over all proxy containers.

Balanced CPU load on NGINX proxies during indexing jobs

Container metrics overview in SPM for Docker

Missing features in Elasticsearch (e.g., multicast discovery, which was moved out of the core into a plugin in version 2.0) and in Docker networking made it challenging to deploy Elasticsearch as a Docker Swarm service. We hope new features in Elasticsearch and Docker will simplify such setups in the future.

We demonstrated that there are workarounds to overcome Elasticsearch discovery issues using DNS round robin in the overlay network in Swarm and made the Elasticsearch cluster available to external applications that don’t share the same overlay network. The result is a setup that can scale Elasticsearch automatically with the number of Swarm nodes, while all requests remain balanced across the cluster.

Published at DZone with permission of Stefan Thies, DZone MVB. See the original article here.
