Low Code Platforms for Test Automation: A Benchmark

In this post, we will share the results of a benchmark that we ran to compare the speed of some of the most popular low code solutions for test automation.

The faster we get feedback, the better. That also applies to end-to-end automated tests. Considering the trend toward low code solutions for test automation at the UI level, we wanted to run some experiments comparing the execution time of some of the most popular options. Low code solutions are great because they have a gentler learning curve, but they are less useful for evaluating new code changes if slow-running tests delay the feedback.

We will explain our experiment, provide all the details (in case you want to reproduce it), and summarize our analysis. If you just want to learn about the results, jump directly to the section "Benchmark Results: Conclusions and Observations."

Why Is It Important To Have Fast UI Test Automation?

As part of your test strategy, you might automate test cases in order to have a set of checks that can provide feedback about the possible impact on the system of your most recent changes in the codebase. The typical scenario is that you just finished a change you were working on and want to check if that could damage other pieces of the system.

You run your tests while you refresh your mind, maybe taking a short walk around your home, having a coffee, or chatting with teammates. You want to have your sanity check results in a few minutes, especially in your CI/CD pipelines. If the feedback loop is too long, you move on to something else and switch context. Getting back to what you were doing then becomes more expensive, demanding more time and effort.

So, let’s say you define your sanity checks to last no more than 5 minutes. The faster the automation tool can run the tests and provide the results, the better, because you will be able to add more test cases to your sanity check suite under the same time limit and increase the coverage of your application.

It’s also important to mention that for sanity checks, you will probably want to run your unit tests, your API tests, and some UI tests to have an end-to-end view of the most critical flows of your application. Keep in mind that you can typically run thousands of unit tests in seconds and hundreds of API tests in a minute, while a few UI tests can take several minutes.

Benchmarking the Most Popular Low Code Solutions

Low code platforms for test automation aim to simplify test automation with functionality that doesn't require the user to write any code. You get a recorder that lets you easily create test cases and edit them in a simple interface without requiring coding skills. In the last few years, new low code solutions for test automation have been springing up. To mention a few: Functionize, GhostInspector, Katalon, Kobiton, Mabl, Reflect, Testim, TestProject. And the list grows every day.

A few months ago, Testim released a new feature called turbo mode that runs test cases faster. To gain the speed boost, turbo mode skips saving data that you won’t need in most cases: the network and console logs, and the screenshots of every step on successful runs (results are still stored when a test case reports an error). Testim claims that turbo mode runs between 25% and 30% faster than standard Testim test executions.

They also claim in the same article that they “compared our tests against other low code or codeless tools and consistently completed execution in less than half the time of alternatives.”

We wanted to test their claims. We thought it would be a nice experiment to benchmark the most popular low code solutions for test automation and share the results with the community (we defined the most popular as the ones we have known for a while).

Experiment Details

Let’s see how the experiment was designed before discussing its results. We decided to compare the following low code platforms:

- Testim (with and without the turbo mode)

- Mabl

- Functionize

- TestProject

We also wanted to compare Katalon, as it is also a popular alternative, but it does not provide native cloud support. The same is true of TestProject, but it was very easy to integrate with Sauce Labs, so we decided to include it anyway.

Another aspect to mention is that Testim, Mabl, and TestProject allow you to run locally, so we also compared the local execution times. We focused on comparing the time it takes to run a test in the cloud since this is the typical scenario where you will run your tests from your CI engine. But because you often debug your tests locally, we included a comparison of local execution speeds. To minimize variability across all tests, we ran them on the same laptop.

Laptop specifications for local runs:

- Operating System: Windows 10 Home

- Model: ASUS VivoBook 15

- CPU: AMD Ryzen 7 3700U 2.3 GHz

- Memory: 8 GB

- Connectivity: Wi-Fi and fiber, accessing from Uruguay

To keep the experiment as simple as possible, we decided to focus on comparing the tools rather than on comparing test design strategies (such as whether shorter test cases are better).

We designed a single test case with a typical length, as many real projects would have. It’s important to highlight that we designed the test to include different types of elements in the UI to interact with (filling inputs, clicking buttons, validating texts, etc). Then, we automated the same test case in each tool. To make our comparison fair and to reduce the impact of outliers, we decided to run the test 10 times in each tool.
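The repeated-run approach can be sketched as a small timing harness. This is only an illustration under stated assumptions: the article does not describe how durations were collected (the tools may simply report them), and `placeholder_test` is a hypothetical stand-in for whatever triggers a real run in a given tool.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_runs(run_test: Callable[[], None], repetitions: int = 10) -> List[float]:
    """Call run_test `repetitions` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        run_test()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float]) -> Dict[str, float]:
    """Summary statistics that show the spread of the runs, not just the average."""
    return {
        "runs": len(durations),
        "mean": statistics.mean(durations),
        "median": statistics.median(durations),
        "stdev": statistics.stdev(durations) if len(durations) > 1 else 0.0,
        "min": min(durations),
        "max": max(durations),
    }


def placeholder_test() -> None:
    # Hypothetical stand-in for starting a test run and waiting for it to finish.
    time.sleep(0.01)


if __name__ == "__main__":
    print(summarize(time_runs(placeholder_test)))
```

Reporting the median and the spread next to the mean makes it easier to spot a single slow run that would otherwise skew the average.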

To reduce variables, we always ran the tests with Google Chrome, even though most of the tools offer running the test in different browsers (but we were not comparing the browser’s performance this time).

We researched different demo sites to use as the system under test and decided to use Demoblaze (provided by Blazemeter as a training site for their performance testing tool). It’s not managed by any of the companies we were evaluating, it’s stable and fast (so there is less risk that it affects the results of our analysis), it’s similar to a real e-commerce site and has enough complexity for our goal.

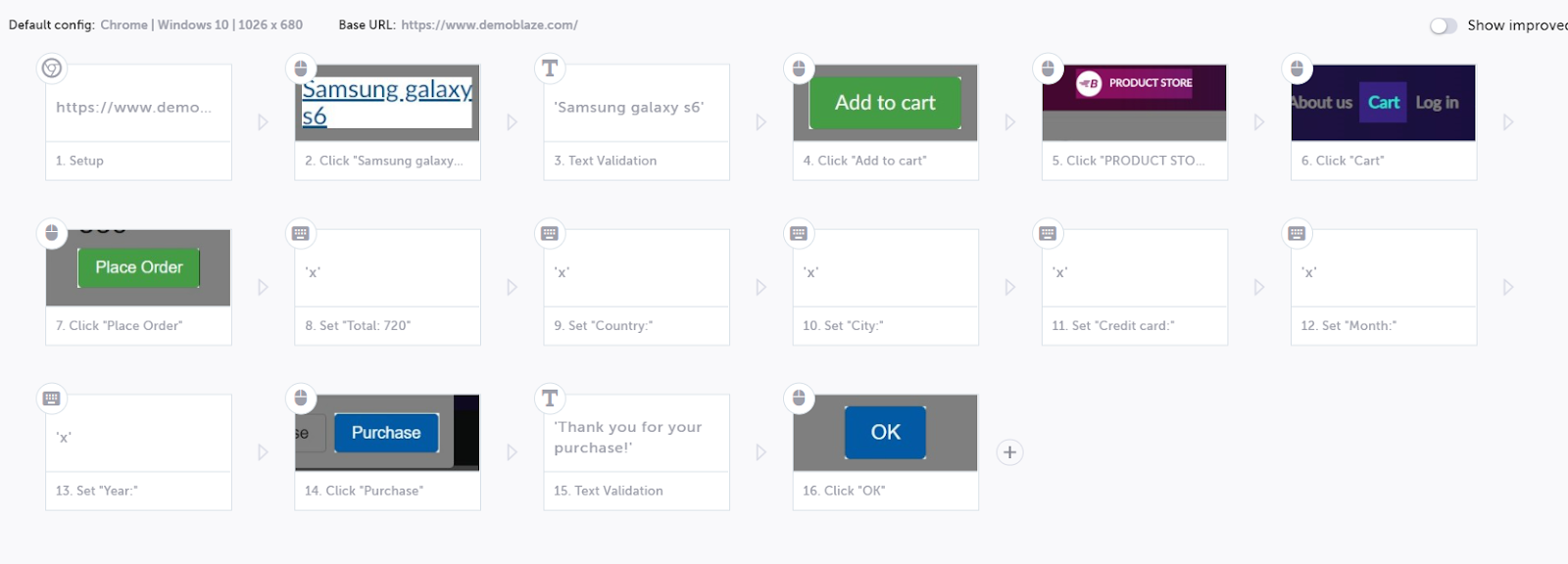

The test case was defined following these steps:

- Go to Demoblaze

- Click the title of the first element of the list (Samsung galaxy s6)

- Verify that the title of the product is "Samsung galaxy s6"

- Click the button "Add to cart"

- Click "Ok" on the popup saying that the product was added

- Click "PRODUCT STORE" in order to go back to the homepage

- Click the menu "Cart" at the top right

- Click the button "Place Order"

- Fill each of the x fields with an "x"

- Click the button "Purchase"

- Validate the message "Thank you for your purchase!"

- Click the button "OK"

In the following screenshots, you can see how the test script is represented in some of the tools we are comparing.

Testim with a very graphical approach:

TestProject with a very simple script-like style:

It’s important to mention that in order to automate the test with Functionize we had to find a workaround, using JavaScript to locate some elements that the tool could not find by itself.

So, in summary:

- Comparison between Testim, Mabl, TestProject, and Functionize.

- A single test case that includes different types of actions (validations, filling inputs, clicking buttons). Site under test: Demoblaze.

- Local executions were always run on the same laptop (for all the tools that provide local execution).

- All tests were run with Google Chrome.

- We ran the test case 10 times in each configuration to reduce the impact of outliers.

Benchmark Results: Conclusions and Observations

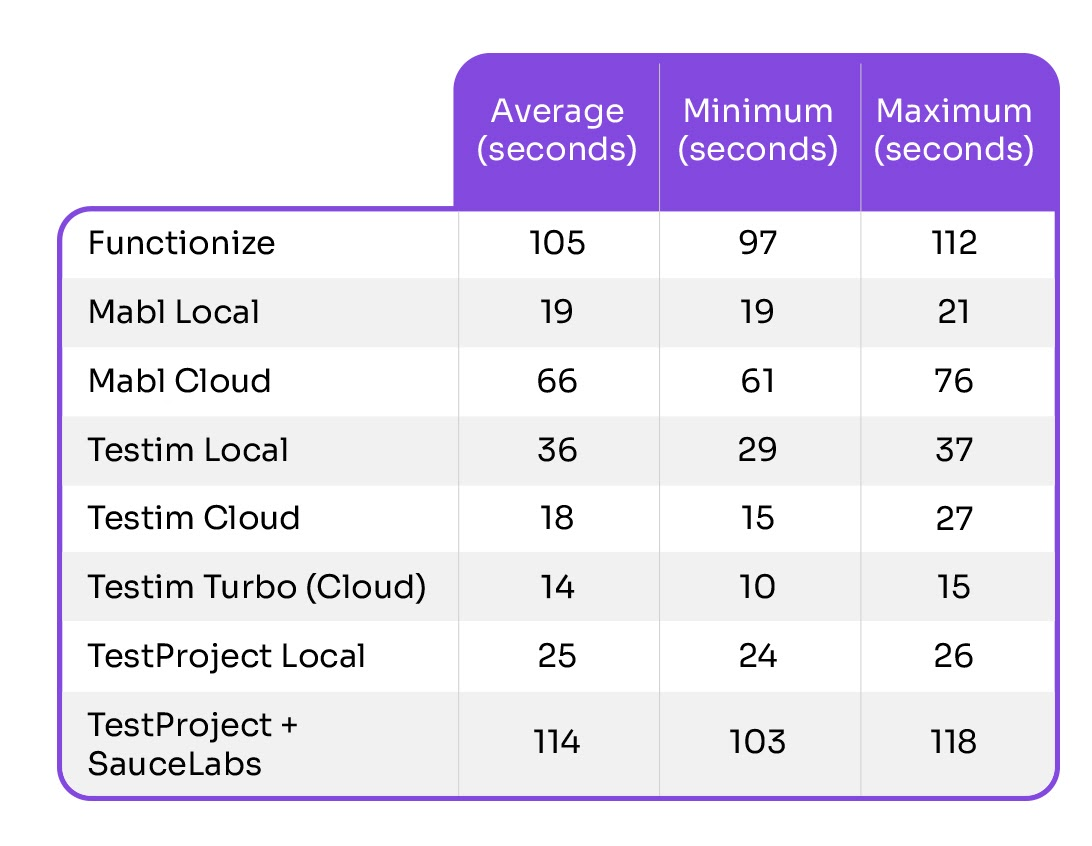

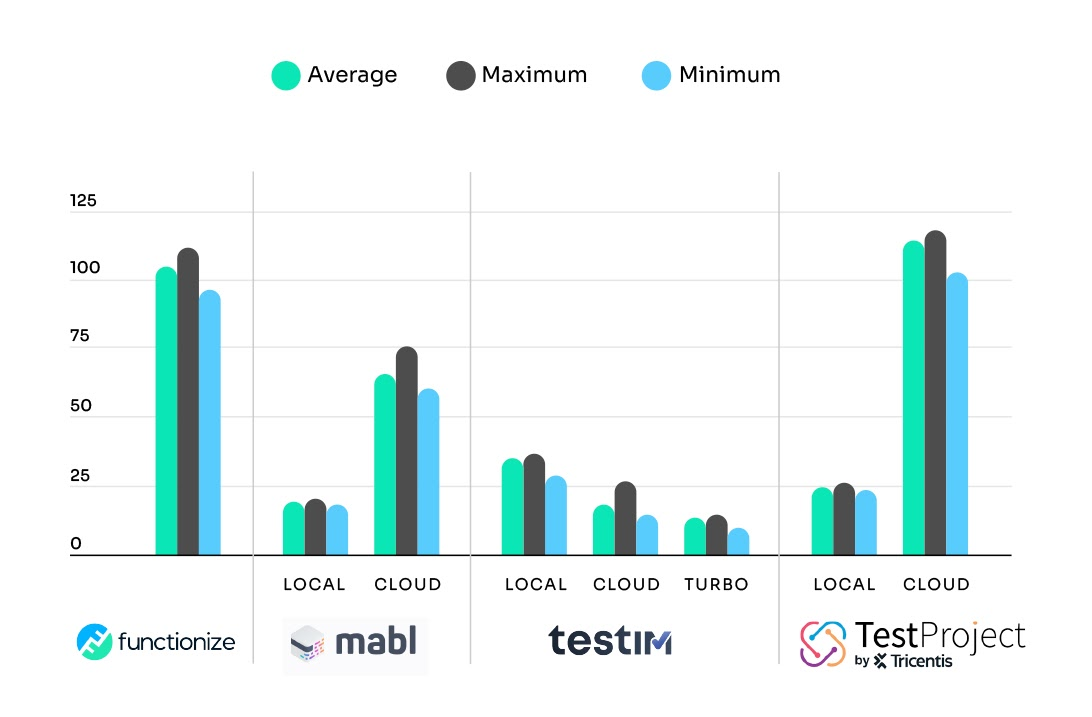

The following table and graphs summarize the results. We ran a statistical analysis to verify that the datasets were significantly different. If you want to learn more about why you should not plainly compare averages, read this article.
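As an illustration of checking whether two sets of run times differ by more than chance would explain, here is a minimal sketch of a two-sided permutation test on the difference of means. The article does not say which statistical test was used, so this technique is an assumption swapped in for the example, and the sample durations are hypothetical, not the benchmark's data.

```python
import random
import statistics
from typing import Sequence


def permutation_test(a: Sequence[float], b: Sequence[float],
                     n_permutations: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference of means; returns a p-value."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_permutations):
        # Reassign the labels at random and recompute the difference of means.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical durations in seconds for two tools, 10 runs each.
tool_a = [30.1, 29.8, 31.0, 30.5, 29.9, 30.7, 30.2, 30.4, 29.6, 30.8]
tool_b = [41.2, 40.5, 42.1, 39.9, 41.7, 40.8, 41.0, 42.4, 40.2, 41.5]

if __name__ == "__main__":
    p_value = permutation_test(tool_a, tool_b)
    print(f"p-value: {p_value:.4f}")
```

A small p-value suggests that the gap between the two averages is unlikely to come from run-to-run noise alone. With only 10 runs per tool, a check of this kind is more informative than comparing the averages directly.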

Observation 1

In our experiment, Testim turbo was (on average) 27% faster than the standard Testim execution. That means that we could not refute what they claimed in their article.

The same information is easier to analyze and compare in a graph like the one that follows:

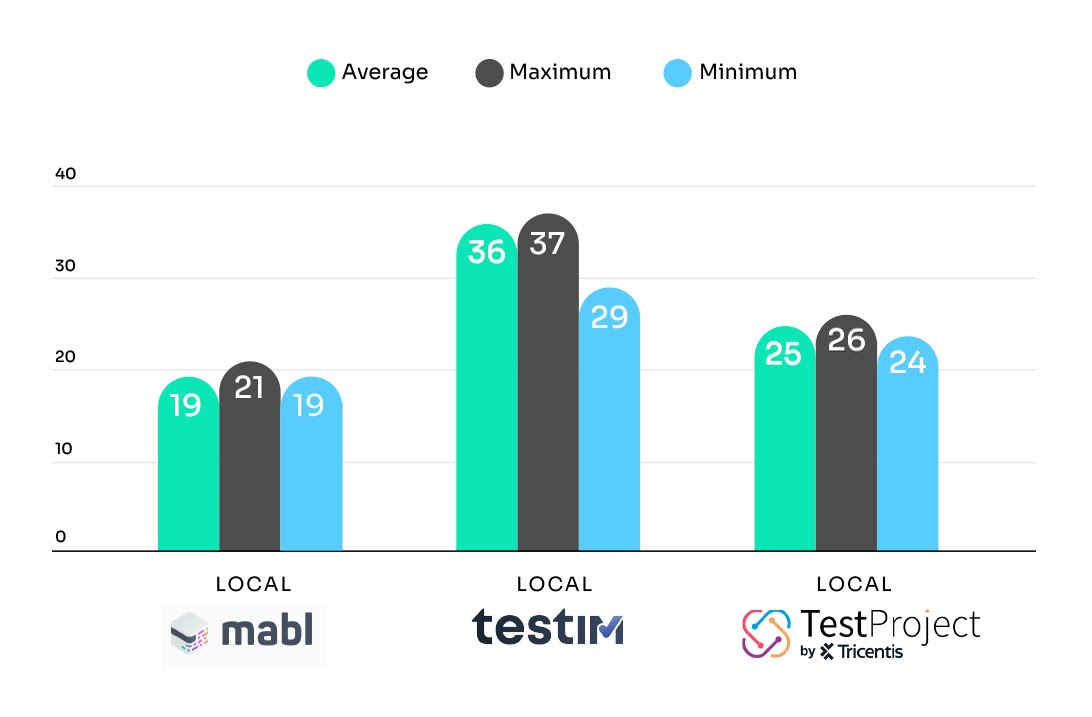

Let’s compare only the local executions:

Even though the most important result is the time it takes to run in the cloud, because that is where our pipelines will run the tests, we also wanted to compare local execution times, since they affect the time we spend debugging test scripts.

Observation 2

Mabl was the fastest tool when running locally, with an average time 22% faster than the next fastest tool.

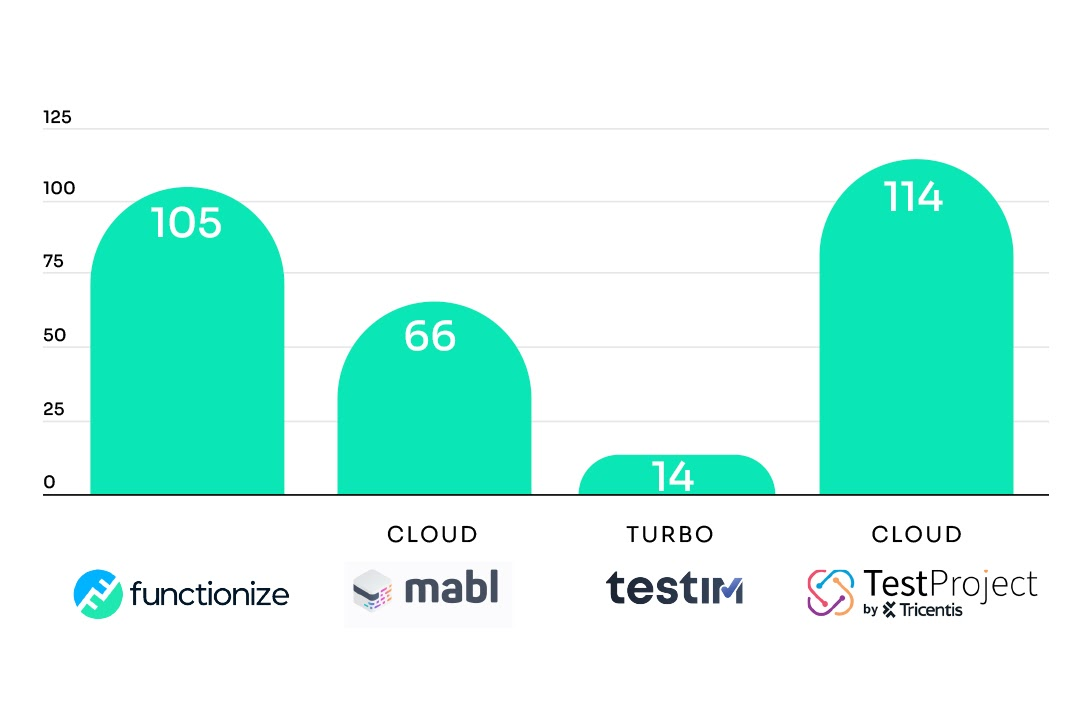

To make it simple to compare the different tools on the cloud, let’s pay attention only to the average of the executions of the experiment:

Observation 3

Testim was the fastest in cloud execution. On average, Testim was between 79% and 87% faster than the other tools when executing the same single test case. Put another way, in the time it takes to run the test case once with Mabl (the second fastest tool in this experiment), you could run the same test almost 5 times with Testim. Also, Mabl was almost twice as fast as TestProject and Functionize.
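The link between "79% faster" and "almost 5 times" can be checked with a short calculation. This sketch assumes that "X% faster" means the average execution time is X% lower than the other tool's, which is the reading consistent with the figures above. The function names are made up for this example.

```python
def percent_faster(baseline_seconds: float, candidate_seconds: float) -> float:
    """Percentage of the baseline's execution time that the candidate saves."""
    return (baseline_seconds - candidate_seconds) / baseline_seconds * 100


def runs_per_baseline_run(percent: float) -> float:
    """How many candidate runs fit in the time of one baseline run."""
    return 1 / (1 - percent / 100)


print(round(runs_per_baseline_run(79), 2))  # 4.76, i.e. almost 5 runs
print(round(runs_per_baseline_run(87), 2))  # 7.69
```

Under that reading, a tool that needs 21% of another tool's time completes between 4 and 5 runs in the time the slower tool completes one.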

We didn’t measure how latency could affect the results. Each tool either has its own cloud or can be connected to a platform like Sauce Labs or BrowserStack.

Some additional observations:

- It is very easy to learn how to automate with a low code tool, especially once you have already learned to use one of them. We were impressed that we were able to transfer our knowledge of one low code automation tool to another. For instance, we were able to sign up, record a test case, and execute it with Mabl without any additional tool-specific learning. Even though we have experience with Selenium, it would have taken us at least a couple of hours to set up the environment, prepare the framework, and run the tests, let alone configure it to run in a grid, produce nice-looking test reports, get the solution ready to integrate into a CI/CD environment, etc.

- In most of the tools, you don’t really lose flexibility compared to coded solutions like Selenium because there is typically a mechanism to inject code if the situation requires it. For example, we added some JavaScript to the Functionize test to automate some actions that the tool could not perform on its own at the time.

- While it is true that someone without coding skills can be very good at automating test cases with these tools, having coding experience helps improve the test architecture, including driving good practices for modularization, versioning, etc.

Acknowledgments

As a final note, I (Federico Toledo) could not have written this article without the help of Juan Pablo Rodríguez and Federico Martínez, from Abstracta. They prepared most of the scripts and gathered the results.

I hope you enjoyed this article and found it useful!

Published at DZone with permission of Federico Toledo, DZone MVB. See the original article here.
