Logging Docker Containers With AWS CloudWatch

This post describes how to set up the integration between Docker and AWS and then establish a pipeline of logs from CloudWatch into the ELK Stack.


One of the ways to log Docker containers is to use the logging drivers added by Docker last year. These drivers send the stdout and stderr output of a Docker container to a destination of your choice, depending on which driver you use, and let you build a centralized log management system. By default, Docker uses the json-file driver, which saves container logs to a JSON file.

The awslogs driver sends your container logs to AWS CloudWatch. This is useful if you already use other AWS services and want to store and access the log data in the cloud. Once the logs are in CloudWatch, you can connect them to an external logging system for monitoring and analysis.

This post describes how to set up the integration between Docker and AWS. It then establishes a pipeline of logs from CloudWatch into the ELK Stack (Elasticsearch, Logstash, and Kibana) offered by Logz.io.

Setting Up AWS

Before you touch Docker, make sure that AWS is configured correctly. You need two things:

- a user with an attached policy that allows writing events to CloudWatch
- a new log group and log stream in CloudWatch

Creating an AWS User

First, create a new user in the IAM console (or select an existing one). Make note of the user's security credentials, the access key ID and the secret access key. You need them to configure the Docker daemon in the next step. If this is a new user, simply add a new access key.

Second, define a new policy and attach it to that user. In the same IAM console, select Policies from the menu on the left. Then create the following policy, which allows writing logs to CloudWatch:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
```

Save the policy and attach it to the user.
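You may prefer to generate the policy rather than type it, for example to keep it in version control or paste it into the IAM console's JSON editor. Here is a minimal Python sketch that emits the same policy with IAM's standard element capitalization. The helper name `awslogs_policy` is illustrative and is not part of any AWS tool.

```python
import json

# The two actions granted in the policy above: the awslogs driver creates
# the stream (if needed) and then puts log events into it.
AWSLOGS_ACTIONS = ["logs:CreateLogStream", "logs:PutLogEvents"]


def awslogs_policy(resource: str = "*") -> dict:
    """Return an IAM policy document that lets the awslogs driver write logs.

    `resource` defaults to "*", as in the policy above; passing a log group
    ARN instead would narrow the grant to that group.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Action": list(AWSLOGS_ACTIONS),
                "Effect": "Allow",
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    # Print pretty JSON suitable for the IAM console's JSON policy editor.
    print(json.dumps(awslogs_policy(), indent=2))
```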

preparing cloudwatch

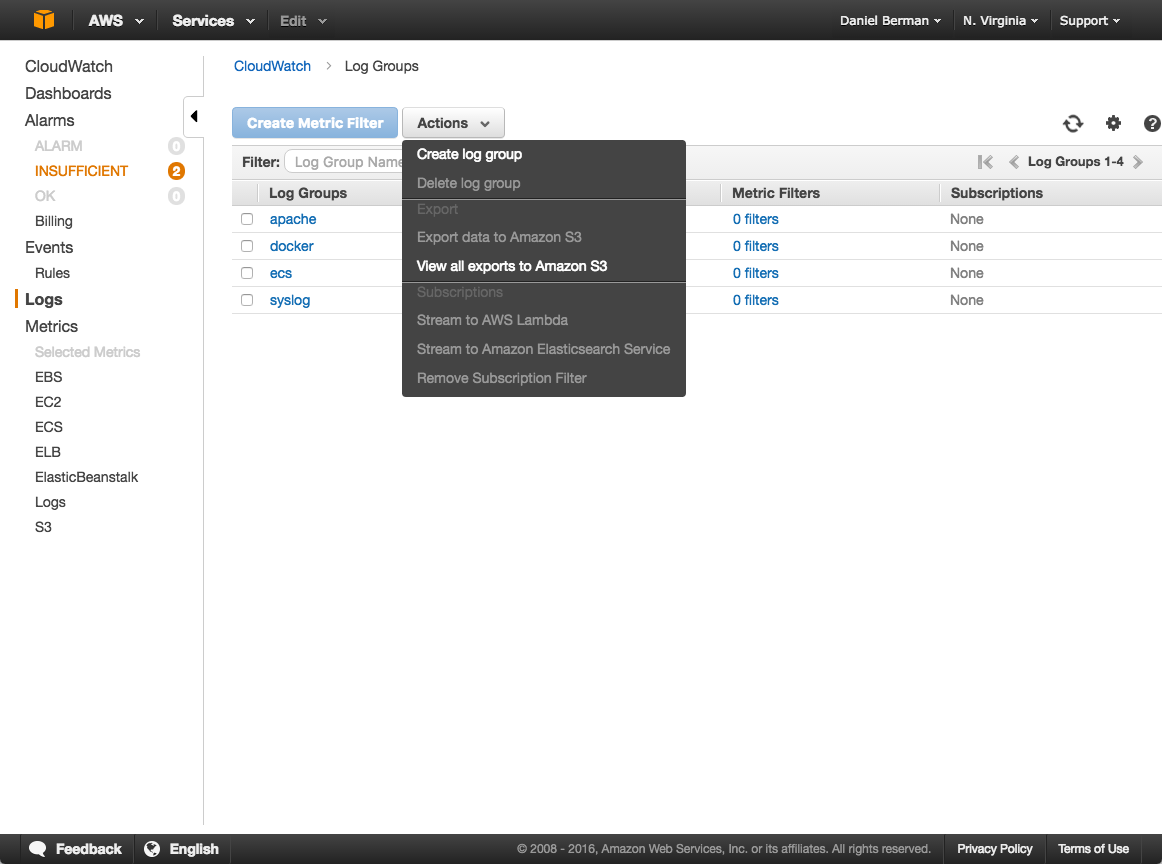

open the cloudwatch console, select logs from the menu on the left, and then open the actions menu to create a new log group:

within this new log group, create a new log stream. make note of both the log group and log stream names — you will use them when running the container.
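The group and stream names are typed again later, on the docker run command line or in a Compose file, so a typo is easy to make. The Python sketch below checks names against constraints assumed from the CloudWatch Logs API reference for CreateLogGroup and CreateLogStream:

- both names are 1 to 512 characters long
- group names use only letters, digits, `_`, `-`, `/`, `.` and `#`
- stream names may not contain `:` or `*`

Treat these limits as an assumption and confirm them against the current AWS documentation. The function names are hypothetical.

```python
import re

# Assumed constraints (check the current CloudWatch Logs API reference):
#   log group:  1-512 characters from [A-Za-z0-9._/#-]
#   log stream: 1-512 characters, excluding ':' and '*'
_GROUP_RE = re.compile(r"[A-Za-z0-9._/#-]{1,512}")
_STREAM_RE = re.compile(r"[^:*]{1,512}")


def is_valid_log_group(name: str) -> bool:
    """True if the whole name matches the assumed log group pattern."""
    return _GROUP_RE.fullmatch(name) is not None


def is_valid_log_stream(name: str) -> bool:
    """True if the whole name matches the assumed log stream pattern."""
    return _STREAM_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for group, stream in [("log-group", "log-stream"), ("log group", "a:b")]:
        print(group, is_valid_log_group(group), stream, is_valid_log_stream(stream))
```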

Configuring Docker

The next step is to configure the Docker daemon (not the Docker client) to use your AWS user credentials.

The Docker documentation describes several ways to do this. Examples include shared credentials in ~/.aws/credentials and EC2 instance policies (if Docker is running on an AWS EC2 instance). This example uses a third option: an Upstart job.

Create a new override file for the Docker service in the /etc/init folder:

```shell
$ sudo vim /etc/init/docker.override
```

Define your AWS user credentials as environment variables. The variable names must be uppercase:

```shell
env AWS_ACCESS_KEY_ID=<aws_access_key_id>
env AWS_SECRET_ACCESS_KEY=<aws_secret_access_key>
```

Save the file and restart the Docker daemon:

```shell
$ sudo service docker restart
```

Note that in this case Docker is installed on an Ubuntu 14.04 machine. Later versions of Ubuntu (15.04 and newer) replace Upstart with systemd, so there you need to configure the credentials through systemd instead.
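On a systemd-based release, the usual approach is a drop-in file for docker.service. The drop-in's `[Service]` section sets `Environment=` lines for the daemon. The Python sketch below renders such a file. The file name `aws-credentials.conf` and the helper name are assumptions; any `.conf` file in `/etc/systemd/system/docker.service.d/` should be picked up.

```python
# Hypothetical drop-in location; systemd reads every *.conf file in this directory.
DROPIN_PATH = "/etc/systemd/system/docker.service.d/aws-credentials.conf"


def render_dropin(access_key_id: str, secret_access_key: str) -> str:
    """Render a systemd drop-in that passes AWS credentials to the Docker daemon.

    Assumes the keys contain no '%', '"' or '\\' characters, which systemd
    would require to be escaped; AWS keys normally do not.
    """
    lines = [
        "[Service]",
        f'Environment="AWS_ACCESS_KEY_ID={access_key_id}"',
        f'Environment="AWS_SECRET_ACCESS_KEY={secret_access_key}"',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholders only; substitute your own credentials.
    print(f"# {DROPIN_PATH}")
    print(render_dropin("<aws_access_key_id>", "<aws_secret_access_key>"), end="")
```

Write the output to that path as root. Then run `sudo systemctl daemon-reload` followed by `sudo systemctl restart docker` so the daemon starts with the new environment.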

Using the awslogs Driver

Now that Docker has permission to write to CloudWatch, it's time to test the first leg of the logging pipeline.

Use the docker run command with the awslogs driver options:

```shell
$ docker run -it --log-driver="awslogs" --log-opt awslogs-region="us-east-1" --log-opt awslogs-group="log-group" --log-opt awslogs-stream="log-stream" ubuntu:14.04 bash
```

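If you use Docker Compose, the equivalent configuration in a version 2 Compose file is:

```yaml
version: "2"
services:
  web:
    image: ubuntu:14.04
    logging:
      driver: "awslogs"
      options:
        awslogs-region: "us-east-1"
        awslogs-group: "log-group"
        awslogs-stream: "log-stream"
```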
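You can also script this smoke test. The same driver options can be assembled into an argument list for Python's `subprocess` module. This minimal sketch uses a hypothetical helper name. It drops `-it` and runs a one-shot `echo`, so a single recognizable line should land in the log stream.

```python
import shlex


def awslogs_run_args(image, region, group, stream, command=()):
    """Build a `docker run` argument list that sends output to CloudWatch."""
    log_opts = {
        "awslogs-region": region,
        "awslogs-group": group,
        "awslogs-stream": stream,
    }
    args = ["docker", "run", "--log-driver=awslogs"]
    # Each driver option becomes its own --log-opt key=value pair.
    for key, value in log_opts.items():
        args += ["--log-opt", f"{key}={value}"]
    return args + [image, *command]


if __name__ == "__main__":
    argv = awslogs_run_args(
        "ubuntu:14.04", "us-east-1", "log-group", "log-stream",
        ["echo", "hello from awslogs"],
    )
    # Show the equivalent shell command; subprocess.run(argv, check=True)
    # would actually start the container.
    print(shlex.join(argv))
```

Passing a list instead of a single string avoids shell quoting issues. This is also why the option values need no extra quotes here.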

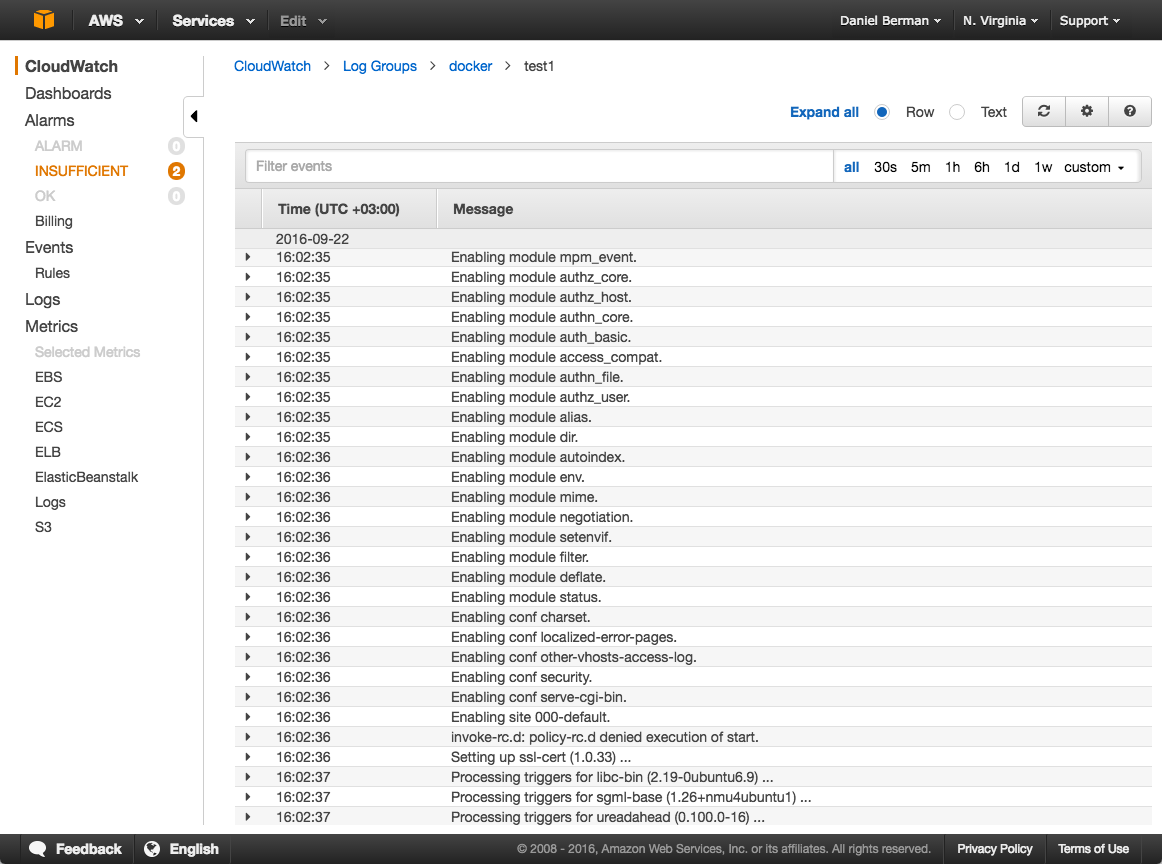

open up the log stream in cloudwatch. you should see container logs:

Shipping to ELK for Analysis

So, you've got your container logs in CloudWatch. What now? If you need monitoring and analysis, the next logical step is to ship the data into a centralized logging system.

The next section describes how to set up a pipeline of logs into the Logz.io AI-powered ELK Stack using S3 batch export. AWS imposes two requirements here:

- The Amazon S3 bucket must reside in the same region as the log data that you want to export.

- Your AWS user must have permission to access the S3 bucket.
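The user's permissions are not the whole story. The export itself is performed by the CloudWatch Logs service, so the destination bucket also needs a bucket policy. That policy must let the service read the bucket ACL and write objects.

The sketch below generates such a policy in Python. It is modeled on the example in AWS's CloudWatch Logs export documentation. Check the statements against the current docs, which may recommend additional conditions. The service principal is region-specific, which ties in with the same-region requirement above. The function name and the `docker-logs` prefix are hypothetical.

```python
import json


def export_bucket_policy(bucket: str, region: str, prefix: str = "") -> dict:
    """Bucket policy letting CloudWatch Logs in `region` export into `bucket`."""
    # CloudWatch Logs acts through a regional service principal.
    principal = {"Service": f"logs.{region}.amazonaws.com"}
    key_prefix = prefix.strip("/")
    objects = (f"arn:aws:s3:::{bucket}/{key_prefix}/*" if key_prefix
               else f"arn:aws:s3:::{bucket}/*")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allows the service to read the bucket ACL.
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Allows the service to write export objects under the prefix,
                # provided each object grants the bucket owner full control.
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:PutObject",
                "Resource": objects,
                "Condition": {
                    "StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}
                },
            },
        ],
    }


if __name__ == "__main__":
    policy = export_bucket_policy("dockertest", "us-east-1", "docker-logs")
    print(json.dumps(policy, indent=2))
```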

exporting to s3

cloudwatch supports batch export to s3 , which in this context means that you can export batches of archived docker logs to an s3 bucket for further ingestion and analysis in other systems.

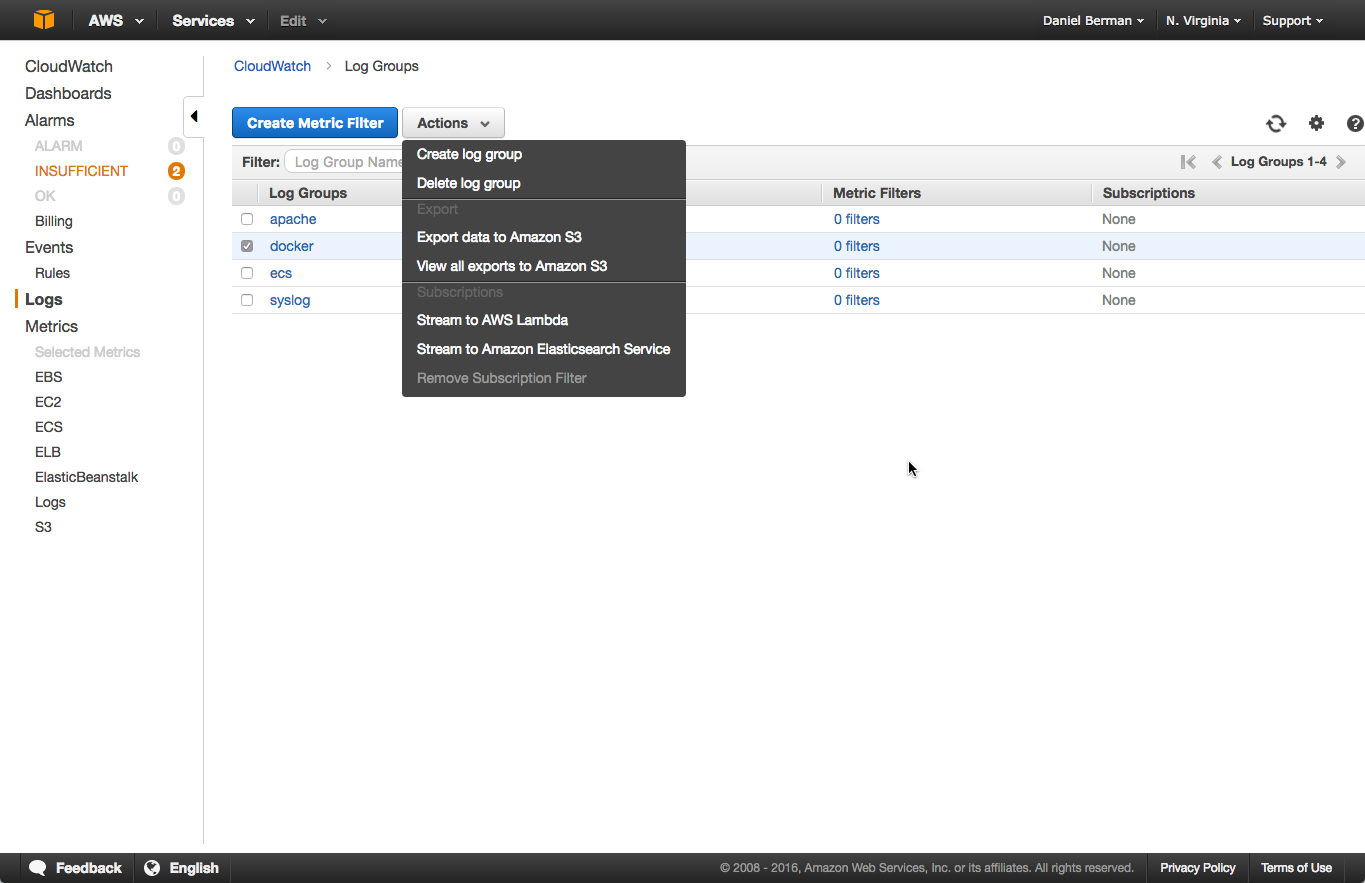

to export the docker logs to s3, open the logs page in cloudwatch. then, select the log group you wish to export, click the actions menu, and select export data to amazon s3 :

in the dialog that is displayed, configure the export by selecting a time frame and an s3 bucket to which to export. click export data when you’re done, and the logs will be exported to s3.
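The console dialog is the simplest route. The same export can also be started from the AWS CLI with `aws logs create-export-task`, whose `--from` and `--to` options take timestamps in milliseconds since the Unix epoch.

The Python sketch below computes such a window (here, the last 24 hours) and builds the command line. The bucket name `dockertest`, the `docker-logs` prefix, and the helper names are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_ms(dt: datetime) -> int:
    """Convert a timezone-aware datetime to whole milliseconds since the epoch."""
    return (dt - EPOCH) // timedelta(milliseconds=1)


def export_task_args(log_group, bucket, start, end, prefix="docker-logs"):
    """Build an `aws logs create-export-task` command for [start, end)."""
    if end <= start:
        raise ValueError("end must be after start")
    return [
        "aws", "logs", "create-export-task",
        "--log-group-name", log_group,
        "--from", str(to_epoch_ms(start)),
        "--to", str(to_epoch_ms(end)),
        "--destination", bucket,
        "--destination-prefix", prefix,
    ]


if __name__ == "__main__":
    end = datetime.now(timezone.utc)
    start = end - timedelta(hours=24)
    print(" ".join(export_task_args("log-group", "dockertest", start, end)))
```

Converting with an integer division of `timedelta` objects keeps the result exact. Multiplying a float `timestamp()` by 1000 would not.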

importing into logz.io

setting up the integration with logz.io is easy. in the user interface, go to log shipping → aws → s3 bucket.

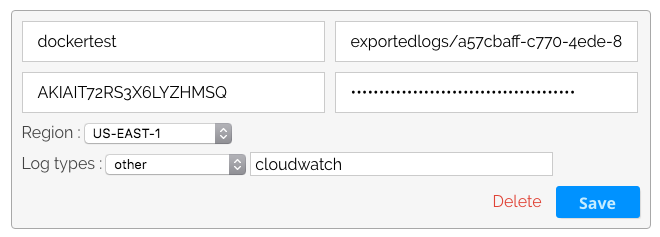

click “add s3 bucket,” and configure the settings for the s3 bucket containing the docker logs with the name of the bucket, the prefix path to the bucket (excluding the name of the bucket), the aws access key details (id + secret), the aws region, and the type of logs:

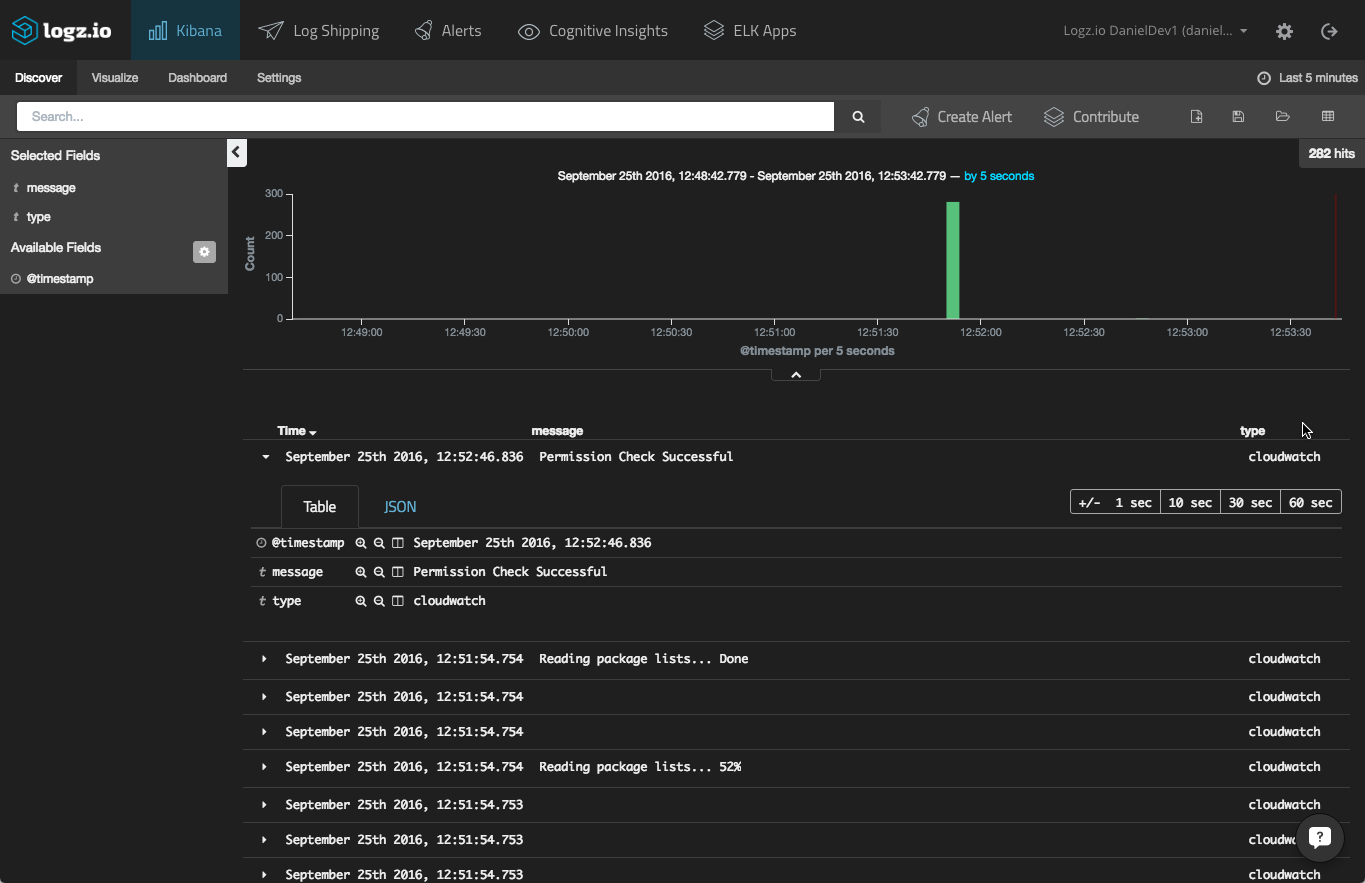
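You may keep the export destination as a single S3 URI. Splitting it into the bucket and prefix fields that the form expects takes only the standard library. Here is a small sketch, assuming a URI of the form `s3://bucket/prefix/`. The function name and the example bucket are hypothetical.

```python
from typing import Tuple
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> Tuple[str, str]:
    """Split 's3://bucket/some/prefix/' into ('bucket', 'some/prefix/')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an s3:// URI: {uri!r}")
    # The netloc is the bucket; the path minus its leading '/' is the prefix.
    return parsed.netloc, parsed.path.lstrip("/")


if __name__ == "__main__":
    bucket, prefix = split_s3_uri("s3://dockertest/docker-logs/")
    print("bucket:", bucket)
    print("prefix:", prefix)
```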

Hit the “Save” button, and your S3 bucket is configured. Head over to the Visualize tab in the user interface. After a moment or two, the container logs should be displayed.

Using Logstash

If you run your own ELK Stack, you can configure Logstash to import and parse the S3 logs using the S3 input plugin.

An example configuration looks something like this:

```
input {
  s3 {
    bucket => "dockertest"
    access_key_id => "my-aws-key"
    secret_access_key => "my-aws-secret"
    region => "us-east-1"
    # keep track of the last processed file
    sincedb_path => "./last-s3-file"
    codec => "json"
    type => "cloudwatch"
  }
}

filter {}

output {
  elasticsearch {
    hosts => ["server-ip:9200"]
  }
}
```

A Final Note

There are other methods of pulling the data from CloudWatch into S3, such as using Kinesis and Lambda. If you're looking for an automated process, that may be the better option to explore. I will cover it in the next post on the subject.

Also, if you're looking for a more comprehensive solution for logging Docker environments with ELK, I recommend reading about the Logz.io Docker log collector.

Published at DZone with permission of Daniel Berman, DZone MVB. See the original article here.
