LocalStack in Action (Part 1)

An introduction to LocalStack, showing how to run AWS applications or lambdas on your local machine without connecting to a remote cloud provider.


Provisioning AWS resources for your applications or organization can be complex. You have to create the AWS infrastructure (SQS, SNS, Lambda, S3, ...) with a fine-grained permissions model and then integrate it with your applications. It takes time before you can test that the whole flow works as expected.

In this article, I will introduce you to LocalStack, a cloud service emulator that runs in a single container on your laptop or in your CI environment. With LocalStack, you can run your AWS applications or lambdas entirely on your local machine without connecting to a remote cloud provider.

Prerequisites

- Docker

- Docker Compose binary

- AWS CLI

Starting and Managing LocalStack

You can set up LocalStack in different ways. You can install its command-line tool, which starts a Docker container and sets up all the infrastructure needed, or you can use Docker or Docker Compose directly.

In our lab, we will use Docker Compose to launch LocalStack, with the Docker Compose file that comes with the lab. I chose Docker because I don't need to install any additional binary on my machine, and I can launch the container and drop it at any time.

LocalStack With Docker and AWS CLI

You can start from the official Docker Compose file in the LocalStack repository. Remove the ports that are only required for the Pro version, since we are using the free version of LocalStack.

The minimalistic docker-compose.yaml can be found here. To launch the service, execute the following command (the -d parameter runs it in the background):

docker compose -f docker/docker-compose.yaml up -d

As you might notice, I am mounting my Docker daemon socket into the LocalStack container. I do this because LocalStack can run lambda functions in three modes, and the two relevant to this lab are local mode and docker mode. The first is local mode, in which LocalStack executes your lambda inside the LocalStack container itself, so all functions share the same environment. The advantage of this mode is that execution is faster than in the other modes.

The second is docker mode. Here, each lambda invocation spins up a new container in which it is executed, so every invocation gets a new, clean environment. In local mode, by contrast, all lambdas share the same environment. This advantage comes at a cost: execution takes longer than in local mode. Docker mode also requires the Docker socket to be mounted into the LocalStack container so that LocalStack can launch other containers.
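Docker mode only works when the Docker socket is reachable, so a quick check before starting the lab can save some debugging. The sketch below assumes the default socket path /var/run/docker.sock; adjust it if your compose file mounts a different path. It tries to connect to the socket with Python's standard socket module and reports whether that succeeded.

```python
import socket

# Assumption: the default Docker daemon socket path on Linux/macOS hosts.
DOCKER_SOCKET = "/var/run/docker.sock"


def docker_socket_available(path: str = DOCKER_SOCKET, timeout: float = 1.0) -> bool:
    """Return True if we can connect to the Docker daemon socket at `path`.

    LocalStack's docker mode needs this socket mounted into its container;
    without it, LocalStack cannot start a container per lambda invocation.
    """
    if not hasattr(socket, "AF_UNIX"):
        # Platforms without Unix domain sockets cannot use this check.
        return False
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.connect(path)
        except OSError:
            # Missing file, permission denied, timeout, or no daemon listening.
            return False
    return True


if __name__ == "__main__":
    print("Docker socket reachable:", docker_socket_available())
```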

LocalStack Lab

Scenario

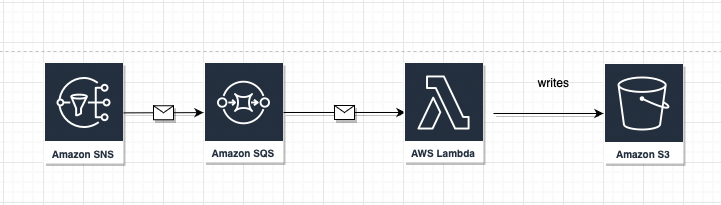

In this lab, we will create an SNS topic with an SQS queue subscribed to it. A lambda function listens on that queue, processes each message, and uploads it to an S3 bucket. As a first option, the lab uses the AWS CLI to provision the infrastructure.

Setting up the AWS Configuration for LocalStack

We already launched the LocalStack container. You can check that the container is up and running using the following commands:

docker container ls

docker container logs localstack

...

2022-10-30T19:01:14.628 WARN --- [-functhread5] hypercorn.error : ASGI Framework Lifespan error, continuing without Lifespan support

2022-10-30T19:01:14.628 WARN --- [-functhread5] hypercorn.error : ASGI Framework Lifespan error, continuing without Lifespan support

2022-10-30 19:01:14,629 INFO success: infra entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2022-10-30T19:01:14.630 INFO --- [-functhread5] hypercorn.error : Running on https://0.0.0.0:4566 (CTRL + C to quit)

2022-10-30T19:01:14.630 INFO --- [-functhread5] hypercorn.error : Running on https://0.0.0.0:4566 (CTRL + C to quit)

Ready. <- the container is ready to accept requests

By default, the AWS CLI sends each command to the public endpoint of the corresponding AWS service. With LocalStack, we have to override this endpoint by passing the --endpoint-url parameter every time we execute an AWS command. To make life easier, I will create an alias for interacting with the AWS CLI:

alias awsls='aws --endpoint-url http://localhost:4566'

I am also setting AWS_PAGER to an empty string. This disables the pager, so the command output is printed directly to the terminal:

export AWS_PAGER=""

We can check our configuration by listing the topics:

awsls sns list-topics

You should see this output:

{
  "Topics": []
}

Creating Lab Components

In this section, we will create our SNS topic, the S3 bucket, our lambda function, and the SQS queue that sits between the SNS topic and our lambda function.
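You may prefer to script the steps in this section instead of typing them. The awsls alias and the AWS_PAGER setting can be mirrored in Python with only the standard library. This is a minimal sketch that assumes two things: the AWS CLI is on your PATH, and LocalStack listens on http://localhost:4566. The helper names and the LOCALSTACK_ENDPOINT variable are hypothetical and are not part of the lab.

```python
import json
import os
import subprocess
from typing import Any, List

# Assumption: LocalStack's edge port is published on localhost:4566, as in the awsls alias.
# LOCALSTACK_ENDPOINT is a hypothetical variable name used only by this sketch.
DEFAULT_ENDPOINT = os.environ.get("LOCALSTACK_ENDPOINT", "http://localhost:4566")


def awsls_argv(*args: str, endpoint: str = DEFAULT_ENDPOINT) -> List[str]:
    """Return the argv that `awsls <args>` expands to."""
    return ["aws", "--endpoint-url", endpoint, *args]


def awsls(*args: str, endpoint: str = DEFAULT_ENDPOINT) -> str:
    """Run an AWS CLI command against LocalStack and return its stdout as text."""
    env = dict(os.environ, AWS_PAGER="")  # same effect as `export AWS_PAGER=""`
    result = subprocess.run(
        awsls_argv(*args, endpoint=endpoint),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the CLI exits with an error
        env=env,
    )
    return result.stdout


def awsls_json(*args: str, endpoint: str = DEFAULT_ENDPOINT) -> Any:
    """Like awsls(), but request JSON output and parse it."""
    return json.loads(awsls(*args, "--output", "json", endpoint=endpoint))


if __name__ == "__main__":
    topics = awsls_json("sns", "list-topics")
    print([topic["TopicArn"] for topic in topics["Topics"]])
```

Commands that print plain text, such as s3 mb, should go through awsls() rather than awsls_json().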

1. Creating the SNS Topic

We will start by creating the SNS topic with the following command:

awsls sns create-topic --name "localstack-lab-sns.fifo" --attributes FifoTopic=true,ContentBasedDeduplication=false

Now, if you list the topics in LocalStack again with the previous command:

awsls sns list-topics

You should see your created SNS topic:

{
  "Topics": [
    {
      "TopicArn": "arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo"
    }
  ]
}

2. Creating the SQS Queue

Now that the SNS topic is created, we can create the SQS queue. I will start by creating the dead-letter queue (DLQ) that will back our SQS queue:

awsls sqs create-queue --queue-name localstack-lab-sqs-dlq.fifo --attributes FifoQueue=true,ContentBasedDeduplication=false,DelaySeconds=0

After executing the SQS queue creation command, you will see something similar to this:

{
  "QueueUrl": "http://localhost:4566/000000000000/localstack-lab-sqs-dlq.fifo"
}

The main SQS queue will also be a FIFO queue. A FIFO queue does not deliver duplicate messages (messages with the same deduplication ID) within a five-minute deduplication interval. It also preserves the order of messages within the same message group.
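The toy model below makes the deduplication rule concrete. It is plain Python and is not how SQS is implemented. It records when each deduplication ID was first delivered and drops any message that reuses that ID within the five-minute interval. According to the SQS documentation, such duplicates are accepted by the API but not delivered.

```python
from typing import Dict

# FIFO queues (and FIFO topics) use a 5-minute deduplication interval.
DEDUP_INTERVAL_SECONDS = 5 * 60


class FifoDedupModel:
    """Toy, in-memory model of FIFO deduplication by message deduplication ID."""

    def __init__(self, interval: float = DEDUP_INTERVAL_SECONDS) -> None:
        self.interval = interval
        # dedup ID -> time (seconds) at which a message with that ID was delivered
        self._delivered_at: Dict[str, float] = {}

    def offer(self, dedup_id: str, now: float) -> bool:
        """Return True if a message sent at `now` is delivered, False if it is dropped."""
        seen_at = self._delivered_at.get(dedup_id)
        if seen_at is not None and now - seen_at < self.interval:
            # Duplicate inside the interval: accepted by the API, but not delivered.
            # The interval is not extended by the duplicate.
            return False
        self._delivered_at[dedup_id] = now  # a new interval starts with this delivery
        return True


if __name__ == "__main__":
    model = FifoDedupModel()
    print(model.offer("1111", now=0))    # first message is delivered
    print(model.offer("1111", now=60))   # same ID one minute later is dropped
    print(model.offer("1111", now=301))  # after five minutes, the ID can be reused
```

With ContentBasedDeduplication set to false, each publish therefore needs its own deduplication ID. Otherwise, repeated messages sent within five minutes never reach the queue.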

We create the main queue with the attributes stored in code/queue-attributes.json:

awsls sqs create-queue --queue-name localstack-lab-sqs.fifo --attributes file://code/queue-attributes.json

The output should look like this:

{
  "QueueUrl": "http://localhost:4566/000000000000/localstack-lab-sqs.fifo"
}
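The article does not show the contents of code/queue-attributes.json. When we query the queue in the next step, it reports a VisibilityTimeout of 900 and a RedrivePolicy that points at the DLQ with a maxReceiveCount of 3. Judging from that, a file along the following lines would produce an equivalent queue. The sketch is an assumption, not the lab's actual file. It builds the DLQ ARN from LocalStack's default account ID and the eu-central-1 region seen in the outputs, then writes the attributes as JSON. The DLQ had to be created first because RedrivePolicy references its ARN, and a FIFO queue's dead-letter queue must itself be a FIFO queue.

```python
import json
from typing import Dict

# Values taken from the outputs shown in this lab; adjust them for your setup.
REGION = "eu-central-1"
ACCOUNT_ID = "000000000000"  # LocalStack's default account ID


def sqs_arn(queue_name: str, region: str = REGION, account_id: str = ACCOUNT_ID) -> str:
    """Build an SQS queue ARN: arn:aws:sqs:<region>:<account-id>:<queue-name>."""
    return f"arn:aws:sqs:{region}:{account_id}:{queue_name}"


def fifo_queue_attributes(dlq_name: str, max_receive_count: int = 3,
                          visibility_timeout: int = 900) -> Dict[str, str]:
    """Attributes for a FIFO queue that moves a message to `dlq_name`
    after it has been received `max_receive_count` times without being deleted."""
    redrive_policy = {
        "deadLetterTargetArn": sqs_arn(dlq_name),
        "maxReceiveCount": str(max_receive_count),
    }
    return {
        "FifoQueue": "true",
        "ContentBasedDeduplication": "false",
        "VisibilityTimeout": str(visibility_timeout),
        # RedrivePolicy is a JSON document encoded as a string attribute value.
        "RedrivePolicy": json.dumps(redrive_policy, separators=(",", ":")),
    }


if __name__ == "__main__":
    # Hypothetical output path; the lab itself reads code/queue-attributes.json.
    with open("queue-attributes.json", "w", encoding="utf-8") as fh:
        json.dump(fifo_queue_attributes("localstack-lab-sqs-dlq.fifo"), fh, indent=2)
```

Every attribute value is a string, and RedrivePolicy is a JSON document nested inside that string. This is why it appears with escaped quotes in the get-queue-attributes output.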

After creating the SNS topic, the SQS queue, and the SQS DLQ, we need to subscribe the queue to the SNS topic. First, we get the queue ARN:

awsls sqs get-queue-attributes --queue-url http://localhost:4566/000000000000/localstack-lab-sqs.fifo --attribute-names All

{
  "Attributes": {
    "ApproximateNumberOfMessages": "0",
    "ApproximateNumberOfMessagesNotVisible": "0",
    "ApproximateNumberOfMessagesDelayed": "0",
    "CreatedTimestamp": "1667168817",
    "DelaySeconds": "0",
    "LastModifiedTimestamp": "1667168817",
    "MaximumMessageSize": "262144",
    "MessageRetentionPeriod": "1209600",
    "QueueArn": "arn:aws:sqs:eu-central-1:000000000000:localstack-lab-sqs.fifo",
    "ReceiveMessageWaitTimeSeconds": "0",
    "VisibilityTimeout": "900",
    "SqsManagedSseEnabled": "false",
    "ContentBasedDeduplication": "false",
    "DeduplicationScope": "queue",
    "FifoThroughputLimit": "perQueue",
    "FifoQueue": "true",
    "RedrivePolicy": "{\"deadLetterTargetArn\":\"arn:aws:sqs:eu-central-1:000000000000:localstack-lab-sqs-dlq.fifo\",\"maxReceiveCount\":\"3\"}"
  }
}

After getting the SQS ARN, we can subscribe the queue to our SNS topic as follows:

awsls sns subscribe --topic-arn arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo --protocol sqs --notification-endpoint arn:aws:sqs:eu-central-1:000000000000:localstack-lab-sqs.fifo

The result you get is similar to this:

{
  "SubscriptionArn": "arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo:a3be6641-636d-4c23-affe-8aed78b26151"
}

Now we can test the subscription by publishing a message to the topic. The SQS queue should receive it:

awsls sns publish --topic-arn arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo --message-group-id='test' --message-deduplication-id='1111' --message file://code/sqs-message.json

The command returns the ID of the published message:

{
  "MessageId": "babff1a5-993f-42c2-99d5-0551c7f1083d"
}

Next, call SQS's receive-message to check whether the queue received the message from SNS:

awsls sqs receive-message --queue-url http://localhost:4566/000000000000/localstack-lab-sqs.fifo

The received messages look like this:

{
  "Messages": [
    {
      "MessageId": "689ac063-2628-485b-92f6-1c613c2bf943",
      "ReceiptHandle": "ZTU1N2IzODItOTE0ZC00MjNlLTg4OTAtOGFkOWE4OTRkYTU0IGFybjphd3M6c3FzOmV1LWNlbnRyYWwtMTowMDAwMDAwMDAwMDA6bG9jYWxzdGFjay1sYWItc3FzLmZpZm8gNjg5YWMwNjMtMjYyOC00ODViLTkyZjYtMWM2MTNjMmJmOTQzIDE2NjcxNjA1MDkuMzMxMjg3Ng==",
      "MD5OfBody": "492cc3f4db48b802af619016ca18939d",
      "Body": "{\"Type\": \"Notification\", \"MessageId\": \"3166be17-1ad2-4a92-81ea-46bfd085334e\", \"TopicArn\": \"arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo\", \"Message\": \"'{\\n \\\"id\\\": 1,\\n \\\"title\\\": \\\"iPhone 9\\\",\\n \\\"description\\\": \\\"An apple mobile which is nothing like apple\\\",\\n \\\"price\\\": 549,\\n \\\"discountPercentage\\\": 12.96,\\n \\\"rating\\\": 4.69,\\n \\\"stock\\\": 94,\\n \\\"brand\\\": \\\"Apple\\\",\\n \\\"category\\\": \\\"smartphones\\\"\\n}'\", \"Timestamp\": \"2022-10-30T20:08:11.572Z\", \"SignatureVersion\": \"1\", \"Signature\": \"EXAMPLEpH+..\", \"SigningCertURL\": \"https://sns.us-east-1.amazonaws.com/SimpleNotificationService-0000000000000000000000.pem\", \"UnsubscribeURL\": \"http://localhost:4566/?Action=Unsubscribe&SubscriptionArn=arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo:d8398760-c768-4867-b950-6035ec226c9d\"}"
    }
  ]
}

3. Creating the S3 Bucket

So far, the SNS and SQS integration is working properly: messages published to SNS are delivered to the SQS queue. But we need a lambda to process the messages and move them to an S3 bucket, so let's create the S3 bucket first:

awsls s3 mb s3://localstack-lab-bucket

The output is:

make_bucket: localstack-lab-bucket

We can list all the existing S3 buckets in LocalStack as follows:

awsls s3 ls

4. Creating the Lambda Function

The next step is to create a lambda function that uses the SQS queue as its event source and saves the messages to a file in the S3 bucket. The lab already includes a simple lambda function that is triggered whenever a new message arrives in the queue. We need to zip the script and use it to create the lambda function:

# first step, zip the function handler:

zip lambda-handler.zip lambda.py

Now we can use that zip file to create our lambda function. We pass the name of the bucket where the messages should be stored as an environment variable:

# create the lambda

awsls lambda create-function --function-name queue-reader --zip-file fileb://lambda-handler.zip --handler lambda.lambda_handler --runtime python3.8 --role arn:aws:iam::000000000000:role/fake-role-role --environment Variables={bucket_name=localstack-lab-bucket}

After executing the previous command, the output is similar to this:

{
  "FunctionName": "queue-reader",
  "FunctionArn": "arn:aws:lambda:eu-central-1:000000000000:function:queue-reader",
  "Runtime": "python3.8",
  "Role": "arn:aws:iam::000000000000:role/fake-role-role",
  "Handler": "lambda.lambda_handler",
  "CodeSize": 522,
  "Description": "",
  "Timeout": 3,
  "LastModified": "2022-10-30T22:28:28.221+0000",
  "CodeSha256": "TG2Q4/k6sbNqqvL+FphBRHXiK8qv3WnrSZaNtjc6rCY=",
  "Version": "$LATEST",
  "VpcConfig": {},
  "Environment": {
    "Variables": {
      "bucket_name": "localstack-lab-bucket"
    }
  },
  "TracingConfig": {
    "Mode": "PassThrough"
  },
  "RevisionId": "708001e0-d764-47dd-8272-881eaacf7777",
  "State": "Active",
  "LastUpdateStatus": "Successful",
  "PackageType": "Zip",
  "Architectures": [
    "x86_64"
  ]
}

Note: When creating the lambda function in the previous command, we used a fake role that we never created. We will discuss this point in the LocalStack Limitation section.

Now the lambda function exists, but it won't do anything yet because it does not use the SQS queue as its event source. To change that, we need to create a mapping between the event source and the lambda function as follows:

awsls lambda create-event-source-mapping --function-name queue-reader --batch-size 5 --maximum-batching-window-in-seconds 60 --event-source-arn arn:aws:sqs:eu-central-1:000000000000:localstack-lab-sqs.fifo

This will be shown after executing the previous command:

{
  "UUID": "81ecc36a-c054-4e7f-8018-4586f39afea3",
  "StartingPosition": "LATEST",
  "BatchSize": 5,
  "ParallelizationFactor": 1,
  "EventSourceArn": "arn:aws:sqs:eu-central-1:000000000000:localstack-lab-sqs.fifo",
  "FunctionArn": "arn:aws:lambda:eu-central-1:000000000000:function:queue-reader",
  "LastModified": "2022-10-30T21:09:31.984637+01:00",
  "LastProcessingResult": "OK",
  "State": "Enabled",
  "StateTransitionReason": "User action",
  "Topics": [],
  "MaximumRetryAttempts": -1
}

Now publish an event to the SNS topic again, and check the logs of your LocalStack container for any errors:

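awsls sns publish --topic-arn arn:aws:sns:eu-central-1:000000000000:localstack-lab-sns.fifo --message-group-id='test' --message-deduplication-id="$(uuidgen)" --message file://code/sqs-message.json

Because ContentBasedDeduplication is disabled, every publish needs its own deduplication ID. The double quotes let the shell substitute a fresh UUID from uuidgen. With single quotes, the literal string $(uuidgen) would be sent every time, and repeated messages would be dropped as duplicates.

You can see the logs of our lambda function in the LocalStack logs using: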

docker container logs localstack

These are your logs:

...

2022-10-31T00:55:24.364 DEBUG --- [functhread24] l.u.c.docker_sdk_client : Creating container with attributes: {'mount_volumes': None, 'ports': <PortMappings: {}>, 'cap_add': None, 'cap_drop': None, 'security_opt': None, 'dns': '', 'additional_flags': '', 'workdir': None, 'privileged': None, 'command': 'lambda.lambda_handler', 'detach': False, 'entrypoint': None, 'env_vars': {'bucket_name': 'localstack-lab-bucket', 'AWS_ACCESS_KEY_ID': 'test', 'AWS_SECRET_ACCESS_KEY': 'test', 'AWS_REGION': 'eu-central-1', 'DOCKER_LAMBDA_USE_STDIN': '1', 'LOCALSTACK_HOSTNAME': 'localhost', 'EDGE_PORT': '4566', '_HANDLER': 'lambda.lambda_handler', 'AWS_LAMBDA_FUNCTION_TIMEOUT': '3', 'AWS_LAMBDA_FUNCTION_NAME': 'queue-reader', 'AWS_LAMBDA_FUNCTION_VERSION': '$LATEST', 'AWS_LAMBDA_FUNCTION_INVOKED_ARN': 'arn:aws:lambda:eu-central-1:000000000000:function:queue-reader'}, 'image_name': 'lambci/lambda:python3.8', 'interactive': True, 'name': None, 'network': 'bridge', 'remove': True, 'self': <localstack.utils.container_utils.docker_sdk_client.SdkDockerClient object at 0xffff820b5840>, 'tty': False, 'user': None}

2022-10-31T00:55:24.396 DEBUG --- [functhread24] l.u.c.docker_sdk_client : Copying file /tmp/function.zipfile.f12211f2/. into 291fe50aefb293d9af82a9ccdfbd37a1f306f8ee5d2e81cb7e2812208666b6c0:/var/task

2022-10-31T00:55:24.525 DEBUG --- [functhread24] l.u.c.docker_sdk_client : Starting container 291fe50aefb293d9af82a9ccdfbd37a1f306f8ee5d2e81cb7e2812208666b6c0

2022-10-31T00:55:24.602 INFO --- [ asgi_gw_0] localstack.request.aws : AWS s3.ListObjectsV2 => 200

2022-10-31T00:55:26.677 INFO --- [ asgi_gw_1] localstack.request.aws : AWS s3.PutObject => 200

2022-10-31T00:55:26.843 DEBUG --- [functhread24] l.s.a.lambda_executors : Lambda arn:aws:lambda:eu-central-1:000000000000:function:queue-reader result / log output:

{"statusCode":200}

>START RequestId: 348977e4-69d1-145b-7420-243d8f8c0451 Version: $LATEST

> Job Started...!

> Job Ended ...!

> END RequestId: 348977e4-69d1-145b-7420-243d8f8c0451

> REPORT RequestId: 348977e4-69d1-145b-7420-243d8f8c0451 Init Duration: 1812.98 ms Duration: 117.60 ms Billed Duration: 118 ms Memory Size: 1536 MB Max Memory Used: 100 MB

The log output shows that the lambda function was triggered, launched in a separate container outside the LocalStack container, and ran successfully.
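The article does not show lambda.py. Below is a hypothetical sketch of a handler that behaves like the one in these logs. It unwraps the SNS envelope from each SQS record (the Body seen earlier in the receive-message output) and writes the payloads to the bucket named by the bucket_name environment variable. It then returns {"statusCode": 200}. Several details are assumptions rather than the author's code:

- the object key layout
- the optional s3_client parameter, added so the function can be tested without AWS
- building the endpoint from LOCALSTACK_HOSTNAME and EDGE_PORT, which appear in the container environment above

Unlike the logged run, the sketch also skips the ListObjectsV2 call.

```python
import json
import os
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


def extract_payloads(event: Dict[str, Any]) -> List[str]:
    """Return the original SNS message carried by each SQS record in the event.

    Without raw message delivery, each SQS record body is the SNS notification
    envelope (Type, MessageId, TopicArn, Message, ...); the published payload
    is its "Message" field.
    """
    payloads = []
    for record in event.get("Records", []):
        envelope = json.loads(record["body"])
        payloads.append(envelope["Message"])
    return payloads


def object_key(now: datetime) -> str:
    """Hypothetical key layout, loosely modeled on the object listed in the bucket."""
    return now.strftime("processed/command-%Y_%m_%d-%H_%M_%S.txt")


def make_s3_client() -> Any:
    """Create a boto3 S3 client, pointed at LocalStack when its variables are set."""
    import boto3  # bundled with the AWS Lambda Python runtimes; imported lazily

    endpoint = None
    if "LOCALSTACK_HOSTNAME" in os.environ:
        host = os.environ["LOCALSTACK_HOSTNAME"]
        port = os.environ.get("EDGE_PORT", "4566")
        endpoint = f"http://{host}:{port}"
    return boto3.client("s3", endpoint_url=endpoint)


def lambda_handler(event: Dict[str, Any], context: Any,
                   s3_client: Optional[Any] = None) -> Dict[str, int]:
    print("Job Started...!")
    s3 = s3_client if s3_client is not None else make_s3_client()
    bucket = os.environ["bucket_name"]  # set with --environment in create-function
    body = "\n".join(extract_payloads(event))
    s3.put_object(
        Bucket=bucket,
        Key=object_key(datetime.now(timezone.utc)),
        Body=body.encode("utf-8"),
    )
    print("Job Ended ...!")
    return {"statusCode": 200}
```

Lambda calls the handler with (event, context) only, so the extra s3_client argument keeps its default of None in a real invocation.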

You can also double-check that the lambda function writes to the S3 bucket by listing the bucket's content:

awsls s3 ls --recursive s3://localstack-lab-bucket

2022-10-30 23:31:45 819 /processed/command-2022_10_30-10_31_45.txt

Testing All Together

You can use this bash script to launch the whole stack along with the AWS resources. It contains all the previous commands in an easy-to-use form:

./code/launch-stack.sh

LocalStack Limitation

At the time of writing, LocalStack does not validate or enforce IAM policies, so don't rely on it to test your IAM policies and roles. Adding the ENFORCE_IAM flag to the docker-compose stack does not change this either.

LocalStack offers a Pro version ($28/month) that validates and enforces IAM permissions.

There is another limitation that we did not run into while building our infrastructure with LocalStack. Our lambda function is written in Python, so we were able to run it in docker mode (see the LocalStack With Docker and AWS CLI section). If your lambda is written in a JVM language, however, you will not be able to run it this way. JVM lambda functions can only be used with the local executor and a locally launched LocalStack.

For a deeper dive into LocalStack's limitations, check the official documentation.

Published at DZone with permission of Moaad Aassou. See the original article here.
