Kubernetes Multi-Tenancy Best Practices With Amazon EKS

Learn best practices and considerations for your multi-tenant apps!


Applications running in the cloud are undergoing some of the most critical changes in how they are developed and deployed, and perhaps the most useful and popular tool to emerge in this space is Kubernetes. If you are building a multi-tenant SaaS application on Kubernetes with Amazon EKS, there are certain best practices you should consider.

Kubernetes has run on AWS practically since its inception, and AWS now offers it as a managed service, Amazon Elastic Kubernetes Service (EKS). EKS provides a flexible platform for running your containers without requiring you to operate the Kubernetes control plane yourself.

Unprecedented events like the COVID-19 pandemic have pushed many organizations to accelerate their digital transformation. The flag bearer of these transformations is the next generation of cloud offerings delivered as software-as-a-service (SaaS). SaaS products kept businesses running while much of the world was at a halt. In this space, AWS provides a strong platform for delivering SaaS products, complementing its rich and diverse IaaS and PaaS offerings. In this article, we will see how to achieve Kubernetes multi-tenancy in SaaS applications using EKS.

Before starting, I recommend this must-read article about multi-tenant SaaS application architecture on AWS. It will help you better understand multi-tenant environments, and you'll learn some meaningful strategies for building your SaaS application.

Why Amazon EKS?

Using Amazon EKS for Kubernetes multi-tenancy offers numerous advantages, some of which are highlighted below:

- Amazon EKS is a managed service, which means you need less in-house expertise to manage your infrastructure.

- EKS runs the Kubernetes control plane (the master nodes) across multiple AWS Availability Zones for high availability and scales it for you. This lets infrastructure admins focus on the cluster's workloads and worker nodes.

- EKS integrates with Elastic Load Balancing (ELB), which automatically distributes incoming traffic across the applications running on the EKS cluster.

- With EKS, you do not need to install, upgrade, or maintain the Kubernetes control plane software yourself. Amazon EKS is certified Kubernetes conformant, so you can use the existing open-source tools and plugins from the Kubernetes community.

Read our blog post on Amazon ECS vs. EKS to discover which container orchestration platform is best for you.

Problems and Considerations When Building Kubernetes Multi-Tenant Architecture

Before we discuss the challenges and best practices for multi-tenant SaaS applications on Kubernetes and EKS, let's first understand what multi-tenancy is. Multi-tenancy means that a single application, and the infrastructure beneath it, serves multiple tenants (customers, teams, or users) at the same time. Usability is an essential criterion for the success of any application, and a successful application must serve many users concurrently. Consequently, its processing capacity should grow in step with the number of users.

For an application that is scaling up rapidly, it is important to maintain performance, stability, durability, and data isolation. A multi-tenant architecture on Kubernetes lets many tenants' workloads run concurrently while isolating each tenant's applications and data from the others.

Let's look at the challenges of creating a multi-tenant Kubernetes environment through a metaphor. In an apartment or condominium building, you need to give residents full isolation from one another. You cannot design a building where one resident must walk through another's apartment to get to the bathroom. Every apartment has to be isolated, and in the same way, every tenant in a cluster has to be sufficiently isolated.

You must also ensure that each resident has ample access to the building's shared resources. Your building will not function well if the water is shut off in one apartment whenever people in another apartment take a shower. Likewise, every tenant should get fair access to the cluster's shared resources.

As the metaphor shows, multi-tenancy poses two main challenges. First, each workload must be isolated: if one workload is hit by a vulnerability or security breach, the problem should not propagate to another. Second, each workload should get a fair share of compute, networking, and the other resources Kubernetes provides.

To understand multi-tenancy more fully, this blog post reviews the differences between single-tenant and multi-tenant architectures so you can better compare the two.

Multi-Tenant Kubernetes Workload Architecture

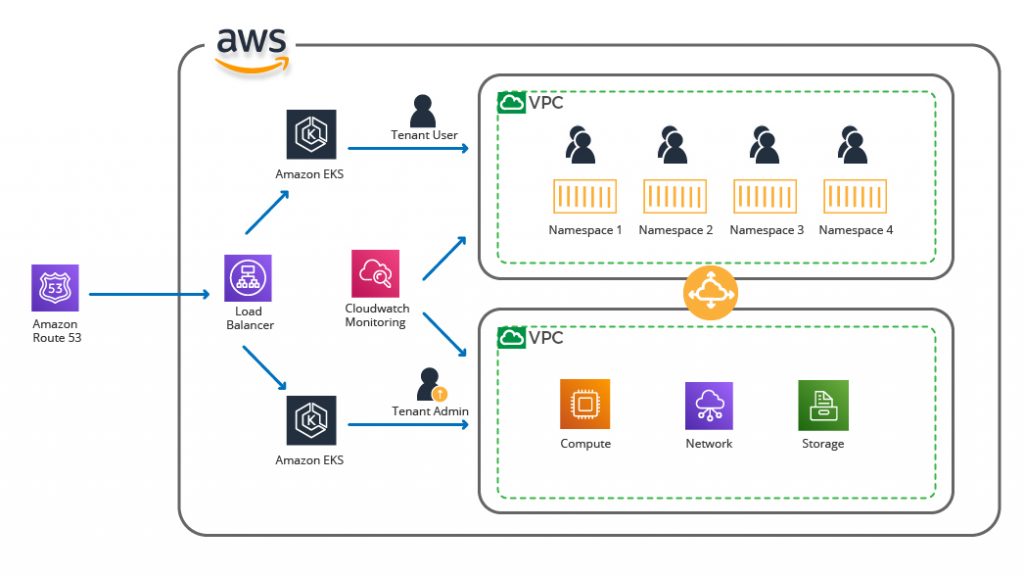

To achieve multi-tenancy, we create EKS clusters on AWS that host workloads for multiple tenants. In the architecture diagram above, multiple tenants are hosted in separate, fully isolated namespaces. A namespace is simply a logical way to divide cluster resources among multiple users or tenants.

Both EKS clusters run independent components of the applications, such as compute and storage resources. Isolation between namespaces can be achieved through various techniques, for instance, network policies.

Isolation Layers in EKS

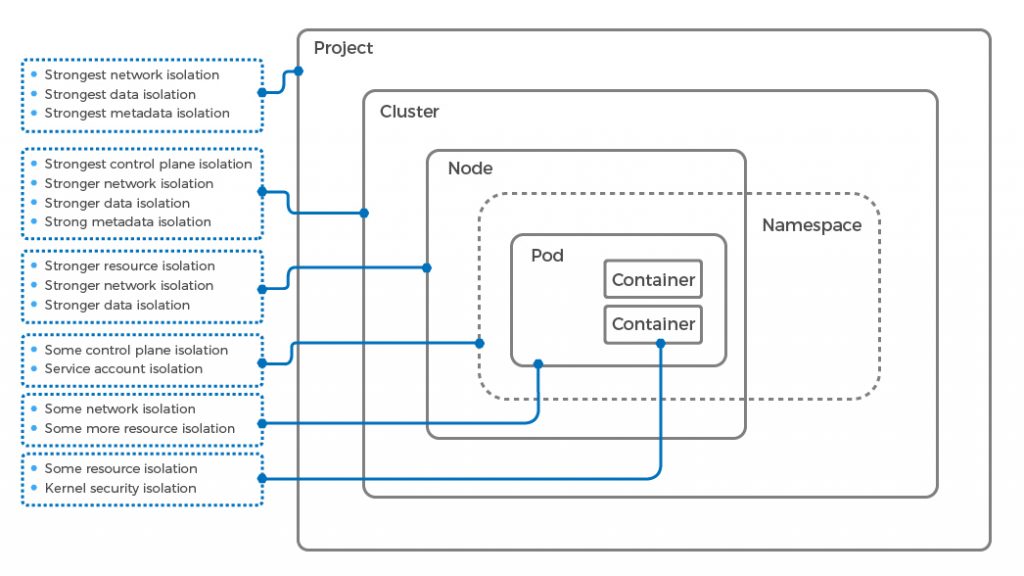

EKS offers several layers, each providing a specific level of security and isolation for a multi-tenant SaaS application on Kubernetes. Below are the layers of isolation you can implement in your design:

Container: A container provides a fundamental layer of isolation, but it does not isolate identity or the network. It offers some protection from noisy neighbors.

Pod: A pod is a group of one or more containers that share a network namespace. Pods are the unit that Kubernetes network policies select, which allows micro-segmentation of traffic between groups of containers.

Node: A node is a machine, either physical or virtual, that runs a collection of pods. Nodes rely on a hypervisor or dedicated hardware to isolate their resources from one another.

Cluster: A cluster is a collection of nodes plus a control plane, which is the management layer for your containers. A cluster provides the strongest network isolation of these layers.

The diagram at the start of this section shows these isolation layers.

Tenant Isolation Techniques in Amazon EKS

The primary constructs for designing EKS multi-tenancy cover compute, networking, and storage. Let's go through them one by one:

Compute Isolation

Namespaces are the fundamental element of multi-tenancy. Most Kubernetes objects belong to a particular namespace, which virtually isolates them from one another. Namespaces do not by themselves isolate workloads or users, but they provide the scope for RBAC (role-based access control), which defines who can do what on the Kubernetes API.

Amazon EKS integrates RBAC with AWS IAM: IAM users and roles are mapped to Kubernetes users and groups, and the RBAC roles bound to those groups determine what they can do. RBAC therefore acts as a central component that offers a layer of isolation between tenants.
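As a sketch of that IAM-to-RBAC mapping, the excerpt below adds an IAM role to the `aws-auth` ConfigMap in `kube-system`, one of the mechanisms EKS uses for this mapping (newer clusters can also use EKS access entries). The account ID, role name, username, and group `tenant-a-developers` are hypothetical placeholders. In a real cluster, this entry is merged into the existing `mapRoles` list, which also holds the worker node role mapping; replacing the ConfigMap wholesale would lock the nodes out.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  # Excerpt only: keep the existing worker node role entry in mapRoles.
  # The ARN, username, and group below are hypothetical placeholders.
  mapRoles: |
    - rolearn: arn:aws:iam::111122223333:role/tenant-a-developers
      username: tenant-a-developer
      groups:
        - tenant-a-developers
```

The Kubernetes group named here has no permissions on its own; it only gains access once an RBAC Role or ClusterRole is bound to it.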

Kubernetes also lets you define CPU and memory requests and limits for pods. To allocate resources fairly across tenants, use ResourceQuotas, which cap the total amount of resources consumed within a single namespace. The following quota caps the CPU and memory that pods in one namespace can request and use:

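```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu
  # Namespace names must be lowercase DNS labels, so "customNamespace" is invalid
  namespace: custom-namespace
spec:
  hard:
    requests.cpu: "2"      # total CPU all pods in the namespace may request
    requests.memory: 2Gi   # total memory all pods may request
    limits.cpu: "2"        # total CPU limit across all pods
    limits.memory: 4Gi     # total memory limit across all pods
```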
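Once a ResourceQuota constrains CPU and memory requests or limits, Kubernetes rejects any new pod in that namespace that does not declare those values. A LimitRange in the same namespace can fill in defaults. The sketch below assumes the quota lives in a namespace named `custom-namespace`; the object name and default values are illustrative, not recommendations.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: default-limits            # hypothetical name
  namespace: custom-namespace
spec:
  limits:
    - type: Container
      defaultRequest:             # used when a container omits requests
        cpu: 250m
        memory: 256Mi
      default:                    # used when a container omits limits
        cpu: 500m
        memory: 512Mi
```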

Networking Isolation

By default, pods can communicate over the network with any other pod in the same cluster, across namespaces. Network policies give you fine-grained control over pod-to-pod communication. Note that NetworkPolicy objects are enforced only if the cluster runs a network policy engine, such as the Amazon VPC CNI with network policy support enabled, or Calico. The network policy below allows traffic only to and from pods within the same namespace:

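```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: np-ns1
  namespace: namespace1
spec:
  podSelector: {}   # empty selector: the policy applies to every pod in namespace1
  policyTypes:
    - Ingress
    - Egress
  ingress:
    # Accept traffic only from namespaces labeled nsname=namespace1
    - from:
        - namespaceSelector:
            matchLabels:
              nsname: namespace1
  egress:
    # Send traffic only to namespaces labeled nsname=namespace1
    - to:
        - namespaceSelector:
            matchLabels:
              nsname: namespace1
```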
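One consequence of that egress rule: pods in namespace1 can no longer reach the cluster DNS service (CoreDNS) in `kube-system`, so name resolution fails. Because network policies are additive, a second policy like the sketch below can re-allow DNS. It assumes the CoreDNS pods carry the `k8s-app: kube-dns` label, as in the default EKS CoreDNS deployment, and relies on the `kubernetes.io/metadata.name` label that Kubernetes 1.22 and later set automatically on every namespace. The policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress          # hypothetical name
  namespace: namespace1
spec:
  podSelector: {}                 # every pod in namespace1
  policyTypes:
    - Egress
  egress:
    - to:
        # Both selectors in one entry: CoreDNS pods inside kube-system only
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```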

For more advanced tenant isolation, including across multiple clusters, you can go beyond network policies and use a service mesh, such as AWS App Mesh.

A service mesh adds a layer of network security that can span beyond a single EKS cluster. It provides better traffic management, observability, and security, and it can enforce finer-grained authentication and authorization policies for traffic between services.

AWS App Mesh is a managed service mesh that gives consistent visibility into network traffic and provides a central place to monitor and control the different services in the mesh.

Storage Isolation

Storage isolation is a necessity for tenants sharing a cluster. Volumes are the main Kubernetes mechanism for attaching storage, including persistent storage, to a pod.

PersistentVolumes are cluster-scoped resources, provisioned either by a cluster administrator or dynamically through a StorageClass, which describes the type and configuration of the underlying storage. Amazon EKS offers out-of-the-box storage integrations through CSI drivers, including Amazon EBS, Amazon EFS, and Amazon FSx for Lustre.
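For example, a StorageClass backed by the Amazon EBS CSI driver might look like the sketch below. It assumes the EBS CSI driver add-on is installed in the cluster; the class name `storage1` and the `gp3` volume type are illustrative choices.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: storage1                  # hypothetical class name
provisioner: ebs.csi.aws.com      # Amazon EBS CSI driver
parameters:
  type: gp3                       # EBS volume type
reclaimPolicy: Delete             # delete the EBS volume when its PV is released
# Delay volume creation until a pod is scheduled, so the volume is
# created in the same Availability Zone as that pod
volumeBindingMode: WaitForFirstConsumer
```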

A PersistentVolumeClaim (PVC) lets a user request storage for a pod. PVCs are namespaced resources, so access to storage can be controlled per tenant. Cluster admins can use ResourceQuotas to limit how much storage each namespace may request from each storage class. The quota below prevents namespace1 from using the storage class storage2 by setting its storage quota to zero:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-ns1
  namespace: namespace1
spec:
  hard:
    # A zero quota means no PVC in namespace1 may request storage from storage2
    storage2.storageclass.storage.k8s.io/requests.storage: "0"
```
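A tenant then requests storage through a PVC in its own namespace. The sketch below assumes a storage class named `storage1` (hypothetical) that namespace1 is still allowed to use; the claim name and size are placeholders.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: tenant-data               # hypothetical claim name
  namespace: namespace1
spec:
  accessModes:
    - ReadWriteOnce               # EBS volumes attach to one node at a time
  storageClassName: storage1      # a class not blocked by the namespace quota
  resources:
    requests:
      storage: 10Gi
```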

Multi-Cluster Isolation

Another way to implement isolation is to run multiple single-tenant EKS clusters. With this strategy, each tenant gets dedicated resources.

In this kind of implementation, an infrastructure-as-code tool such as Terraform helps provision multiple homogeneous clusters. It keeps policies consistent across EKS clusters and automates provisioning and policy mapping for each new cluster.

This isolation technique scales well, provided that robust provisioning and monitoring are in place for the infrastructure where the Amazon EKS clusters run.
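The article suggests Terraform; as a YAML-based alternative, an eksctl `ClusterConfig` file can serve as a per-tenant cluster template. The sketch below is one such template; the cluster name, region, tag, and node group sizing are hypothetical, and you would generate one file per tenant (or templatize this one) to keep the clusters homogeneous.

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: tenant-a                  # one dedicated cluster per tenant (hypothetical)
  region: us-east-1
  tags:
    tenant: tenant-a              # tag AWS resources for cost allocation
managedNodeGroups:
  - name: tenant-a-ng
    instanceType: m5.large
    desiredCapacity: 2
    minSize: 2
    maxSize: 4
    labels:
      tenant: tenant-a            # Kubernetes node label
```

Assuming the file is saved as `tenant-a.yaml`, `eksctl create cluster -f tenant-a.yaml` would create the cluster and its node group.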

Also read: Apache and Nginx Multi-Tenant to Support SaaS Applications

Multi-Tenancy Best Practices

Several best practices apply when implementing multi-tenancy on EKS. Let's discuss some of them:

Categorize the Namespaces

Namespaces should be categorized based on usage. Some common categories are:

- System Namespaces: For system pods.

- Service Namespaces: For services or applications that are shared across the cluster.

- Tenant Namespaces: For applications that do not need to be accessed from other namespaces in the cluster.
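These categories can be recorded as namespace labels. The sketch below creates a tenant namespace that carries the `nsname` label matched by the network policy example earlier, plus a hypothetical `namespace-category` label for the category; that label key is an assumption, not a Kubernetes convention.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: namespace1
  labels:
    nsname: namespace1            # matched by the NetworkPolicy namespaceSelector
    namespace-category: tenant    # hypothetical label: system, service, or tenant
```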

Enable RBAC

Role-based access control (RBAC), which is enabled by default on Amazon EKS clusters, gives you fine-grained control over which groups of users can use which Kubernetes APIs. Admins can create different roles for different users, for example, one role for cluster admins and another for each tenant.
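As a minimal sketch, the Role below lets a tenant group manage common workload objects only inside `namespace1`, and the RoleBinding grants that Role to the Kubernetes group `tenant-a-developers`. The role name, group name, and permitted resources are hypothetical; on EKS, the group would be mapped from an IAM principal.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: tenant-developer          # hypothetical role name
  namespace: namespace1           # permissions apply only in this namespace
rules:
  - apiGroups: [""]               # core API group
    resources: ["pods", "services", "configmaps"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-developer-binding
  namespace: namespace1
subjects:
  - kind: Group
    name: tenant-a-developers     # hypothetical group mapped from IAM
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: tenant-developer
  apiGroup: rbac.authorization.k8s.io
```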

Namespace Isolation Using Network Policy

Network policies give admins better governance over networking between pods, and they make it straightforward to isolate tenant namespaces.
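A common starting point is a default-deny policy in each tenant namespace, after which you add policies that allow only the traffic you need. The sketch below denies all ingress to pods in `namespace1`; the policy name is illustrative.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress      # hypothetical name
  namespace: namespace1
spec:
  podSelector: {}                 # select every pod in the namespace
  policyTypes:
    - Ingress                     # no ingress rules listed, so all ingress is denied
```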

Limit Tenant’s Use of Shared Resources

Resource quotas help ensure proportionate resource usage across tenants by capping how much CPU, memory, and storage each tenant namespace can consume.
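Beyond CPU and memory, a quota can also cap object counts and total storage per tenant. The sketch below uses standard ResourceQuota keys; the quota name and the limits themselves are placeholders to be sized per tenant.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: object-counts             # hypothetical name
  namespace: namespace1
spec:
  hard:
    pods: "20"                    # maximum number of pods in the namespace
    persistentvolumeclaims: "5"   # maximum number of PVCs
    services.loadbalancers: "1"   # each LoadBalancer Service provisions an AWS load balancer
    requests.storage: 100Gi       # total storage requested across all PVCs
```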

Limit Tenant’s Access to Non-Namespaced Resources

Make sure tenants do not have access to non-namespaced resources. These cluster-scoped resources, such as nodes, namespaces, PersistentVolumes, and StorageClasses, belong to the cluster rather than to any particular namespace. Admins should ensure that tenants have no privileges to create, update, or delete cluster-scoped resources.
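Tenants sometimes need to see a cluster-scoped resource without being able to change it. As a sketch, the ClusterRole below grants read-only access to StorageClasses so a tenant can discover which classes exist, and the ClusterRoleBinding grants it to the hypothetical `tenant-a-developers` group. Because the role contains only read verbs, the tenant still cannot create, update, or delete StorageClasses.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: tenant-storageclass-reader   # hypothetical name
rules:
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]  # read-only: no create, update, or delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tenant-a-storageclass-reader
subjects:
  - kind: Group
    name: tenant-a-developers        # hypothetical group mapped from IAM
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: tenant-storageclass-reader
  apiGroup: rbac.authorization.k8s.io
```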

Horizontal Pod Autoscaler (HPA)

The Horizontal Pod Autoscaler automatically adjusts the number of pod replicas in a workload, such as a Deployment, based on observed metrics like CPU utilization. It offers a cost-effective way to scale applications for higher uptime and availability, and it helps handle unpredictable workloads in production environments. For example, if your application receives less traffic at night, HPA scales the number of pods down; if traffic spikes unexpectedly, it adds pods. In a multi-tenant cluster, keep in mind that the replicas HPA creates still count against the namespace's ResourceQuota.
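A minimal HPA sketch using the `autoscaling/v2` API: it keeps a hypothetical Deployment named `tenant-app` in namespace1 between 2 and 10 replicas, targeting 70% average CPU utilization. Resource-based scaling needs a metrics source such as the Kubernetes Metrics Server, which EKS does not install by default. CPU utilization is measured against the pods' CPU requests, so the target containers must declare them.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: tenant-app-hpa            # hypothetical name
  namespace: namespace1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: tenant-app              # hypothetical Deployment
  minReplicas: 2                  # floor during quiet periods, such as night time
  maxReplicas: 10                 # ceiling; keep it within the namespace quota
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70  # percent of each pod's CPU request
```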

Are you looking to architect a SaaS application? Learn 3 ways to architect your SaaS application on AWS!

Closing Thoughts

This article covered some of the key considerations for implementing Kubernetes multi-tenancy with Amazon EKS, from the perspectives of compute, networking, and storage. Keep in mind that these strategies should be weighed against the cost and complexity of any design. Depending on the SaaS service you are implementing, any of the models above, or even a hybrid approach, may suit your design needs.

Published at DZone with permission of Alfonso Valdes. See the original article here.
