Java Concurrency and Multi-Threading

In this post, we'll discuss various concepts we commonly use in concurrent programming and how to resolve synchronization, race conditions, and deadlocks.

The Java platform was designed to support concurrent programming, with basic concurrency support in the Java programming language and the Java class libraries. How often do we think about concurrency while writing new code or while doing a code review with the team? A small concurrency bug can lead to endless hours of debugging a production issue that is hard to reproduce locally.

Why do we need concurrent programming? We work with machines equipped with multi-core CPUs, and the code we deliver should use that hardware to its fullest. While designing a concurrent system, we should also avoid interfering with other processes running on the CPU; we want our application to run in its own black box without impacting them. Today's multi-core CPUs handle this easily, but how was it done back when single-core CPUs were the norm?

On a single-core CPU, a commonly used scheduling technique was time slicing. CPU time (processing time) is divided into small chunks called slices, and a scheduler is responsible for assigning them. Each process gets its assigned slice from the scheduler to make progress on its job. The scheduler reacts to states and events from the processes to decide when to move to the next one. This switching from one task to another happens at very high speed, so the user experience is not affected: to the user, everything appears to run at the same time. There are numerous algorithms and techniques for handling scheduling.
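As a rough illustration of time slicing, the sketch below simulates a round-robin scheduler in plain Java. It is only one of the many scheduling algorithms mentioned above, and the names `TimeSliceSimulation`, `SimProcess`, and `run` are made up for this example; a real operating system scheduler is far more involved.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

class TimeSliceSimulation {

    /** Hypothetical process that still needs remainingMs of CPU time. */
    static final class SimProcess {
        final String name;
        int remainingMs;

        SimProcess(String name, int remainingMs) {
            this.name = name;
            this.remainingMs = remainingMs;
        }
    }

    /** Hands out slices in turn and returns which process ran in each slice. */
    static List<String> run(List<SimProcess> processes, int sliceMs) {
        if (sliceMs <= 0) {
            throw new IllegalArgumentException("sliceMs must be positive");
        }
        Deque<SimProcess> ready = new ArrayDeque<>(processes);
        List<String> timeline = new ArrayList<>();
        while (!ready.isEmpty()) {
            SimProcess current = ready.poll();
            // The process runs for one slice, or less if it finishes early
            current.remainingMs -= Math.min(sliceMs, current.remainingMs);
            timeline.add(current.name);
            if (current.remainingMs > 0) {
                ready.add(current); // not finished: back to the end of the queue
            }
        }
        return timeline;
    }

    public static void main(String[] args) {
        List<SimProcess> processes = Arrays.asList(
                new SimProcess("A", 3), new SimProcess("B", 1), new SimProcess("C", 2));
        System.out.println(run(processes, 1)); // prints [A, B, C, A, C, A]
    }
}
```

Each entry in the returned list is one slice, so the interleaving of A, B, and C shows how a single core can make several processes appear to run at once.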

As a developer working on concurrent systems, how can we ensure we design optimal solutions? To answer this question, let's first look into threads.

A thread is a sequence of instructions that we want to execute in a certain order. Suppose your code is about to print an array while another thread is still filling that array from the database. The reader will print either an incomplete array or an empty one, depending on what is present in the array at that moment. This is known as a race condition.
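The reader and writer scenario can be sketched as follows. In real code the interleaving is up to the scheduler and differs from run to run; here two `CountDownLatch` objects force the unlucky ordering so the result is repeatable. The class `PartialReadDemo`, its method names, and the hard-coded values standing in for database rows are assumptions made for this illustration.

```java
import java.util.Arrays;
import java.util.concurrent.CountDownLatch;

class PartialReadDemo {

    /** Returns what the reader saw while the writer was only half done. */
    static int[] readWhileWriting() {
        int[] shared = new int[4];
        int[][] seenByReader = new int[1][];
        CountDownLatch halfWritten = new CountDownLatch(1);
        CountDownLatch readerDone = new CountDownLatch(1);

        Thread writer = new Thread(() -> {
            shared[0] = 1;
            shared[1] = 2;
            halfWritten.countDown(); // stands in for the scheduler pausing the writer here
            await(readerDone);
            shared[2] = 3;
            shared[3] = 4;
        }, "writer");

        Thread reader = new Thread(() -> {
            await(halfWritten);
            seenByReader[0] = shared.clone(); // snapshot of a half-filled array
            readerDone.countDown();
        }, "reader");

        writer.start();
        reader.start();
        join(writer);
        join(reader);
        return seenByReader[0];
    }

    private static void await(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    private static void join(Thread thread) {
        try {
            thread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(readWhileWriting())); // prints [1, 2, 0, 0]
    }
}
```

The reader never sees the last two values because it ran before the writer had finished, which is exactly the incomplete array described above.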

Let’s take a singleton class as an example to see how threads work and to study race conditions under the hood.

    public class SingletonExample {

        private static SingletonExample instance;

        private SingletonExample() {}

        public static SingletonExample getInstance() {
            if (instance == null) {
                instance = new SingletonExample();
            }
            return instance;
        }
    }

| CPU time | Thread1 | Thread2 |
| --- | --- | --- |
| Slice1 - 0 ms | Checks if the instance of SingletonExample is null | Waiting |
| Slice2 - 1 ms | The instance is null at this point, so it enters the if block | Waiting |
| Slice3 - 2 ms | Thread Scheduler pauses Thread1 | Is in a runnable state |
| Slice4 - 3 ms | Waiting | Checks if the instance of SingletonExample is null |
| Slice5 - 4 ms | Waiting | The instance is null at this point, so it enters the if block |
| Slice6 - 5 ms | Waiting | Creates an instance of SingletonExample |
| Slice7 - 6 ms | Is in a runnable state | Thread Scheduler pauses Thread2 |
| Slice8 - 7 ms | Since it has already checked for null, creates another instance of SingletonExample | |

Suppose there are two threads, Thread1 and Thread2, both calling getInstance() on our SingletonExample class. The table above shows the action each thread performs in each time slice.

Until Slice7, we do not see any issue and the code executes as expected. However, in Slice8 the scheduler returns to Thread1, which does not repeat the null check because it already performed it in Slice1. As a result, it creates another instance of SingletonExample, overwriting the instance created by Thread2. This is a race condition, a common bug in concurrent programming.
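The interleaving in the table depends on scheduler timing, so it rarely shows up on demand. The sketch below forces it: a `CyclicBarrier` (a hook added purely for this demonstration, not part of the original class) holds each thread between the null check and the assignment until both have passed the check. `SingletonRaceDemo`, `CREATED`, and `instancesCreatedByTwoThreads` are hypothetical names.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

class SingletonRaceDemo {
    // Counts constructor calls so that duplicate instances become visible
    static final AtomicInteger CREATED = new AtomicInteger();
    // Demonstration hook: releases the threads only once both passed the null check
    private static final CyclicBarrier AFTER_NULL_CHECK = new CyclicBarrier(2);
    private static SingletonRaceDemo instance;

    private SingletonRaceDemo() {
        CREATED.incrementAndGet();
    }

    // Same unsynchronized logic as SingletonExample, plus the pause
    static SingletonRaceDemo getInstance() {
        if (instance == null) {
            pauseAfterNullCheck();
            instance = new SingletonRaceDemo();
        }
        return instance;
    }

    private static void pauseAfterNullCheck() {
        try {
            AFTER_NULL_CHECK.await();
        } catch (InterruptedException | BrokenBarrierException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Calls getInstance() from two threads and reports how many objects were built. */
    static int instancesCreatedByTwoThreads() {
        instance = null;
        CREATED.set(0);
        Thread thread1 = new Thread(() -> getInstance(), "Thread1");
        Thread thread2 = new Thread(() -> getInstance(), "Thread2");
        thread1.start();
        thread2.start();
        try {
            thread1.join();
            thread2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return CREATED.get();
    }

    public static void main(String[] args) {
        System.out.println(instancesCreatedByTwoThreads()); // prints 2
    }
}
```

Two constructor calls for a class that is supposed to have a single instance is the bug the table walks through.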

To solve the race condition encountered above, we can use synchronization. Declaring the method as synchronized removes this race condition. Let’s look at sample code.

    public class SingletonExample {

        private static SingletonExample instance;

        private SingletonExample() {}

        public static synchronized SingletonExample getInstance() {
            if (instance == null) {
                instance = new SingletonExample();
            }
            return instance;
        }
    }

To synchronize getInstance(), we need to prevent more than one thread from executing the method at the same time. Every object in Java has a lock and key for synchronization. If Thread1 tries to use a protected block of code, it requests the key. The lock object checks whether the key is available; if it is, it gives the key to Thread1, which then executes the code.

Now Thread2 requests the key. Since the object does not have the key (Thread1 holds it), Thread2 waits until the key becomes available. This way we ensure getInstance() does not run into a race condition.
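A quick way to check this behavior is to call the synchronized method from several threads and count how often the constructor runs. The following is a minimal sketch under that idea; `SynchronizedSingletonDemo`, the `CREATED` counter, and `createdAfterConcurrentCalls` are names invented for this example.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SynchronizedSingletonDemo {
    // Counts constructor calls; should stay at 1 however many threads call in
    static final AtomicInteger CREATED = new AtomicInteger();
    private static SynchronizedSingletonDemo instance;

    private SynchronizedSingletonDemo() {
        CREATED.incrementAndGet();
    }

    // Only the thread holding the key can be inside this method
    static synchronized SynchronizedSingletonDemo getInstance() {
        if (instance == null) {
            instance = new SynchronizedSingletonDemo();
        }
        return instance;
    }

    /** Calls getInstance() from threadCount threads and returns the constructor count. */
    static int createdAfterConcurrentCalls(int threadCount) {
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(() -> getInstance());
            threads[i].start();
        }
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return CREATED.get();
    }

    public static void main(String[] args) {
        System.out.println(createdAfterConcurrentCalls(8)); // prints 1
    }
}
```

Because the null check and the assignment now happen while one thread holds the key, no second thread can slip in between them.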

As for the object that holds the key and hands it to the threads, that is handled by the JVM. For a static synchronized method, the JVM uses the SingletonExample class object to hold the key. If we use the synchronized keyword on a non-static method, the key is held by the instance of the class we are in. A better solution is to create a dedicated synchronized block inside the method and pass the object holding the key as a parameter.
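To see which object holds the key in each case, the standard `Thread.holdsLock(Object)` method can be queried from inside the synchronized code. This is a small sketch for inspection only; the class `MonitorOwnerDemo` and its method names are hypothetical.

```java
class MonitorOwnerDemo {
    // Dedicated lock object, used only by the synchronized block below
    private final Object lock = new Object();

    // static synchronized: the key belongs to the Class object
    static synchronized boolean staticMethodHoldsClassLock() {
        return Thread.holdsLock(MonitorOwnerDemo.class);
    }

    // synchronized instance method: the key belongs to 'this'
    synchronized boolean instanceMethodHoldsThisLock() {
        return Thread.holdsLock(this);
    }

    // ...and an instance method does not take the class-level key
    synchronized boolean instanceMethodHoldsClassLock() {
        return Thread.holdsLock(MonitorOwnerDemo.class);
    }

    // synchronized block: only the object passed as the parameter is locked
    boolean blockHoldsOnlyDedicatedLock() {
        synchronized (lock) {
            return Thread.holdsLock(lock) && !Thread.holdsLock(this);
        }
    }

    public static void main(String[] args) {
        MonitorOwnerDemo demo = new MonitorOwnerDemo();
        System.out.println(staticMethodHoldsClassLock());        // prints true
        System.out.println(demo.instanceMethodHoldsThisLock());  // prints true
        System.out.println(demo.instanceMethodHoldsClassLock()); // prints false
        System.out.println(demo.blockHoldsOnlyDedicatedLock());  // prints true
    }
}
```

One reason a dedicated lock object is often preferred is that no outside code can synchronize on it by accident, which is possible with `this` or the class object.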

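    public class SingletonExample {

        private static SingletonExample instance;

        // Dedicated lock object; static because getInstance() is static
        private static final Object lock = new Object();

        private SingletonExample() {}

        public static SingletonExample getInstance() {
            synchronized (lock) {
                if (instance == null) {
                    instance = new SingletonExample();
                }
                return instance;
            }
        }
    }

This technique works well for a single method, but what happens when we have multiple synchronized blocks? Let me show what can go wrong when you start using synchronized blocks across multiple methods.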

    public class DeadlockTest {

        public static Object Lock_Thread1 = new Object();
        public static Object Lock_Thread2 = new Object();

        private static class Thread1 extends Thread {
            public void run() {
                // Thread 1 takes Lock_Thread1 first, then Lock_Thread2
                synchronized (Lock_Thread1) {
                    System.out.println("Thread 1 - Holding lock_thread1");
                    try {
                        Thread.sleep(30);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Thread 1 - Waiting for lock_thread2");
                    synchronized (Lock_Thread2) {
                        System.out.println("Thread 1 - Holding lock 1 & 2");
                    }
                }
            }
        }

        private static class Thread2 extends Thread {
            public void run() {
                // Thread 2 takes the locks in the opposite order
                synchronized (Lock_Thread2) {
                    System.out.println("Thread 2 - Holding lock_thread2");
                    try {
                        Thread.sleep(30);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Thread 2 - Waiting for lock_thread1");
                    synchronized (Lock_Thread1) {
                        System.out.println("Thread 2 - Holding lock 1 & 2");
                    }
                }
            }
        }

        public static void main(String[] args) {
            Thread1 T1 = new Thread1();
            Thread2 T2 = new Thread2();
            T1.start();
            T2.start();
        }
    }

Output:

Thread 1 - Holding lock_thread1

Thread 2 - Holding lock_thread2

Thread 1 - Waiting for lock_thread2

Thread 2 - Waiting for lock_thread1

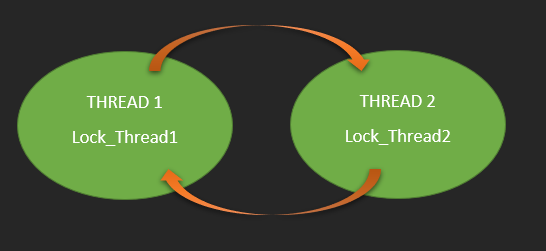

In the above example, T1 is holding Lock_Thread1 and T2 is holding Lock_Thread2. T2 is waiting for Lock_Thread1 and T1 is waiting for Lock_Thread2. This situation is known as a deadlock. Both threads are in a blocked state, and neither will ever finish, because each thread is waiting for the other to release its lock.
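The blocked state can be observed directly with `Thread.getState()`. The sketch below is a variation on the program above, not the original code: it replaces the `sleep` calls with a `CountDownLatch` so that both threads are guaranteed to hold their first lock before asking for the second, and it uses daemon threads so the JVM can still exit. `BlockedStateDemo`, `LOCK_A`, and `LOCK_B` are hypothetical names.

```java
import java.util.Arrays;
import java.util.concurrent.CountDownLatch;

class BlockedStateDemo {
    private static final Object LOCK_A = new Object();
    private static final Object LOCK_B = new Object();

    /** Starts two threads that deadlock on purpose and returns their states. */
    static Thread.State[] deadlockAndInspect() {
        // Each thread counts down once it holds its first lock
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);
        Thread t1 = new Thread(() -> lockInOrder(LOCK_A, LOCK_B, bothHoldFirstLock), "T1");
        Thread t2 = new Thread(() -> lockInOrder(LOCK_B, LOCK_A, bothHoldFirstLock), "T2");
        // Daemon threads let the JVM exit even though they never finish
        t1.setDaemon(true);
        t2.setDaemon(true);
        t1.start();
        t2.start();

        // Poll for up to about 5 seconds until both threads are blocked
        long deadline = System.nanoTime() + 5_000_000_000L;
        while (System.nanoTime() < deadline
                && !(t1.getState() == Thread.State.BLOCKED
                        && t2.getState() == Thread.State.BLOCKED)) {
            Thread.yield();
        }
        return new Thread.State[] { t1.getState(), t2.getState() };
    }

    private static void lockInOrder(Object first, Object second, CountDownLatch latch) {
        synchronized (first) {
            latch.countDown();
            try {
                latch.await(); // wait until the other thread holds its first lock too
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            synchronized (second) {
                // Never reached: the other thread holds 'second' and will not release it
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(deadlockAndInspect())); // prints [BLOCKED, BLOCKED]
    }
}
```

A thread dump of a hung application shows the same BLOCKED state, which is often the first hint that a deadlock is involved.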

This situation arises because of the order in which we obtain the locks. If both threads acquire Lock_Thread1 and Lock_Thread2 in the same order and we run the program again, the threads will no longer wait forever.

    public class DeadlockTest {

        public static Object Lock_Thread1 = new Object();
        public static Object Lock_Thread2 = new Object();

        private static class Thread1 extends Thread {
            public void run() {
                synchronized (Lock_Thread1) {
                    System.out.println("Thread 1 - Holding lock_thread1");
                    try {
                        Thread.sleep(10);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Thread 1 - Waiting for lock_thread2");
                    synchronized (Lock_Thread2) {
                        System.out.println("Thread 1 - Holding lock 1 & 2");
                    }
                }
            }
        }

        private static class Thread2 extends Thread {
            public void run() {
                // Changed: Thread 2 now takes Lock_Thread1 first, the same order as Thread 1
                synchronized (Lock_Thread1) {
                    System.out.println("Thread 2 - Holding lock_thread1");
                    try {
                        Thread.sleep(10);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    System.out.println("Thread 2 - Waiting for lock_thread2");
                    synchronized (Lock_Thread2) {
                        System.out.println("Thread 2 - Holding lock 1 & 2");
                    }
                }
            }
        }

        public static void main(String[] args) {
            Thread1 T1 = new Thread1();
            Thread2 T2 = new Thread2();
            T1.start();
            T2.start();
        }
    }

Output:

Thread 1 - Holding lock_thread1

Thread 1 - Waiting for lock_thread2

Thread 1 - Holding lock 1 & 2

Thread 2 - Holding lock_thread1

Thread 2 - Waiting for lock_thread2

Thread 2 - Holding lock 1 & 2

As we can see, a deadlock can be avoided by making sure every thread obtains the locks on the resources it needs in the same order. A good design uses as few locks as possible and avoids sharing a lock between threads unless it is really necessary.
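When the two resources are passed in as arguments, the calling code cannot be trusted to supply them in a fixed order, so one common approach is to derive the order from the resources themselves. The sketch below assumes a hypothetical `Account` class with a unique `id` and always locks the smaller id first; it is an illustration of consistent lock ordering, not the only way to achieve it.

```java
class OrderedLockingDemo {

    /** Hypothetical resource; the unique id is used only to decide lock order. */
    static final class Account {
        final int id;
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    static void transfer(Account from, Account to, long amount) {
        // Always lock the account with the smaller id first,
        // regardless of the direction of the transfer
        Account first = from.id < to.id ? from : to;
        Account second = first == from ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    /** Runs transfers in opposite directions on two threads and returns both balances. */
    static long[] runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1_000);
        Account b = new Account(2, 1_000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) {
                transfer(a, b, 1);
            }
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) {
                transfer(b, a, 1);
            }
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new long[] { a.balance, b.balance };
    }

    public static void main(String[] args) {
        long[] balances = runOpposingTransfers(10_000);
        System.out.println(balances[0] + " " + balances[1]); // prints 1000 1000
    }
}
```

Without the ordering step, `transfer(a, b, ...)` and `transfer(b, a, ...)` would take the two locks in opposite orders, which is the same pattern that deadlocked the first DeadlockTest program.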

Conclusion

I have tried to summarize various concepts we commonly use in concurrent programming. I hope you find this article useful. Be mindful of synchronization, race conditions, and deadlocks when you work on concurrent programming assignments.
