Introduction to Algorithms

This tutorial walks you through the basics of algorithms, including problem instances, computational complexity, and classification.


An algorithm is a step-by-step procedure for solving a given problem efficiently for a given set of inputs. It can also be viewed as a tool for solving a well-specified computational problem, or as a sequence of steps that transforms the input into the output. Algorithms are language-independent, and an algorithm is said to be correct only if, for every input instance, it produces the correct output.

The Instance of the Problem

An input to an algorithm is called an instance of the problem. An instance must satisfy all the constraints imposed by the problem statement.

Problem Set

Sort a sequence of numbers into ascending order.

- Input: A sequence of $n$ numbers $\langle a_1, a_2, \ldots, a_n \rangle$.

- Output: A reordering $\langle a'_1, a'_2, \ldots, a'_n \rangle$ of the input sequence such that $a'_1 \le a'_2 \le \cdots \le a'_n$.
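To make this input/output specification concrete, here is a minimal sketch of a checker that decides whether a candidate output is a correct answer for a given instance of the sorting problem: the output must contain exactly the same elements as the input and must be in ascending order. The function name `is_correct_sort` is hypothetical, chosen for illustration.

```python
from collections import Counter


def is_correct_sort(instance: list[int], output: list[int]) -> bool:
    """Return True if `output` is a correct answer for this sorting instance."""
    # The output must be a reordering of the input: same elements, same counts.
    if Counter(instance) != Counter(output):
        return False
    # The output must be in ascending (non-decreasing) order.
    return all(output[k] <= output[k + 1] for k in range(len(output) - 1))


if __name__ == "__main__":
    instance = [31, 41, 59, 26, 41, 58]
    print(is_correct_sort(instance, [26, 31, 41, 41, 58, 59]))  # True
    print(is_correct_sort(instance, [26, 31, 41, 58, 59]))      # False: one 41 is missing
```

Using a `Counter` comparison rather than sorting the input keeps the checker independent of any sorting algorithm it might be used to test.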

Pseudocode for Insertion Sort

INSERTION_SORT(A)

FOR j = 2 to A.length

    key = A[j]

    // Insert A[j] into the sorted sequence A[1..j-1]

    i = j - 1

    WHILE i > 0 and A[i] > key

        A[i + 1] = A[i]

        i = i - 1

    A[i + 1] = key

Expression of Algorithms

Algorithms can be expressed in several ways, including natural language, flowcharts, pseudocode, and programming languages.

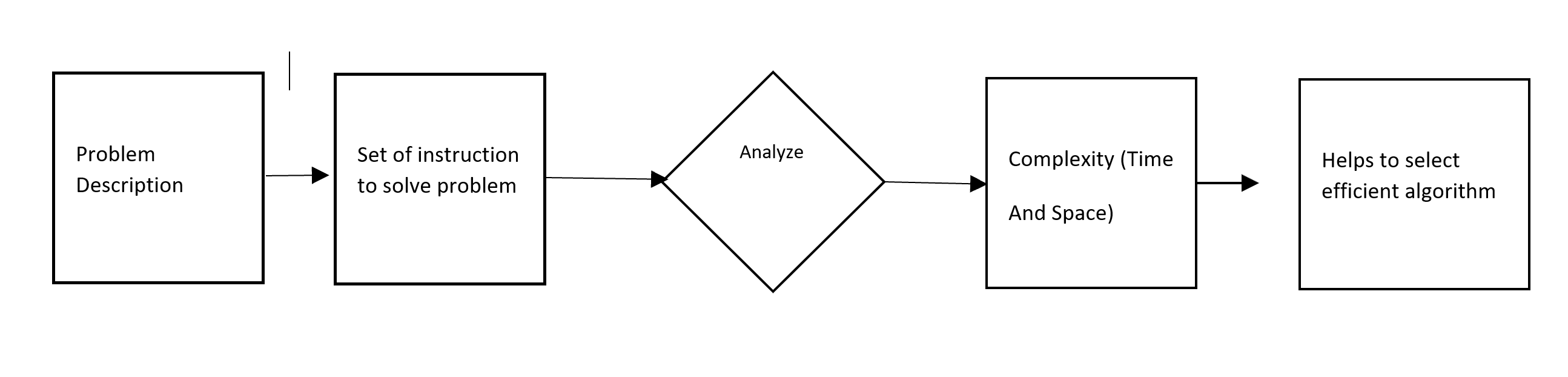
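As an illustration of expressing an algorithm in a programming language, here is one possible Python translation of the insertion sort pseudocode. It is a sketch rather than a reference implementation: the pseudocode uses 1-based array indices, so the loop bounds are shifted to Python's 0-based indexing, and the function name `insertion_sort` is our own.

```python
def insertion_sort(a: list[int]) -> list[int]:
    """Sort `a` in place in ascending order and return it.

    Translation of the INSERTION_SORT pseudocode, shifted from
    1-based to 0-based indexing.
    """
    for j in range(1, len(a)):
        key = a[j]
        # Insert a[j] into the already-sorted prefix a[0..j-1].
        i = j - 1
        # Shift elements larger than key one slot to the right.
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]
            i -= 1
        a[i + 1] = key
    return a


if __name__ == "__main__":
    print(insertion_sort([5, 2, 4, 6, 1, 3]))  # [1, 2, 3, 4, 5, 6]
```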

Analyzing Algorithms

Analyzing an algorithm means determining the resources it requires, such as memory and execution time. The most common approach is to examine its time and space complexity. Analysis is necessary because several algorithms may solve the same problem, and without it, it is difficult to choose the one that will perform best. An algorithm is considered efficient when its cost, expressed as a function of the input size, is small or grows slowly as the input size increases.

Computational Complexity

In computer science, the computational complexity of an algorithm is the amount of resources required to run it, chiefly time and memory (space). The amount of resources depends on the size of the input, so complexity is expressed as a function f(n), where n is the input size.

Types of Complexity

Time Complexity

Time complexity is the number of elementary operations an algorithm performs on an input of size n. The running time of an algorithm on a particular input is the number of primitive operations, or steps, it executes, so running time and input size are the key factors when analyzing time complexity. Running time is usually analyzed for the worst case, the best case, and the average case, and it is described with asymptotic notation: big O gives an upper bound, Omega (Ω) a lower bound, and Theta (Θ) a tight bound.
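Counting elementary operations directly shows why the choice of case matters. The sketch below is an instrumented version of insertion sort written for illustration (the name `count_comparisons` is hypothetical) that counts how many times an element is compared with `key`. On already-sorted input it performs n - 1 comparisons, and on reverse-sorted input n(n - 1)/2, which is why insertion sort's best case is linear and its worst case quadratic.

```python
def count_comparisons(a: list[int]) -> int:
    """Run insertion sort on a copy of `a` and return the number of key comparisons."""
    a = list(a)  # work on a copy so the caller's list is untouched
    comparisons = 0
    for j in range(1, len(a)):
        key = a[j]
        i = j - 1
        while i >= 0:
            comparisons += 1  # one comparison of a[i] with key
            if a[i] <= key:
                break  # key's position is found
            a[i + 1] = a[i]
            i -= 1
        a[i + 1] = key
    return comparisons


if __name__ == "__main__":
    n = 10
    best = list(range(n))           # already sorted input
    worst = list(range(n, 0, -1))   # reverse-sorted input
    print(count_comparisons(best))   # 9, that is, n - 1
    print(count_comparisons(worst))  # 45, that is, n(n - 1) / 2
```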

Space Complexity

The amount of memory an algorithm requires as a function of the input size n.
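Space complexity is usually measured as the extra (auxiliary) memory an algorithm uses beyond its input. As an illustration, the two functions below (names are our own) solve the same task, reversing a list, with different space costs: `reverse_in_place` needs only a constant number of extra variables, O(1) extra space, while `reversed_copy` allocates a new list of n elements, O(n) extra space.

```python
def reverse_in_place(a: list[int]) -> None:
    """Reverse `a` using O(1) extra memory: only two index variables."""
    i, j = 0, len(a) - 1
    while i < j:
        a[i], a[j] = a[j], a[i]  # swap the outer pair, then move inward
        i += 1
        j -= 1


def reversed_copy(a: list[int]) -> list[int]:
    """Return a reversed copy of `a`, using O(n) extra memory for the new list."""
    return [a[k] for k in range(len(a) - 1, -1, -1)]


if __name__ == "__main__":
    data = [1, 2, 3, 4]
    print(reversed_copy(data))  # [4, 3, 2, 1]; data itself is unchanged
    reverse_in_place(data)
    print(data)                 # [4, 3, 2, 1]
```

Both run in O(n) time; they differ only in how much additional memory they need.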

Other

Other measures include the number of arithmetic operations. When adding very large numbers, however, counting each addition as a single step is misleading, because its cost depends on how many bits the operands have. In that case, the number of operations on individual bits is counted instead. This measure is called bit complexity.
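To make bit complexity concrete, the sketch below adds two numbers in the style of a ripple-carry adder: one bit position at a time, tracking the carry. It counts single-bit operations, and that count grows linearly with the number of bits in the operands, even though the whole thing is a single "addition" when counting arithmetic operations. The little-endian bit-list representation and the names `to_bits`, `from_bits`, and `add_bits` are assumptions chosen for illustration.

```python
def to_bits(value: int, width: int) -> list[int]:
    """Little-endian bit list of a non-negative integer, padded to `width` bits."""
    return [(value >> k) & 1 for k in range(width)]


def from_bits(bits: list[int]) -> int:
    """Inverse of to_bits: rebuild the integer from a little-endian bit list."""
    return sum(bit << k for k, bit in enumerate(bits))


def add_bits(x: list[int], y: list[int]) -> tuple[list[int], int]:
    """Ripple-carry addition of two little-endian bit lists.

    Returns the sum as a bit list and the number of single-bit
    operations (AND, OR, XOR) performed.
    """
    n = max(len(x), len(y))
    x = x + [0] * (n - len(x))  # pad the shorter operand with zeros
    y = y + [0] * (n - len(y))
    result: list[int] = []
    carry, ops = 0, 0
    for k in range(n):
        result.append(x[k] ^ y[k] ^ carry)                # sum bit: 2 XORs
        carry = (x[k] & y[k]) | (carry & (x[k] ^ y[k]))  # carry: 2 ANDs, 1 XOR, 1 OR
        ops += 6
    result.append(carry)  # final carry-out becomes the top bit
    return result, ops


if __name__ == "__main__":
    for width in (8, 16, 32):
        total, ops = add_bits(to_bits(200, width), to_bits(100, width))
        print(width, from_bits(total), ops)  # ops is 6 * width
```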

Order of Growth

The order of growth is the term of a function that grows fastest as the value of its variable increases. Comparing the orders of growth of running times lets you compare the relative performance of different algorithms for the same problem, especially on large inputs.
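The effect of order of growth is easiest to see numerically. This short sketch tabulates a few common growth functions (chosen for illustration, not taken from any particular algorithm) at increasing input sizes; the gaps between them widen quickly, which is why the fastest-growing term dominates any comparison.

```python
import math

# Common growth functions, chosen for illustration.
GROWTH = {
    "log2(n)": math.log2,
    "n": lambda n: n,
    "n*log2(n)": lambda n: n * math.log2(n),
    "n^2": lambda n: n ** 2,
    "2^n": lambda n: 2 ** n,
}

for n in (8, 16, 32, 64):
    # Format every value as a rounded integer with thousands separators.
    row = "  ".join(f"{name}={f(n):,.0f}" for name, f in GROWTH.items())
    print(f"n={n}: {row}")
```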

When finding the order of growth of a function or the running time of an algorithm, apply the following rules:

- Ignore constant terms.

- Ignore lower-order terms, which grow more slowly.

- Ignore the coefficient of the leading term, since constant factors are less significant for large inputs.

Example

Consider insertion sort (given in the earlier section Pseudocode for Insertion Sort). Suppose its worst-case running time is $T(n) = an^2 + bn + c$, where $a$, $b$, and $c$ are constants. Then:

- $bn$ grows more slowly than $an^2$, so it can be dropped.

- $c$ is a constant that does not change with the input size, so it can be dropped.

- The coefficient $a$ of the leading term can be dropped.

What remains is $n^2$, so the running time is $O(n^2)$ in big O notation.
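A quick numerical check shows why the lower-order term and the constant can be dropped. The sketch below uses a quadratic running time of the form A·n^2 + B·n + C with arbitrary example constants (A = 3, B = 100, C = 1000, chosen only for illustration) and prints T(n)/n^2. The ratio settles toward the leading coefficient A as n grows; that it stays bounded is exactly what T(n) = O(n^2) expresses.

```python
# Hypothetical constants, chosen only for illustration.
A, B, C = 3, 100, 1000


def t(n: int) -> int:
    """Example running time T(n) = A*n^2 + B*n + C."""
    return A * n * n + B * n + C


for n in (10, 100, 1_000, 10_000, 100_000):
    ratio = t(n) / n ** 2  # approaches the leading coefficient A as n grows
    print(f"n={n:>7,}  T(n)={t(n):>15,}  T(n)/n^2={ratio:.4f}")
```

For small n the linear term and the constant dominate, which is why asymptotic comparisons are most meaningful for large inputs.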

Classification

Algorithms may be classified using various factors and execution characteristics, such as implementation, design, complexity, and field of usage.

- By implementation
  - Recursion
- By design
  - Incremental approach
    - Example: insertion sort
  - Brute-force or exhaustive search
  - Divide and conquer
    - Example: merge sort, quick sort
  - Heap
    - Example: heap sort
- By complexity
  - Constant time: The running time is the same regardless of input size. For example, accessing an array element: O(1)
  - Logarithmic time: The running time grows as a logarithmic function of the input size. For example, binary search: O(log n)
  - Linear time: The running time is proportional to the input size. For example, traversing a list: O(n)
  - Polynomial time: The running time is a power of the input size. For example, bubble sort has quadratic time complexity: O(n^2)
  - Exponential time: The running time is an exponential function of the input size. For example, brute-force or exhaustive search, such as trying every subset of n items: O(2^n)
- By field of study or usage
  - Searching algorithms
  - Sorting algorithms
  - Merge algorithms
  - Medical algorithms
  - Machine learning
  - Data compression
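To illustrate the logarithmic-time class from the classification above, the sketch below is a standard iterative binary search on a sorted Python list, instrumented to count loop iterations (the step counter and the function name are added for illustration). Each iteration halves the remaining range, so even a million-element list needs at most about 20 iterations, whereas a linear scan could need a million.

```python
def binary_search(a: list[int], target: int) -> tuple[int, int]:
    """Search the sorted list `a` for `target`.

    Returns (index, or -1 if absent; number of loop iterations performed).
    """
    lo, hi = 0, len(a) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if a[mid] == target:
            return mid, steps
        if a[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return -1, steps


if __name__ == "__main__":
    for n in (1_000, 1_000_000):
        data = list(range(n))
        index, steps = binary_search(data, -1)  # absent value: search runs until the range is empty
        print(n, index, steps)
```

Searching for an absent value forces the loop to continue until the range is empty, which approximates the worst case of roughly log2(n) iterations.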


Published at DZone with permission of Thamayanthi Karuppusamy.
