Instant Integrations With API and Logic Automation

Automate API creation using one command. Self-serve and ready for ad hoc integrations. Use rule-based logic for custom integrations, 40x more concise than code.


Integrating internal systems and external B2B partners is strategic, but the alternatives fall short of meeting business needs.

ETL is a cumbersome way to deliver stale data, and it does not address B2B integration.

APIs are a modern approach, but creating them with frameworks is time-consuming and complex. That effort is no longer necessary.

API and Logic Automation enables you to deliver a modern, API-based architecture in days, not weeks or months. API Logic Server, an open-source Python project, makes this possible. Here's how.

API Automation: One Command to Create Database API

API Automation means you create a running API with one command:

```bash
ApiLogicServer create --project_name=ApiLogicProject \
    --db_url=postgresql://postgres:p@localhost/nw
```

API Logic Server reads your schema and creates an executable project that you can customize in your IDE.
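API Logic Server's own introspection code is not shown here. As a rough illustration of what "reads your schema" involves, the sketch below uses Python's built-in sqlite3 module to list each table's columns and foreign keys, the metadata an API generator needs to derive endpoints and relationships. The SQLite database and the two-table schema are assumptions for illustration; the command above points at PostgreSQL.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> dict:
    """Return {table: {"columns": [...], "foreign_keys": [(column, parent_table), ...]}}."""
    schema = {}
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        foreign_keys = [(row[3], row[2])
                        for row in conn.execute(f'PRAGMA foreign_key_list("{table}")')]
        schema[table] = {"columns": columns, "foreign_keys": foreign_keys}
    return schema


# Hypothetical two-table schema, just to exercise the function.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customer (Id TEXT PRIMARY KEY, CompanyName TEXT);
    CREATE TABLE "Order" (Id INTEGER PRIMARY KEY,
                          CustomerId TEXT REFERENCES Customer(Id),
                          AmountTotal REAL);
""")
for table, info in describe_schema(conn).items():
    print(table, info)
```

Foreign keys are the interesting part: each one is a candidate relationship (for example, Customer to its Orders) that a generated API can expose for navigation.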

JSON:API Self-Serve for Ad Hoc Integrations

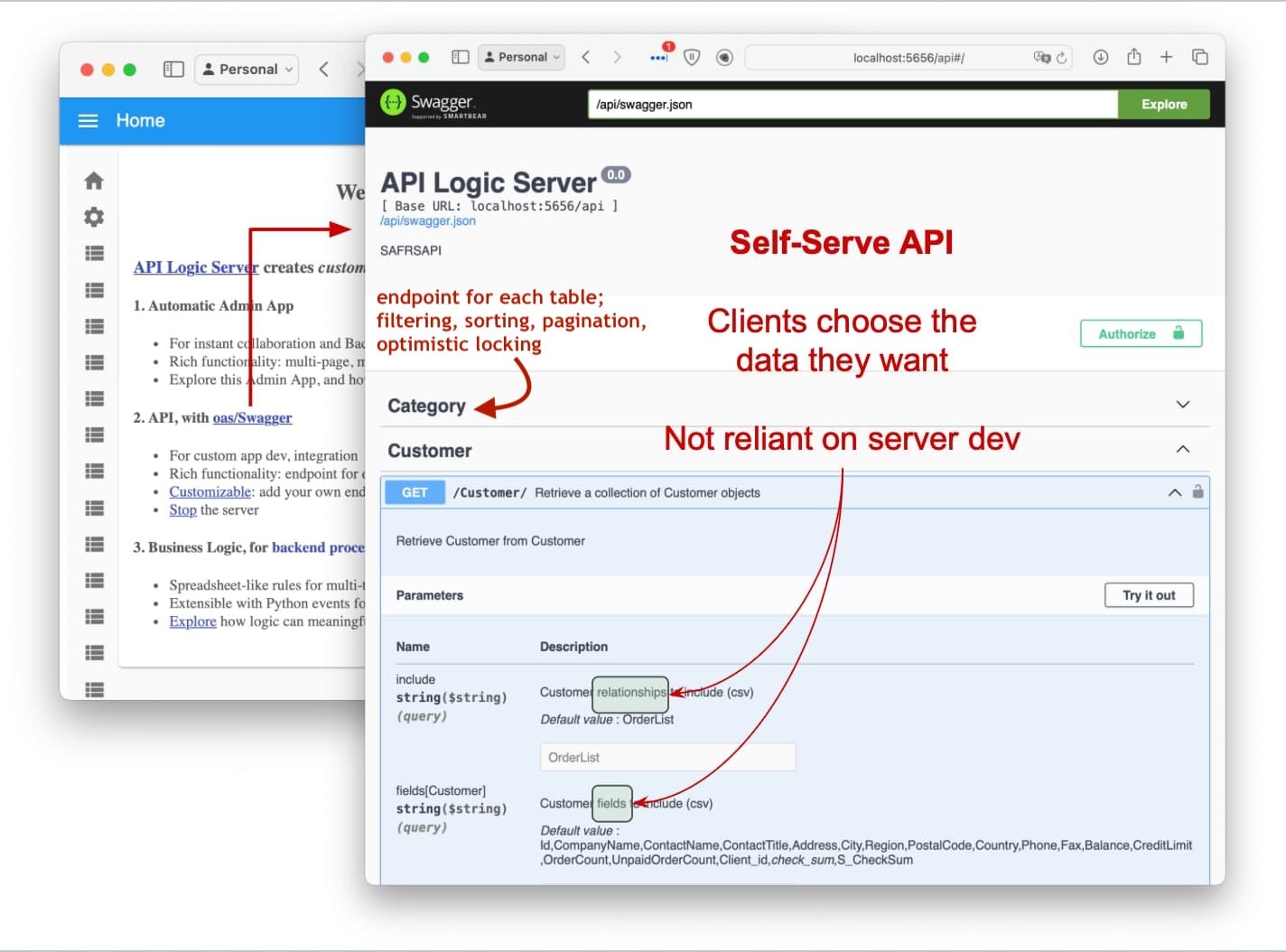

You now have a running API (and an Admin Web App not shown here):

The API follows the JSON:API standard. Much like GraphQL, JSON:API is self-serve: clients can request the fields and related data they want, as shown above, using the automatically created Swagger.

Many API requirements are ad hoc retrievals, not planned in advance. JSON:API handles such ad hoc retrieval requests without requiring any custom server API development.

Contrast this to traditional API development, where:

- API requirements are presumed to be known in advance for custom API development and

- Frameworks do not provide API Automation. It takes weeks to months of framework-based API development to provide all the features noted in the diagram above.

Frameworks are not responsive to unknown client needs and require significant initial and ongoing server API development.

JSON:API creation is automated with one command; self-serve enables instant ad hoc integrations, without continuing server development.
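To make "self-serve" concrete, here is a minimal client-side sketch that builds a retrieval URL from query parameters defined by the JSON:API specification: include (related resources), fields[TYPE] (sparse fieldsets), and sort. The base URL and port, the resource type names, and the OrderList relationship name are assumptions for illustration, not taken from a generated project.

```python
from urllib.parse import urlencode


def jsonapi_url(base, resource, *, include=(), fields=None, sort=()):
    """Build a JSON:API GET URL from the spec's include, fields[TYPE], and sort parameters."""
    params = {}
    if include:
        params["include"] = ",".join(include)                  # related resources to return
    for type_name, attributes in (fields or {}).items():
        params[f"fields[{type_name}]"] = ",".join(attributes)  # sparse fieldset per type
    if sort:
        params["sort"] = ",".join(sort)                        # a leading "-" means descending
    query = urlencode(params, safe=",[]")                      # keep commas/brackets readable
    return f"{base.rstrip('/')}/{resource}" + (f"?{query}" if query else "")


url = jsonapi_url("http://localhost:5656/api", "Customer",
                  include=["OrderList"],
                  fields={"Customer": ["CompanyName", "Balance"], "Order": ["AmountTotal"]},
                  sort=["-Balance"])
print(url)
```

The point is that each client shapes its own response: a different integration can ask for other fields or relationships by changing the URL, with no new server code.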

Full Access to Underlying Frameworks: Custom Integrations

The create command creates a full project you can open in your IDE and customize with all the power of Python and frameworks such as Flask and SQLAlchemy. This enables you to build custom APIs for near-instant B2B relationships. Here's the code to post an Order and OrderDetails:

The entire code is shown in the upper pane. It's only around ten lines because:

- Pre-supplied mapping services automate the mapping between dicts (request data from Flask) and SQLAlchemy ORM row objects. The mapping definition is shown in the lower pane.

  - Mapping includes lookup support, so clients can provide product names, not IDs.

- Business Logic (e.g., to check credit) is partitioned out of the service (and UI code) and automated with rules (shown below).
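The pre-supplied mapping services are not reproduced here. The sketch below only illustrates the idea of dict-to-row mapping with a lookup, using plain dataclasses as stand-ins for SQLAlchemy models and an in-memory dict as a stand-in for a Product query. The payload shape and every name in it are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class OrderDetail:          # stand-in for a SQLAlchemy model row
    ProductId: int
    Quantity: int


@dataclass
class Order:                # stand-in for a SQLAlchemy model row
    CustomerId: str
    Details: list = field(default_factory=list)


def map_order(payload: dict, product_ids_by_name: dict) -> Order:
    """Map request data (a dict) to row objects, looking up product keys by name."""
    order = Order(CustomerId=payload["Customer"])
    for item in payload["Items"]:
        name = item["Product"]
        if name not in product_ids_by_name:     # lookup failed: reject the request
            raise ValueError(f"Unknown product: {name!r}")
        order.Details.append(OrderDetail(ProductId=product_ids_by_name[name],
                                         Quantity=int(item["Quantity"])))
    return order


request_json = {"Customer": "ALFKI",
                "Items": [{"Product": "Chai", "Quantity": 1},
                          {"Product": "Chang", "Quantity": 2}]}
order = map_order(request_json, {"Chai": 1, "Chang": 2})
print(order)
```

Keeping the lookup in the mapping layer means the partner sends business identifiers (product names) and never needs to know internal keys.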

Custom Integrations are fully enabled using Python and standard frameworks.

Logic Automation: Rules are 40X More Concise

While our API is executable, it's not deployable until it enforces logic and security. Such backend logic is a significant aspect of systems, often accounting for nearly half the effort.

Frameworks have no provisions for logic. They simply run code you design, write, and debug.

Logic Automation means you declare rules using your IDE, adding Python where required. With keyword arguments, typed parameters, and IDE code completion, Python becomes a Logic DSL (Domain Specific Language).

Declaring Security Logic

Here is a security declaration that restricts customers to seeing only their own row:

```python
Grant(  on_entity = models.Customer,
        to_role = Roles.customer,
        filter = lambda : models.Customer.Id == Security.current_user().id,
        filter_debug = "Id == Security.current_user().id")  # customers can only see their own account
```

Grants are typically role-based, as shown above, but you can also declare global grants that apply across roles, here for multi-tenant support:

```python
GlobalFilter(   global_filter_attribute_name = "Client_id",  # try customers & categories for u1 vs u2
                roles_not_filtered = ["sa"],
                filter = '{entity_class}.Client_id == Security.current_user().client_id')
```

So, if you have a database, you have an ad hoc retrieval API:

1. Single-command API Automation

2. Declare Security

A great upgrade from cumbersome ETL.
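The declarations above are applied by the framework as query filters. As a rough mental model only, the sketch below evaluates the same two predicates over in-memory dicts: first the global multi-tenant filter (skipped for the "sa" role), then the customer-role grant. The User class and the sample rows are hypothetical stand-ins for Security.current_user() and the Customer table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:                      # hypothetical stand-in for Security.current_user()
    id: str
    roles: frozenset
    client_id: int


def visible_rows(rows, user, *, unfiltered_roles=frozenset({"sa"}), own_row_role="customer"):
    """Apply a global (tenant) filter, then a role-based 'own row only' grant."""
    result = list(rows)
    if not (user.roles & unfiltered_roles):
        # Like the GlobalFilter: Client_id == current_user().client_id
        result = [r for r in result if r["Client_id"] == user.client_id]
    if own_row_role in user.roles:
        # Like the Grant for Roles.customer: Customer.Id == current_user().id
        result = [r for r in result if r["Id"] == user.id]
    return result


customers = [{"Id": "ALFKI", "Client_id": 1},
             {"Id": "ANATR", "Client_id": 1},
             {"Id": "BONAP", "Client_id": 2}]
print(visible_rows(customers, User("ALFKI", frozenset({"customer"}), 1)))
print(visible_rows(customers, User("u1", frozenset({"manager"}), 1)))
print(visible_rows(customers, User("admin", frozenset({"sa"}), 1)))
```

Because the filters are declared once per entity or globally, every retrieval path through the API gets them, including ad hoc JSON:API requests nobody planned for.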

Declaring Transaction Logic

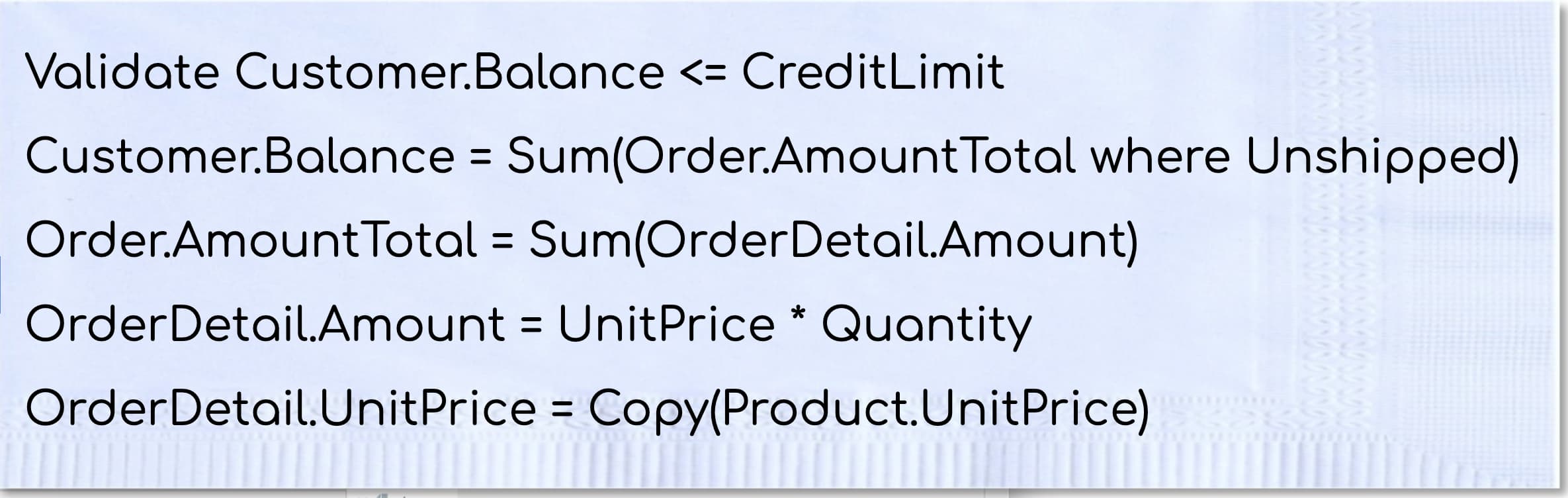

Declarative rules are particularly well-suited for updating logic. For example, imagine the following cocktail napkin spec to check credit:

The rule-based implementation below illustrates that rules look like an executable design:

Rules plug into SQLAlchemy (ORM) events and operate like a spreadsheet to automate multi-table transactions:

- Automatic Invocation/Re-use: rules are automatically invoked depending on what was changed in the transaction. This means they are automatically re-used across transaction types:

  - For example, the rules above govern inserting orders, deleting orders, shipping orders, changing Order Detail quantities or Products, etc., in about a dozen Use Cases.

  - Automatic re-use and dependency management result in a remarkable 40x reduction in code; the five rules above would require 200 lines of Python.

- Automatic Multi-Table Logic: rules chain to other referencing rules, even across tables. For example, changing OrderDetail.Quantity triggers the Amount rule, which chains to trigger the AmountTotal rule. Just like a spreadsheet.

- Automatic Ordering: rule execution order is computed by the system based on dependencies.

  - This simplifies maintenance: just add new rules, and you can be sure they will be called in the proper order.

- Automatic Optimizations: rules are not implemented with the Rete algorithm; they are highly optimized for transaction processing:

  - Rules (and their overhead) are pruned if their referenced data is unchanged.

  - Sums and counts are maintained by one-row "adjustment updates," not by expensive SQL aggregate queries.
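To see what chaining, pruning, and adjustment updates mean in practice, here is a hand-written toy for the credit check. It is not the rules engine: it hard-codes the propagation that the engine derives from declarations. Changing a Quantity recomputes Amount (a formula), adjusts AmountTotal and Balance by the delta (sums without a SUM query), stops early when nothing changed (pruning), and checks the credit limit (a constraint). The attribute names follow the article; the classes and functions are hypothetical.

```python
class CreditLimitExceeded(Exception):
    pass


class Customer:
    def __init__(self, credit_limit):
        self.CreditLimit = credit_limit
        self.Balance = 0              # derived: sum of unshipped Order.AmountTotal


class Order:
    def __init__(self, customer):
        self.customer = customer
        self.AmountTotal = 0          # derived: sum of OrderDetail.Amount
        self.ShippedDate = None


class OrderDetail:
    def __init__(self, order, unit_price, quantity):
        self.order = order
        self.UnitPrice = unit_price
        self.Quantity = 0
        self.Amount = 0               # derived: UnitPrice * Quantity
        set_quantity(self, quantity)


def set_quantity(detail, quantity):
    """Change a Quantity and propagate the effect, spreadsheet-style."""
    old_amount = detail.Amount
    detail.Quantity = quantity
    detail.Amount = detail.UnitPrice * quantity          # formula
    delta = detail.Amount - old_amount
    if delta:                                            # pruning: nothing changed, stop here
        adjust_amount_total(detail.order, delta)


def adjust_amount_total(order, delta):
    order.AmountTotal += delta                           # sum via one-row adjustment, no SUM query
    if order.ShippedDate is None:                        # only unshipped orders count toward Balance
        adjust_balance(order.customer, delta)


def adjust_balance(customer, delta):
    customer.Balance += delta                            # sum via adjustment again
    if customer.Balance > customer.CreditLimit:          # constraint
        # A real implementation would roll back the whole transaction here.
        raise CreditLimitExceeded(f"Balance {customer.Balance} exceeds {customer.CreditLimit}")


customer = Customer(credit_limit=100)
order = Order(customer)
chai = OrderDetail(order, unit_price=18, quantity=2)     # Amount 36
chang = OrderDetail(order, unit_price=19, quantity=1)    # Amount 19
set_quantity(chai, 3)                                    # Amount 54 -> AmountTotal 73
print(order.AmountTotal, customer.Balance)               # 73 73
```

Even this toy covers only one change path (Quantity). Deleting a detail, shipping an order, or reassigning a product each need their own propagation code, which is the re-use argument made above.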

Spreadsheet-like rules are 40X more concise.

Declare and Debug in your IDE, Extend With Python.
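The Automatic Ordering point can be illustrated with a standard technique: a topological sort over "which attributes does each rule read." The sketch below uses graphlib from the standard library (Python 3.9+); it is not a claim about how the rules engine computes its order internally. Customer.CreditCheck is a pseudo-node standing in for the constraint.

```python
from graphlib import TopologicalSorter   # Python 3.9+

# Derived attribute -> the attributes its rule reads (names follow the credit example).
dependencies = {
    "Customer.CreditCheck": {"Customer.Balance", "Customer.CreditLimit"},
    "Customer.Balance": {"Order.AmountTotal", "Order.ShippedDate"},
    "Order.AmountTotal": {"OrderDetail.Amount"},
    "OrderDetail.Amount": {"OrderDetail.UnitPrice", "OrderDetail.Quantity"},
}


def execution_order(deps):
    """Order derived attributes so each is computed after everything it reads."""
    return [node for node in TopologicalSorter(deps).static_order() if node in deps]


print(execution_order(dependencies))

# "Just add new rules": a copy rule for UnitPrice slots in ahead of Amount automatically.
dependencies["OrderDetail.UnitPrice"] = {"Product.UnitPrice"}
print(execution_order(dependencies))
```

Adding the UnitPrice rule required no change to the other entries; the sort places it before Amount because Amount reads it.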

Message Handling

Message handling is ideal for internal application integration. We could use APIs, but messages provide important advantages:

- Async: our system is not impacted if the target system is down. Kafka retains the message, and the target consumes it when it is back up.

- Multi-cast: We can send a message that multiple systems (e.g., Shipping, Accounting) can consume.

Sending Messages

Observe the send_order_to_shipping code (Fig 3, above):

- This is standard Python code, illustrating that logic combines rules and code, so it is fully extensible.

- It's an after_flush event, so all the transaction data is available (in cache).

- It creates a Kafka message, using the RowDictMapper to transform SQLAlchemy rows to dicts for the Kafka JSON payload.

- The system-supplied kafka_producer module simplifies sending Kafka messages.
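The send_order_to_shipping code itself is in the figure. As a framework-free sketch of the same steps, the code below maps order rows (plain dicts here) to a JSON payload and hands it to a producer. InMemoryProducer is a hypothetical stand-in whose send(topic, value=..., key=...) shape loosely follows kafka-python's KafkaProducer.send; it is not the system-supplied kafka_producer module, order_to_dict is not RowDictMapper, and the topic name is an assumption. Wiring it to an after_flush event is not shown.

```python
import json


class InMemoryProducer:
    """Stand-in for a Kafka producer; records messages instead of sending them."""
    def __init__(self):
        self.sent = []

    def send(self, topic, value=None, key=None):
        self.sent.append((topic, key, value))


def order_to_dict(order, details):
    """Hypothetical row-to-dict mapping: choose the fields the payload exposes."""
    return {"Id": order["Id"],
            "CustomerId": order["CustomerId"],
            "AmountTotal": order["AmountTotal"],
            "Items": [{"ProductId": d["ProductId"], "Quantity": d["Quantity"]} for d in details]}


def send_order_to_shipping(order, details, producer, topic="order_shipping"):
    """Meant to run after flush, when the order and its details are all available."""
    payload = json.dumps(order_to_dict(order, details), default=str)  # default=str: dates, Decimals
    producer.send(topic, value=payload.encode("utf-8"), key=str(order["Id"]).encode("utf-8"))


producer = InMemoryProducer()
send_order_to_shipping({"Id": 10643, "CustomerId": "ALFKI", "AmountTotal": 1086},
                       [{"ProductId": 28, "Quantity": 15}], producer)
print(producer.sent[0])
```

Keying the message by order Id is a common Kafka choice: messages with the same key land in the same partition, so updates to one order stay in order.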

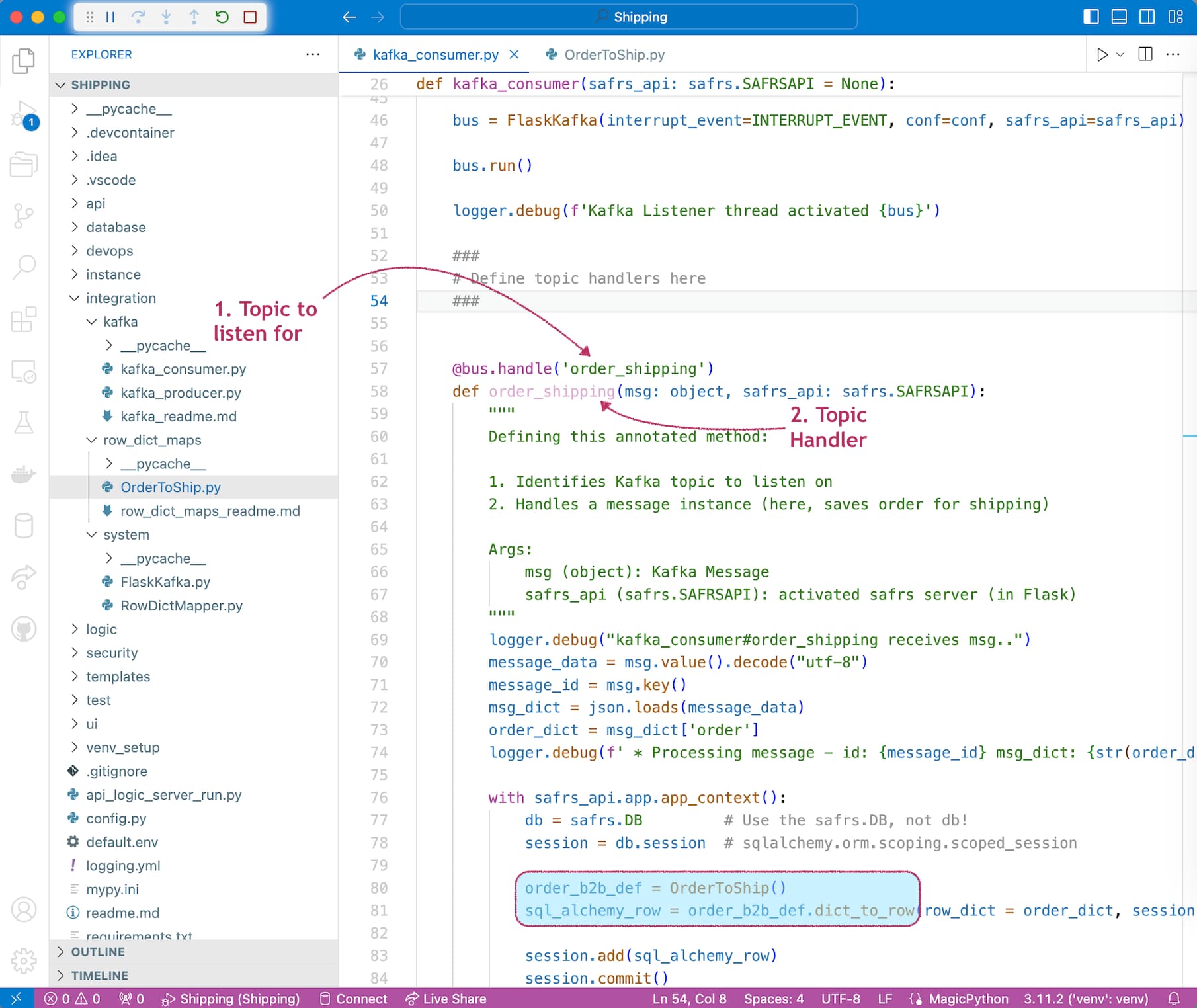

Receiving Messages

There is analogous automation for consuming such messages: you add code to the pre-supplied kafka_consumer module and annotate your handler method with the topic name.

The pre-supplied FlaskKafka module provides Kafka listening and thread management, making your message-handling logic similar to the custom endpoint example above.
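The annotation-based pattern can be sketched without Kafka at all: a decorator registers a handler per topic, and the listener calls the right handler for each message. The handles decorator and dispatch function below are hypothetical illustrations of that pattern, not FlaskKafka's API; polling Kafka and managing the listener thread are omitted.

```python
import json

_handlers = {}   # topic name -> handler function


def handles(topic):
    """Hypothetical decorator: register the decorated function as the handler for a topic."""
    def register(fn):
        _handlers[topic] = fn
        return fn
    return register


def dispatch(topic, raw_value):
    """What a listener thread would do per message: decode JSON, call the topic's handler."""
    handler = _handlers.get(topic)
    if handler is None:
        return False                 # nobody subscribed to this topic here
    handler(json.loads(raw_value))
    return True


received_order_ids = []


@handles("order_shipping")
def order_shipping(order):
    # A real handler would map the dict to rows and commit, like the custom endpoint.
    received_order_ids.append(order["Id"])


dispatch("order_shipping", b'{"Id": 10643, "Items": []}')
print(received_order_ids)
```

Once the message is a dict, the handler faces the same problem as the custom endpoint: map it to rows and let the rules enforce the logic on commit.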

Summary: Remarkable Business Agility

So there you have it: remarkable business agility for ad hoc and custom integrations. This is enabled by:

- API Automation: Create an executable API with one command.

- Logic Automation: Declare logic and security in your IDE with spreadsheet-like rules, 40X more concise than code.

- Standards-based customization: Python, Flask, and SQLAlchemy; develop in your IDE.

Frameworks are simply too slow for the bulk of API development, now that API Automation is available. That said, frameworks are great for customizing what is not automated. So: automation for business agility, standards-based customization for complete flexibility.

Logic Automation is a significant new capability, enabling you to reduce the backend half of your system by 40X.

Opinions expressed by DZone contributors are their own.
