Importing Big Tables With Large Indexes With Myloader MySQL Tool

Originally written by David Ducos

Mydumper is known as the faster (much faster) mysqldump alternative, so if you take a logical backup, you will probably choose mydumper over mysqldump. But what about the restore? Well, who needs to restore a logical backup? It takes ages, even with myloader! This could change a bit, though, if we are able to take advantage of fast index creation.

As you probably know, mydumper and mysqldump export the structure of a table, with all its indexes and constraints, and of course the data. Then myloader and mysql import the table structure and the data. The most important difference is that you can configure myloader to import the data using a given number of threads. The import steps are:

- Create the complete structure of the table

- Import the data

When you execute myloader, it first creates the tables by executing the “-schema.sql” files, then takes all the filenames that do not end in “schema.sql” and puts them in a task queue. Each thread takes a filename from the queue (each file is actually a chunk of a table) and executes it. When it finishes, it takes another chunk from the queue; if the queue is empty, the thread simply ends.
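
The queue-draining behaviour described above can be sketched in a few lines of Python. This is only an illustration of the pattern, not myloader's actual code (myloader is written in C); `run_import` and `execute_chunk` are hypothetical names, and the caller is assumed to supply a function that executes one chunk file against the server.

```python
import queue
import threading


def run_import(chunk_files, execute_chunk, num_threads=4):
    """Drain a queue of data-chunk filenames with a fixed pool of threads.

    `execute_chunk` is a hypothetical callback that runs one chunk file.
    """
    tasks = queue.Queue()
    for name in chunk_files:
        # Schema files are assumed to have been applied already,
        # so only the data chunks are queued.
        if not name.endswith("schema.sql"):
            tasks.put(name)

    def worker():
        while True:
            try:
                name = tasks.get_nowait()
            except queue.Empty:
                return  # queue is empty: this thread just ends
            execute_chunk(name)

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

Because every thread pulls from the same queue, a slow chunk only holds up the thread that is executing it; the others keep taking work until nothing is left.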

This import procedure is fast for small tables, but for big tables with large indexes the inserts get progressively slower because of the overhead of inserting the new values into the secondary indexes. Another way to import the data is:

- Split the table structure into table creation with primary key, index creation, and constraint creation

- Create the tables with their primary keys

- Per table:

  - Load the data

  - Create the indexes

  - Create the constraints

This import procedure is implemented in a branch of myloader that can be downloaded from here or fetched directly with bzr from the repository:

bzr branch lp:~david-ducos/mydumper/mydumper

The tool reads the schema files and splits each one into three separate statements, which create the table with its primary key, the indexes, and the constraints. The primary key is kept in the table creation statement so that the table does not have to be rebuilt when a primary key is added later, while the “KEY” and “CONSTRAINT” lines are removed and added to the index and constraint statements, respectively.
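
To make the split concrete, here is a minimal Python sketch of the idea. It is not the branch's implementation: `split_schema` is a hypothetical helper, and it assumes the `CREATE TABLE` statement is formatted the way `SHOW CREATE TABLE` prints it, with the table name in backticks on the first line and one column, key, or constraint definition per line.

```python
def split_schema(create_sql):
    """Split a CREATE TABLE statement into (table_sql, index_sql, constraint_sql).

    Assumes SHOW CREATE TABLE style formatting: one definition per line.
    index_sql or constraint_sql is None when there is nothing to add.
    """
    lines = create_sql.strip().splitlines()
    header, footer = lines[0], lines[-1]
    table_name = header.split("`")[1]

    columns, keys, constraints = [], [], []
    for line in lines[1:-1]:
        item = line.strip().rstrip(",")
        if item.startswith("CONSTRAINT"):
            constraints.append(item)
        elif item.startswith(("KEY", "UNIQUE KEY", "FULLTEXT KEY", "SPATIAL KEY")):
            keys.append(item)
        else:
            # Column definitions and the PRIMARY KEY stay in the table.
            columns.append(item)

    table_sql = header + "\n  " + ",\n  ".join(columns) + "\n" + footer

    def alter(items):
        if not items:
            return None
        clauses = ", ".join("ADD " + item for item in items)
        return "ALTER TABLE `%s` %s;" % (table_name, clauses)

    return table_sql, alter(keys), alter(constraints)
```

Grouping all the secondary keys of a table into one `ALTER TABLE` keeps the index build for that table in a single statement, which matches the load, index, constraints order described earlier.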

It processes tables according to their size, starting with the largest, because creating the indexes of a big table can take hours and is single-threaded. Although we cannot process other indexes at the same time, the remaining threads can potentially be used to work on other tables.

It has a new thread (monitor_process) that decides which chunk of data is put in the task queue, plus a communication queue that the task processes use to tell monitor_process which chunk has been completed.
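
The interaction between the monitor, the task queue, and the communication queue can be sketched as follows. This is a simplified Python model under stated assumptions, not the branch's scheduling logic: `run_monitor` and `run_job` are hypothetical names, all data chunks are queued up front in largest-table-first order, and the monitor's only decision is when to queue the index and constraint jobs for a table.

```python
import queue
import threading


def run_monitor(tables, run_job, num_workers=4):
    """tables maps name -> (size, [chunk, ...]).

    `run_job(kind, table, chunk)` is a hypothetical callback that performs
    one job, where kind is "load", "index", or "constraints".
    """
    tasks = queue.Queue()     # monitor -> workers
    finished = queue.Queue()  # workers -> monitor (communication queue)

    def worker():
        while True:
            job = tasks.get()
            if job is None:   # sentinel: no more work
                return
            run_job(*job)
            finished.put(job)

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()

    # Largest tables first, so their long index builds start early.
    order = sorted(tables, key=lambda name: tables[name][0], reverse=True)
    pending, outstanding = {}, 0
    for table in order:
        chunks = tables[table][1]
        pending[table] = len(chunks)
        jobs = [("load", table, c) for c in chunks] or [("index", table, None)]
        for job in jobs:
            tasks.put(job)
            outstanding += 1

    while outstanding:
        kind, table, _ = finished.get()
        outstanding -= 1
        if kind == "load":
            pending[table] -= 1
            if pending[table] == 0:   # last chunk loaded: build indexes
                tasks.put(("index", table, None))
                outstanding += 1
        elif kind == "index":         # indexes built: add constraints
            tasks.put(("constraints", table, None))
            outstanding += 1

    for _ in workers:
        tasks.put(None)
    for w in workers:
        w.join()
```

Each index job occupies a single worker, so while one thread builds the indexes of a large table the others remain free to load chunks of the remaining tables.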

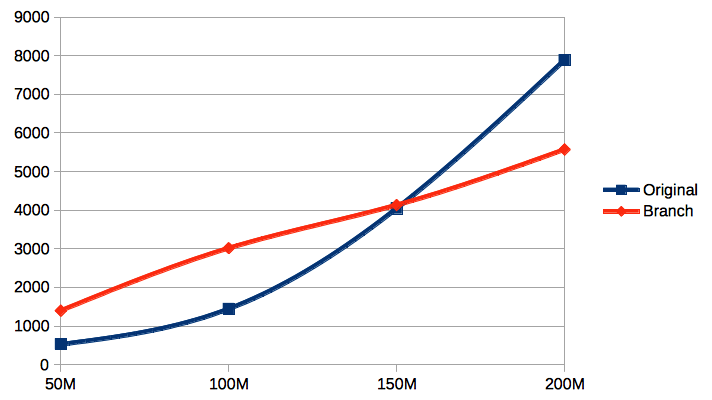

I ran multiple imports of a single table on an AWS m1.xlarge machine, comparing myloader with this branch, and found that with large indexes the times were:

As you can see, with fewer than 150M rows, importing the data and then creating the indexes takes longer than importing the table with its indexes all at once. But everything changes after 150M rows: importing 200M rows takes 64 minutes more with myloader but just 24 minutes with the new branch.

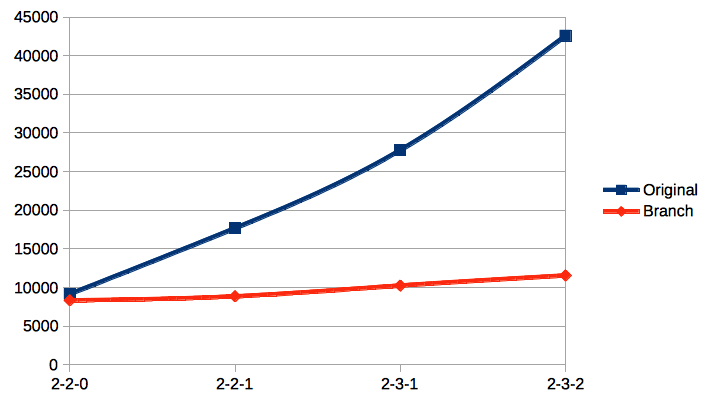

On a table of 200M rows with an integer primary key and 9 integer columns, you can see how the time increases as the indexes get larger:

Where:

- 2-2-0: two 1-column and two 2-column indexes

- 2-2-1: two 1-column, two 2-column, and one 3-column index

- 2-3-1: two 1-column, three 2-column, and one 3-column index

- 2-3-2: two 1-column, three 2-column, and two 3-column indexes

Conclusion

This branch can only import all tables using the same strategy. With this new logic in myloader, however, a future version could import each table with the strategy that suits it best, considerably reducing the time of the restore.

Published at DZone with permission of Peter Zaitsev, DZone MVB. See the original article here.
