Importance of Kubernetes in IoT Applications

With Kubernetes, development teams can quickly validate, roll out, and deploy changes to IoT services.


Kubernetes is a platform for deploying and managing cloud-native applications. Many IoT devices and products are connected to cloud applications, so there is a growing need to build IoT applications with Kubernetes.

IoT analytics is moving from the cloud to the edge for reasons of security, latency, autonomy, and cost. However, distributing and managing workloads across several hundred edge nodes can be complex. This creates the need for a lightweight, production-grade solution such as Kubernetes to distribute and manage workloads on edge devices.

What Is Kubernetes?

Kubernetes, or K8s, is a container orchestration system that helps developers deploy, scale, and manage cloud-native applications with little hassle. Containerization, in turn, simplifies the lifecycle of cloud-native applications.

How Kubernetes Works

A working Kubernetes deployment is called a cluster. A Kubernetes cluster can be thought of as having two parts: the control plane and the nodes.

In Kubernetes, each node is its own Linux environment, which can be either a physical or a virtual machine. Each node runs pods, which are made up of containers.

The control plane is mainly responsible for maintaining the desired state of the cluster, such as which applications are running and which container images they use. The compute machines (the nodes) are what actually run the applications and workloads.
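
The idea of a control plane continuously driving a cluster toward its desired state is often described as a reconciliation loop. The Python sketch below is a deliberately simplified, hypothetical model of that idea: `reconcile` and `apply_actions` are illustrative names, not Kubernetes APIs, and real controllers work with API objects and events rather than plain dictionaries of replica counts.

```python
from typing import Dict, List, Tuple

# Hypothetical model: maps an application name to its number of running replicas.
State = Dict[str, int]
Action = Tuple[str, str, int]  # (action, app, count)


def reconcile(desired: State, actual: State) -> List[Action]:
    """Return the steps needed to move the actual state toward the desired state."""
    actions: List[Action] = []
    for app in sorted(set(desired) | set(actual)):
        want = desired.get(app, 0)
        have = actual.get(app, 0)
        if want > have:
            actions.append(("start", app, want - have))
        elif want < have:
            actions.append(("stop", app, have - want))
    return actions


def apply_actions(actual: State, actions: List[Action]) -> State:
    """Simulate the nodes carrying out the control plane's instructions."""
    result = dict(actual)
    for action, app, count in actions:
        result[app] = result.get(app, 0) + (count if action == "start" else -count)
        if result[app] == 0:
            del result[app]  # nothing left running for this app
    return result


desired = {"sensor-ingest": 3, "analytics": 2}
actual = {"sensor-ingest": 1, "legacy-gateway": 1}
plan = reconcile(desired, actual)
print(plan)
# [('start', 'analytics', 2), ('stop', 'legacy-gateway', 1), ('start', 'sensor-ingest', 2)]
print(reconcile(desired, apply_actions(actual, plan)))  # []
```

Once the actions are applied, a second pass finds nothing left to do, which is the steady state a reconciliation loop aims for.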

Kubernetes runs on top of an operating system, such as Linux, and communicates with the pods of containers running on the nodes.

The Kubernetes control plane accepts instructions from an administrator (or DevOps team) and relays them to the compute machines.

The control plane works with a number of services, including the scheduler, to automatically pick the node best suited to a given task. It then allocates the necessary resources and assigns the work to pods on that node.
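
The Kubernetes scheduler picks a node in two phases: it first filters out nodes that cannot run a pod, then scores the remaining ones. The Python sketch below mimics those two phases with a single scoring rule, loosely modeled on a "least allocated" strategy that prefers the node with the most headroom left. The node names, resource figures, and function names are hypothetical, and the real scheduler combines many plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int   # CPU capacity in millicores
    cpu_used_m: int       # CPU already requested by pods on this node
    mem_capacity_mi: int  # memory capacity in MiB
    mem_used_mi: int      # memory already requested by pods on this node


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int


def fits(node: Node, pod: PodRequest) -> bool:
    """Filtering phase: the node must have enough unrequested CPU and memory."""
    return (node.cpu_capacity_m - node.cpu_used_m >= pod.cpu_m
            and node.mem_capacity_mi - node.mem_used_mi >= pod.mem_mi)


def score(node: Node, pod: PodRequest) -> float:
    """Scoring phase: average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_capacity_m - node.cpu_used_m - pod.cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_used_mi - pod.mem_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(nodes: List[Node], pod: PodRequest) -> Optional[str]:
    """Return the name of the best-scoring feasible node, or None if no node fits."""
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("edge-a", 2000, 1500, 4096, 1024),
    Node("edge-b", 4000, 1000, 8192, 2048),
    Node("edge-c", 1000, 200, 2048, 512),
]
print(schedule(nodes, PodRequest(cpu_m=500, mem_mi=512)))   # edge-b
print(schedule(nodes, PodRequest(cpu_m=3500, mem_mi=512)))  # None
```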

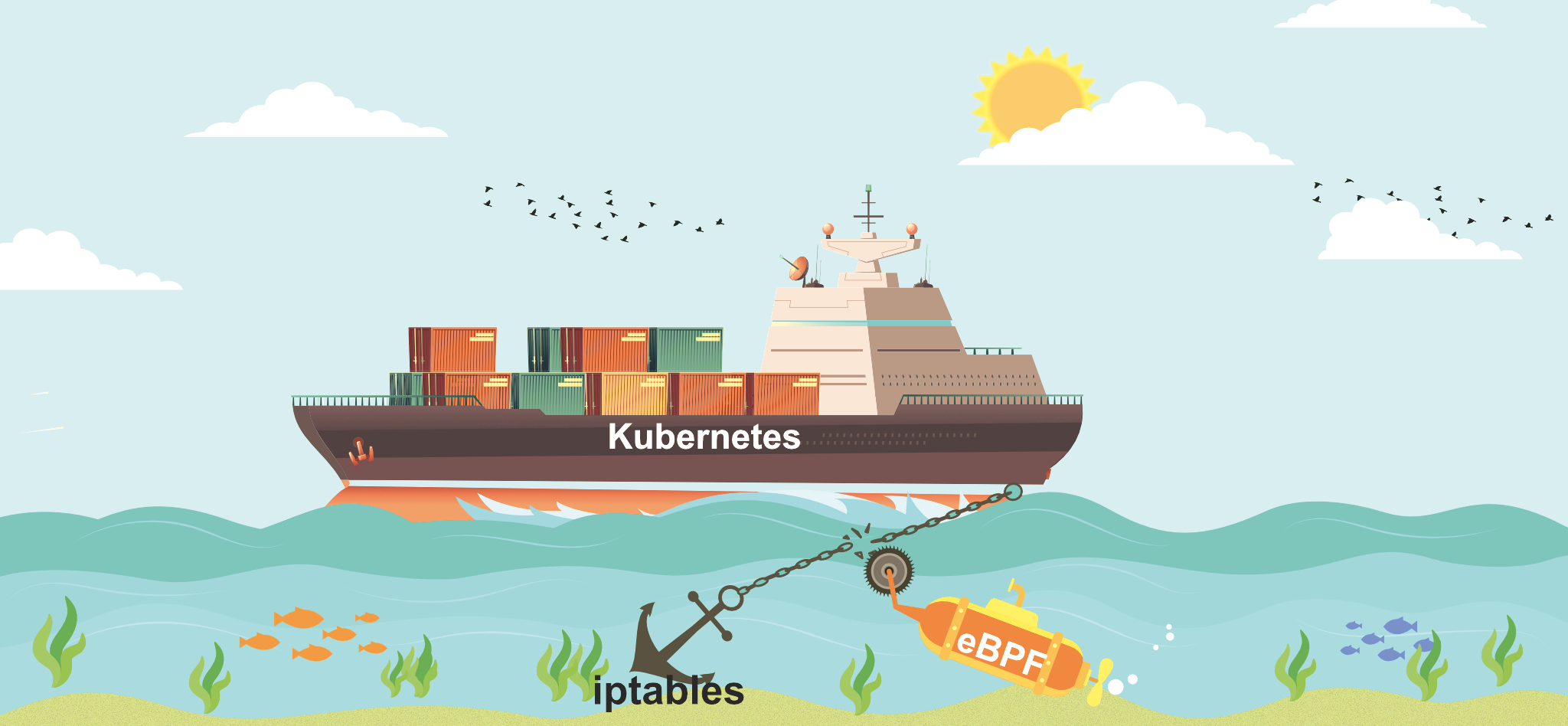

The name Kubernetes comes from a Greek word that translates to “captain” or “sailing master,” which inspired the container ship analogy. The captain is in charge of the ship; likewise, Kubernetes acts as the captain, or orchestrator, of containers in the information technology space.

Imagine Docker containers as packing boxes. Boxes that need to go to the same destination should stay together and be loaded into the same shipping container. In this analogy, the packing boxes are Docker containers and the shipping containers are pods.

What we want for a cargo ship is for it to arrive safely at its destination and avoid the storms of the sea (the internet). Kubernetes, as the ship's captain, steers it along a smooth path, making sure that all the applications under its supervision are managed.

Kubernetes gives each pod its own IP address, while iptables rules let users control how network traffic is routed.

As shown in the figure, iptables can be replaced with eBPF in Kubernetes, for example by eBPF-based networking plugins.

Why Is Developing IoT Applications With Kubernetes Needed?

DevOps for IoT App Development With Kubernetes

IoT solutions must be able to swiftly deliver new features and upgrades to meet customer and market demands. Kubernetes gives DevOps teams a uniform deployment methodology that lets them quickly and automatically test and deploy new services. Through rolling updates, Kubernetes supports zero-downtime deployments, so mission-critical IoT solutions, such as those used in vital manufacturing operations, can be updated without disrupting processes and with minimal impact on customers and end users.
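
A Deployment's rolling update is bounded by two settings: `maxSurge` (how many extra pods may exist above the desired count) and `maxUnavailable` (how many pods may be missing from it). The Python simulation below is a simplified sketch that assumes new pods become ready the instant they are created; `rolling_update` is a hypothetical helper, not part of any Kubernetes client.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> List[Tuple[int, int]]:
    """Simulate replacing `replicas` old pods with new ones.

    Returns the (old, new) pod counts after each step. Assumes new pods are
    ready as soon as they are created, which real rollouts do not guarantee.
    """
    if max_surge == 0 and max_unavailable == 0:
        # With no surge and no unavailability allowed, the rollout cannot progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: never exceed replicas + max_surge pods in total.
        room = replicas + max_surge - (old + new)
        new += max(0, min(room, replicas - new))
        # Scale down: keep at least replicas - max_unavailable pods available.
        removable = (old + new) - (replicas - max_unavailable)
        old -= min(old, max(0, removable))
        steps.append((old, new))
    return steps


print(rolling_update(4, max_surge=1, max_unavailable=0))
# [(4, 0), (3, 1), (2, 2), (1, 3), (0, 4)]
print(rolling_update(3, max_surge=0, max_unavailable=1))
# [(3, 0), (2, 0), (1, 1), (0, 2), (0, 3)]
```

With `max_unavailable=0`, the number of running pods never drops below the desired count, which is what makes zero-downtime upgrades possible under these assumptions.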

Scalability in IoT Applications

Scalability, defined as a system's ability to handle a growing amount of work efficiently by using additional resources, remains a fundamental challenge for IoT developers and for many IoT solutions.

Handling countless device connections, sending huge volumes of data, and providing high-end services such as real-time analytics require a deployment infrastructure that can scale up and down flexibly with the demands of an IoT deployment. Kubernetes lets developers scale services up and down automatically.
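
One way Kubernetes scales automatically is the Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). The Python function below is a minimal sketch of that rule plus a clamp to minimum and maximum replica counts; it ignores details such as stabilization windows and pods that are not yet ready, and the function name and sample numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the Horizontal Pod Autoscaler's core scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas averaging 90% CPU against a 60% target: scale up to 5.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
# 4 replicas averaging 20% CPU against a 60% target: scale down to 2.
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
# Within the 10% tolerance: stay at 4.
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```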

Highly Available System

Many IoT solutions are business- or mission-critical systems that must be highly reliable and available. For example, an IoT solution supporting a hospital's emergency healthcare facility needs to be available at all times. Kubernetes gives developers the tools they need to deploy highly available services.

The Kubernetes architecture also lets workloads run independently of one another, so they can be restarted with negligible impact on end users.
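
When a container keeps failing, the kubelet restarts it with an exponential back-off delay that, according to the Kubernetes documentation, starts at 10 seconds, doubles each time, and is capped at five minutes; the timer resets once the container has run for 10 minutes without problems. The short Python sketch below only reproduces that default delay sequence; `restart_delays` is a hypothetical helper, and defaults may differ between Kubernetes versions.

```python
from typing import List


def restart_delays(restarts: int, initial_s: int = 10, cap_s: int = 300) -> List[int]:
    """Delay in seconds before each of the first `restarts` restarts (doubling, capped)."""
    return [min(initial_s * 2 ** attempt, cap_s) for attempt in range(restarts)]


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

The cap keeps a repeatedly failing container from restarting in a tight loop while still retrying it indefinitely.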

Efficient Use of Cloud Resources

Kubernetes increases efficiency by maximizing the use of cloud resources. An IoT cloud integration is typically a collection of linked services that handle device connectivity and management, data ingestion, data integration, analytics, and integration with IT and OT systems, among other things. These services frequently run on public cloud providers such as Amazon Web Services or Microsoft Azure.

Making optimum use of cloud provider resources is therefore critical to the total cost of deploying and managing these services. Kubernetes adds an abstraction layer on top of the underlying virtual machines, so administrators can focus on deploying IoT services across the most appropriate number of VMs rather than running a single service on each VM.
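
Packing several services onto a shared pool of VMs is essentially a bin-packing problem. The Python sketch below uses the classic first-fit-decreasing heuristic to show why sharing VMs can need fewer machines than running one service per VM. It illustrates the idea under assumed CPU figures and is not the algorithm Kubernetes itself uses (the scheduler places pods one at a time); all service names and numbers are hypothetical.

```python
from typing import Dict, List


def pack_services(demands_m: Dict[str, int], vm_capacity_m: int) -> List[List[str]]:
    """Assign services (CPU demand in millicores) to VMs using first-fit decreasing."""
    too_big = [s for s, d in demands_m.items() if d > vm_capacity_m]
    if too_big:
        raise ValueError(f"services larger than one VM: {too_big}")
    vms: List[List[str]] = []
    used: List[int] = []
    # Place the largest services first; ties are broken by name for determinism.
    for service, demand in sorted(demands_m.items(), key=lambda kv: (-kv[1], kv[0])):
        for i, load in enumerate(used):
            if load + demand <= vm_capacity_m:
                vms[i].append(service)
                used[i] += demand
                break
        else:
            # No existing VM has room: start a new one.
            vms.append([service])
            used.append(demand)
    return vms


demands = {"ingest": 2500, "analytics": 2000, "device-registry": 1500,
           "dashboard": 1000, "alerts": 500, "ota-updates": 500}
print(pack_services(demands, vm_capacity_m=4000))
# [['ingest', 'device-registry'], ['analytics', 'dashboard', 'alerts', 'ota-updates']]
```

Here six services fit on two 4-core VMs instead of six.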

IoT Edge Deployment

Deploying IoT services to the edge of the network is an important development in the IoT business. For example, to improve the responsiveness of a predictive maintenance solution, it might be more efficient to deploy data analytics and machine learning services closer to the equipment being monitored.

Running IoT services in a distributed and federated way creates a new management problem for system administrators and developers. Kubernetes, however, provides a single framework for launching IoT services at the edge. In fact, a new Kubernetes IoT Working Group is looking into how Kubernetes can provide a standardized deployment architecture for the IoT cloud and the IoT edge.
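
A common way to target edge hardware from within that single framework is to label the edge nodes and give pods a `nodeSelector`, which only allows a pod onto nodes that carry every listed label. The Python sketch below models that matching rule; the label keys and values (`tier`, `site`) and the node names are hypothetical examples.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(node_labels: Labels, node_selector: Labels) -> bool:
    """A node qualifies only if it has every key/value pair in the selector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(nodes: Dict[str, Labels], node_selector: Labels) -> List[str]:
    """Names of the nodes that a pod with this nodeSelector may be scheduled on."""
    return sorted(name for name, labels in nodes.items() if matches(labels, node_selector))


cluster = {
    "cloud-1": {"tier": "cloud"},
    "gw-plant-3": {"tier": "edge", "site": "plant-3"},
    "gw-plant-7": {"tier": "edge", "site": "plant-7"},
}
print(eligible_nodes(cluster, {"tier": "edge"}))                     # ['gw-plant-3', 'gw-plant-7']
print(eligible_nodes(cluster, {"tier": "edge", "site": "plant-3"}))  # ['gw-plant-3']
```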

Why Do We Require Load Balancing in IoT Applications?

Load balancing is the systematic and efficient distribution of network or application traffic across multiple servers in a server farm. A load balancer sits between client devices and backend servers, receiving inbound requests and distributing them to any available server capable of handling them.

The most basic form of load balancing in Kubernetes is load distribution, which is easy to implement at the dispatch level. Kubernetes offers more than one method of load distribution, all running through a component called kube-proxy, which manages the virtual IPs used by Services; examples are its iptables and IPVS modes.
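
In kube-proxy's iptables mode, traffic to a Service is spread across its endpoints at random: the generated rules match the first of n endpoints with probability 1/n, the next with 1/(n-1), and so on, with the last as a fallback, so each endpoint receives an equal share. In IPVS mode, the default scheduling algorithm is round-robin. The Python sketch below simulates both selection strategies; the endpoint addresses and function names are hypothetical, and in a real cluster the work happens in kernel rules, not in Python.

```python
import random
from collections import Counter
from itertools import cycle
from typing import Iterator, List


def iptables_style_pick(endpoints: List[str], rng: random.Random) -> str:
    """Walk the endpoints, matching endpoint i with probability 1/(n - i).

    The last endpoint is the unconditional fallback, so every endpoint is
    chosen with overall probability 1/n.
    """
    n = len(endpoints)
    for i, endpoint in enumerate(endpoints[:-1]):
        if rng.random() < 1.0 / (n - i):
            return endpoint
    return endpoints[-1]


def round_robin(endpoints: List[str]) -> Iterator[str]:
    """Cycle through the endpoints in order."""
    return cycle(endpoints)


pods = ["10.244.1.5:8080", "10.244.2.7:8080", "10.244.3.9:8080"]

rng = random.Random(42)
counts = Counter(iptables_style_pick(pods, rng) for _ in range(30_000))
print(counts)  # roughly 10,000 picks per pod

rr = round_robin(pods)
print([next(rr) for _ in range(4)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.3.9:8080', '10.244.1.5:8080']
```

Random selection needs no shared state between rules, while round-robin gives a perfectly even rotation at the cost of tracking the next endpoint.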

The Driving Force Behind the Adoption of Cloud-Native Platforms Such as Kubernetes

Many organizations today are going through a digital transformation, in which their primary aim is to change the way they connect with their customers, suppliers, and partners. They are taking advantage of innovations in technologies such as IoT platforms, IoT data analytics, and machine learning to modernize their enterprise IT and OT systems. They realize that the complexity of developing and deploying new digital products requires new development processes, so they turn to agile development and infrastructure tools such as Kubernetes.

Kubernetes has recently emerged as the most widely used standard container orchestration framework for cloud-native deployments. It has become the primary choice for development teams migrating to a microservices architecture, and it also supports the DevOps practices of continuous integration (CI) and continuous deployment (CD).

In fact, Kubernetes addresses many of the complex challenges that development teams face when building and deploying IoT applications, which is why building IoT applications with microservices has become a trend.

Upcoming Trends in Kubernetes for IoT App Development

Kubernetes in Production Operation Version 2.0

After successfully deploying Kubernetes in production environments with remarkable agility and flexibility, companies in the production and manufacturing space are looking to scale up workloads within Kubernetes clusters further to meet varying requirements.

Kubernetes-Native Software Boom

In the early days of Kubernetes, the software that needed to run in containers already existed, with its functional purpose and architectural elements already set. To take full advantage of Kubernetes and better suit modern operating models, that software has to be adapted and tailored to individual needs. Kubernetes has now reached a point in its development where developers can build applications directly on the platform. As a result, Kubernetes will increasingly shape modern application architecture in the coming years.

Kubernetes on the Edge

KubeEdge is an exciting project that extends the management and deployment capabilities of Kubernetes and Docker to the edge, allowing packaged applications to run smoothly on edge devices.

Overall, the Kubernetes community is already expanding and advancing rapidly. These advances have made it possible to build cloud-native IoT solutions that are scalable, reliable, and readily deployable in the most challenging environments.

Published at DZone with permission of Vidushi Gupta.

Opinions expressed by DZone contributors are their own.
