Implementing EKS Multi-Tenancy Using Capsule (Part 4)

Take a deep dive into security policy verification use cases by configuring security policy engines like Kyverno or OPA Gatekeeper.


In the previous articles (Part 1, Part 2, and Part 3) of this series, we learned what multi-tenancy is, the different types of tenant isolation models, and the challenges with Kubernetes-native services. We also installed the Capsule framework on AWS EKS and created single or multiple tenants on an EKS cluster, with single or multiple AWS IAM users as tenant owners. Finally, we explored how to configure namespace options, resource quotas, and limit ranges, and how to assign network policies to tenants using the Capsule framework.

In this part, we will take a deep dive into security policy verification use cases by configuring security policy engines with Capsule.

About OPA Gatekeeper and Kyverno

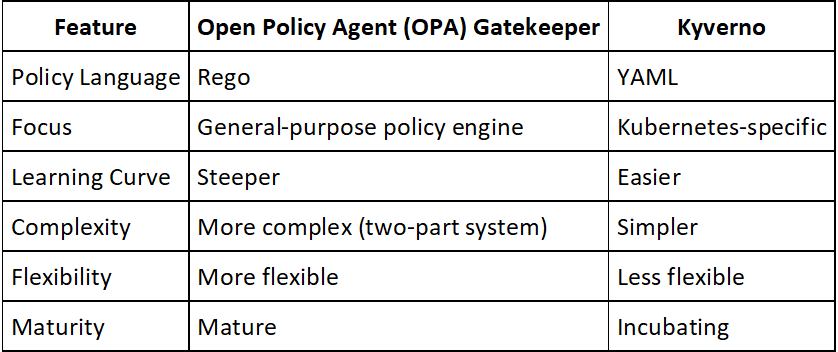

OPA Gatekeeper and Kyverno are two widely used policy engines for enforcing rules and configurations on Kubernetes resources in a multi-tenant environment. Here's a breakdown of their key differences to help you choose the right tool:

Open Policy Agent (OPA) Gatekeeper

Concept

Gatekeeper leverages OPA, a general-purpose policy engine, to enforce policies written in Rego, a declarative policy language.

Strengths

- Flexibility: OPA's Rego language offers powerful capabilities for expressing complex policies and leveraging external data sources for decision-making.

- Maturity: OPA is a mature project with a large community and extensive integrations with other security tools.

Weaknesses

- Complexity: Rego has a steeper learning curve than Kyverno's YAML-based policies.

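- Two-part system: Gatekeeper layers its own Kubernetes components (ConstraintTemplates and Constraints) on top of the OPA engine, so every policy involves both parts, which adds some complexity.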

Kyverno

Concept

Kyverno is a purpose-built policy engine for Kubernetes. Policies are written as Kubernetes resources in YAML, a declarative format familiar to many Kubernetes users.

Strengths

- Ease of use: YAML-based policies are easier to learn and write compared to Rego, making Kyverno more accessible for beginners.

- Kubernetes-centric: Kyverno is specifically designed for Kubernetes, offering deep integration with Kubernetes resources and features.

Weaknesses

- Less flexibility: YAML policies might not be as expressive as Rego for very complex scenarios.

- Maturity: Kyverno is a CNCF incubating project and may have a smaller ecosystem than OPA, which is a graduated CNCF project.

Key Differences

Here's a table summarizing the key differences:

Choosing the right tool depends on your specific needs.

- Use OPA Gatekeeper if:
  - You need highly flexible and expressive policies.
  - You already have experience with Rego or OPA.
  - You value a mature project with a large community.

- Use Kyverno if:
  - You prioritize ease of use and a lower learning curve.
  - You want a tool specifically designed for Kubernetes policies.
  - You prefer a simpler, single-component solution.

Configuring Security Policy Engines in Capsule

Capsule can be used with both the OPA Gatekeeper and Kyverno security policy engines.

Kyverno as Security Policy Engine

Run the following commands against the EKS cluster to install Kyverno and apply the Kyverno multi-tenancy policies used with Capsule.

```shell
# Install Kyverno
kubectl create -f https://github.com/kyverno/kyverno/releases/download/v1.10.0/install.yaml

# Apply the Kyverno multi-tenancy policies (from the kubernetes-sigs/multi-tenancy benchmarks)
kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/multi-tenancy/master/benchmarks/kubectl-mtb/test/policies/kyverno/all_policies.yaml
```

The second command installs all of the following security policies needed for multi-tenancy:

- Block access to cluster resources

- Block access to multitenant resources

- Block access to other tenant resources

- Configure namespace resource quotas

- Block modification of resource quotas

- Block use of NodePort services

- Block network traffic across tenant namespaces

- Block the use of existing PVs

- Block add capabilities

- Require run as a non-root user

- Block privileged containers

- Block privilege escalation

- Block the use of host path volumes

- Block the use of host networking and ports

- Block use of host PID

- Block the use of host IPC

- Require PersistentVolumeClaim for storage
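To give a sense of what these policies look like, here is a minimal sketch of a Kyverno ClusterPolicy in the spirit of "Block use of NodePort services". It is not copied from all_policies.yaml: the policy and rule names (disallow-nodeport-services, block-nodeport) are hypothetical, and the sketch assumes Kyverno v1.10 with the kyverno.io/v1 API.

```yaml
# Hypothetical sketch, not taken from all_policies.yaml.
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disallow-nodeport-services   # hypothetical name
spec:
  # Enforce rejects non-compliant requests; Audit would only report them.
  validationFailureAction: Enforce
  # Also evaluate existing resources and report violations.
  background: true
  rules:
    - name: block-nodeport           # hypothetical rule name
      match:
        any:
          - resources:
              kinds:
                - Service
      validate:
        message: "Services of type NodePort are not allowed."
        pattern:
          spec:
            # =(type) is an equality anchor: if spec.type is present,
            # its value must not be NodePort.
            =(type): "!NodePort"
```

Because the policy is an ordinary Kubernetes resource, it can be applied with `kubectl apply -f` and listed with `kubectl get clusterpolicies`, which is a large part of what makes Kyverno approachable for Kubernetes users.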

OPA as Security Policy Engine

To install Gatekeeper, run the following command:

```shell
kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/master/deploy/gatekeeper.yaml
```

With Gatekeeper, each policy has to be configured separately. The Gatekeeper policies can be found here, and you can refer to the earlier link to learn how to use Gatekeeper.
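To illustrate what configuring a single Gatekeeper policy involves, the following sketch blocks NodePort services. It follows Gatekeeper's two-part pattern: a ConstraintTemplate that carries the Rego logic, and a Constraint that applies it to selected resources. The names (k8sblocknodeport, K8sBlockNodePort, block-node-port) are illustrative and modeled on the community gatekeeper-library; the Rego uses the classic (pre-v1) syntax accepted by the template's `rego` field.

```yaml
# Hypothetical sketch modeled on the gatekeeper-library "block NodePort" policy.
# Part 1: the ConstraintTemplate defines a new constraint kind and its Rego logic.
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: k8sblocknodeport
spec:
  crd:
    spec:
      names:
        kind: K8sBlockNodePort
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8sblocknodeport

        # Report a violation for any Service of type NodePort.
        violation[{"msg": msg}] {
          input.review.kind.kind == "Service"
          input.review.object.spec.type == "NodePort"
          msg := "Services of type NodePort are not allowed"
        }
---
# Part 2: the Constraint instantiates the template and selects what it applies to.
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sBlockNodePort
metadata:
  name: block-node-port
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Service"]
```

Apply the ConstraintTemplate before the Constraint: the K8sBlockNodePort kind only exists once Gatekeeper has processed the template and created the corresponding CRD.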

Security Policy Validations Using Kyverno

Let us verify the "Block access to other tenant resources" security policy.

Each tenant has its own set of resources, such as namespaces, service accounts, secrets, pods, services, etc. Tenants should not be allowed to access each other's resources.
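Much of this isolation rests on ordinary Kubernetes RBAC: when a tenant owner creates a namespace, Capsule creates RoleBindings in that namespace for the tenant's owners, and a user with no such binding is refused by the API server. The sketch below shows roughly what such a binding looks like for team1. The binding name is hypothetical (Capsule generates and names its own), and binding the built-in `admin` ClusterRole reflects Capsule's default owner roles as we understand them, so check the real objects with `kubectl get rolebindings -n team1-production` on your Capsule version.

```yaml
# Illustrative only: Capsule creates and names these bindings itself.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: team1-owner-admin          # hypothetical name
  namespace: team1-production
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: admin                      # built-in Kubernetes "admin" ClusterRole
subjects:
  # Only team1's owner is bound in team1's namespaces. ganesha (owner of team2)
  # has no binding here, so the API server answers ganesha with 403 Forbidden.
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: shiva
```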

Log in as the cluster administrator and create a new tenant, team1, with shiva as the tenant owner:

```shell
aws eks --region us-east-1 update-kubeconfig --name eks-cluster1

kubectl apply -f team1.yaml
```

Where team1.yaml contains:

```yaml
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: team1
spec:
  owners:
    - kind: User
      name: shiva
```

Create a new tenant, team2, with ganesha as the tenant owner:

```shell
kubectl apply -f team2.yaml
```

Where team2.yaml contains:

```yaml
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: team2
spec:
  owners:
    - kind: User
      name: ganesha
```

As the team1 tenant owner, run the following commands to create a namespace in the tenant:

```shell
aws eks --region us-east-1 update-kubeconfig --name eks-cluster1 --profile shiva

kubectl create ns team1-production
```

As the team2 tenant owner, run the following commands to create a namespace in the tenant:

```shell
aws eks --region us-east-1 update-kubeconfig --name eks-cluster1 --profile ganesha

kubectl create ns team2-production
```

Now, still using the ganesha profile (ganesha is the owner of team2), try to access the resources of team1 by running the following command:

```shell
kubectl get serviceaccounts --namespace team1-production
```

You should receive an error message like the one below:

```text
Error from server (Forbidden): serviceaccounts is forbidden: User "ganesha" cannot list resource "serviceaccounts" in API group "" in the namespace "team1-production"
```

Now, using the shiva profile (shiva is the owner of team1), try to access the resources of team2 by running the following commands:

```shell
aws eks --region us-east-1 update-kubeconfig --name eks-cluster1 --profile shiva

kubectl get serviceaccounts --namespace team2-production
```

You should receive a similar error message:

```text
Error from server (Forbidden): serviceaccounts is forbidden: User "shiva" cannot list resource "serviceaccounts" in API group "" in the namespace "team2-production"
```

Other security policies can be verified by following the steps mentioned here.
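As one example of checking another policy, the hypothetical pod below requests a privileged container in team1-production. Assuming the "Block privileged containers" Kyverno policy is installed and configured to enforce (rather than only audit), applying this manifest as the team1 owner (for example with `kubectl apply -f privileged-test.yaml`, a hypothetical file name) is expected to be rejected at admission. The pod name is an assumption for illustration, and the exact error text depends on the installed policy.

```yaml
# Hypothetical test pod: expected to be denied if privileged containers are blocked.
apiVersion: v1
kind: Pod
metadata:
  name: privileged-test            # hypothetical name
  namespace: team1-production
spec:
  containers:
    - name: nginx
      image: nginx
      securityContext:
        privileged: true           # the setting the policy is expected to reject
```

The same pod may also trip other policies in the set, such as the non-root requirement, because the nginx image runs as root by default; to isolate a single policy, vary only the field that policy checks.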

So far in this series, we have covered everything from installing Capsule to creating tenants, configuring resource quotas, and applying network and security policies.

Now it's time to clean up.

Uninstalling Capsule

If you installed Capsule with Helm, uninstall the Helm release to delete all the operator resources, such as the Deployment, Service, RoleBinding, etc.:

```shell
helm uninstall -n capsule-system capsule
```

Unfortunately, Helm doesn't manage the lifecycle of Custom Resource Definitions (CRDs), so they are not removed when the release is uninstalled. Additional details can be found here.

The remaining cleanup must be done manually, as follows:

```shell
# Stop the Capsule controller, then delete its Deployment
kubectl -n capsule-system scale deploy capsule-controller-manager --replicas=0
kubectl -n capsule-system get deployments
kubectl -n capsule-system get svc
kubectl -n capsule-system delete deployment capsule-controller-manager

# Remove the admission webhooks so API requests are no longer sent to Capsule
kubectl get mutatingwebhookconfigurations
kubectl delete mutatingwebhookconfigurations capsule-mutating-webhook-configuration
kubectl get validatingwebhookconfigurations
kubectl delete validatingwebhookconfigurations capsule-validating-webhook-configuration

# Delete the Capsule CRDs (this also deletes all objects of those kinds, e.g. Tenants)
kubectl get crds
kubectl delete crd capsuleconfigurations.capsule.clastix.io
kubectl delete crd globaltenantresources.capsule.clastix.io
kubectl delete crd tenantresources.capsule.clastix.io
kubectl delete crd tenants.capsule.clastix.io

# Delete the cluster-wide RBAC objects created for Capsule
kubectl get clusterrolebindings
kubectl delete clusterrolebinding capsule-manager-rolebinding
kubectl delete clusterrolebinding capsule-proxy-rolebinding
kubectl get clusterroles
kubectl delete clusterrole capsule-namespace-deleter
kubectl delete clusterrole capsule-namespace-provisioner

# Finally, delete the Capsule namespace
kubectl delete ns capsule-system
```

Conclusions

- Namespace options, resource quotas, and limit ranges are inherited by all of the tenant's namespaces.

- Network policies defined at the tenant level are applied to all of the tenant's namespaces. Capsule also allows additional network policies to be defined at the namespace level.

- Host isolation features, such as blocking access to the underlying host infrastructure (host IPC, host PID, host path volumes, host networking and ports, etc.), have been verified.

- Control plane isolation features, such as blocking access to cluster resources, multi-tenant resources, and other tenants' resources, have been verified.

- Data isolation features, such as blocking a tenant from mounting existing volumes, have been verified.

- Security policy engines such as Kyverno and Gatekeeper, and network policy engines such as Calico and Cilium, can be integrated with the Capsule framework.

- Capsule allows internal namespace users and user groups to be added as tenant owners. However, on EKS, only AWS IAM users can be added as tenant owners, not IAM service roles or IAM user groups. This has been confirmed by the Capsule team here.
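For reference, a Tenant's owners list accepts more than one subject kind. The sketch below shows User, Group, and ServiceAccount owners in the capsule.clastix.io/v1beta2 API; the group name (team1-admins) and service account (robot in team1-production) are hypothetical, and on EKS, as noted above, only the entry backed by an IAM user was found to work as an owner.

```yaml
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: team1
spec:
  owners:
    # Kubernetes username mapped from the IAM user "shiva" (works on EKS).
    - kind: User
      name: shiva
    # Hypothetical Kubernetes group; IAM user groups could not be used this way on EKS.
    - kind: Group
      name: team1-admins
    # Hypothetical in-cluster service account, referenced by its full username.
    - kind: ServiceAccount
      name: system:serviceaccount:team1-production:robot
```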

Opinions expressed by DZone contributors are their own.
