Implementing EKS Multi-Tenancy Using Capsule (Part 1)

Understand multi-tenancy in Kubernetes, the main tenant isolation models, and how to get started with installing Capsule.


What Is Multi-Tenancy?

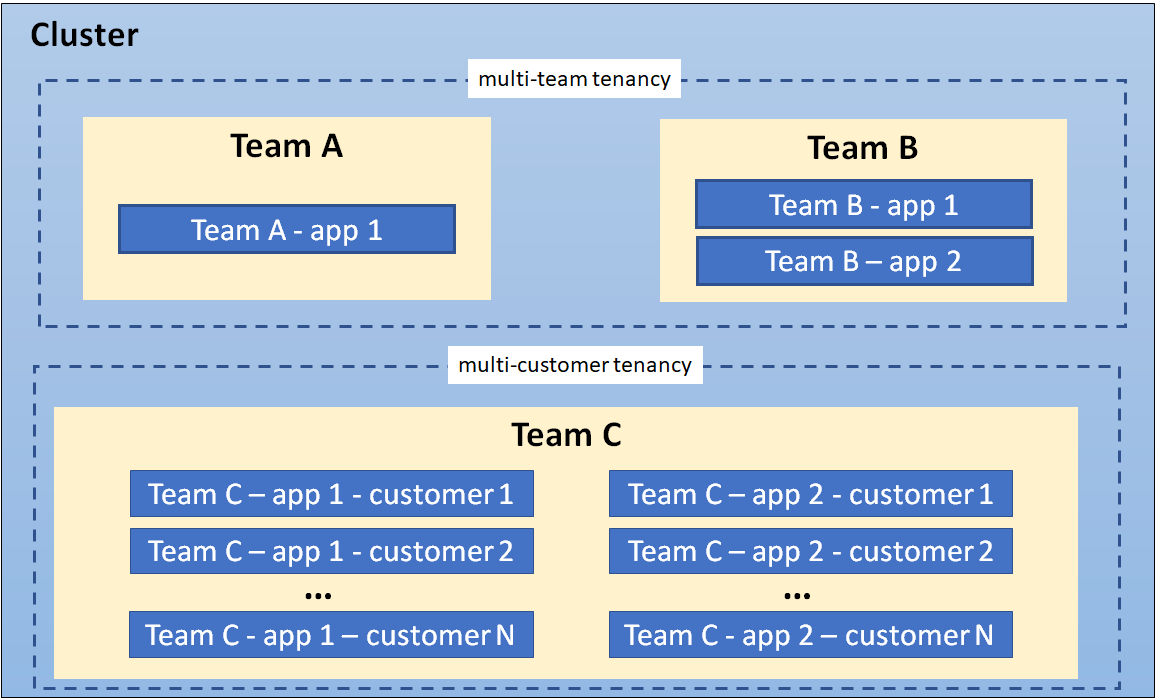

Multi-tenancy lets an organization share cluster infrastructure among:

- Multiple teams within the organization

- Multiple customers of the organization

- Multiple environments of an application

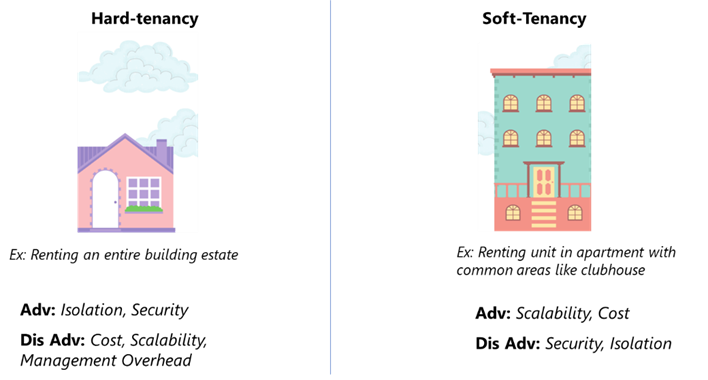

Shared clusters save costs and simplify administration. Security and isolation are key factors to consider when cluster resources are to be shared. Two prominent isolation models to achieve multi-tenancy are hard and soft tenancy models.


The key difference between these models lies in the level of isolation provided between tenants.

Soft tenancy provides a lower level of isolation: it uses mechanisms such as namespaces, quotas, and limits to restrict tenant access to resources and to keep tenants from interfering with each other. Hard tenancy provides stronger isolation and often involves separate clusters or virtual machines for each tenant, with minimal shared resources.
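As an illustration of the soft-tenancy mechanisms mentioned above, the sketch below prints a Namespace and a ResourceQuota manifest for a single tenant. The function name, the tenant name, and the numeric limits are hypothetical placeholders and do not come from any Capsule setup; treat it as a minimal example of namespace-plus-quota isolation, not a recommended configuration.

```shell
# Sketch: print a Namespace and a ResourceQuota manifest for one tenant.
# All names and limits passed in are illustrative assumptions.
tenant_quota_manifest() {
  ns="$1"; cpu="$2"; mem="$3"; pods="$4"
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ${ns}
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: ${ns}-quota
  namespace: ${ns}
spec:
  hard:
    requests.cpu: "${cpu}"
    requests.memory: ${mem}
    pods: "${pods}"
EOF
}

# Example (needs cluster access):
#   tenant_quota_manifest team-a 4 8Gi 20 | kubectl apply -f -
```

With only these two objects, nothing ties several namespaces of the same team together, which is the gap that tenant-level tooling addresses.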

Kubernetes Native Services in Multi-Tenant Implementations

Kubernetes has a built-in namespace model to create logical partitions of the cluster as isolated slices. Though basic levels of tenancy can be achieved, using namespaces has some limitations:

- Implementing advanced multi-tenancy scenarios, like Hierarchical Namespaces (HNS) or exposing Container as a Service (CaaS) becomes complicated because of the flat structure of Kubernetes namespaces.

- Namespaces have no common concept of ownership. Tracking and administration challenges persist if the team controls multiple namespaces.

- Enforcing resource quotas and limits fairly across all tenants requires additional effort.

- Only highly privileged users can create namespaces. This means that whenever a team wants a new namespace, they must raise a ticket to the cluster administrator. While this is probably acceptable for small organizations, it generates unnecessary toil as the organization grows.
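The last limitation can be observed on a cluster with `kubectl auth can-i`, which exits with a non-zero status when the answer is "no". The helper below is a small sketch; the function name and the user name in the example are hypothetical, and impersonating a user with `--as` itself requires sufficient privileges.

```shell
# Returns 0 when the given user is allowed to create namespaces,
# non-zero otherwise. Output is suppressed; only the exit status is used.
can_create_namespaces() {
  user="$1"
  kubectl auth can-i create namespaces --as "$user" >/dev/null 2>&1
}

# Example (needs cluster access; "alice" is a placeholder user):
#   if can_create_namespaces alice; then
#     echo "alice can create namespaces"
#   else
#     echo "alice must ask a cluster admin"
#   fi
```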

To solve this problem, Kubernetes provides the Hierarchical Namespace Controller (HNC), which allows the user to organize namespaces into hierarchies. Namespaces are arranged in a tree structure, where child namespaces inherit resources and policies from parent namespaces. HNC supports a soft-tenancy approach that builds on existing namespaces, but it is a newer project that is still incubating in the Kubernetes community.

Other widely used projects that provide similar capabilities include Capsule, Rafay, and Kiosk.

In this article series, we will discuss implementing multi-tenant solutions using the Capsule framework. Capsule is a commercially supported open-source project that implements soft multi-tenancy inside a single shared cluster. Rather than giving each tenant its own control plane, it groups namespaces into a Tenant resource and enforces policies at that level, so all tenants share the cluster's API server and etcd.

Capsule is one of the platforms recommended by the Kubernetes community for multi-tenancy.

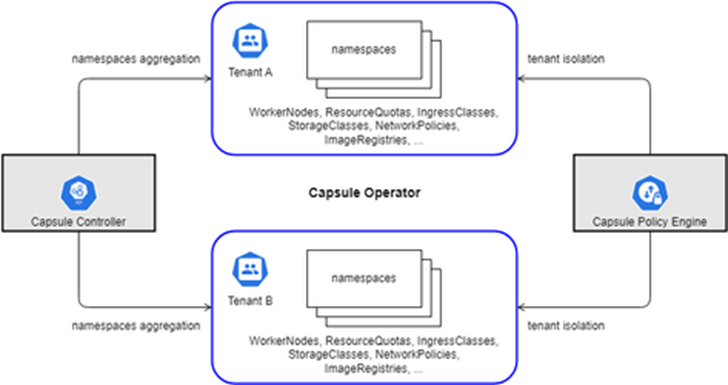

Major components of the Capsule framework include:

- Capsule controller: Aggregates multiple namespaces in a lightweight abstraction called Tenant.

- Capsule policy engine: Achieves tenant isolation by the various Network and Security Policies, Resource Quota, Limit Ranges, RBAC, and other policies defined at the tenant level.
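To make the Tenant abstraction more concrete, the sketch below prints a minimal Tenant manifest with a single owner. The tenant and owner names are placeholders, and the `capsule.clastix.io/v1beta2` API version is an assumption that depends on the installed Capsule release; `kubectl api-resources --api-group=capsule.clastix.io` shows what a given cluster actually serves.

```shell
# Sketch: print a minimal Capsule Tenant manifest.
# The apiVersion is assumed; verify it against the installed CRDs.
tenant_manifest() {
  tenant="$1"; owner="$2"
  cat <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: ${tenant}
spec:
  owners:
  - name: ${owner}
    kind: User
EOF
}

# Example (needs cluster access and an installed Capsule):
#   tenant_manifest oil alice | kubectl apply -f -
```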

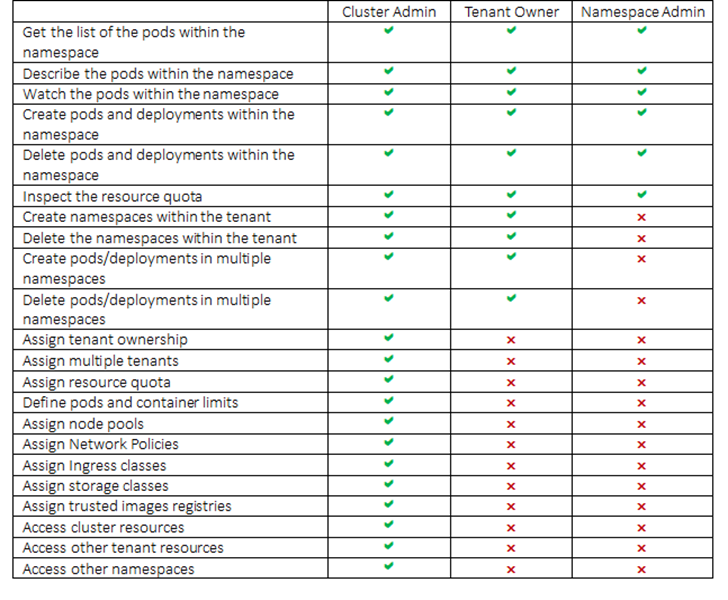

A user who owns a tenant is called a Tenant Owner. The roles of a tenant owner and a namespace administrator differ in subtle ways. Listed below are the roles and responsibilities of the cluster admin, the tenant owner, and the namespace administrator.

Install Capsule Framework

We will use an AWS EKS cluster for this exercise. This article assumes you have already created an EKS cluster named "eks-cluster1" and that the following software is installed on your local machine.

- AWS CLI (Version 2)

- kubectl (v1.21)

- curl (8.1.2)

- Helm (3.8.2)

- Go (v1.20.6)

Capsule can be installed in the two ways listed below:

Using YAML Installer


aws eks --region us-east-1 update-kubeconfig --name eks-cluster1

kubectl apply -f https://raw.githubusercontent.com/clastix/capsule/master/config/install.yaml

- If you face any error in applying the YAML file, re-running the same command should fix the problem.

- If the pod status shows "ImagePullBackOff" or "ErrImagePull," delete the pod (not the deployment) so that the deployment recreates it.
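Finding the affected pods can be scripted. The filter below is a sketch that reads the output of `kubectl get pods --no-headers` and prints the names of pods whose STATUS column is `ImagePullBackOff` or `ErrImagePull` (the spellings Kubernetes reports); the function name is hypothetical.

```shell
# Reads `kubectl get pods --no-headers` rows on stdin
# (NAME READY STATUS RESTARTS AGE) and prints the names of pods
# that are stuck pulling their image.
pods_with_image_errors() {
  awk '$3 == "ImagePullBackOff" || $3 == "ErrImagePull" { print $1 }'
}

# Example (needs cluster access). Deleting a pod lets its deployment
# create a fresh one, which retries the image pull:
#   kubectl -n capsule-system get pods --no-headers \
#     | pods_with_image_errors \
#     | while read -r pod; do
#         kubectl -n capsule-system delete pod "$pod"
#       done
```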

Using Helm

As a cluster admin or root user, run the following commands to install using Helm.

aws eks --region us-east-1 update-kubeconfig --name eks-cluster1

helm repo add clastix https://clastix.github.io/charts

helm install capsule clastix/capsule -n capsule-system --create-namespace

Verify Capsule Installation

What gets installed with the Capsule framework:

- Namespace: capsule-system

- Deployments in Namespace: capsule-controller-manager

- Services Exposed:

  - capsule-controller-manager-metrics-service

  - capsule-webhook-service

- Secrets in Namespace:

  - capsule-ca

  - capsule-tls

- Webhooks: In Kubernetes, webhooks are a mechanism for external services to interact with the Kubernetes API server during the lifecycle of API requests. They act like HTTP callbacks, triggered at specific points in the request flow. This allows external services to perform validations or modifications on resources before they are persisted in the cluster. There are two main types of webhooks used in Kubernetes for admission control: Mutating Admission Webhooks and Validating Admission Webhooks. The following webhooks are installed:

  - capsule-mutating-webhook-configuration

  - capsule-validating-webhook-configuration

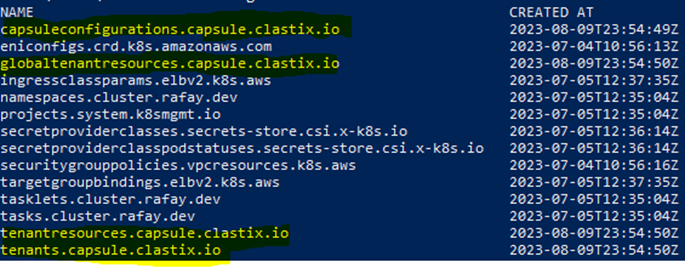

- Custom Resource Definitions (CRDs): CRDs allow the user to extend the API and introduce new types of resources beyond the built-in ones. Imagine them as blueprints for creating your own custom resources that can be managed alongside familiar resources like Deployments and Pods. The CRDs below are installed:

  - capsuleconfigurations.capsule.clastix.io

  - globaltenantresources.capsule.clastix.io

  - tenantresources.capsule.clastix.io

  - tenants.capsule.clastix.io

- Cluster Roles:

  - capsule-namespace-deleter

  - capsule-namespace-provisioner

- Cluster Role Bindings:

  - capsule-manager-rolebinding

  - capsule-proxy-rolebinding
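The CRD names in the inventory above can be compared against what a cluster reports. The helper below is a sketch with a hypothetical function name: it reads installed CRD names on stdin, one per line, and prints any of the four Capsule CRDs that are absent.

```shell
# Reads installed CRD names on stdin (one per line) and prints each
# Capsule CRD from the list above that is not among them.
# No output means none are missing.
missing_capsule_crds() {
  installed="$(cat)"
  for crd in \
    capsuleconfigurations.capsule.clastix.io \
    globaltenantresources.capsule.clastix.io \
    tenantresources.capsule.clastix.io \
    tenants.capsule.clastix.io
  do
    # -x matches the whole line, -F treats the dots literally
    printf '%s\n' "$installed" | grep -qxF -- "$crd" || echo "$crd"
  done
}

# Example (needs cluster access). `-o name` prints
# "customresourcedefinition.apiextensions.k8s.io/<crd>", so the prefix
# up to the slash is stripped first:
#   kubectl get crds -o name | sed 's|.*/||' | missing_capsule_crds
```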

Follow the steps below to check that Capsule is installed properly:

- Log in as a root user or cluster administrator and run the following commands. The output should list the capsule-system namespace.

aws eks --region us-east-1 update-kubeconfig --name eks-cluster1

kubectl get ns

- Run the commands below to see the Capsule-related components.

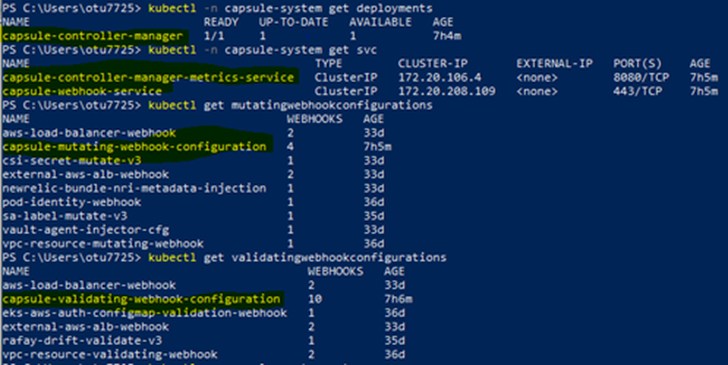

kubectl -n capsule-system get deployments

kubectl -n capsule-system get svc

kubectl -n capsule-system get secrets

kubectl get mutatingwebhookconfigurations

kubectl get validatingwebhookconfigurations
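Reading the READY column by eye works for one deployment; the filter below is a small sketch for doing the same check in a script. It assumes the default column layout of `kubectl get deployments --no-headers`, and the function name is hypothetical.

```shell
# Reads `kubectl get deployments --no-headers` rows on stdin
# (NAME READY UP-TO-DATE AVAILABLE AGE) and prints the names of
# deployments whose ready count differs from the desired count.
deployments_not_ready() {
  awk '{ split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Example (needs cluster access); empty output means everything is ready:
#   kubectl -n capsule-system get deployments --no-headers | deployments_not_ready
```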

- List the installed Capsule CRDs.

kubectl get crds

If any of the CRDs are missing, apply the corresponding kubectl command below. Note the Capsule version in the URLs (v0.3.3 here) and adjust it to match the version you are installing or upgrading to.

kubectl apply -f https://raw.githubusercontent.com/clastix/capsule/v0.3.3/charts/capsule/crds/globaltenantresources-crd.yaml

kubectl apply -f https://raw.githubusercontent.com/clastix/capsule/v0.3.3/charts/capsule/crds/tenant-crd.yaml

kubectl apply -f https://raw.githubusercontent.com/clastix/capsule/v0.3.3/charts/capsule/crds/tenantresources-crd.yaml

- View the cluster roles and cluster role bindings by running the commands below.

kubectl get clusterrolebindings

kubectl get clusterroles

- Verify the resource utilization of the framework.

kubectl -n capsule-system get pods

kubectl top pod <pod-name> -n capsule-system --containers

The Capsule framework creates one pod replica. CPU (cores) should be around 3m and memory (bytes) around 26Mi.
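To turn those expected figures into a check, the sketch below reads `kubectl top pod --containers --no-headers` output and prints containers that exceed a CPU or memory budget. It assumes CPU is reported in millicores (`m`) and memory in mebibytes (`Mi`), as in the figures above; the function name and the thresholds in the example are hypothetical.

```shell
# Reads `kubectl top pod --containers --no-headers` rows on stdin
# (POD NAME CPU(cores) MEMORY(bytes)) and prints every container whose
# usage exceeds the given limits.
# $1 = CPU limit in millicores, $2 = memory limit in MiB.
over_budget() {
  max_cpu_m="$1"; max_mem_mi="$2"
  awk -v cpu="$max_cpu_m" -v mem="$max_mem_mi" '
    {
      c = $3; sub(/m$/, "", c)    # "3m"   -> 3 millicores
      m = $4; sub(/Mi$/, "", m)   # "26Mi" -> 26 MiB
      if (c + 0 > cpu || m + 0 > mem) print $1 "/" $2, $3, $4
    }'
}

# Example (needs cluster access and metrics-server):
#   kubectl top pod -n capsule-system --containers --no-headers | over_budget 50 128
```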

- Verify the available tenants by running the command below as cluster admin. The result should be "No resources found."

kubectl get tenants

Summary

In this part, we covered what multi-tenancy is, the different tenant isolation models, the challenges with Kubernetes native services, and how to install the Capsule framework on AWS EKS. In the next part, we will take a deeper dive into creating tenants and managing policies.

