How to Really Build a GraphQL Server With Java

This tutorial aims to demonstrate the process of building a GraphQL server utilizing the venerable graphql-java library in Java.

Netflix DGS and Spring for GraphQL are modern frameworks for constructing GraphQL servers using Java or Kotlin. This tutorial aims to demonstrate the process of building a GraphQL server utilizing the venerable graphql-java library in Java. Later installments in this series will enhance this project with Hibernate ORM and with Netflix DGS and Spring for GraphQL. We will also report on the performance of these different approaches, and the final installment will survey the trade-offs between these different approaches. A preview of some of those trade-offs will appear in the earlier installments, including this first installment.

TL;DR

Upon thorough examination, I determined that establishing a GraphQL server with Netflix DGS requires significant effort, including integrating multiple frameworks, substantial boilerplate code, and frequent context switching. My observation aligns with other schema-first, resolver-oriented methodologies in GraphQL, characterized by prolonged development periods, superficial APIs, and strong dependencies on data models.

In my view, the optimal choice is to embrace a data-first, compiler-oriented strategy for GraphQL. I will share my thoughts on this alternative approach at the end of the article.

What Is a Schema-First Approach in GraphQL?

The maintainers of the Netflix DGS library strongly recommend what is commonly referred to as the "schema-first" development of GraphQL servers. So do the maintainers of the core graphql-java library, who write in their book:

"Schema-first" refers to the idea that the design of a GraphQL schema should be done on its own, and should not be generated or inferred from something else. The schema should not be generated from a database schema, Java domain classes, nor a REST API.

Schemas ought to be schema-first because they should be created in a deliberate way and not merely generated. Although a GraphQL API will have much in common with the database schema or REST API being used to fetch data, the schema should still be deliberately constructed.

We strongly believe that this is the only viable approach for any real-life GraphQL API and we will only focus on this approach. Both Spring for GraphQL and GraphQL Java only support "schema-first."

Others are not so sure, however, including the maintainers of the documentation for graphql-java, who write that their library "offers two different ways of defining the schema [code-first and schema-first]."

As advocates of a third way of low-code and data-first development, in this article, we at my company step out of our comfort zone, follow orthodox schema-first development of a demo GraphQL server with the state-of-the-art Netflix DGS library in Java, and report back on the experience.

What Constitutes a GraphQL Server?

"Build a full-featured GraphQL server with Java or Kotlin in record time."

– Netflix DGS Getting Started Guide

Netflix DGS is a framework for building GraphQL servers with Java or Kotlin, but what is a "GraphQL server" and what are its features? In our experience, a GraphQL server must have these features.

Functional Concerns

Primary software functionality that satisfies use cases for end-users:

- Queries: Flexible, general-purpose language for getting data from a data model, part of the GraphQL specification

- Mutations: Flexible, general-purpose language for changing data in a data model, part of the GraphQL specification

- Subscriptions: Flexible, general-purpose language for getting real-time or soft real-time data from a data model, part of the GraphQL specification

- Business logic: A model for expressing the most common forms of application logic: authorization, validation, and side-effects

- Integration: An ability to merge data and functionality from other services

Non-Functional Concerns

Secondary software functionality that supports the functional concerns with Quality-of-Service (QoS) guarantees for operators:

- Caching: Configurable time-vs-space trade-off for obtaining better performance for certain classes of operations

- Security: Protections from attacks and threat vectors

- Observability: The emission of diagnostic information such as metrics, logs, and traces to aid operations and troubleshooting

- Reliability: High-quality engineering that promotes efficient and correct operation

Netflix DGS in Action

Take those features as our definition of a "GraphQL server" such that they comprise our ideal end state. That's our goal. How do we get there with Netflix DGS? It's a tall order with many features, so take baby steps. How do we achieve the goal of having a more modest version of the first feature?

Queries are a flexible, general-purpose language for getting data from a data model, and they are part of the GraphQL specification.

How do we implement a Netflix DGS GraphQL server to get data from a data model? To make this more "concrete," take "a data model" to be a database. As one handy reference point, make it a relational database with a SQL API. How do we get started?

1. Get Spring Boot

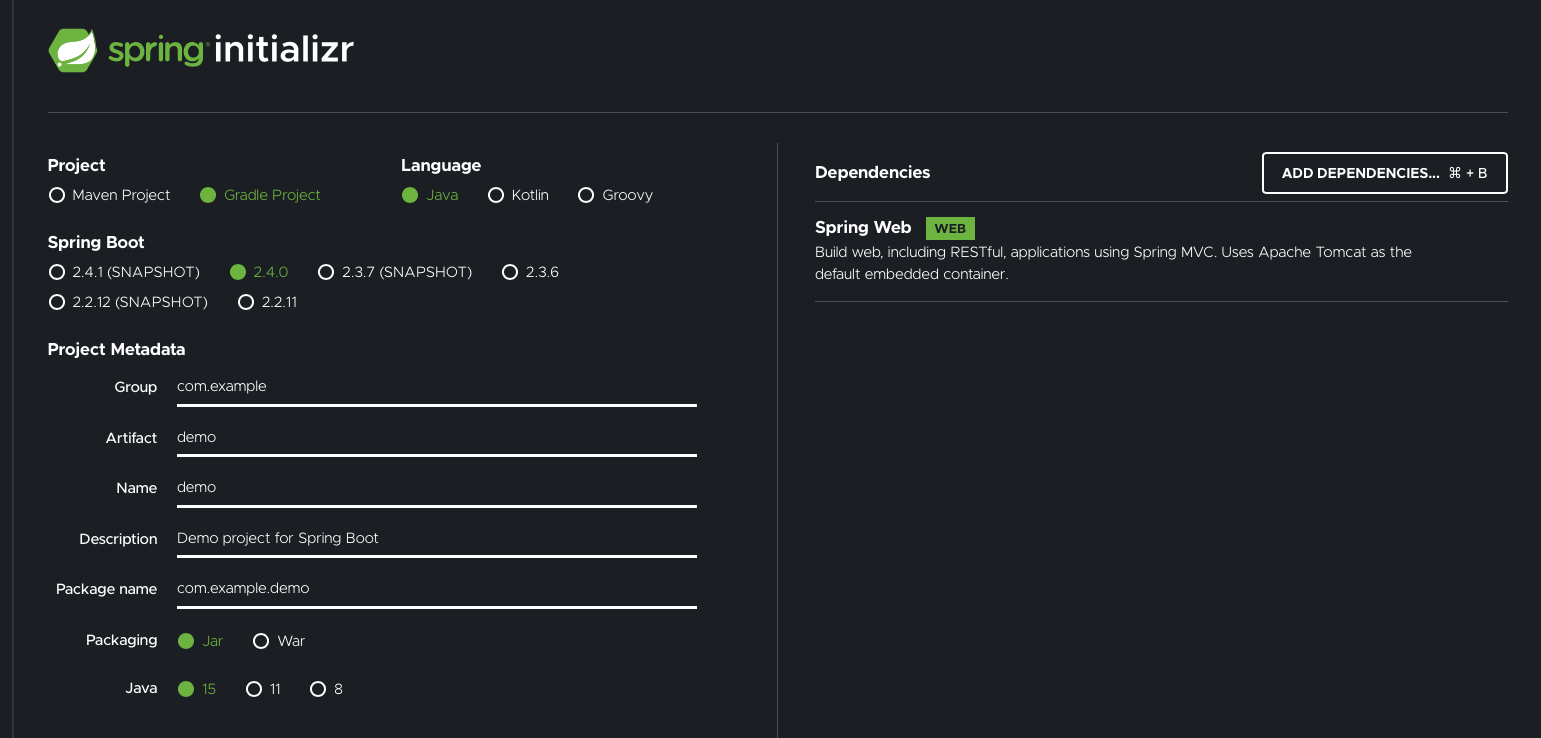

The Netflix DGS framework is "based on Spring Boot 3.0", so choosing DGS means choosing Spring Boot over Quarkus or Vert.x, two popular alternatives to Spring, the application framework on which Spring Boot is built. Absent an existing Spring Boot application to build upon, create a new one with the web-based Spring Initializr. Mature Java and Spring Boot shops will likely substitute their own optimized inception process, but the Initializr is the best way to fulfill the promise of moving fast.

2. Get Netflix DGS

Being "opinionated," Spring Boot includes some but not all relevant "batteries." Naturally, one battery we must add either to the Gradle build file or to the Maven POM file is DGS itself.

    <dependencyManagement>
        <dependencies>
            ...
            <dependency>
                <groupId>com.netflix.graphql.dgs</groupId>
                <artifactId>graphql-dgs-platform-dependencies</artifactId>
                <version>4.9.16</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            ...
        </dependencies>
    </dependencyManagement>

    <dependencies>
        ...
        <dependency>
            <groupId>com.netflix.graphql.dgs</groupId>
            <artifactId>graphql-dgs-spring-boot-starter</artifactId>
        </dependency>
        ...
    </dependencies>

3. Get a GraphQL Schema

Being "opinionated," the DGS framework "is designed for schema-first development" so it is necessary first to create a schema file. This is for the GraphQL API, but that API is over a data model, so the schema is essentially a data model. While it might be tempting to generate the schema from the fundamental data model – the database – choosing DGS means writing a new data model from scratch. Note that if this new GraphQL data model strongly resembles the foundational database data model, this step may feel like repetition. Ignore that feeling.

    type Query {
        shows(titleFilter: String): [Show]
        secureNone: String
        secureUser: String
        secureAdmin: String
    }

    type Mutation {
        addReview(review: SubmittedReview): [Review]
        addReviews(reviews: [SubmittedReview]): [Review]
        addArtwork(showId: Int!, upload: Upload!): [Image]! @skipcodegen
    }

    type Subscription {
        reviewAdded(showId: Int!): Review
    }

    type Show {
        id: Int
        title: String @uppercase
        releaseYear: Int
        reviews(minScore: Int): [Review]
        artwork: [Image]
    }

    type Review {
        username: String
        starScore: Int
        submittedDate: DateTime
    }

    input SubmittedReview {
        showId: Int!
        username: String!
        starScore: Int!
    }

    type Image {
        url: String
    }

    scalar DateTime
    scalar Upload

    directive @skipcodegen on FIELD_DEFINITION
    directive @uppercase on FIELD_DEFINITION

4. Get DataFetchers

The DataFetcher is the fundamental abstraction within DGS. It plays the role of a Controller in a Model-View-Controller (MVC) architecture. A DataFetcher is a Java or Kotlin method adorned with the @DgsQuery or @DgsData annotations, in a class decorated with the @DgsComponent annotation. The function of the annotations is to instruct the DGS run-time to treat the method as a resolver for a field on a type in the GraphQL schema, to invoke the method when executing queries that involve that field, and to include that field's data as it marshals the response payload for the query. Typically, there will be a DataFetcher for every Type and top-level Query field in the schema. Given that the types and fields were already defined in the schema, this step may also feel like repetition. Ignore that feeling as well.

    package com.example.demo.datafetchers;

    import com.example.demo.generated.types.*;
    import com.example.demo.services.*;
    import com.netflix.graphql.dgs.*;
    import java.util.*;
    import java.util.stream.*;

    @DgsComponent // Mark this class as a DGS component
    public class ShowsDataFetcher {
        private final ShowsService showsService;

        public ShowsDataFetcher(ShowsService showsService) {
            this.showsService = showsService;
        }

        @DgsQuery // Mark this method as the DataFetcher for the Query.shows field
        public List<Show> shows(@InputArgument("titleFilter") String titleFilter) {
            // No filter supplied: return every show
            if (titleFilter == null) return showsService.shows();
            // Otherwise keep only the shows whose title contains the filter text
            return showsService.shows().stream()
                    .filter(s -> s.getTitle().contains(titleFilter))
                    .collect(Collectors.toList());
        }
    }
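
To make the dispatch idea concrete, here is a minimal plain-Java sketch of what a resolver-oriented run-time does conceptually: it keeps a registry from "Type.field" names to functions and invokes the matching function whenever a query selects that field. This is not how DGS or graphql-java are implemented internally. The names `ResolverRegistry`, `register`, `resolve`, and `ResolverRegistryDemo` are hypothetical, and the show titles are sample data.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical illustration only: not part of DGS or graphql-java.
final class ResolverRegistry {
    // Keys use the form "Type.field", for example "Query.shows".
    private final Map<String, Function<Map<String, Object>, Object>> resolvers = new HashMap<>();

    // Roughly what an annotation scanner does when it finds a resolver method.
    void register(String type, String field, Function<Map<String, Object>, Object> resolver) {
        resolvers.put(type + "." + field, resolver);
    }

    // Roughly what the run-time does when a query selects a field.
    Object resolve(String type, String field, Map<String, Object> arguments) {
        Function<Map<String, Object>, Object> resolver = resolvers.get(type + "." + field);
        if (resolver == null) {
            throw new IllegalArgumentException("No resolver registered for " + type + "." + field);
        }
        return resolver.apply(arguments);
    }
}

final class ResolverRegistryDemo {
    // Builds a registry with one resolver that mimics the shows(titleFilter) field.
    static ResolverRegistry demoRegistry() {
        List<String> titles = List.of("Stranger Things", "Ozark", "The Crown"); // sample data
        ResolverRegistry registry = new ResolverRegistry();
        registry.register("Query", "shows", args -> {
            Object filter = args.get("titleFilter");
            if (filter == null) return titles;
            return titles.stream().filter(t -> t.contains((String) filter)).toList();
        });
        return registry;
    }
}
```

The annotations in the listing above play the part of the `register` call here: they tell the framework which method answers for which field, so that the framework can do the equivalent of `resolve` on every request.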

5. Get POJOs (Optional)

If the DataFetchers play the role of Controllers in an MVC architecture, typically, there will be corresponding components for the Models. DGS does not require them, so they can be regarded as "optional," though the DGS examples have them, as do typical Spring applications. They can be Java Records or even Java Maps (more on this later). Still, typically, they are Plain Old Java Objects (POJOs) and are the fundamental units of the in-memory application-layer data model, which often mirrors the foundational persistent database data model. The one-to-one correspondence between database tables, GraphQL schema types, GraphQL schema top-level Query fields, DGS DataFetchers, and POJOs may feel like yet more repetition. Continue to ignore these feelings.
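
As a hedged sketch of the Java Record alternative mentioned above, the same model can be written more compactly than the conventional POJO that follows. The name `ShowRecord` is hypothetical (chosen so that it does not clash with the `Show` class), the blank-title check is an illustrative choice rather than anything DGS requires, and the sketch assumes Java 16 or later.

```java
import java.util.UUID;

// Hypothetical record equivalent of the Show POJO. The compiler generates the
// canonical constructor, the accessors id(), title(), and releaseYear(),
// and equals, hashCode, and toString.
record ShowRecord(UUID id, String title, Integer releaseYear) {
    // Compact constructor: reject a missing or blank title early.
    ShowRecord {
        if (title == null || title.isBlank()) {
            throw new IllegalArgumentException("title must not be blank");
        }
    }
}
```

Note that record accessors are named `title()` rather than `getTitle()`, so code written against the POJO's getters would need adjusting.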

    import java.util.UUID;

    // Immutable model class for a show
    public class Show {
        private final UUID id;
        private final String title;
        private final Integer releaseYear;

        public Show(UUID id, String title, Integer releaseYear) {
            this.id = id;
            this.title = title;
            this.releaseYear = releaseYear;
        }

        public UUID getId() {
            return id;
        }

        public String getTitle() {
            return title;
        }

        public Integer getReleaseYear() {
            return releaseYear;
        }
    }

6. Get Real Data (Not Optional)

Unfortunately, the road laid out by the DGS Getting Started guide turns to gravel at this juncture. Its examples merely return hard-coded in-memory data, which is not an option for an actual application whose data is persisted in a relational database with an SQL API, as stipulated above.

Of course, the Model and Controller layers of a multi-layered MVC architecture, being independent of the View layer, need not be GraphQL- or DGS-specific. It is therefore appropriate that the opinionated DGS guide withholds opinions on how exactly to map data between model objects and a relational database. Without that luxury, real applications typically will use Object Relational Mapping (ORM) frameworks like Hibernate or jOOQ, but those tools have their own Getting Started guides.

Consider choosing Hibernate for its greater popularity, broader industry support, a larger volume of learning resources, and slightly greater integration with the Spring ecosystem – all critical factors. In that case, here are some of the remaining steps.

7. Get Hibernate

As it is time to add another "battery" to the application, like with Spring and DGS, Hibernate is added to either the Gradle build file or the Maven POM file.

    <dependencyManagement>
        <dependencies>
            ...
            <dependency>
                <groupId>org.hibernate.orm</groupId>
                <artifactId>hibernate-platform</artifactId>
                <version>6.4.4.Final</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            ...
        </dependencies>
    </dependencyManagement>

    <dependencies>
        ...
        <dependency>
            <groupId>org.hibernate.orm</groupId>
            <artifactId>hibernate-core</artifactId>
        </dependency>
        ...
    </dependencies>

8. Get Access to the Database

The application needs access to the database, which can be configured into Hibernate via a simple hibernate.properties file in the ${project.basedir}/src/main/resources directory.

    hibernate.connection.url=<JDBC url>
    hibernate.connection.username=<DB role name>
    hibernate.connection.password=<DB credential secret>

9. Get Mappings Between POJOs and Tables

Hibernate can map the POJOs, which again are the Models in the MVC architecture, to database tables (or views) when those classes are annotated with @Entity, @Table, @Id, and the other persistence annotations that Hibernate supports. The function of these annotations is to instruct the Hibernate run-time to treat the classes as targets for fetching corresponding data from the database (as well as flushing changes back to the database).

    import jakarta.persistence.*;
    import java.util.UUID;

    @Entity // Mark this as a persistent Entity
    @Table(name = "shows") // Name its table if different
    public class Show {
        @Id // Mark the field as a primary key
        @GeneratedValue // Specify that the identifier is generated rather than assigned
        private UUID id;
        private String title;
        private Integer releaseYear;

        // Hibernate instantiates entities through a no-argument constructor,
        // which is also why the fields can no longer be final
        protected Show() {
        }

        public Show(UUID id, String title, Integer releaseYear) {
            this.id = id;
            this.title = title;
            this.releaseYear = releaseYear;
        }

        public UUID getId() {
            return id;
        }

        public String getTitle() {
            return title;
        }

        public Integer getReleaseYear() {
            return releaseYear;
        }
    }

Hibernate can also map the tables to Java Map instances (hash tables) instead of POJOs, as mentioned above. This is done via dynamic mapping in what are called mapping files. Typically, there will be an XML mapping file for every Model in the application, with the naming convention modelname.hbm.xml, in the ${project.basedir}/src/main/resources directory. This trades the labor of writing Java POJO files for the Models and annotating them with Hibernate annotations for the labor of writing XML mapping files for the Models and embedding the equivalent metadata there. It may feel like little was gained in the bargain, but ignoring those feelings should be second nature by now.

    <!DOCTYPE hibernate-mapping PUBLIC
        "-//Hibernate/Hibernate Mapping DTD 3.0//EN"
        "http://www.hibernate.org/dtd/hibernate-mapping-3.0.dtd">

    <hibernate-mapping>
        <class entity-name="Show">
            <id name="id" column="id" length="32" type="string"/> <!--no native UUID type in Hibernate mapping-->
            <property name="title" not-null="true" length="50" type="string"/>
            <property name="releaseYear" not-null="true" length="50" type="integer"/>
        </class>
    </hibernate-mapping>

10. Get Fetching

As described above, the DGS demo example Controller class fetches hard-coded in-memory data. To fetch data from the database in an actual application, replace this implementation with one that establishes the crucial link between the three key frameworks: DGS, Spring, and Hibernate.

    package com.example.demo.services;

    import com.example.demo.generated.types.*;
    import java.util.*;
    import org.hibernate.SessionFactory;
    import org.hibernate.boot.MetadataSources;
    import org.hibernate.boot.registry.StandardServiceRegistry;
    import org.hibernate.boot.registry.StandardServiceRegistryBuilder;
    import org.springframework.stereotype.*;

    @Service
    public class ShowsServiceImpl implements ShowsService {
        private StandardServiceRegistry registry;
        private SessionFactory sessionFactory;

        public ShowsServiceImpl() {
            // Reads hibernate.properties from the classpath
            this.registry = new StandardServiceRegistryBuilder().build();
            try {
                this.sessionFactory =
                    new MetadataSources(this.registry)
                        .addAnnotatedClass(Show.class) // Add every Model class to the Hibernate metadata
                        .buildMetadata()
                        .buildSessionFactory();
            }
            catch (Exception e) {
                // Release the registry, then fail fast instead of leaving sessionFactory null
                StandardServiceRegistryBuilder.destroy(this.registry);
                throw new IllegalStateException("Could not build the Hibernate SessionFactory", e);
            }
        }

        @Override
        public List<Show> shows() {
            List<Show> shows = new ArrayList<>();
            sessionFactory.inTransaction(session -> {
                shows.addAll(
                    session.createSelectionQuery("from Show", Show.class) // 'Show' is mentioned twice
                        .getResultList());
            });
            return shows;
        }
    }

11. Get Iterating

At this point, there already is a fair amount of code and other software artifacts:

- GraphQL schema files (*.sdl)

- Project build files (build.gradle or pom.xml)

- Configuration files (hibernate.properties)

- DGS DataFetcher files (*.java)

- POJO files (*.java)

- Additional Controller files (*.java)

Along with packages, symbols, and annotations from three frameworks:

- DGS

- Spring

- Hibernate

And yet, it would be a stroke of luck if it even compiled, let alone functioned properly at first. Repeatable unit tests-as-code are needed to iterate on the project rapidly and with confidence until it builds and functions properly. Fortunately, the Spring Initializr will already have added the JUnit and Spring Boot testing components to the project build files. To use mock data with a framework such as Mockito, however, its components must be added to the project build file.

    <dependencies>
        ...
        <dependency>
            <groupId>org.mockito</groupId>
            <artifactId>mockito-inline</artifactId>
            <version>{mockitoversion}</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.mockito</groupId>
            <artifactId>mockito-junit-jupiter</artifactId>
            <version>{mockitoversion}</version>
            <scope>test</scope>
        </dependency>
        ...
    </dependencies>

Now, all that is needed is to create unit test class files for every DataFetcher, taking care to set up mock data as required.

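    // The com.example.demo packages below are assumed from the earlier listings
    import com.example.demo.datafetchers.ShowsDataFetcher;
    import com.example.demo.generated.client.*; // ShowsGraphQLQuery, ShowsProjectionRoot (generated)
    import com.example.demo.generated.types.*;
    import com.example.demo.services.ShowsService;
    import com.netflix.graphql.dgs.*;
    import com.netflix.graphql.dgs.autoconfig.*;
    import com.netflix.graphql.dgs.client.codegen.GraphQLQueryRequest;
    import java.util.*;
    import org.junit.jupiter.api.*;
    import org.mockito.*;
    import org.springframework.beans.factory.annotation.*;
    import org.springframework.boot.test.context.*;
    import org.springframework.boot.test.mock.mockito.MockBean;

    import static org.assertj.core.api.Assertions.assertThat;

    @SpringBootTest(classes = {DgsAutoConfiguration.class, ShowsDataFetcher.class})
    public class ShowsDataFetcherTests {
        @Autowired
        DgsQueryExecutor dgsQueryExecutor;

        @MockBean
        ShowsService showsService;

        @BeforeEach
        public void before() {
            // Matches the three-argument Show constructor shown earlier
            Mockito.when(showsService.shows())
                .thenAnswer(invocation -> List.of(new Show(UUID.randomUUID(), "mock title", 2020)));
        }

        @Test
        public void showsWithQueryApi() {
            GraphQLQueryRequest graphQLQueryRequest = new GraphQLQueryRequest(
                new ShowsGraphQLQuery.Builder().build(),
                new ShowsProjectionRoot().title()
            );
            List<String> titles = dgsQueryExecutor.executeAndExtractJsonPath(
                graphQLQueryRequest.serialize(), "data.shows[*].title");
            assertThat(titles).containsExactly("mock title");
        }
    }

12. Get to a Milestone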

Eventually, a milestone is reached after iterating on the implementation by writing test cases in tandem. There is a functioning Netflix DGS GraphQL server that gets some data from a foundational data model in a relational database. Building on this success, take stock of what has been accomplished so far so that planning can begin for what still needs to be done.

Recall our definition of a "GraphQL server" as one that has the Functional and Non-Functional concerns listed above, and note which features have and have not yet been implemented:

- [✓] queries

- [ ] flexible, general-purpose queries

- [ ] mutations

- [ ] subscriptions

- [ ] business logic

- [ ] integration

- [ ] caching

- [ ] security

- [ ] reliability and error handling

There still is a long way to go. Granted, the providers of frameworks like Spring, Hibernate, and Netflix DGS have thought of this. A benefit of frameworks like these is that they are frameworks: They organize the code, furnish mental models for reasoning about it, offer guidance in the form of opinions derived from experience, and provide "lighted pathways" for adding these other features and fulfilling the promise of a "full-featured GraphQL server."

For instance, we can recover some of the flexibility we seek within Hibernate by switching to JPA entity graph fetching. Alternatively, instead of fetching the data "directly" through Hibernate, the DGS DataFetchers could compose Hibernate Query Language (HQL) queries, which Hibernate then executes to retrieve the data. This strongly resembles compiling SQL (perhaps even from GraphQL), though it is abstracted away from the details of the underlying database's SQL dialect.
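
One hedged way to picture the HQL-composing variant: a DataFetcher inspects which fields the query selected and builds an HQL string that fetches only those attributes. The sketch below is plain Java with no Hibernate dependency. `HqlComposer`, its `compose` method, and the allow-list are hypothetical names, and a real implementation would also have to handle arguments, nesting, and pagination.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical helper: composes an HQL projection for the Show entity.
final class HqlComposer {
    // Only attributes on this allow-list may appear in the generated HQL,
    // so client-supplied field names are never concatenated unchecked.
    private static final Set<String> SHOW_ATTRIBUTES = Set.of("id", "title", "releaseYear");

    static String compose(List<String> selectedFields) {
        if (selectedFields.isEmpty()) {
            throw new IllegalArgumentException("At least one field must be selected");
        }
        for (String field : selectedFields) {
            if (!SHOW_ATTRIBUTES.contains(field)) {
                throw new IllegalArgumentException("Unknown attribute: " + field);
            }
        }
        // Prefix each attribute with the entity alias "s" and join with commas.
        String projection = selectedFields.stream()
                .map(field -> "s." + field)
                .collect(Collectors.joining(", "));
        return "select " + projection + " from Show s";
    }
}
```

For example, `HqlComposer.compose(List.of("title", "releaseYear"))` yields `select s.title, s.releaseYear from Show s`, which could then be handed to a Hibernate session for execution.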

Likewise, caching can be configured in Hibernate (or in jOOQ if that is the ORM). It can also be configured in Spring Boot. Some security features, such as rate limiting, can be added into Spring with tools like Bucket4j. As for business logic, the code itself (Java or Kotlin) in the DataFetchers, the Controllers, and the Model POJOs provides obvious sites for installing business logic. Naturally, Spring has affordances to help with some of this, such as authorization and data validation.
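
Bucket4j is a rate-limiting library built around the token-bucket algorithm. The sketch below is a simplified, single-threaded illustration of that algorithm in plain Java, not Bucket4j's API. The class `SimpleTokenBucket` and its method names are hypothetical, and the current time is passed in explicitly to keep the example deterministic.

```java
// Hypothetical, simplified token bucket; not thread-safe and not Bucket4j.
final class SimpleTokenBucket {
    private final long capacity;            // maximum number of stored tokens
    private final long refillIntervalNanos; // time needed to earn one token
    private long tokens;
    private long lastRefillNanos;

    SimpleTokenBucket(long capacity, long refillIntervalNanos, long nowNanos) {
        this.capacity = capacity;
        this.refillIntervalNanos = refillIntervalNanos;
        this.tokens = capacity; // start with a full bucket
        this.lastRefillNanos = nowNanos;
    }

    // Returns true if the request may proceed, false if it should be rejected.
    boolean tryConsume(long nowNanos) {
        // Credit the tokens earned since the last refill, capped at capacity.
        long earned = (nowNanos - lastRefillNanos) / refillIntervalNanos;
        if (earned > 0) {
            tokens = Math.min(capacity, tokens + earned);
            lastRefillNanos += earned * refillIntervalNanos;
        }
        if (tokens == 0) {
            return false;
        }
        tokens--;
        return true;
    }
}
```

A request filter in front of the GraphQL endpoint could call `tryConsume(System.nanoTime())` once per request and reject the request when it returns false.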

Consequently, a "full-featured GraphQL server" probably can be built in Java or Kotlin by cobbling together Netflix DGS, Spring Boot, an ORM, and other frameworks and libraries. But, several questions leap out:

- Can this be accomplished "in record time"?

- Should a "full-featured GraphQL server" be attempted this way?

- Is there a better way?

Is There a Faster Path to GraphQL?

Remember that the goal was never to write Java or Kotlin code, juggle frameworks, endure relentless context switching, or generate boilerplate. The goal was:

Build a full-featured GraphQL server with Java or Kotlin in record time.

Getting back to basics, it is possible to answer the third question above: Is there a better way? There is.

GraphQL

What is GraphQL? One way to answer that is by listing its features. Some of those features are:

- GraphQL is a flexible, general-purpose query language over types.

- Types have fields.

- Fields can relate to other Types.

If that sounds familiar, it should, because other ways of organizing data have these same features. SQL is one of them. It is not the only one, but it is a very common one. On the other hand, GraphQL has several secondary, related features:

- GraphQL has straightforward semantics.

- GraphQL has a highly regular machine-readable input format.

- GraphQL has a highly regular machine-readable output format.

If SQL is more powerful than GraphQL, GraphQL is more uniform and is easier to work with than SQL. They seem like a match made in heaven and this raises some obvious questions:

- Can GraphQL be compiled into SQL (and other query languages)?

- Should GraphQL be compiled into SQL?

- What would be the trade-offs involved?

GraphQL to SQL: Compilers Over Resolvers

The answer to the first question is a definite "yes." Hasura, PostGraphile, Prisma, and Supabase do it. PostgREST even does something similar, albeit not for GraphQL.
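
To give a feel for what "compiling" means here, the following toy sketch turns an already-parsed nested selection, shaped like `shows { ... reviews { ... } }`, into a single SQL statement. It is not how any of the products named above work. The record `NestedSelection`, the class `ToySqlCompiler`, and the table and column names (`shows`, `reviews`, `show_id`) are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical parsed form of: shows { <parentColumns> reviews { <childColumns> } }
record NestedSelection(List<String> parentColumns, List<String> childColumns) {}

final class ToySqlCompiler {
    // Accept only simple lowercase identifiers so that names cannot smuggle in SQL.
    private static String checked(String column) {
        if (!column.matches("[a-z_][a-z0-9_]*")) {
            throw new IllegalArgumentException("Illegal column name: " + column);
        }
        return column;
    }

    static String compile(NestedSelection selection) {
        List<String> projection = new ArrayList<>();
        for (String column : selection.parentColumns()) {
            projection.add("s." + checked(column));
        }
        for (String column : selection.childColumns()) {
            projection.add("r." + checked(column));
        }
        if (projection.isEmpty()) {
            throw new IllegalArgumentException("Nothing selected");
        }
        String sql = "SELECT " + String.join(", ", projection) + " FROM shows s";
        if (!selection.childColumns().isEmpty()) {
            // One JOIN stands in for a separate reviews lookup per show.
            sql += " LEFT JOIN reviews r ON r.show_id = s.id";
        }
        return sql;
    }
}
```

The point of the sketch is only that the whole nested request becomes one statement for the database to plan and execute, rather than one function call per field.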

The answer to the second question surely must depend on the answers to the third question.

So, what are the trade-offs of adopting a compiler approach over a resolver approach? First, what are some of the benefits?

| Benefit | Comment |
|---|---|
| Fast | Truly delivers in "record time." |
| Uniform | Solve the data-fetching problem once and for all. |
| Features | Build functional and non-functional concerns around that core. |
| Efficiency | Avoid the N+1 problem naturally. |
| Leverage | Exploit all of the power of the underlying database. |
| Operate | Easily deploy, monitor, and maintain the application. |
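
The N+1 problem mentioned in the table can be illustrated with a small simulation that only counts database round trips. This is a sketch under stated assumptions: `QueryCounter` is a hypothetical in-memory stand-in for a database, and its method names are invented for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for a database; it only counts round trips.
final class QueryCounter {
    private final Map<Integer, List<Integer>> scoresByShowId;
    private int roundTrips = 0;

    QueryCounter(Map<Integer, List<Integer>> scoresByShowId) {
        this.scoresByShowId = scoresByShowId;
    }

    // Simulates: select the ids of all shows.
    List<Integer> findShowIds() {
        roundTrips++;
        return new ArrayList<>(scoresByShowId.keySet());
    }

    // Simulates: select the review scores for one show.
    List<Integer> findScoresForShow(int showId) {
        roundTrips++;
        return scoresByShowId.getOrDefault(showId, List.of());
    }

    // Simulates: one joined query returning every show with its scores.
    Map<Integer, List<Integer>> findShowsWithScores() {
        roundTrips++;
        return scoresByShowId;
    }

    // Resolver style: one query for the shows, then one more per show.
    static int resolverStyle(QueryCounter db) {
        for (int showId : db.findShowIds()) {
            db.findScoresForShow(showId);
        }
        return db.roundTrips;
    }

    // Compiler style: a single joined query.
    static int compilerStyle(QueryCounter db) {
        db.findShowsWithScores();
        return db.roundTrips;
    }
}
```

With three shows, the resolver-style path makes four round trips (one plus three), while the compiler-style path makes one.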

Second, what are some drawbacks, and how can they be mitigated?

| Drawback | Comment | Mitigation strategy |
|---|---|---|
| Coupling | API tied to data model. | Database views and other forms of indirection. |
| Business logic | Code is a natural place to implement business logic. | Database views, functions, and other escape hatches. |
| Heterodoxy | Difficulty of defying conventional wisdom. | Truly "build a full-featured GraphQL server" in record time. |

The prevailing wisdom in the GraphQL community advocates for a schema-first, resolver-oriented approach, typically involving manually creating data fetchers over in-memory data models in languages like Java, Kotlin, Python, Ruby, or JavaScript. However, this approach often leads to prolonged development cycles, limited APIs, shallow implementations, and tight coupling to data models, resulting in operational inefficiencies and fragility. It's a rather simplistic perspective.

As the industry evolves, there's a growing recognition of the need for a more sophisticated data-first, compiler-oriented methodology. With the ever-expanding volume, velocity, and variety of data, enterprises are grappling with hundreds of data sources and thousands of tables. Manually crafting GraphQL schemas that mirror these data structures and replicating this process across multiple layers of the architecture is impractical and unsustainable.

Just as we don't reinvent the wheel whenever we encounter a new business domain requiring data solutions, we shouldn't start from scratch when building API servers. Instead, we should leverage full-featured, purpose-built API products, adapting them to our specific needs efficiently and confidently. This approach enables us to tackle business challenges swiftly and effectively, freeing up time to focus on solving the next set of problems.

Published at DZone with permission of David Ventimiglia. See the original article here.
