How To Optimize Feature Sets With Genetic Algorithms

Dive deep into the world of feature optimizations using genetic algorithms, and explore how they can help identify the most relevant features for machine learning models.

Join the DZone community and get the full member experience.

Join For FreePrerequisites

Genetic Algorithms is an advanced topic. Even though the content has been prepared to keep in mind the requirements of a beginner, the reader should be familiar with the fundamentals of Programming and Basic Algorithms before starting with this article. Additionally, consider sharpening your mathematical skills; they will greatly aid in comprehending the examples.

Introduction to Optimization

Optimization entails the art of enhancing performance. In any given process, we encounter a collection of inputs and outputs, as illustrated in the diagram.

Optimization involves the search for input values that yield the most favorable output results. The concept of favorable can vary depending on the specific problem at hand, but in mathematical contexts, it typically involves the pursuit of maximizing or minimizing one or more objective functions through adjustments to the input parameters.

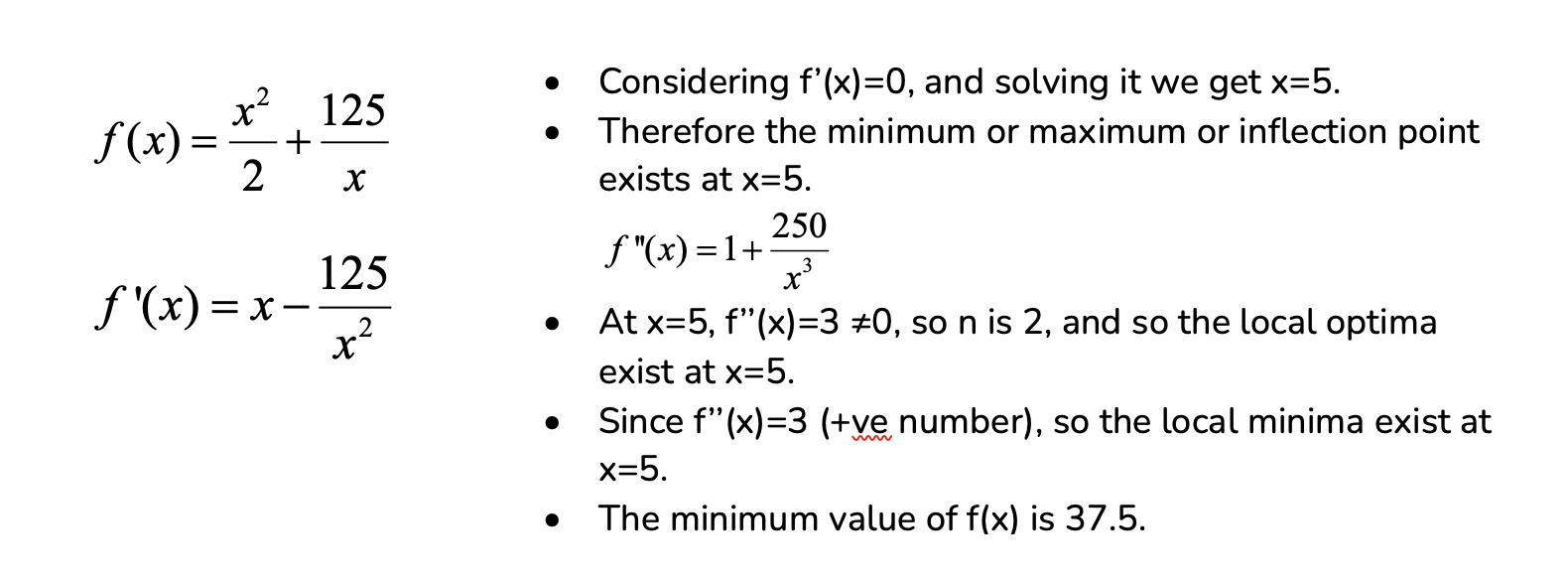

Let's consider y =f(x); if f’(x)=0 at a point x=x*, then the optimum (max or min) or the inflection point exists at that point.

Upon closer examination, it becomes apparent that the first non-zero higher-order derivative is typically denoted as 'n,' such that.

- If n is an odd number, x* is an inflection point.

- Otherwise, x* is a local optimum point.

Expanding on this idea...

- If the value of the next high-order derivative is +ve, x* is a local minimum point.

- If the value of the next high-order derivative is -ve, x* is a local maximum point.

For example:

Principles of Optimization

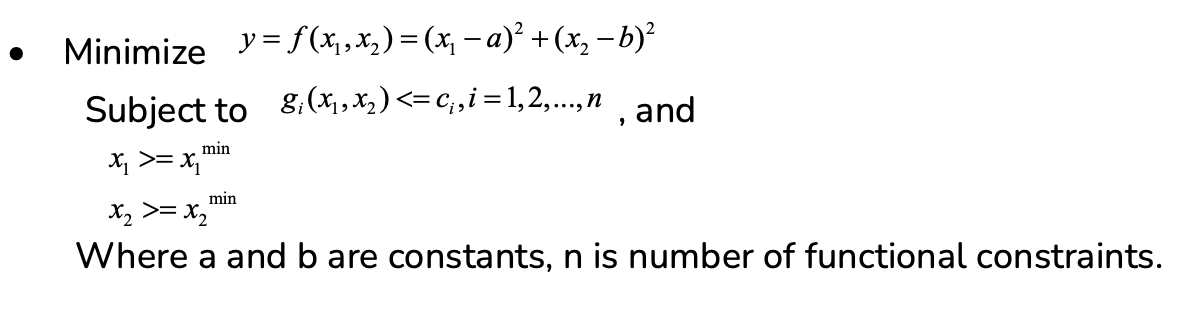

Consider a constrained optimization problem below:

Based on the characteristics of the constraints, a feasible region is identified. Any point situated within this feasible region is considered a potential candidate for the optimal solution. The points located within the feasible region are referred to as free points, while those situated on the boundary of the region are categorized as bound points. Hence, an optimal solution can manifest as either a free point or a bound point within the feasible region. Gradient-based methods, particularly derivative-based approaches, have been a conventional means of addressing unconstrained optimization problems. However, it's important to note that they come with several limitations and drawbacks.

What Is a Genetic Algorithm?

Throughout history, nature has consistently provided an abundant wellspring of inspiration for humanity. Genetic Algorithms (GAs) are search-based algorithms rooted in the principles of natural selection and genetics. GAs constitute a subset of the broader field of computation known as Evolutionary Computation.

Genetic Algorithms (GAs) were originally developed by John Holland, along with his students and colleagues at the University of Michigan, notably including David E. Goldberg. Since their inception, GAs have been applied to a wide array of optimization problems, consistently achieving a high level of success.

In Genetic Algorithms (GAs), a population of potential solutions to a given problem is established. These solutions then undergo processes of recombination and mutation, mirroring the principles found in natural genetics, resulting in the creation of new offspring. This iterative process spans multiple generations. Each individual or candidate solution is evaluated based on its fitness value, typically determined by its objective function performance. Fitter individuals are accorded a higher probability of reproducing and generating even more capable offspring. This aligns with the Darwinian Theory of "Survival of the Fittest."

In this manner, GAs continually evolve and refine the quality of individuals or solutions across generations until a predefined stopping criterion is met. Genetic Algorithms exhibit a significant degree of randomness in their operations, but they outperform simple random local search methods, as they also incorporate historical information to guide their search for optimal solutions.

Why Genetic Algorithm?

Genetic Algorithms (GAs) possess the remarkable capability to deliver a "sufficiently good" solution in a timely manner, especially when dealing with large-scale problems where traditional algorithms might struggle to provide a solution. GAs offer a versatile and generic framework for tackling intricate optimization problems. Here are some advantages of using Genetic Algorithms (GAs):

- Versatility: GAs can be applied to a wide range of optimization problems, making them a versatile choice for various domains, including engineering, finance, biology, and more.

- Global search: GAs excel at exploring the entire search space, enabling them to find solutions that might be missed by local search algorithms. This makes them suitable for problems with multiple local optima.

- No need for derivatives: Unlike many optimization techniques, GAs do not require derivatives of the objective function, making them applicable to problems with non-continuous, noisy, or complex fitness landscapes.

- Parallelism: GAs can be parallelized effectively, allowing for faster convergence on high-performance computing systems.

- Stochastic nature: The stochastic nature of GAs ensures that they can escape local optima and explore the search space more thoroughly.

- Adaptability: GAs can adapt and adjust their search strategies over time, which is particularly useful for dynamic or changing optimization problems.

- Solution diversity: GAs maintain a diverse population of solutions, which can help in finding a wide range of possible solutions and prevent premature convergence.

- Interpretability: In some cases, GAs can provide insights into the structure of the solution space, helping users better understand the problem.

- Combinatorial problems: GAs are well-suited for combinatorial optimization problems, such as the traveling salesman problem and job scheduling.

- Parallel evolution: GAs can be used to evolve multiple solutions simultaneously, which is valuable in multi-objective optimization and other complex scenarios.

It's important to note that while GAs offer these advantages, they are not always the best choice for every optimization problem, and their performance can vary depending on the problem's characteristics. Proper problem analysis and algorithm selection are essential for achieving optimal results.

Genetic Algorithm Terminology

- Populations and generations: A population is an array of individuals. For example, if the size of the population is 100 and the number of variables in the fitness function is 3, you represent the population by a 100-by-3 matrix. The same individual can appear more than once in the population. For example, the individual (2, -3, 1) can appear in more than one row of the array. At each iteration, the genetic algorithm performs a series of computations on the current population to produce a new population. Each successive population is called a new generation.

- Parents and children: To create the next generation, the genetic algorithm selects certain individuals in the current population, called parents, and uses them to create individuals in the next generation, called children. Typically, the algorithm is more likely to select parents that have better fitness values.

- Individuals: An individual is any point to which you can apply the fitness function. The value of the fitness function for an individual is its score. For example, if the fitness function is:

- f(x1,x2,x3)=(2x1+1)2+(3x2+4)2+(x3−2)2

- The vector (2, -3, 1), whose length is the number of variables in the problem, is an individual. The score of the individual (2, –3, 1) is f(2, –3, 1) = 51.

An individual is sometimes referred to as a genome or chromosome, and the vector entries of an individual as genes.

- Fitness functions: The fitness function is the function you want to optimize. For standard optimization algorithms, this is known as the objective function.

- Fitness values and best fitness values: The fitness value of an individual is the value of the fitness function for that individual. The best fitness value for a population is the smallest fitness or largest fitness value for any individual in the population, depending on the optimization problem.

- Convergence: The point at which the GA reaches a solution that meets the termination criteria. This can be an optimal or near-optimal solution.

- Search space: The set of all possible solutions to the optimization problem.

- Diversity: Diversity refers to the average distance between individuals in a population. A population has high diversity if the average distance is large; otherwise, it has low diversity. Diversity is essential to the genetic algorithm because it enables the algorithm to search a larger region of space.

- Genotype: The internal representation of a chromosome (e.g., a binary or numerical string).

- Phenotype: The actual solution represented by a chromosome. It is obtained by decoding the genotype.

- Crossover rate: The probability that two parents will undergo crossover to produce offspring in a new generation.

- Mutation rate: The probability that a gene (or a portion of the chromosome) will undergo mutation in a new generation.

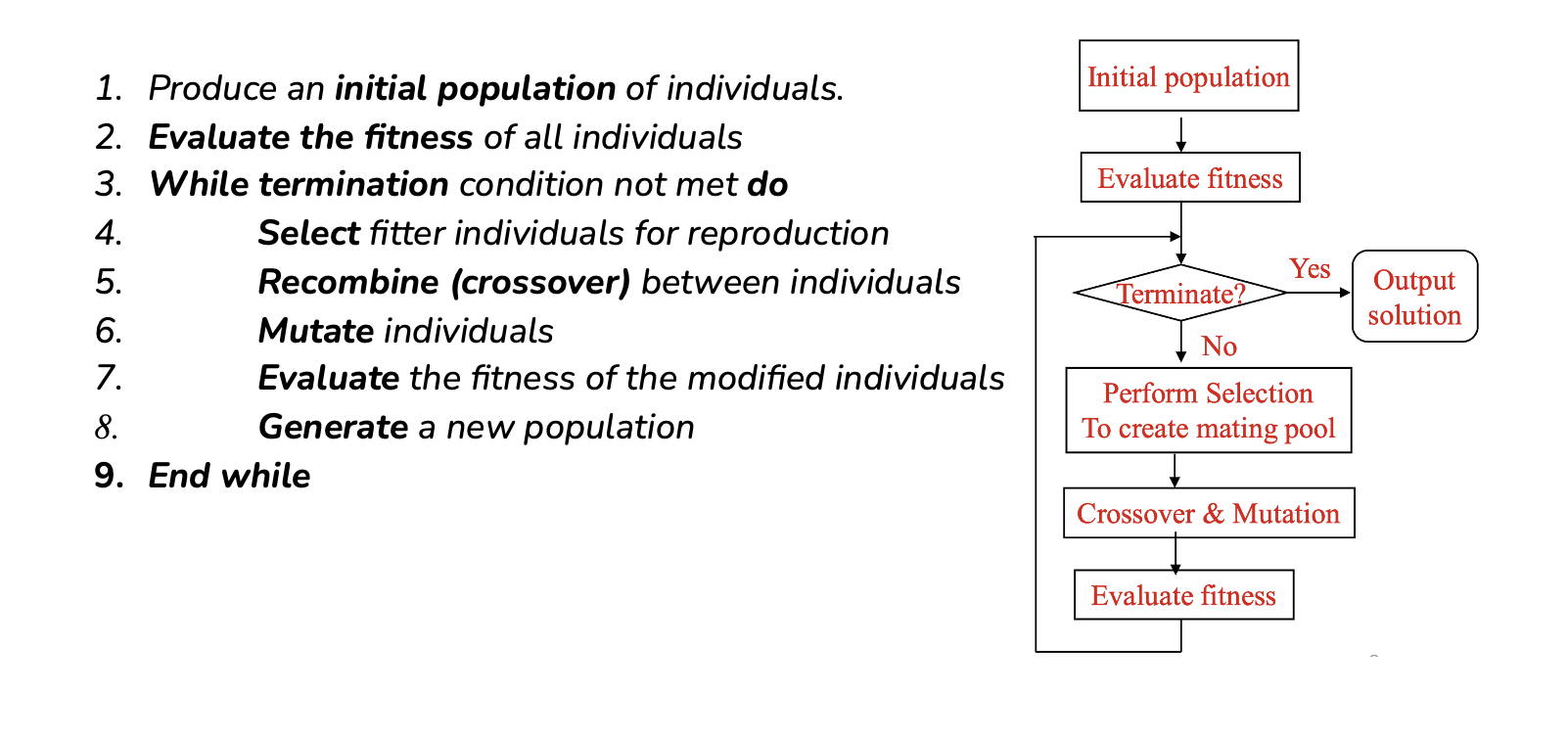

Fundamental Genetic Algorithm (GA): Pseudocode

Detailed Strategies of a Fundamental Genetic Algorithm (GA)

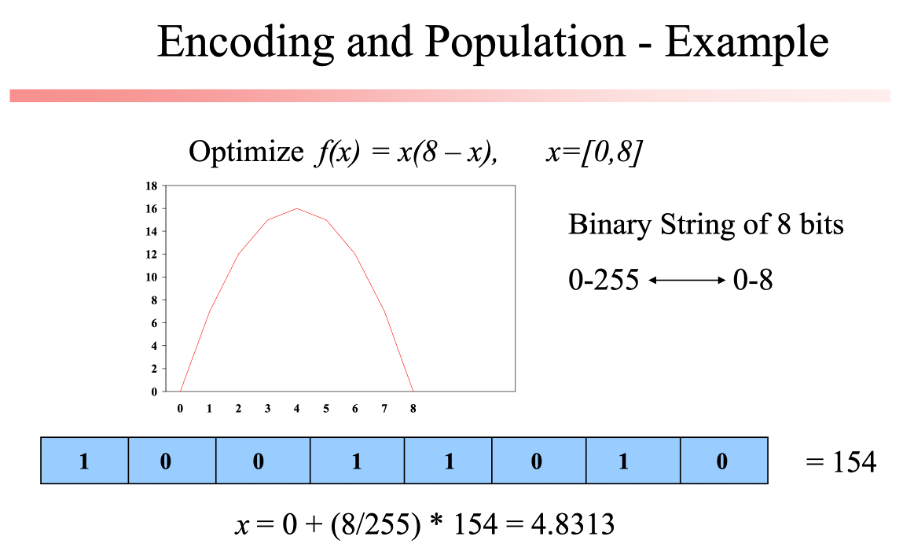

Encoding and Population

Chromosome encodes a solution in the search space

- Usually as strings of 0's and 1's

- If l is the string length, the number of different chromosomes (or strings) is 2l

Population

- A set of chromosomes in a generation

- Population size is usually constant

- Common practice is to choose the initial population randomly.

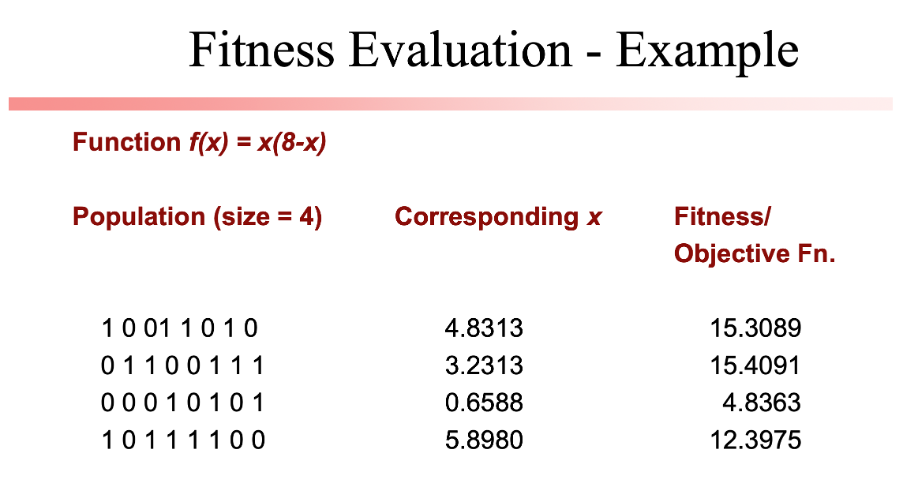

Fitness Evaluation

- Fitness/objective function is associated with each chromosome.

- This indicates the degree of goodness of the encoded solution.

- If the minimization problem is to be solved, then fitness = 1 / objective or fitness = -1 * objective.

- If the maximization problem is to be solved, then fitness = objective

Selection

- More copies to good strings.

- Fewer copies to bad string.

- Proportional selection scheme.

- Number of copies taken is directly proportional to its fitness.

- Mimics the natural selection procedure to some extent.

- Roulette wheel selection and Tournament selection are two frequently used selection procedures.

Crossover

- Exchange of genetic information

- It takes place between randomly selected parent chromosomes

- Single-point crossover and Uniform crossover are the most commonly used schemes.

- Probabilistic operation

Mutation

- Random alteration in the genetic structure

- Introduces genetic diversity into the population.

- Exploration of new search areas

- Mutating a binary gene involves simple negation of the bit

- Mutating a real coded gene defined in a variety of ways

- Probabilistic operation

Elitism

- A strategy that involves preserving the best-performing individuals from one generation to the next.

- The fittest individuals are guaranteed to survive and become part of the next generation without undergoing any genetic operations like crossover or mutation.

- Elitism ensures that the best solutions found so far are not lost during the evolutionary process and can continue to contribute to the population.

- It ensures Steady Improvement and Accelerated Convergence.

Termination Criteria

- The cycle of selection, crossover, and mutation is repeated a number of times until one of these occurs

- The average fitness value of a population is more or less constant over several generations.

- The desired objective function value is attained by at least one string in the population.

Number of generations (or iterations) is greater than some threshold (most commonly used).

Variations in Genetic Algorithms (GAs)

- Differential Evolution (DE): DE is a variant of GAs specifically designed for real-valued optimization problems. It uses vector-based mutation and recombination operators.

- Estimation of Distribution Algorithms (EDAs): EDAs model and learn the probability distribution of promising solutions in the population and use this distribution to generate new candidate solutions.

- Self-Adaptive Genetic Algorithms: Allow the algorithm to adapt its genetic operators (mutation rates, crossover types) based on the evolving population's characteristics, leading to efficient convergence.

- Niching Algorithms: These algorithms focus on finding multiple diverse solutions in a single run, often in multimodal optimization problems where there are multiple peaks or modes in the fitness landscape.

- Multi-objective Evolutionary Algorithms (MOEAs): MOEAs address problems with multiple conflicting objectives. They aim to find a set of Pareto-optimal solutions representing trade-offs between these objectives.

- Hybrid Algorithms: Integrate GAs with other optimization techniques, machine learning models, or domain-specific heuristics to enhance performance and robustness.

I aimed to provide a concise overview of Genetic Algorithms and Optimisation. However, if you have any particular questions or need more detailed information on this extensive subject, please feel free to ask in the comments.

I appreciate your time and attention! You may reach me on LinkedIn.

Opinions expressed by DZone contributors are their own.

Comments