How to Migrate Your Data From Redshift to Snowflake

How to configure a Redshift Airbyte source and a Snowflake Airbyte destination, and create an Airbyte connection that automatically migrates data to Snowflake.

For decades, data warehousing solutions have been the backbone of enterprise reporting and business intelligence. But, in recent years, cloud-based data warehouses like Amazon Redshift and Snowflake have become extremely popular. So, why would someone want to migrate from one cloud-based data warehouse to another?

The answer is simple: more scale and flexibility. With Snowflake, users can quickly scale data and compute resources independently, with nodes added automatically. Using the VARIANT data type, Snowflake also supports storing richer data such as objects, arrays, and JSON. As Redshift users know, debugging Redshift is not always straightforward either. Sometimes the desire to migrate goes beyond feature differences: maybe your team simply knows Snowflake better than Redshift, or perhaps your organization wants to standardize on one particular technology.

This recipe explains the steps you need to take to migrate from Redshift to Snowflake using Airbyte, so that you can get more business value from your data.

Prerequisites

1. You'll need to get Airbyte to move your data. To deploy Airbyte, follow the simple instructions in our documentation here.

2. Both Redshift and Snowflake are SaaS offerings, and you'll need an account on each platform to get started.

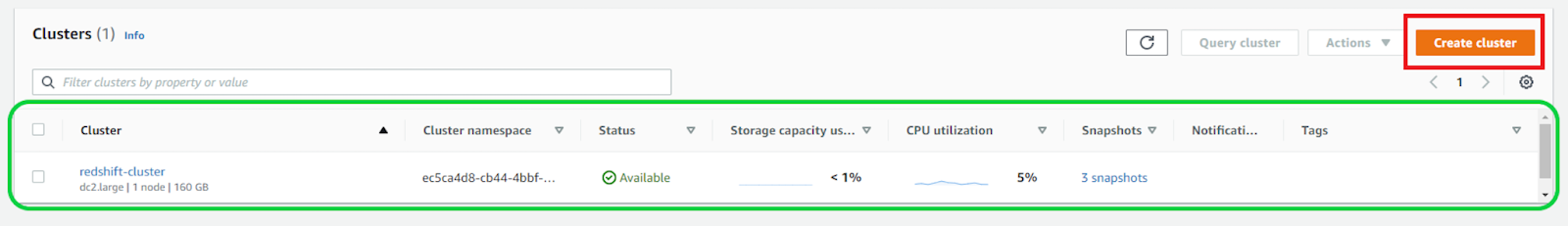

3. When you create a data warehouse cluster with Redshift, it includes sample data by default. Our data is stored in the "dev" database of the "redshift-cluster" cluster that we created. Existing clusters are listed on the dashboard under "Amazon Redshift > Clusters". To create a new cluster, click on the icon highlighted below and follow along.

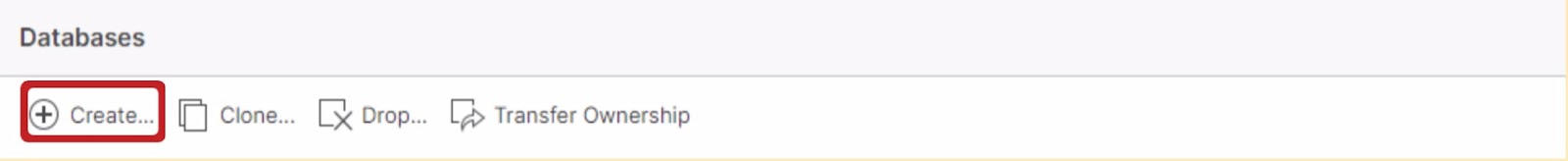

4a. For the Destination, you will need to create an empty database and warehouse within Snowflake to host your data. To do so, click on the databases icon as shown in the navigation bar and hit "Create..."

Provide a name for your database. In the example below, we have named our database "Snowflake_Destination."

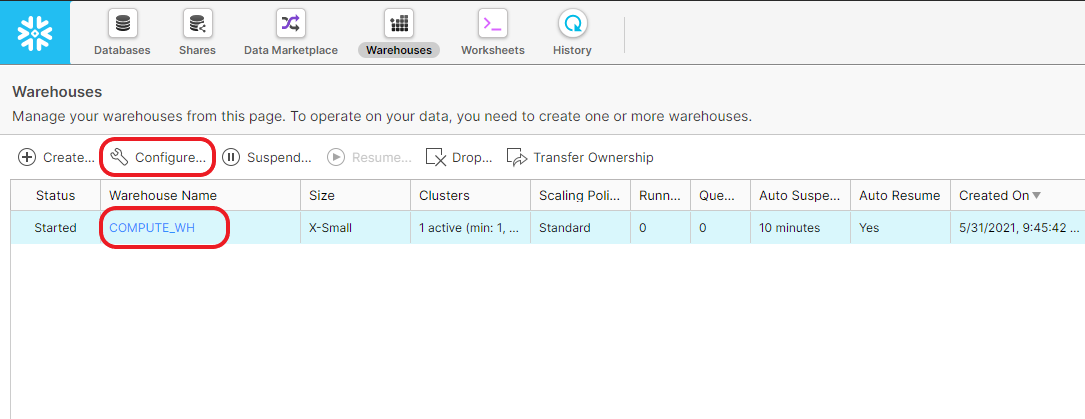

4b. After setting up the database, click on the warehouse icon and create a warehouse named "COMPUTE_WH." In our example, we have used an x-small compute instance. However, you can scale up the compute instance using bigger instance types or adding more instances. This can be achieved with a few simple clicks and will be demonstrated in a later section to illustrate the business value of the migration. Now you have all the prerequisites to start the migration.
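If you prefer SQL to the web console, the same two objects can be created from a Snowflake worksheet. The sketch below only builds the statements as strings so you can review them first. The database and warehouse names come from this recipe; the helper name and the `AUTO_SUSPEND` and `AUTO_RESUME` settings are our own assumptions and are not something the recipe requires.

```python
from typing import List


def snowflake_setup_statements(database: str, warehouse: str, size: str = "XSMALL") -> List[str]:
    """Return SQL that creates an empty database and a small warehouse."""
    return [
        f"CREATE DATABASE IF NOT EXISTS {database};",
        (
            f"CREATE WAREHOUSE IF NOT EXISTS {warehouse} "
            f"WITH WAREHOUSE_SIZE = '{size}' "
            # Assumed cost-saving settings: suspend after 60 idle seconds, resume on demand.
            "AUTO_SUSPEND = 60 AUTO_RESUME = TRUE;"
        ),
    ]


for statement in snowflake_setup_statements("Snowflake_Destination", "COMPUTE_WH"):
    print(statement)
```

Paste the printed statements into a worksheet, or run them with whichever Snowflake client you already use.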

Step 1: Set Up the Redshift Source in Airbyte

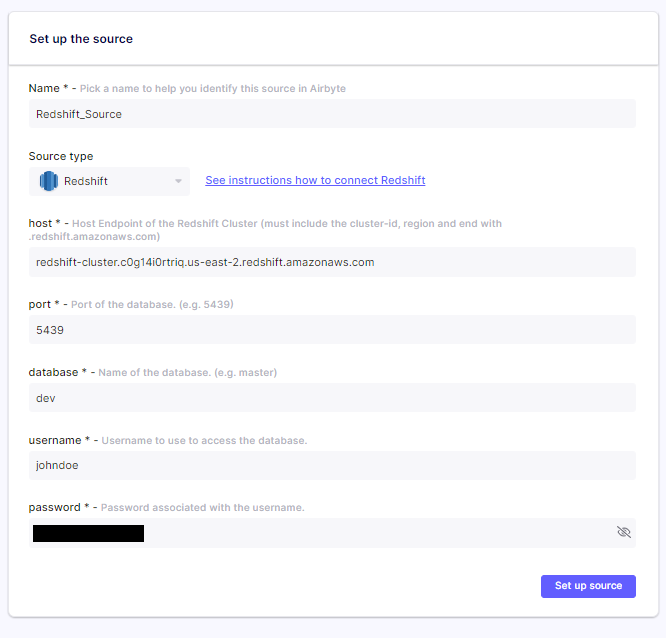

Open Airbyte by navigating to http://localhost:8000 in your web browser. Proceed to set up the source by filling out the details as follows:

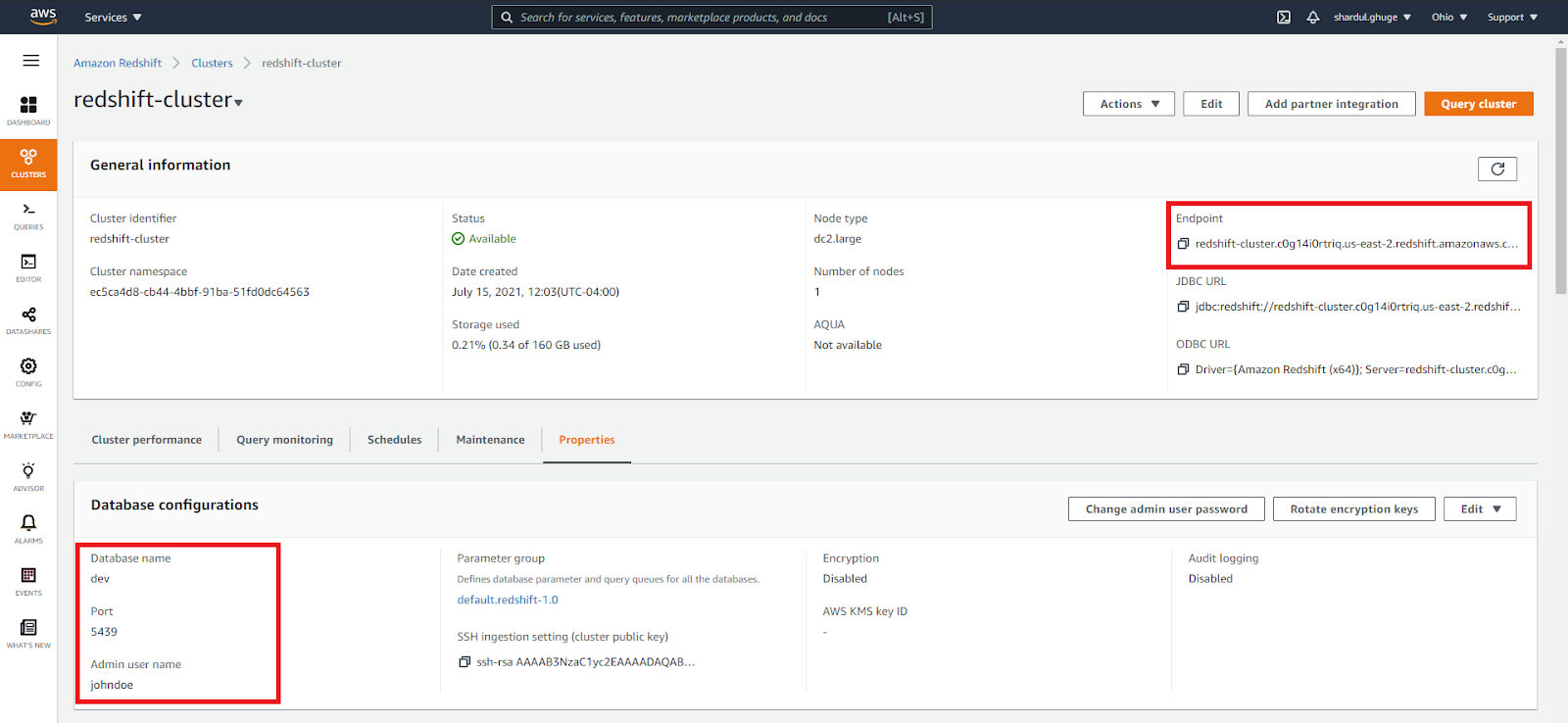

In the "Set up the source" Airbyte screen, we have named the source "Redshift_Source" in this example, but you can change it to something else if you like. We picked the source type as Redshift. The remaining information can be obtained from the redshift-cluster dashboard, as illustrated in the highlighted parts of the screenshot below.

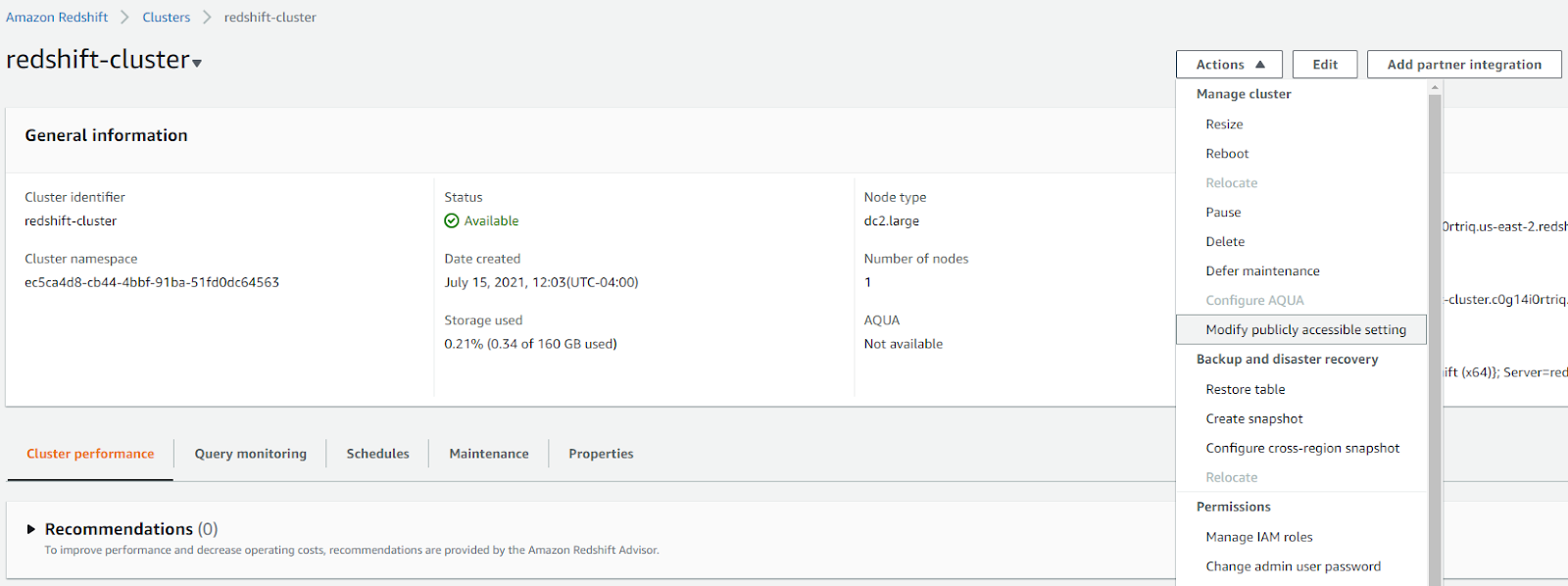

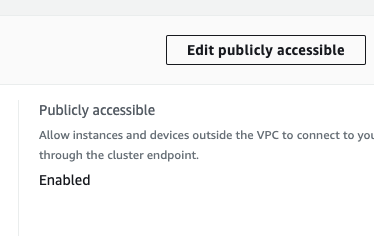
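Before typing the values into the form, it can help to collect them in one place and check that nothing is missing. The following is a minimal sketch: the class and field names are hypothetical and do not mirror Airbyte's internal configuration format, and the host and username are placeholders you would replace with the values from your own cluster dashboard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RedshiftSourceSettings:
    """Values the Redshift source form asks for (field names are illustrative)."""
    host: str
    database: str
    username: str
    password: str
    port: int = 5439  # the Redshift port used throughout this recipe


def missing_fields(settings: RedshiftSourceSettings) -> List[str]:
    """Return the names of fields that are still empty."""
    return [name for name, value in vars(settings).items() if value in ("", None)]


settings = RedshiftSourceSettings(
    host="redshift-cluster.example.us-east-1.redshift.amazonaws.com",  # placeholder endpoint
    database="dev",
    username="awsuser",  # placeholder user
    password="",         # left empty on purpose to show the check
)
print(missing_fields(settings))  # prints ['password']
```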

Apart from this, a few more settings need to be adjusted in your Redshift console. For example, suppose you are running Airbyte locally on your machine and connecting to Redshift. In that case, you will need to enable your cluster to be publicly accessible over the internet so that Airbyte can connect to it. You can do so by navigating to the "Actions" dropdown menu on the Redshift console, clicking "Modify publicly accessible setting," and changing it to be enabled (if not already).

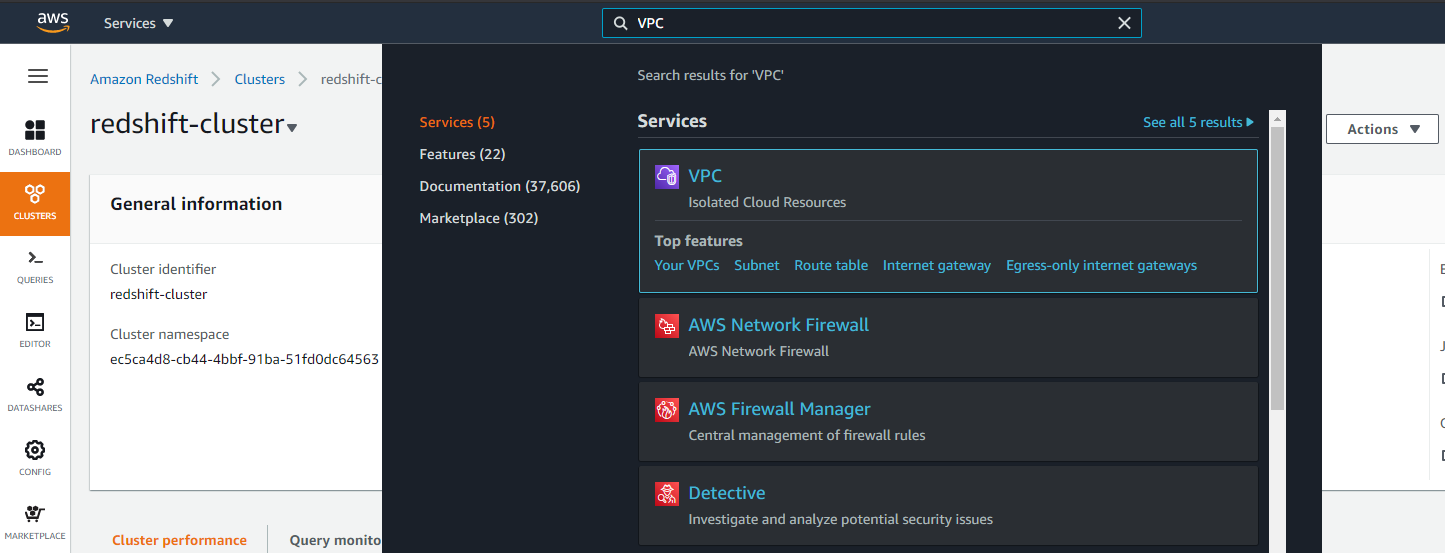

Next, go to the VPC service offered by AWS. You can do so by searching for it in the search bar, as shown below.

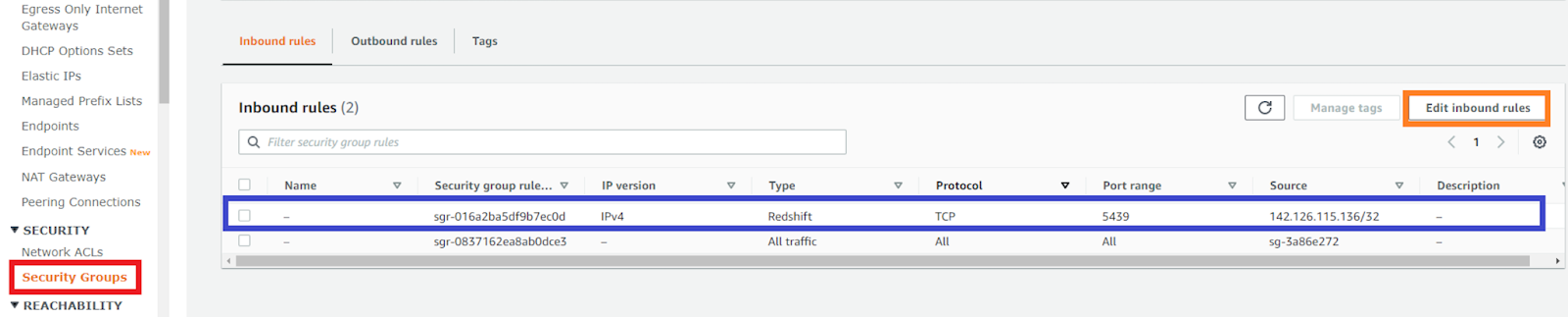

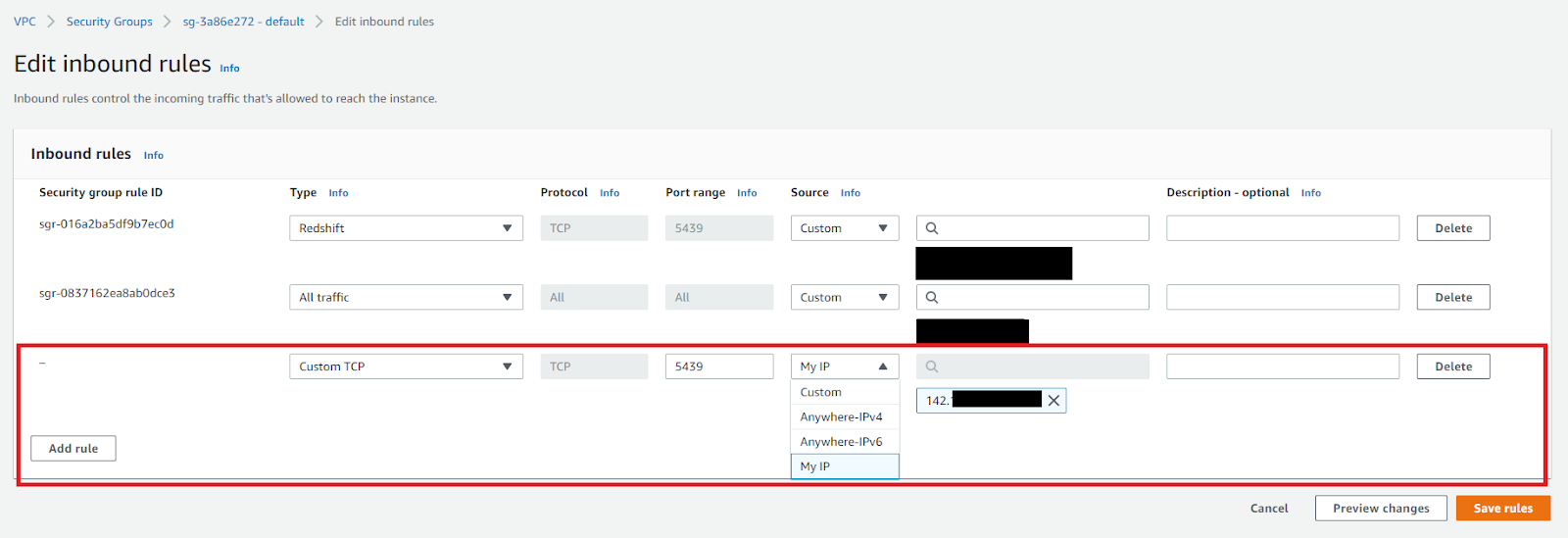

Then navigate to the "Security Groups" tab on the left to reach a page like the one below, where you can create custom inbound and outbound rules. For example, you will need to create a custom TCP rule on port 5439 that allows incoming connections from your local IP address. After the rule is saved, it will be displayed on the dashboard as highlighted in blue.

The custom inbound rule needs to have the specifications as outlined in the image below. Note: the same step needs to be done for the outbound rules as well, and then your Redshift source will be ready for connection with Airbyte.
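The rule described above can also be expressed in code. This sketch builds the permission structure in the shape that boto3's EC2 client expects for `authorize_security_group_ingress`; it does not call AWS itself. The function name, the description text, and the example IP address (taken from a documentation-only range) are assumptions for illustration.

```python
import ipaddress
from typing import Any, Dict, List

REDSHIFT_PORT = 5439


def redshift_ingress_rule(local_ip: str) -> List[Dict[str, Any]]:
    """Build a TCP rule on port 5439 that admits a single IPv4 address."""
    address = ipaddress.ip_address(local_ip)  # raises ValueError if malformed
    if address.version != 4:
        raise ValueError("this sketch only handles IPv4 addresses")
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": REDSHIFT_PORT,
            "ToPort": REDSHIFT_PORT,
            # /32 restricts the rule to exactly one address.
            "IpRanges": [{"CidrIp": f"{address}/32", "Description": "Airbyte running locally"}],
        }
    ]


print(redshift_ingress_rule("203.0.113.10"))
```

With boto3 installed and AWS credentials configured, the returned list could be passed as `IpPermissions` to `authorize_security_group_ingress`, together with the `GroupId` of your cluster's security group, and to `authorize_security_group_egress` for the matching outbound rule.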

Step 2: Set Up the Snowflake Destination in Airbyte

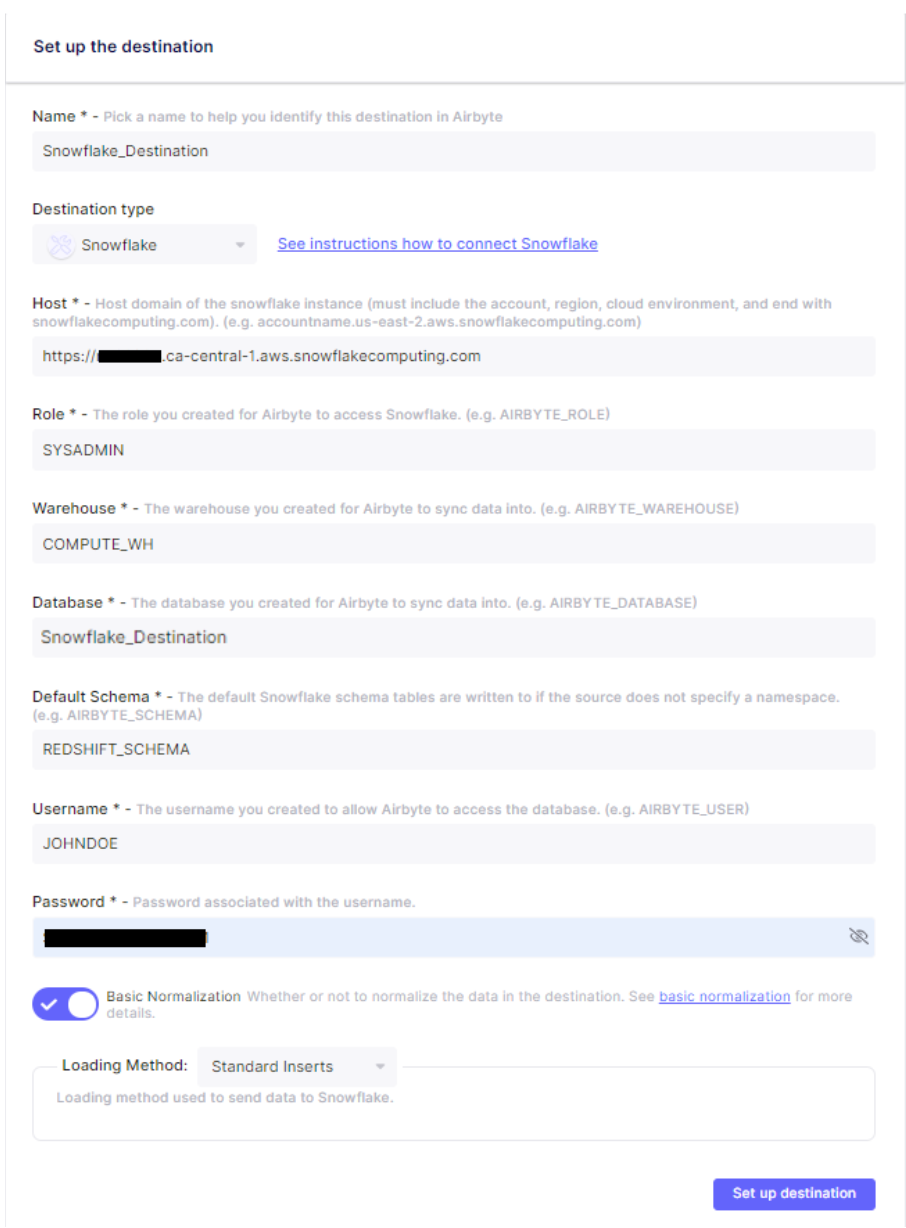

After the Source is configured, proceed to the "Set up the destination" page in Airbyte to configure the destination.

Similar to our previous step, the destination name is customizable. In this example, we have used “Snowflake_Destination.” Then, we picked the destination type as Snowflake. Refer to the image below for details on the other required fields.

The host value can be retrieved from your Snowflake dashboard.

Note: For this example, we are using the SYSADMIN role in Snowflake, but it is highly recommended to create a custom role in Snowflake with reduced privileges for use with Airbyte. By default, Airbyte uses Snowflake's default schema for writing data, but you can put data in another schema if you wish (like REDSHIFT_SCHEMA in our case). Having a separate schema is helpful when you want to keep the data you are migrating apart from existing data. Lastly, the username and password should match the credentials you set up when creating your Snowflake account.
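As a rough illustration of what a reduced-privilege role could look like, the sketch below generates the SQL for a dedicated role that can only use one warehouse and write to one schema. The role and user names (`AIRBYTE_ROLE`, `AIRBYTE_USER`) are hypothetical, and the exact set of grants Airbyte needs may differ between versions, so treat this as a starting point to check against the Airbyte Snowflake destination docs rather than a definitive list.

```python
from typing import List


def airbyte_role_statements(role: str, user: str, database: str,
                            warehouse: str, schema: str) -> List[str]:
    """Return SQL that creates a narrowly scoped role for the migration."""
    target_schema = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role};",
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        # A separate schema keeps migrated data apart from existing data.
        f"CREATE SCHEMA IF NOT EXISTS {target_schema};",
        f"GRANT ALL PRIVILEGES ON SCHEMA {target_schema} TO ROLE {role};",
        f"GRANT ROLE {role} TO USER {user};",
    ]


for statement in airbyte_role_statements(
    role="AIRBYTE_ROLE",       # hypothetical role name
    user="AIRBYTE_USER",       # hypothetical, pre-existing user
    database="Snowflake_Destination",
    warehouse="COMPUTE_WH",
    schema="REDSHIFT_SCHEMA",
):
    print(statement)
```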

Hit the “Setup the destination” button, and if everything goes well, you should see a message telling you that all the connection tests have passed.

Step 3: Set Up the Connection

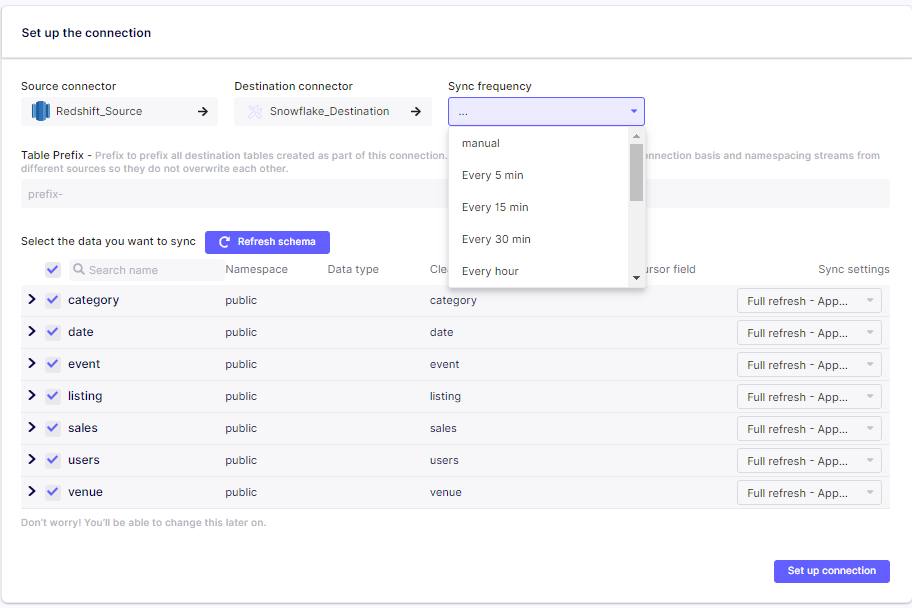

Once the destination is set up, you will be directed to the "Set up the connection" screen in Airbyte. In this step, you will notice that Airbyte has already detected the tables and schemas to migrate. By default, all tables are selected for migration. However, if you only want a subset of the data to be migrated, you can deselect the tables you wish to skip. In this step, you can also specify details such as the sync frequency between source and destination. There are several interval options, as shown in the figure, ranging from every 5 minutes to every hour. You can also control how much data each sync moves by selecting the sync mode that fits your use case. See the sync modes section of the Airbyte docs for more details.
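To make the difference between sync modes more concrete, here is a toy, in-memory model of two of them: a full refresh that overwrites the destination, and an incremental append that only copies rows newer than a saved cursor. This is not Airbyte's implementation; the function names, the `updated_at` cursor column, and the sample rows are all assumptions chosen for illustration.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, int]


def full_refresh_overwrite(source: List[Row]) -> List[Row]:
    """Replace the destination with a fresh copy of every source row."""
    return [dict(row) for row in source]


def incremental_append(source: List[Row], destination: List[Row],
                       cursor_field: str,
                       last_cursor: Optional[int]) -> Tuple[List[Row], Optional[int]]:
    """Append only rows whose cursor value is newer than the saved cursor."""
    new_rows = [row for row in source
                if last_cursor is None or row[cursor_field] > last_cursor]
    new_cursor = max((row[cursor_field] for row in new_rows), default=last_cursor)
    return destination + new_rows, new_cursor


source = [{"id": 1, "updated_at": 100}, {"id": 2, "updated_at": 200}]
destination, cursor = incremental_append(source, [], "updated_at", None)

# A new row arrives in the source; the second sync copies only that row.
source.append({"id": 3, "updated_at": 300})
destination, cursor = incremental_append(source, destination, "updated_at", cursor)
print(len(destination), cursor)  # prints: 3 300
```

For a one-off migration, a full refresh is usually enough; incremental modes matter more when the connection keeps running on a schedule and the source tables are large.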

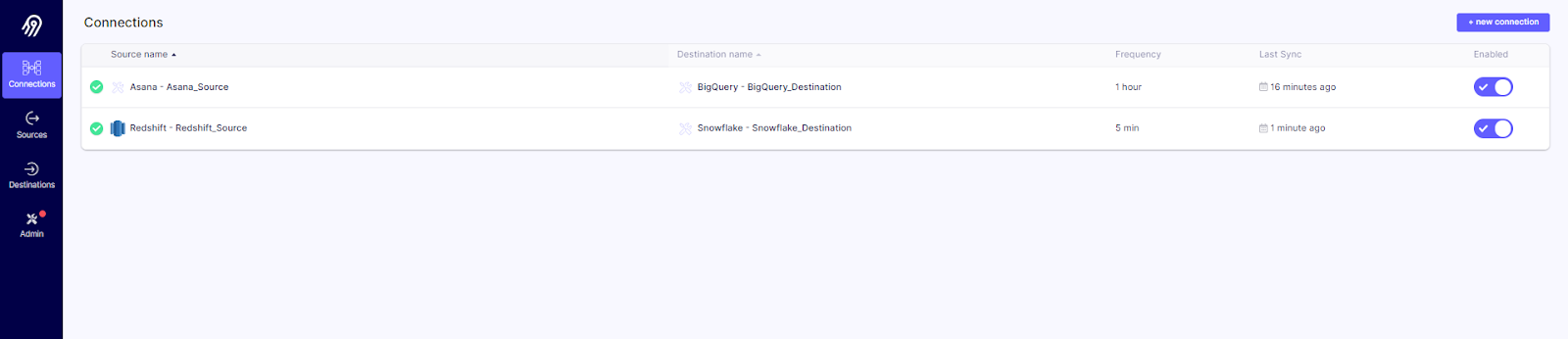

After we have specified all our customizations, we can click on "Set up Connection" to kick off the data migration from Redshift to Snowflake. At a glance, you'll be able to see the last sync status of your connection and when the previous sync happened.

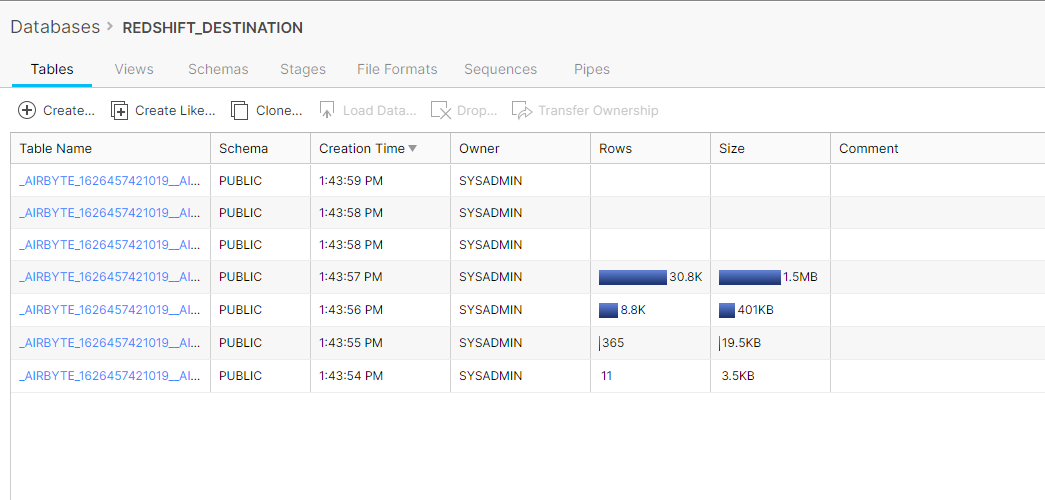

That's how easy it is to move your data from Redshift to Snowflake using Airbyte. To validate that the migration has happened, look at the destination database in Snowflake, and you will notice that additional tables are present.
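Beyond eyeballing the table list, a simple sanity check is to compare row counts on both sides. The sketch below assumes you have already collected the counts yourself (for example with `SELECT COUNT(*)` on each table in Redshift and in Snowflake) and only does the comparison. The helper name and the sample table names and counts are made up for illustration, and the destination names should be those of the final tables Airbyte created, which may not match the source names exactly.

```python
from typing import Dict, List


def find_mismatches(source_counts: Dict[str, int],
                    destination_counts: Dict[str, int]) -> List[str]:
    """Report tables that are missing or have a different row count."""
    # Redshift reports lower-case names and Snowflake upper-case ones,
    # so compare case-insensitively.
    destination = {name.lower(): count for name, count in destination_counts.items()}
    problems = []
    for table, expected in sorted(source_counts.items()):
        actual = destination.get(table.lower())
        if actual is None:
            problems.append(f"{table}: missing in destination")
        elif actual != expected:
            problems.append(f"{table}: source={expected} destination={actual}")
    return problems


redshift_counts = {"users": 10, "sales": 5}    # made-up sample counts
snowflake_counts = {"USERS": 10, "SALES": 4}   # made-up sample counts
print(find_mismatches(redshift_counts, snowflake_counts))
# prints ['sales: source=5 destination=4']
```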

Step 4: Evaluating the Results

After successfully migrating the data, let's evaluate the key Snowflake features that inspired the migration. In Snowflake, compute and storage are decoupled, so storage capacity does not depend on cluster size. Furthermore, Snowflake lets you scale with three simple clicks, compared to Redshift's more cumbersome process.
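The same resize can be done with a single SQL statement instead of clicks. This sketch builds an `ALTER WAREHOUSE` statement for the "COMPUTE_WH" warehouse from this recipe; the helper name is an assumption, and the list of sizes is only a subset of what Snowflake offers.

```python
VALID_SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE", "XXLARGE")


def resize_warehouse_statement(warehouse: str, size: str) -> str:
    """Return SQL that changes the size of an existing warehouse."""
    normalized = size.upper().replace("-", "")  # accept "x-small" as well as "XSMALL"
    if normalized not in VALID_SIZES:
        raise ValueError(f"unsupported size in this sketch: {size}")
    return f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{normalized}';"


print(resize_warehouse_statement("COMPUTE_WH", "medium"))
# prints: ALTER WAREHOUSE COMPUTE_WH SET WAREHOUSE_SIZE = 'MEDIUM';
```

Because storage is decoupled from compute, resizing the warehouse this way does not move or copy the migrated data.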

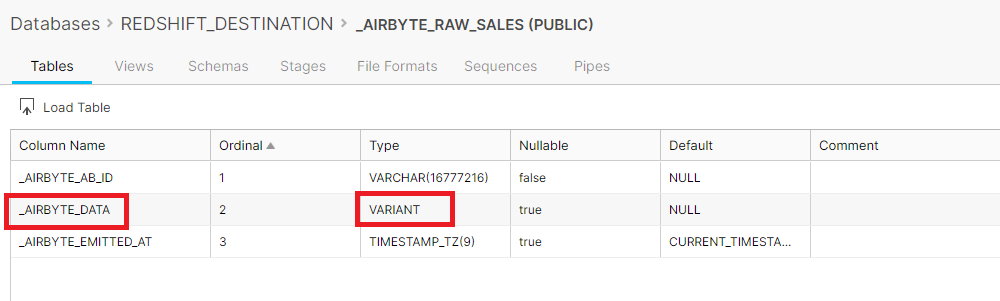

As shown in the figure below, Airbyte loads JSON data into a Snowflake table using the VARIANT data type. Using Snowflake's powerful JSON querying tools, you can work with JSON data that is stored in a table along with non-JSON data. On the other hand, Redshift has limited support for semistructured data types, requiring multiple complex sub-table joins to produce a reporting view.
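Here is a small sketch of what querying a VARIANT column can look like. It builds a `SELECT` that uses Snowflake's `column:path::TYPE` notation to pull typed fields out of the JSON. The table name `_AIRBYTE_RAW_USERS`, the column name `_AIRBYTE_DATA`, and the JSON keys are assumptions for illustration; check the tables Airbyte actually created in your destination schema and adjust accordingly.

```python
from typing import Dict, Tuple


def variant_query(table: str, column: str, fields: Dict[str, Tuple[str, str]]) -> str:
    """Build a SELECT that extracts typed fields from a VARIANT column.

    `fields` maps an output alias to a (json_path, sql_type) pair.
    JSON keys in the path are case-sensitive in Snowflake.
    """
    selections = [
        f"{column}:{path}::{sql_type} AS {alias}"
        for alias, (path, sql_type) in fields.items()
    ]
    return f"SELECT {', '.join(selections)} FROM {table};"


query = variant_query(
    table="REDSHIFT_SCHEMA._AIRBYTE_RAW_USERS",  # assumed table name
    column="_AIRBYTE_DATA",                      # assumed VARIANT column
    fields={"user_id": ("userid", "NUMBER"), "city": ("city", "STRING")},
)
print(query)
```

The resulting query returns ordinary typed columns, so it can be joined with non-JSON tables or wrapped in a view for reporting.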

Wrapping Up

To summarize, here is what we’ve done during this recipe:

- Configured a Redshift Airbyte source

- Configured a Snowflake Airbyte destination

- Created an Airbyte connection that automatically migrates data from Redshift to Snowflake

- Explored the easy-to-use scaling feature and support for JSON data in Snowflake using the VARIANT data type

We know that development and operations teams working on fast-moving projects with tight timelines need quick answers to their questions from developers who are actively developing Airbyte. They also want to share their learnings with experienced community members who have “been there and done that.”

Published at DZone with permission of John Lafleur. See the original article here.

