How to Deal With Slow APIs

APIs that perform well at the beginning can degrade over time. Learn how to manage and reverse these performance issues.

Thanks to Santhosh Gandhe, Senior Engineering Manager at LeanTaaS, for sharing his thoughts with the DZone community.

As an engineer, you spend a lot of time with APIs: you’re either building APIs for others or consuming others’ APIs. Working with APIs is as much an art as a science. One of the most common mistakes engineers make is not thinking about performance from the ground up. We want to make things work first and deal with performance later. That’s fine, but it helps to keep performance in mind while building v1 so you can avoid chaos later. If you’ve done this long enough, you know the pattern: everything works well at first, but over time things slow down.

It gets trickier when we’re consuming others’ APIs, which we have little to no control over. Many APIs respond within milliseconds at first, but as complexity grows over time, they start to slow down. A single user action could trigger an expensive query in an API we depend on, and everything stalls. Most APIs don’t come with a performance expectation or guarantee, so when consuming them, it helps to know what can slow them down, especially in the critical paths of your project.

Consider a use case where two or more user actions share a single view area to display their responses. If the API behind one of these actions takes a long time, the view can end up showing the wrong response unless that case is handled properly.

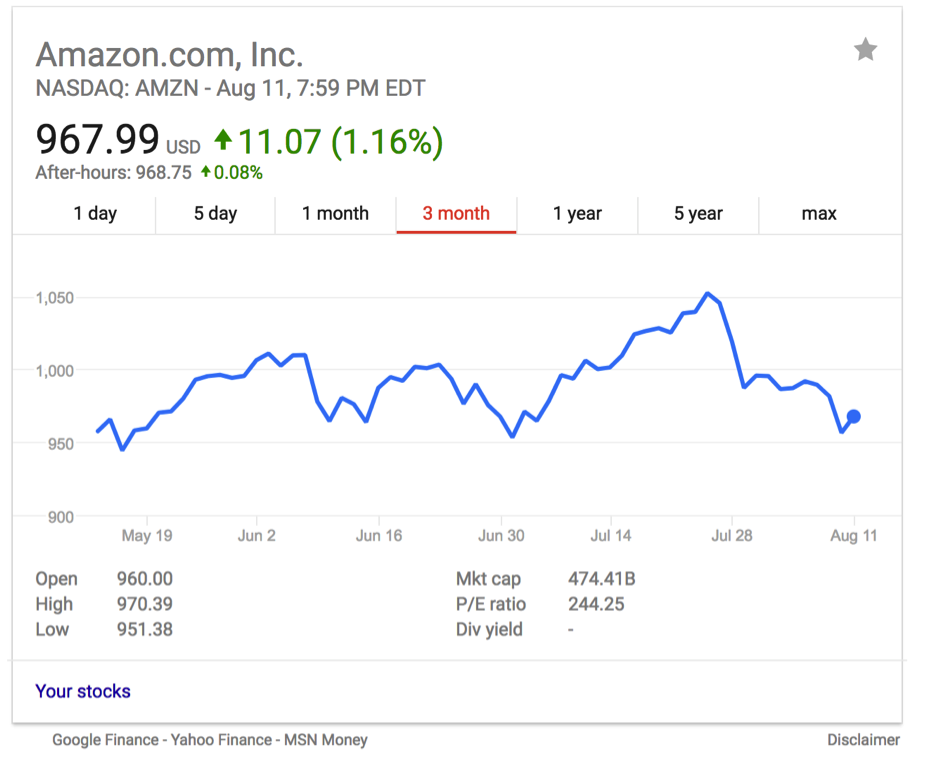

For example, search Google for 'amzn stock' and you’ll see something like the image above: a single stock trend chart controlled by user-selected time ranges such as 1 day, 5 days, 1 month, and so on.

As the user switches between time periods, the view area reflects the change. Now suppose your API call to fetch the 1-year trend chart has become slow. The user clicks 1 year, waits, loses patience, and switches to a shorter period, say 3 months, which loads almost instantly. While they are viewing the 3-month chart, the slow 1-year call finally returns and redraws the chart with 1-year data.
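To make the race concrete, here is a minimal TypeScript sketch that simulates it. fetchTrend is a hypothetical stand-in for the chart API, and setTimeout delays replace real network latency. The 200 ms and 20 ms values are arbitrary.

```typescript
// Hypothetical stand-in for the chart API: resolves after `delayMs`
// with a label for the requested range.
function fetchTrend(range: string, delayMs: number): Promise<string> {
  return new Promise((resolve) => setTimeout(() => resolve(`chart:${range}`), delayMs));
}

// Naive view: whatever response arrives last is drawn in the shared chart area.
let rendered = "";

function selectRange(range: string, delayMs: number): Promise<void> {
  return fetchTrend(range, delayMs).then((data) => {
    rendered = data; // no check for whether this range is still selected
  });
}

// The user clicks 1Y (slow), loses patience, then clicks 3M (fast).
const done = Promise.all([selectRange("1Y", 200), selectRange("3M", 20)]);
```

After both calls settle, rendered holds chart:1Y even though the user's current selection is 3M. The slow response simply wrote last.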

This is not a complicated problem to solve: check the currently active time period and ignore any response that arrives late for a different one. But while building v1, you may not think you need this handling, because all of your APIs returned immediately during development. You may not have anticipated that your APIs could slow down in certain scenarios or over time.
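Below is a minimal sketch of that check, assuming the view remembers which range is selected. ChartView, select and onResponse are hypothetical names used only for illustration, and the ranges are the article's examples.

```typescript
// Time ranges from the stock-chart example.
type TimeRange = "1D" | "5D" | "1M" | "3M" | "1Y";

// Hypothetical view that only draws responses for the active range.
class ChartView {
  private activeRange: TimeRange = "1D";
  rendered: string | null = null;

  // Called on click: remember what the user is looking at now.
  select(range: TimeRange): void {
    this.activeRange = range;
  }

  // Called when any response arrives; returns false if it was ignored.
  onResponse(range: TimeRange, data: string): boolean {
    if (range !== this.activeRange) {
      return false; // late answer for a range the user already left
    }
    this.rendered = data;
    return true;
  }
}
```

Note that the slow request still runs to completion; the view just ignores its answer. The approach also relies on the view holding state about what it expects, which becomes a problem once that state lives in a shared service.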

Now, if such a scenario affects the state of a common shared service that serves many components in your application, the situation can get a lot worse, and finding the root cause can become very complicated.

Let’s see how we could solve this. An easy way is to keep a flag in that shared service that tracks which backend API call we’re expecting a response from. But what if we call the same API multiple times? The flag won’t work. We could extend the flag to store the ‘state’ of each backend API call, but that gets messy and complicated. Every time we store state, we take a big risk, because we need to “remember” to keep that state updated as the API changes, and that almost always leads to bugs down the road.

The less “global” state you maintain, the better. It not only helps keep your code simple and modular, but it also gives you more freedom to increase concurrency.

A better approach here is not to deal with the response from a slow API at all, but to stop receiving responses from it. Terminate the API calls whose responses you no longer care about and move on. You can do that by keeping track of all in-progress API calls: when you fire a new one, terminate the older ones you no longer need.
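One way to keep track of in-progress calls is sketched below. The sketch rests on one assumption: each call is represented by an object with an abort() method. Both jQuery's jqXHR and the browser's AbortController have one. The Abortable interface, the InFlightRequests class and the "chart" slot key are hypothetical names for illustration.

```typescript
// Anything that can be aborted, e.g. a jqXHR or an AbortController.
interface Abortable {
  abort(): void;
}

// Hypothetical registry of in-flight calls, one per view slot.
class InFlightRequests {
  private pending = new Map<string, Abortable>();

  // Abort whatever is still running for `slot`, then track `req`.
  replace(slot: string, req: Abortable): void {
    const previous = this.pending.get(slot);
    if (previous && previous !== req) {
      previous.abort();
    }
    this.pending.set(slot, req);
  }

  // Call when `req` settles so a finished call is no longer tracked.
  settle(slot: string, req: Abortable): void {
    if (this.pending.get(slot) === req) {
      this.pending.delete(slot);
    }
  }

  // Abort everything, e.g. when the view is torn down.
  abortAll(): void {
    this.pending.forEach((req) => req.abort());
    this.pending.clear();
  }

  count(): number {
    return this.pending.size;
  }
}
```

With jQuery, the object returned by $.ajax(...) can be passed straight to replace. You can then call settle from its always callback.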

If you’re using jQuery’s $.ajax method, keep a reference to the jqXHR object it returns (jQuery’s wrapper around the browser’s XMLHttpRequest) and call its abort() method at the right point in your flow.

If you’re using ES6 promises, sorry, that just won’t work: a native promise has no cancel method, so once all you hold is the promise, you cannot terminate the in-progress API call behind it. More about promises here.
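If you are stuck with plain promises, a common workaround is to wrap the promise so that its result is discarded once you no longer want it. This sketch is illustrative only: makeCancelable and CanceledError are hypothetical names, not a standard API. It does not stop the underlying request. It only keeps the late result from reaching your code.

```typescript
interface Cancelable<T> {
  promise: Promise<T>;
  cancel(): void;
}

// Rejection reason used once the caller has canceled.
class CanceledError extends Error {
  constructor() {
    super("canceled");
    this.name = "CanceledError";
  }
}

// Wrap `source` so that, after cancel(), its outcome is replaced by a
// CanceledError. The request behind `source` keeps running regardless.
function makeCancelable<T>(source: Promise<T>): Cancelable<T> {
  let canceled = false;
  const promise = new Promise<T>((resolve, reject) => {
    source.then(
      (value) => (canceled ? reject(new CanceledError()) : resolve(value)),
      (error) => (canceled ? reject(new CanceledError()) : reject(error)),
    );
  });
  return { promise, cancel: () => { canceled = true; } };
}
```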

Welcome to the RxJS world! RxJS tries to bring order to the chaos of API performance with a fully asynchronous, event-driven model. If something takes time, let it take time. Let’s run with whatever we have. I’m not here to debate whether it’s good or bad or whether it’s the best approach, but I find it handy for dealing with these types of situations.

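In this case, you can simply apply a switch operator to your observables: switch, named switchAll in current RxJS versions, or switchMap, which maps and switches in one step. This gets you the latest asynchronous event you are interested in without any state maintenance or manual termination of previously in-progress API calls. When you use the right constructs, the library unsubscribes from superseded observables for you. If those observables wrap cancellable requests, as RxJS’s own ajax helper does, unsubscribing also aborts the request.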

Check out this Plunker for an example.

In this example, a slow API is mimicked with Observable.timer. The RxJS Subject instance asyncActionSubject, created in the shared service’s constructor, uses the switch operator to switch to the latest observable it receives. RxJS takes care of the rest. Check out the RxJS GitHub page here for more details.
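For readers without RxJS at hand, the behavior the switch operator provides can be modeled in a few lines. What follows is a simplified, dependency-free TypeScript illustration of the semantics, not RxJS code. Job, createSwitcher and fakeApi are hypothetical names, and fakeApi plays the role that Observable.timer plays in the Plunker example.

```typescript
// An async job gets an `emit` callback and returns a teardown function.
type Job<T> = (emit: (value: T) => void) => () => void;

// Each call to next() tears down the previous job before starting the new
// one, much like switch unsubscribing from the previous inner observable.
function createSwitcher<T>(onValue: (value: T) => void) {
  let teardown: (() => void) | null = null;
  let generation = 0;
  return {
    next(job: Job<T>): void {
      teardown?.(); // stop the superseded job
      const mine = ++generation;
      teardown = job((value) => {
        // Guards jobs whose teardown cannot prevent a late emit.
        if (mine === generation) onValue(value);
      });
    },
  };
}

// Slow-API stand-in: emits after `delayMs`; teardown cancels the timer.
function fakeApi(range: string, delayMs: number): Job<string> {
  return (emit) => {
    const handle = setTimeout(() => emit(`chart:${range}`), delayMs);
    return () => clearTimeout(handle);
  };
}
```

When the 3M job starts, the switcher tears down the pending 1Y job, so only chart:3M reaches the view. No flag or per-call state lives outside the switcher itself.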

Key Takeaways

When consuming APIs, always think about what scenarios could slow them down. You can get a sense of this from what the API does, how much and what type of data it returns, and what happens when the simple case you test with becomes complex. This is especially important if your code path serves user actions with lots of filters and selectors, for example.

Always think about the big picture: how will users interact with your code, and how will that in turn affect the APIs you consume? Step back, think about what can go wrong, and handle those cases from the ground up.

Always try to be as decentralized and stateless as you can. Centralization and statefulness are enemies of debugging and concurrency. You will save a LOT of headaches down the road.

Be careful with asynchronous calls: they are useful, but they can get tricky. Understand how they work in your case and what happens when the calls return out of order.

Last but not least, less is always better. Ask only for the data you need, and consume only the data you actually use, even if an API returns more.

Thanks to Amy Tsai for helping with this post.

###

Santhosh Gandhe is Engineering Manager for LeanTaaS, a software company applying lean principles, predictive analytics, and machine learning to solve some of the most challenging operational issues in health care. Prior to joining LeanTaaS, Santhosh held senior engineering management positions with Bed, Bath and Beyond, Inc.; Wizcom Corporation; Agentrics; and MyGenSource. He holds a Master of Computer Applications from Kakatiya University.

Opinions expressed by DZone contributors are their own.
