Apache Parquet vs. CSV Files

When you only pay for the queries that you run, or resources like CPU and storage, it is important to look at optimizing the data those systems rely on.

Join the DZone community and get the full member experience.

Join For FreeYou have surely read about Google Cloud (i.e. BigQuery, Dataproc), Amazon Redshift Spectrum, and Amazon Athena. Now, you are looking to take advantage of one or two. However, before you jump into the deep end, you will want to familiarize yourself with the opportunities of leveraging Apache Parquet instead of regular text, CSV, or TSV files. If you are not thinking about how to optimize for these new query service models, you are throwing money out the window.

What Is Apache Parquet?

Apache Parquet is a columnar storage format with the following characteristics:

Apache Parquet is designed to bring efficient columnar storage of data compared to row-based files like CSV.

Apache Parquet is built from the ground up with complex nested data structures in mind.

Apache Parquet is built to support very efficient compression and encoding schemes.

Apache Parquet allows for lower data storage costs and maximized effectiveness of querying data with serverless technologies like Amazon Athena, Redshift Spectrum, and Google Dataproc.

Parquet is a self-describing data format that embeds the schema or structure within the data itself. This results in a file that is optimized for query performance and minimizing I/O. Parquet also supports very efficient compression and encoding schemes.

Parquet and the Rise of Cloud Warehouses and Interactive Query Services

The rise of interactive query services like Amazon Athena, PrestoDB and Redshift Spectrum makes it easy to use standard SQL to analyze data in storage systems like Amazon S3. If you are not yet sure how you can benefit from those services, you can find more information in this intro post about Amazon Redshift Spectrum and this post about Amazon Athena features and benefits. Also, data warehouses like Google BigQuery and the Google Dataproc platform can leverage different formats for data ingest.

The data format you select can have significant implications on performance and cost. We will walk you through a few examples of those considerations.

Parquet vs. CSV

CSV is simple and ubqitous. Many tools like Excel, Google Sheets, and a host of others can generate CSV files. You can even create them with your favoritre text editing tool. We all love CSV files, but everything has a cost — even your love of CSV files, especially if CSV is your default format for data processing pipelines.

Amazon Athena and Spectrum charge you by the amount of data scanned per query. (Many other services also charge based on data queried, so this is not unique to AWS.)

Google and Amazon charge you for the amount of data stored on GS/S3.

Google Dataproc charges are time-based.

Defaulting to the use of CSV will have both technical and financial outcomes (not in a good way). You will learn to love Parquet just as much as your trusty CSV.

Example: A 1 TB CSV File

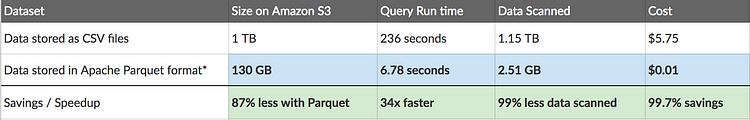

The following demonstrates the efficiency and effectiveness of using a Parquet file vs. CSV.

By converting your CSV data to Parquet’s columnar format and then compressing and partitioning it, you save money and reap the rewards of better performance. The following table compares the savings created by converting data into Parquet vs. CSV.

If over the course of a year, you stick with the uncompressed 1 TB CSV files as foundation of your queries, your costs will be $2,000. Using Parquet files, your total cost will be $3.65. I know you love your CSV files, but do you love them that much?

Also, if time is money, your analysts can be spending close to five minutes waiting for a query to complete simply because you use raw CSV. If you are paying someone $150 an hour and they are doing this once a day for a year then they spent about 30 hours simply waiting for a query to complete. That is roughly about $4,500 in unproductive “wait” time. Total wait time for the Apache Parquet user? About 42 mins or $100.

Example 2: Parquet, CSV, and Your Redshift Data Warehouse

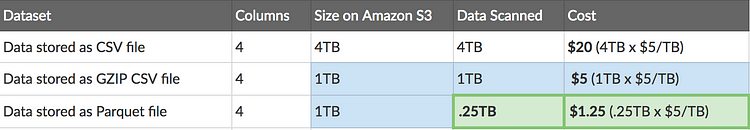

Amazon Redshift Spectrum enables you to run Amazon Redshift SQL queries against data in Amazon S3. This can be an effective strategy for teams that want to partition data when some of it resides within Redshift and other data resides on S3. For example, let’s assume you have about 4 TB of data in a historical_purchase table in Redshift. Since it's not accessed frequently, offloading it to S3 makes sense. This will free up that space in Redshift while still providing your team access via Spectrum. Now, the big question becomes: What format are you storing that 4 TB historical_purchase table in? CSV? How about using Parquet?

historical_purchase table has four equally sized columns, stored in Amazon S3 in three files: uncompressed CSV, GZIP CSV, and Parquet.

- Uncompressed CSV file: The uncompressed CSV file has a total size of 4 TB. Running a query to get data from a single column of the table requires Redshift Spectrum to scan the entire file 4 TB. As result, this query will cost $20.

- GZIP CSV file: If you compress your CSV file using GZIP, the file size is reduced to 1 GB. Great savings! However, Redshift Spectrum still has to scan the entire file. The good news is your CSV file is four times smaller than the uncompressed one, so you pay one fourth of what you did before. So this query will cost $5.

- Parquet file: If you compress your file and convert it to Apache Parquet, you end up with 1 TB of data in S3. However, because Parquet is columnar, Redshift Spectrum can read only the column that is relevant for the query being run. It only needs to scan just one-fourth of the data. This query would only cost $1.25.

If you are running this query once a day for a year, using uncompressed CSV files will cost $7,300. Even compressed CSV queries will cost over $1,800. However, using the Apache Parquet file format, it will cost about $460. Still in love with your CSV file?

Summary

The trend toward “serverless,” interactive query services, and zero administration pre-built data processing suites is rapidly progressing. It is providing new opportunities for teams to go faster with lower investments. Athena and Spectrum make it easy to analyze data in Amazon S3 using standard SQL. Also, Google supports loading Parquet files into BigQuery and Dataproc.

When you only pay for the queries that you run, or resources like CPU and storage, it is important to look at optimizing the data those systems rely on.

Published at DZone with permission of Thomas Spicer. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments