How to Apply the 80:20 Rule to Performance Testing

Maintaining performance is essential, so performance testing is a necessity. The performance testing space continues to evolve, however, in this age of continuous integration and delivery. Here's an overview of the 80:20 rule as applied to performance testing, from the fear of testing to testing complex scenarios.

Performance testing continues to change rapidly in the age of continuous integration and continuous delivery. Yet many companies are still apprehensive about large performance tests, which they see as daunting and time-consuming. This post argues that teams should integrate performance testing earlier in the development cycle, even if it doesn’t fully test all the flows in the website or application, because the resulting data will still be very valuable.

The Fear of Testing

Despite the transformation of software development cycles, performance testing, if it is done at all, often remains relegated to the later stages of the product’s lifecycle. Part of the reason for this is fear. Testing is scary. It’s difficult enough just getting started with unit, integration, and functional testing, and for many users, adding performance testing on top of that sounds incredibly hard. It requires a different set of expertise to even get started, and there is also the impression that it takes a lot of time.

Back in the days before Agile and continuous integration, when the waterfall development model dictated that software was built first and made stable at the end, a performance expert could work with the dev teams to create a realistic simulation of user traffic (the flows, plus the calculated number of users for each). However, this not only consumed a lot of time and resources; you also had to wait to get value from the test results before you could start fixing performance issues, which is harder to do in the late stages of the development process.

Using the 80:20 Approach to Performance Testing

In a world where applications are constantly changing, such an approach is ineffective and inefficient. The frequent build and release process of continuous integration necessitates finding performance bottlenecks early and having an easy way to overcome them. I suggest taking inspiration from the 80:20 rule, the Pareto principle, and starting to test small and early.

Teams should spend 20% of the effort to get 80% of the knowledge needed to get started. You’ll have more tests, which will be easy to run many times at various stages of the development process and which will, in the big picture, give you plenty of data to work with. Connecting simple scenarios to the CI pipeline ensures you’ll keep learning as the app continues to evolve.

Companies have also been increasingly adopting other ways to save time and test faster, including a shift toward open source tools for performance testing and leveraging the cloud to run load tests globally at massive scale.

It’s important to accept that, while teams want the data from their performance tests to be 100% accurate (including the number of concurrent users, the functions being triggered, and requests per second, as well as covering all the flows), you don’t have to adhere to this all-or-nothing strategy. The “good enough” approach leaves room for early testing, and it’s also easier and cheaper to find and fix performance issues early on. When working with CI pipelines, we have the chance to run a lot of tests, all of the time, so even if the tests and results are incomplete, you will still get value.

Work Toward Testing Simple Scenarios

So you might be asking: how can we practically apply this in real scenarios? For example, when running performance tests on a static site, instead of creating flows, you can just hit random pages (you might choose them according to the most visited pages, for example) and then iterate over the list. Similarly, when testing an API, a mobile app, or microservices, just hit endpoints, not flows. Additionally, work toward testing simple scenarios first. For example, if several API endpoints are required to complete a business transaction, simulate it.
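To make the "hit pages, not flows" idea concrete, here is a minimal sketch in Python using only the standard library. It is not how any particular tool works; the function names (`fetch_status`, `run_user`, `run_simple_load`) and the sample dictionary layout are assumptions made up for this illustration. Each simulated user simply picks random URLs from a list and records the status and response time.

```python
import random
import time
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch_status(url, timeout=10):
    """Fetch one URL and return its HTTP status code (0 if no response)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with a 4xx/5xx status
    except Exception:
        return 0  # connection error, timeout, or similar


def run_user(urls, requests_per_user, fetch, rng):
    """Simulate one user hitting randomly chosen pages (no flows)."""
    samples = []
    for _ in range(requests_per_user):
        url = rng.choice(urls)
        started = time.perf_counter()
        status = fetch(url)
        elapsed = time.perf_counter() - started
        samples.append({"url": url, "status": status, "seconds": elapsed})
    return samples


def run_simple_load(urls, users=5, requests_per_user=10,
                    fetch=fetch_status, seed=None):
    """Run `users` simulated users concurrently and return all samples."""
    seeder = random.Random(seed)
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [
            pool.submit(run_user, urls, requests_per_user, fetch,
                        random.Random(seeder.getrandbits(32)))
            for _ in range(users)
        ]
        return [sample for future in futures for sample in future.result()]
```

Calling `run_simple_load(pages, users=3, requests_per_user=5)` with your own list of `pages` returns one sample per request. The `fetch` parameter is injectable so the same sketch can be pointed at API endpoints with a different request function, or exercised without any network access.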

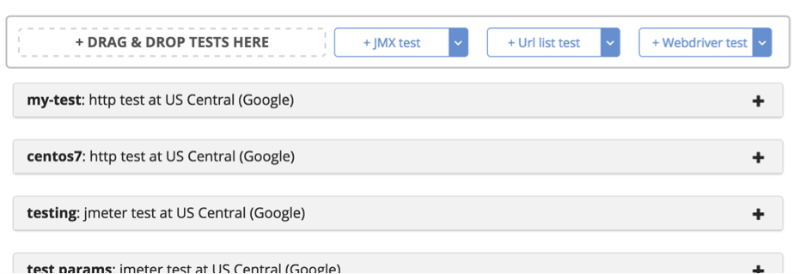

I want to demonstrate how to do this with BlazeMeter. The basic scenario of testing a static content site can be handled by BlazeMeter’s URL test. In it, you can list all the resources you’d like to test, set the number of users, and click ‘play’ to generate the traffic.

You can also choose to create a very basic test using JMeter to test your REST API. Then you can go to your CI tool of choice and configure BlazeMeter’s plugin for it. This will enable you to add a BlazeMeter test step to your build process, in which you can configure a performance test to run on your application when it’s built. You can learn more about this process in BlazeMeter’s documentation.

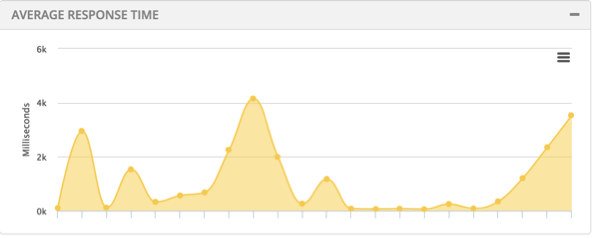

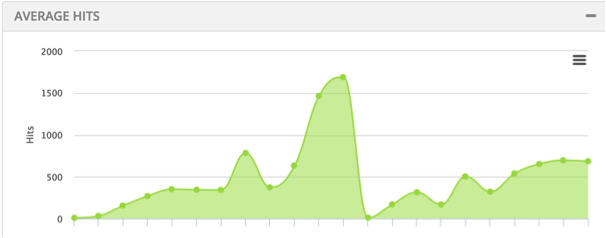

Not only will you be able to view the results of each specific build in BlazeMeter, and even set fail criteria on the test (which your CI tool can later use to help determine whether the build is successful), but you will also see the performance trends of your application and how it has progressed between builds. So even if your application’s performance is currently up to par, you will be able to predict how it will behave in later builds and solve issues before they occur.
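The idea of fail criteria and build-to-build trends can be sketched independently of any tool. The following Python example is an illustration under stated assumptions, not BlazeMeter's implementation: the function names, the two thresholds (90th-percentile response time and error rate), and the sample format (`status` and `seconds` keys) are all hypothetical.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct from 0 to 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_build(samples, max_p90_seconds, max_error_rate):
    """Apply two hypothetical fail criteria to one build's samples."""
    if not samples:
        raise ValueError("no samples to evaluate")
    p90 = percentile([s["seconds"] for s in samples], 90)
    # Anything outside 2xx/3xx counts as an error in this sketch.
    errors = sum(1 for s in samples if not 200 <= s["status"] < 400)
    error_rate = errors / len(samples)
    failures = []
    if p90 > max_p90_seconds:
        failures.append(f"p90 {p90:.3f}s exceeds {max_p90_seconds:.3f}s")
    if error_rate > max_error_rate:
        failures.append(
            f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    return {"p90_seconds": p90, "error_rate": error_rate,
            "passed": not failures, "failures": failures}


def p90_trend(history, window=3):
    """Relative change of the latest p90 versus the mean of recent builds.

    `history` lists p90 values, oldest first. A result of 0.25 means the
    latest build is 25% slower than the average of the preceding builds.
    """
    if len(history) < 2:
        return 0.0
    previous = history[-(window + 1):-1]
    baseline = sum(previous) / len(previous)
    if baseline == 0:
        return 0.0
    return (history[-1] - baseline) / baseline
```

In a CI step, a script could exit with a non-zero status when `passed` is false so the build is marked as failed, and could warn when `p90_trend` keeps climbing even though each individual build still passes.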

Next Steps: Testing Complex Scenarios

With all that said, “large” performance tests are still very much needed. It is still important to try to run a real-world simulation: a complex scenario with real user flows. Taking the “good enough” approach, you can add another easy step in between. Take all the basic scenarios that you have created and continue to run, and configure them to run simultaneously. That way you reuse the scenarios you already have and start getting data to work with.

Along these lines, BlazeMeter lets you run several basic tests in parallel, each testing something different (e.g., loading a specific part of the app), making it easy to get a more comprehensive view of how the app behaves. BlazeMeter’s multi-test feature allows you to link several of your basic tests, run them simultaneously, and see all the results on the same graph.
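As a rough, tool-agnostic illustration of running basic scenarios side by side, the Python sketch below starts several scenario functions at the same time and merges their samples into one result set. It is not the multi-test feature itself; `run_multi_test`, `summarize_by_scenario`, and the sample format are hypothetical names chosen for this example, and each scenario is assumed to be a zero-argument callable that returns a list of sample dictionaries with a `seconds` key.

```python
from concurrent.futures import ThreadPoolExecutor


def run_multi_test(scenarios):
    """Run every scenario simultaneously and merge the samples.

    `scenarios` maps a scenario name to a zero-argument callable that
    returns a list of sample dicts. Each merged sample is tagged with the
    name of the scenario that produced it.
    """
    with ThreadPoolExecutor(max_workers=len(scenarios)) as pool:
        futures = {name: pool.submit(run) for name, run in scenarios.items()}
        results = {name: future.result() for name, future in futures.items()}
    return [
        dict(sample, scenario=name)
        for name, samples in results.items()
        for sample in samples
    ]


def summarize_by_scenario(merged):
    """Return request count and mean response time per scenario."""
    totals = {}
    for sample in merged:
        entry = totals.setdefault(sample["scenario"],
                                  {"count": 0, "seconds": 0.0})
        entry["count"] += 1
        entry["seconds"] += sample["seconds"]
    return {
        name: {"count": entry["count"],
               "mean_seconds": entry["seconds"] / entry["count"]}
        for name, entry in totals.items()
    }
```

Because the scenarios share the same application under test while they run, the merged samples reflect how one part of the app behaves while the others are also under load, which is the extra information a combined run adds over running each basic test alone.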

Taking advantage of the CI plugin is also possible here: simply configure the BlazeMeter step to start the new multi-test you’ve just created so that it runs with your build. This way, you’ll get the same BlazeMeter reporting, but on a much more advanced scenario.

So to sum up: aiming for 100% coverage in your tests is a great goal, and a very important one, but it’s not always the best approach in the short term. You can get a lot of information, and fix most issues, by running simple tests. When approaching performance testing in the CI process, just do it. Test early, test small, but just test!

Published at DZone with permission of Jason Silberman. See the original article here.
