How to Analyze Test Automation Results

Learn how to structure an approach for analyzing the large volume of results from your automated tests with monitoring, logging, and dashboards.

As test automation is introduced to the software delivery process, the amount of available test results explodes. Robots, or test execution agents, can run 24/7 without breaks, and, on top of this, the number of test cases grows with each sprint. As a result, more results are produced that must be managed and analyzed. This requires the right approach.

If the time spent investigating test results exceeds the time saved by running automated tests, then automation does not improve output quality and is not worth the cost. To reap the benefits of automation, it is essential to know how to handle the growing volume of test results properly.

Below are four tips for how to best handle and analyze test results generated by automation.

1. Set Up Automated Monitoring

Any test team already has plenty of tasks as part of the software delivery process, so simply adding the task of monitoring a result log does not necessarily improve quality.

Having a test team constantly monitor test results on their own comes with several risks, for example:

- How do you make sure that results are checked at regular intervals? Manual monitoring can be interrupted by calendar conflicts such as meetings and vacations.

- If test cases rarely fail, monitoring will be perceived as less important over time. This sentiment is very damaging to regression testing, which is all about identifying unforeseen problems at any time.

Instead, make sure that the tool used for test automation allows for setting up alerts and/or sending out messages when the test team needs to act, e.g., when one or more test cases fail or when the execution of a test case takes longer than a predefined critical limit.

With automated notifications like these, testers can check in on the automated test cases only when needed, and not waste time confirming that nothing has failed.
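As a rough illustration of the two alert conditions mentioned above (a failed case, or a case that runs longer than a critical limit), here is a minimal Python sketch. The `CaseResult` type, the `find_alerts` function, and the 60-second default limit are hypothetical names and values chosen for this example; they are not part of any particular automation tool.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    """One executed test case (hypothetical structure for this sketch)."""
    name: str
    passed: bool
    duration_seconds: float


def find_alerts(results, max_duration_seconds=60.0):
    """Return alert messages; an empty list means nobody needs to act."""
    alerts = []
    for result in results:
        if not result.passed:
            alerts.append(f"FAILED: {result.name}")
        elif result.duration_seconds > max_duration_seconds:
            alerts.append(
                f"SLOW: {result.name} took {result.duration_seconds:.1f}s "
                f"(limit {max_duration_seconds:.1f}s)"
            )
    return alerts


if __name__ == "__main__":
    nightly_run = [
        CaseResult("login", passed=True, duration_seconds=4.2),
        CaseResult("checkout", passed=False, duration_seconds=12.0),
        CaseResult("report_export", passed=True, duration_seconds=95.0),
    ]
    for message in find_alerts(nightly_run):
        # In practice this would be sent by email or chat instead of printed.
        print(message)
```

The point of the design is that a clean run produces no messages at all, so the team is only interrupted when there is something to investigate.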

2. Figure Out Why Cases Are Failing

If a tester spends more time analyzing why an automated test case fails than it takes to execute the case, automation loses its purpose. Investigating a failing test case and pinpointing the reason for failure should be easy and fast. Product owners, developers, and testers all need swift feedback to catch irregularities as fast as possible.

A test automation platform should include the following features to help testers be more productive in the analysis phase:

- Video recording of the machines running the test case. This is a very powerful tool as it allows the test team to see exactly what happened when the test case ran.

- Logging functionality. This should contain all output from the test case in the step-by-step order of how the test case was executed.

- Debug functionality. This could include a step-by-step walk-through of failing test cases to see values, states, etc. This is very helpful for identifying why a test case fails. In the LEAPWORK Automation Platform, the debugging functionality comes in the form of a scrubbing preview mode of the video recording.

- Replay functionality. Combining the video recording with the logging and debug functionalities allows you to see the big picture. With these insights, even testers who don't know much about the test case can debug it and draw conclusions quickly.
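To make the logging idea concrete, the following Python sketch stores the output of a test case in step-by-step order and locates the first step that failed. The `StepLog` structure and the helper names are assumptions made for this example, not the log format of any specific platform.

```python
from dataclasses import dataclass


@dataclass
class StepLog:
    """One logged step of a test case (hypothetical structure)."""
    index: int
    action: str
    passed: bool
    message: str = ""


def first_failing_step(steps):
    """Return the earliest failed step in execution order, or None."""
    for step in sorted(steps, key=lambda s: s.index):
        if not step.passed:
            return step
    return None


def format_log(steps):
    """Render the steps in the order they were executed."""
    lines = []
    for step in sorted(steps, key=lambda s: s.index):
        status = "OK" if step.passed else "FAIL"
        line = f"{step.index:>3} [{status}] {step.action}"
        if step.message:
            line += f": {step.message}"
        lines.append(line)
    return "\n".join(lines)


if __name__ == "__main__":
    steps = [
        StepLog(1, "Open login page", True),
        StepLog(2, "Enter credentials", True),
        StepLog(3, "Click 'Sign in'", False, "button not found"),
    ]
    print(format_log(steps))
    failing = first_failing_step(steps)
    if failing is not None:
        print(f"First failure at step {failing.index}: {failing.message}")
```

Pointing straight at the first failing step is usually the quickest way in, because later failures are often just consequences of it.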

3. Share Results

Release platforms like Quality Center, Jira, and TFS can be used for both managing tests and handling bugs. They are widely used among test teams as tools for keeping track of bugs, test strategies, test case descriptions, and more.

Introducing test automation probably won't change the fact that these platforms serve as the center of collective testing efforts. This is why you should integrate your test automation platform by either pushing results to the test management system or pulling results from the test automation platform using an API.
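A push integration could look roughly like the Python sketch below, which only builds the HTTP request and does not send it. The `/api/test-runs` path, the payload fields, and the bearer-token header are placeholders invented for this example; the real endpoint, schema, and authentication depend entirely on the test management system in use, so consult its API documentation.

```python
import json
import urllib.request


def build_push_request(base_url, run_id, results, api_token):
    """Build (but do not send) a POST request carrying test results.

    The URL path and JSON layout are hypothetical placeholders.
    """
    payload = {"run_id": run_id, "results": results}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/test-runs",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_push_request(
        base_url="https://test-management.example.com",
        run_id="nightly-regression",
        results=[{"name": "checkout", "status": "failed"}],
        api_token="REPLACE_ME",
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # Sending would be: urllib.request.urlopen(request)
```

Pulling works the same way in reverse: the test management system, or a small scheduled script, issues a GET request against the automation platform's API and stores what it receives.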

4. Use a Dashboard for Results

Fast and transparent feedback is a cornerstone in DevOps. This allows the development team to react to issues quickly and fix them before a bug is released into a production environment.

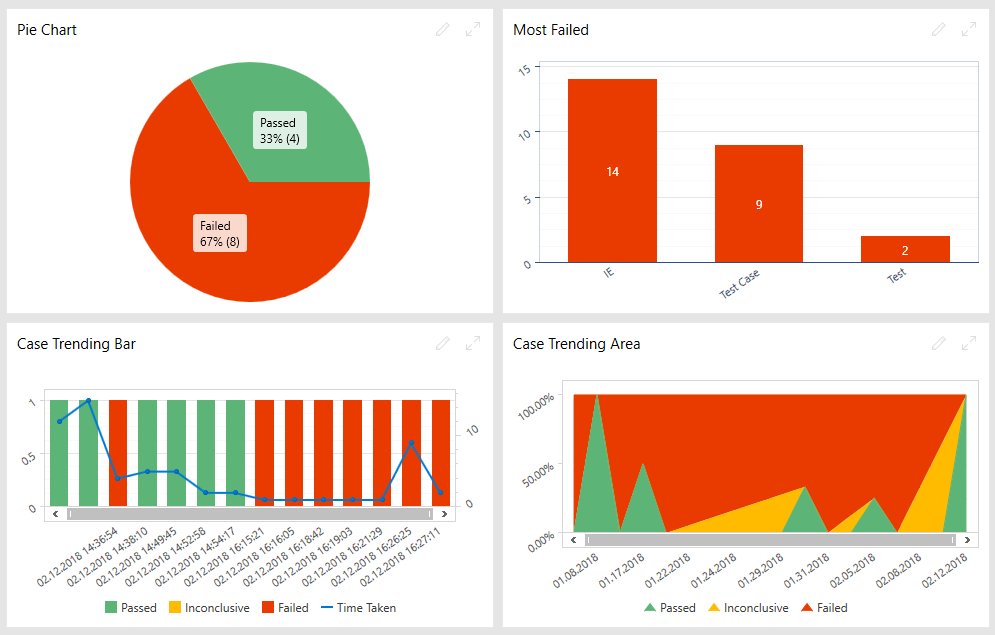

An effective way to share results in and between teams is to use visual dashboards on shared monitors in a team's workspace. For example, showing a simple graphical representation of the latest results from regression tests on the test environment will give the team a clear indication of the current quality of the software under test.

Example of a dashboard from the LEAPWORK Automation Platform.
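The numbers behind a simple dashboard tile can be computed with very little code. The Python sketch below reduces the latest regression results to a pass rate and a text bar; the status strings `"passed"` and `"failed"` and both function names are assumptions made for this example.

```python
from collections import Counter


def summarize(statuses):
    """Reduce a list of status strings to the counts a dashboard would show."""
    counts = Counter(statuses)
    total = sum(counts.values())
    passed = counts.get("passed", 0)
    pass_rate = (passed / total * 100) if total else 0.0
    return {
        "total": total,
        "passed": passed,
        "failed": counts.get("failed", 0),
        "pass_rate": round(pass_rate, 1),
    }


def render_bar(pass_rate, width=20):
    """Draw the pass rate as a fixed-width text bar."""
    filled = round(pass_rate / 100 * width)
    return "[" + "#" * filled + "." * (width - filled) + f"] {pass_rate:.1f}%"


if __name__ == "__main__":
    latest = ["passed", "passed", "failed", "passed"]
    summary = summarize(latest)
    print(f"{summary['passed']}/{summary['total']} passed, {summary['failed']} failed")
    print(render_bar(summary["pass_rate"]))
```

A real dashboard would refresh these figures after every run and render them graphically, but the underlying aggregation is no more complicated than this.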

Summary

- Set up automated monitoring to make sure testers spend their time most effectively.

- Figure out why test cases are failing by utilizing your test automation platform's logging, debugging, and reviewing functionalities.

- Integrate with your release management platform either by pushing or pulling test results.

- Ensure fast and transparent feedback with shared dashboards of real-time test results.

Published at DZone with permission of Kasper Fehrend, DZone MVB. See the original article here.
