How Many GPUs Should Your Deep Learning Workstation Have?

Trying to get the right number of GPUs for a deep learning workstation can be tricky. Here’s how to optimize your deep learning workstation without blowing your budget.


Choosing the Right Number of GPUs for a Deep Learning Workstation

If you are building or upgrading a deep learning workstation, you will inevitably wonder how many GPUs an AI workstation focused on deep learning or machine learning needs. Is one enough, or should you add two or four?

The GPU you choose is perhaps the most crucial decision for your deep learning workstation. When selecting a GPU, pay close attention to three areas: performance, memory, and cooling. Two companies dominate the discrete GPU market: NVIDIA and AMD. At the end of this guide, we give our recommendations for GPUs that excel in these areas.

Let’s discuss whether GPUs are a good choice for a deep learning workstation, how many GPUs are needed for deep learning, and which GPUs are the best picks for your deep learning workstation.

Can Any GPU Be Used For Deep Learning?

When you dive into deep learning, you have two choices for how your neural network models process information: using the processing power of CPUs or using GPUs. In brief, CPUs are the simplest and easiest way to get started, but they are generally far less efficient than GPUs for deep learning workloads.

GPUs have thousands of cores that execute many operations simultaneously, whereas a CPU has far fewer cores and works through tasks largely in sequence. This means you can get more done, faster, by using GPUs instead of CPUs. Most people in the AI community recommend GPUs over CPUs for deep learning for this very reason.
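To make the parallelism point concrete, here is a minimal Python sketch (not GPU code) built on matrix multiplication, the core operation inside neural networks. Every output element can be computed independently of the others, so the work can be spread across many workers at once. The function names are hypothetical, and threads merely stand in for GPU cores.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_sequential(a, b):
    """Compute a @ b one output element at a time, in order (CPU-style)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(rows)]


def matmul_parallel(a, b, workers=4):
    """Compute each output element as an independent task (GPU-style idea).

    Threads stand in for GPU cores. Because of CPython's GIL this is not
    faster; it only shows that the work splits into independent pieces.
    """
    rows, inner, cols = len(a), len(b), len(b[0])

    def cell(index):
        # Each task owns exactly one output element (i, j).
        i, j = divmod(index, cols)
        return sum(a[i][k] * b[k][j] for k in range(inner))

    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(cell, range(rows * cols)))  # map keeps input order
    # Reassemble the flat list of results into rows.
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_sequential(a, b))  # [[19, 22], [43, 50]]
print(matmul_parallel(a, b))    # [[19, 22], [43, 50]]
```

Both functions produce the same result; GPU linear algebra libraries apply the same idea across thousands of hardware cores, which is where the real speedup comes from.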

There is a wide range of GPUs to choose from for your deep learning workstation. As we discuss later, NVIDIA dominates the market for GPUs, especially for deep learning and neural networks. Broadly, though, there are three main categories to choose from: consumer-grade GPUs, data center GPUs, and managed workstations or servers.

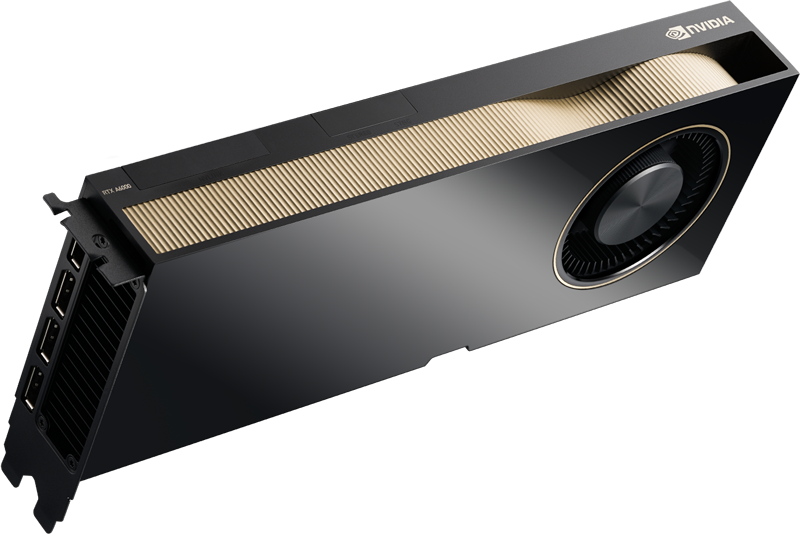

GPU

Consumer-grade GPUs are smaller and cheaper but aren’t quite up to the task of handling large-scale deep learning projects, although they can serve as a starting point for workstations.

These GPUs are an inexpensive way to build or upgrade a workstation, and they are excellent for model development and small-scale testing. However, once you start working with billions of data points, they become noticeably less efficient, and training takes far longer.

Data center GPUs are the industry standard for deep learning in production, and they are currently the best GPUs for deep learning. They are built for large-scale projects and deliver enterprise-level performance.

Managed workstations and servers are full-stack, enterprise-grade systems designed specifically for machine learning and deep learning workloads. They are plug-and-play and can be deployed on bare metal or in containers.

These systems go beyond hobby and small-business projects into corporate-scale use. For most individuals, they exceed both what the budget allows and what their workloads could fully utilize.

With all of this in mind, we recommend starting with high-quality consumer-grade GPUs unless you know you will be building or upgrading a large-scale deep learning workstation. In that case, we recommend looking at data center GPUs.

Is One GPU Enough For Deep Learning?

Now let's look at how many GPUs you need for deep learning. The training phase of a deep learning model is the most resource-intensive task for any neural network.

During training, a neural network processes input data, makes predictions, and compares them with known, expected results. It then adjusts its internal weights to reduce the difference, which is how the model learns to make predictions and forecasts on new inputs.
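The training cycle can be summarized as: predict, compare with the known results, and adjust the weights. Here is that cycle in a few lines of plain Python. It is a hypothetical toy example that fits a straight line with gradient descent rather than a real neural network, but deep learning frameworks repeat the same three steps over millions of weights, and that repeated arithmetic is the work GPUs accelerate.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b with plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # 1. Predict with the current weights.
        preds = [w * x + b for x in xs]
        # 2. Compare the predictions with the known results.
        errors = [p - y for p, y in zip(preds, ys)]
        # 3. Adjust the weights against the gradient of the MSE loss.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # hypothetical data that follows y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```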

This is why you need GPUs for deep learning. A GPU trains models more quickly by performing many of these operations at once rather than one after the other. However, the more data points a model has to process, the more work each training run involves, and a single GPU eventually becomes a bottleneck.

Adding a GPU gives the model another processor to share the work. In the common data-parallel approach, for example, each GPU processes a different slice of the data and the results are combined, so more data is processed in the same amount of time and the network learns faster.
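Splitting work across GPUs is most commonly done with data parallelism. Here is a minimal sketch of it, assuming a toy linear model and simulated GPUs (plain Python list slices stand in for devices, and all names are hypothetical). Each shard's gradient could be computed on its own GPU at the same time, and averaging the results gives the same update as processing the whole batch on one device.

```python
def mse_grad(w, b, xs, ys):
    """Gradient of mean squared error for y ~ w*x + b on one shard of data."""
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2 * sum(errors) / n
    return grad_w, grad_b


def data_parallel_grad(w, b, xs, ys, num_gpus):
    """Split one batch into equal shards, one per simulated GPU, and average.

    Averaging equal-sized shard gradients reproduces the full-batch gradient.
    """
    if len(xs) % num_gpus:
        raise ValueError("batch size must divide evenly across GPUs")
    shard = len(xs) // num_gpus
    # Each entry here would be computed concurrently on a separate GPU.
    grads = [mse_grad(w, b, xs[i * shard:(i + 1) * shard],
                      ys[i * shard:(i + 1) * shard])
             for i in range(num_gpus)]
    avg_w = sum(g[0] for g in grads) / num_gpus
    avg_b = sum(g[1] for g in grads) / num_gpus
    return avg_w, avg_b


xs = [float(i) for i in range(8)]
ys = [3 * x - 2 for x in xs]
full = mse_grad(0.5, 0.0, xs, ys)
split = data_parallel_grad(0.5, 0.0, xs, ys, num_gpus=4)
print(all(abs(f - s) < 1e-9 for f, s in zip(full, split)))  # True
```

Real frameworks perform the averaging step with a collective operation (an all-reduce) across GPUs, which is one reason fast GPU-to-GPU links matter as you add cards.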

Your motherboard plays an essential role here, because it determines how many PCIe slots are available for additional GPUs. Many workstation motherboards support up to four GPUs.

However, most GPUs are two PCIe slots wide, so if you plan to use multiple GPUs, you need a motherboard with enough space between its PCIe slots to accommodate them.
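To check whether a given board can physically host several two-slot cards, you can lay out the slots as in this hypothetical Python helper. It assumes you know which expansion-slot positions have x16 connectors and how many expansion slots your case provides; real boards also differ in how many PCIe lanes each slot receives, which this sketch ignores.

```python
def max_gpus_that_fit(slot_positions, card_width=2, total_slots=None):
    """Greedily place GPUs into x16 slots, skipping slots a card covers.

    slot_positions: 0-based expansion-slot indices (top to bottom) that have
        an x16 connector (hypothetical board layout).
    card_width: how many slot widths each GPU occupies.
    total_slots: expansion slots in the case; a card at position p needs
        slots p .. p + card_width - 1 to exist.
    """
    placed = []
    next_free = 0  # first slot index not covered by an already-placed card
    for pos in sorted(slot_positions):
        if pos < next_free:
            continue  # this connector is blocked by the card above it
        if total_slots is not None and pos + card_width > total_slots:
            break  # the card would hang past the last expansion slot
        placed.append(pos)
        next_free = pos + card_width
    return placed


# Hypothetical board: x16 connectors at every other slot in an 8-slot case.
print(max_gpus_that_fit([0, 2, 4, 6], card_width=2, total_slots=8))  # [0, 2, 4, 6]
# Hypothetical board: x16 connectors in adjacent slots.
print(max_gpus_that_fit([0, 1, 2, 3], card_width=2, total_slots=8))  # [0, 2]
```

Because every card has the same width, placing each card in the earliest free connector is optimal, so the length of the returned list is the maximum number of GPUs that physically fit.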

With the right number of GPUs for your workloads, your deep learning workstation can train models as efficiently as possible.
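One way to reason about the optimal number is Amdahl's law, which caps the speedup when only part of each training step scales across GPUs: speedup(n) = 1 / ((1 - p) + p / n), where n is the number of GPUs and p is the share of step time that splits perfectly across them. The sketch below uses a hypothetical p and a hypothetical "worth it" threshold, and it ignores communication overhead, so treat its numbers as an optimistic upper bound rather than a benchmark.

```python
def amdahl_speedup(n_gpus, p):
    """Ideal speedup over one GPU when a fraction p of each step scales perfectly."""
    return 1.0 / ((1.0 - p) + p / n_gpus)


def worthwhile_gpu_count(p, min_gain=0.20, max_gpus=8):
    """Largest GPU count reached before one more GPU adds less than min_gain.

    min_gain is a hypothetical threshold: 0.20 means "stop adding GPUs once
    the next one speeds training up by less than 20%".
    """
    n = 1
    while n < max_gpus:
        gain = amdahl_speedup(n + 1, p) / amdahl_speedup(n, p) - 1.0
        if gain < min_gain:
            break
        n += 1
    return n


# Hypothetical workload in which 95% of each training step parallelizes.
print([round(amdahl_speedup(n, 0.95), 2) for n in (1, 2, 4, 8)])  # [1.0, 1.9, 3.48, 5.93]
print(worthwhile_gpu_count(0.95))  # 4
```

In this model, going from four to eight GPUs still helps but returns less per card; measuring p on your own workload is what turns the estimate into a decision.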


Which GPU Is Best For Deep Learning?

As we mentioned earlier, many GPUs can be used for deep learning, but most of the best ones come from NVIDIA, and all of our recommendations reflect this: NVIDIA currently makes some of the highest-quality GPUs on the market. However, AMD is quickly gaining ground in graphics-intensive workloads and as the cornerstone of reliable data centers.

Whether you want to dip your toe in the deep learning waters with a consumer-grade GPU, jump in with our recommendation for a top-tier data center GPU, or make the leap to a managed workstation server, these top three picks have you covered.

The number of GPUs you can fit in a deep learning workstation varies with the GPU you choose, but ideally you should install as many as your workstation supports. Starting with at least four GPUs will be your best bet.

1. NVIDIA RTX A6000

The NVIDIA RTX A6000 is one of our favorite workstation-class GPUs. One of the highest-rated GPUs for any GPU-intensive build, the A6000 has 10,752 CUDA cores and 48 GB of VRAM, which makes it one of the premier choices for deep learning builds, upgrades, and applications.

With cutting-edge performance and features, the RTX A6000 lets you work at the speed of inspiration, tackling the urgent needs of today and the rapidly evolving, compute-intensive tasks of tomorrow.

Built on the NVIDIA Ampere architecture, the RTX A6000 combines 84 second-generation RT Cores, 336 third-generation Tensor Cores, and 10,752 CUDA Cores with 48 GB of graphics memory for rendering, AI, graphics, and compute performance. Connect two RTX A6000s with NVIDIA NVLink™ for 96 GB of combined GPU memory, and access the power of your workstation from anywhere with remote-access software. NVIDIA positions it for engineering unique products, designing state-of-the-art buildings, driving scientific breakthroughs, and creating immersive entertainment, calling it the world's most powerful graphics solution.
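Memory is often the deciding factor, so a rough estimate of how large a model fits in 48 GB can help. This hypothetical helper assumes about 16 bytes per parameter for training (FP32 weights, gradients, and two Adam optimizer states) and reserves a guessed 25% of memory for activations and overhead; actual usage depends heavily on batch size, precision, and framework. The 96 GB line additionally assumes the model is split across both NVLink-connected cards.

```python
GIB = 2 ** 30  # memory sizes below are treated as GiB


def max_trainable_params(vram_gib, bytes_per_param=16, activation_reserve=0.25):
    """Rough upper bound on model size that fits in GPU memory for training.

    Assumptions (not measurements): bytes_per_param=16 covers FP32 weights
    (4 B) + gradients (4 B) + two Adam optimizer states (8 B), and
    activation_reserve is the fraction of memory kept back for activations
    and framework overhead.
    """
    usable = vram_gib * GIB * (1.0 - activation_reserve)
    return int(usable // bytes_per_param)


for label, vram in [("RTX A4500 (20 GB)", 20),
                    ("RTX A6000 (48 GB)", 48),
                    ("2x RTX A6000 via NVLink (96 GB)", 96)]:
    print(f"{label}: ~{max_trainable_params(vram) / 1e9:.1f}B parameters")
```

Under these assumptions the script prints roughly 1.0B, 2.4B, and 4.8B parameters, which shows why the larger memory pool matters more than raw core count once models grow.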

The best part is that it is relatively affordable compared with other high-end GPUs available today.

2. NVIDIA RTX A4500

This is our number one pick for anyone trying to take a serious stab at building or upgrading a deep learning workstation. Check out our benchmark review of the NVIDIA RTX A4500 and see why we are such big fans.

It was built with deep learning in mind and, to put it simply, shines as a premier choice of GPU for deep learning.

The NVIDIA RTX A4500 delivers the power, performance, capabilities, and reliability professionals need to do more. Powered by the latest generation of NVIDIA RTX technology, combined with 20GB of ultra-fast GPU memory, the A4500 provides amazing performance with your favorite applications and the capability to work with larger models, renders, datasets, and scenes with higher fidelity and greater interactivity, taking your work to the next level.

The NVIDIA RTX A4500 includes 56 RT Cores to accelerate photorealistic ray-traced rendering up to 2x faster than the previous generation. Hardware-accelerated Motion BVH (bounding volume hierarchy) improves motion blur rendering performance by up to 10x compared to the previous generation.

With 224 Tensor Cores to accelerate AI workflows, the RTX A4500 provides the compute power necessary for AI development and training workloads, as well as inferencing deployments.

The NVIDIA RTX A4500 comes with a hefty price tag, but those seriously interested in deep learning workstations should carefully consider the costs and benefits of a GPU like this.

3. NVIDIA DGX Station

Our last recommendation falls outside the realm of a typical small-scale workstation: the NVIDIA DGX Station. The DGX Station A100 packs four NVIDIA A100 GPUs into a deskside system, while its data center sibling, the DGX A100, is built on eight A100 GPUs. NVIDIA describes the DGX A100 as the universal system for all AI workloads, offering unprecedented compute density, performance, and flexibility in the world’s first 5 petaFLOPS AI system. Featuring the NVIDIA A100 Tensor Core GPU, DGX A100 enables enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI infrastructure that includes direct access to NVIDIA AI experts.

It's positioned as an all-in-one AI solution to handle any size workload.

Ready to Figure Out How Many GPUs You Need for a Deep Learning Workstation?

It is always best to consult with deep learning rig experts before deciding which GPU would best handle your workloads and how many you might need for a deep learning workstation (or server). Let us know how we can help!

Published at DZone with permission of Kevin Vu.

Opinions expressed by DZone contributors are their own.
