How I Found a Go Issue on ARM that Crashed the Database Server

A distributed database like TiDB is a very complex project. Its performance depends on multiple factors. I will share a peculiar Go bug that caused TiDB to crash on ARM.

Join the DZone community and get the full member experience.

Join For FreeTiDB is an open-source, MySQL-compatible, distributed SQL database. Its architecture adopts a layered design. The storage layer is implemented in Rust, while the computing layer is implemented in Go. A distributed database like TiDB is a very complex project. Its performance depends on multiple factors, such as the operating system and the hardware platform it is running on, and the compiling language it uses.

In this article, I will share a peculiar Go bug that caused TiDB to crash on the Advanced RISC Machine (ARM) platform.

What Was the Problem and How Did It Come to My Attention?

TiDB is tested heavily to ensure that the program is correct and reliable and capture any performance regressions. For new releases, we conduct extra performance and stability tests.

We first observed this Go issue in one of these tests for TiDB 5.4. During the test, a QA engineer reported that the database was stuck and unresponsive. We ran the test again, but the issue didn’t recur, so we did not give enough attention at the time.

Later, during the release test of TiDB 6.0, we ran into the same issue. This time we could reproduce it stably. We also observed that the reproduction took a long time with the ARM platform. Sometimes it’s 6-8 hours, and sometimes longer; but it’s usually within 24 hours.

What Does the Issue Look Like?

When this issue occurred, the tidb-server process no longer responded to requests. However, the process did not panic, and the service ports were still on.

As shown on the monitoring interface below, there was something wrong with the tidb-4-peer node. The process was alive. However, since it did not respond to requests, there was no data logged for it.

TiDB Monitoring on Grafana

After logging in to the machine, I observed that the CPU usage of the tidb-server process was 100%. On a multi-core machine, it occupies exactly one core.

Go Issue Verified

I wanted to know what the current process was doing by checking the goroutine stack:

curl http://127.0.0.1:10080/debug/pprof/goroutine?debug=2 > goroutine.txtHowever, since port 10080 could not respond to the request, this operation got stuck and could not obtain the corresponding goroutine stack information.

The CPU usage was exactly 100% of one core, so I figured that there might be a dead loop somewhere. The reproduction rans in our own test environment. This allowed us to use Delve (dlv), a Go debugging tool, to debug the TiDB process.

dlv attach 4637As shown in the debug log below, only one thread was active, while all others were in the futex state. Essently, they were locked.

(dlv) threads

* Thread 4637 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4639 at 0x132f504 /usr/local/go1.18/src/runtime/sys_linux_arm64.s:150 runtime.usleep

Thread 4640 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4641 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4642 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4643 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4644 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4645 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4646 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4647 at 0x132fb0c /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596 runtime.futex

Thread 4649 at 0x1320fa8 /usr/local/go1.18/src/runtime/symtab.go:1100 runtime.gentraceback

The call stack of the thread in the futex state was basically in the runtime.stopm function. As shown in the source code snippet below, the call would do a stop-the-world operation for garbage collection (GC), in which the execution of the program was suspended until all objects in the heap were processed. This operation needed to acquire the lock but failed, so the operation hung.

(dlv) bt

0 0x000000000132fb0c in runtime.futex

at /usr/local/go1.18/src/runtime/sys_linux_arm64.s:596

1 0x00000000012f6bbc in runtime.futexsleep

at /usr/local/go1.18/src/runtime/os_linux.go:66

2 0x00000000012cfff4 in runtime.notesleep

at /usr/local/go1.18/src/runtime/lock_futex.go:159

3 0x00000000013017e8 in runtime.mPark

at /usr/local/go1.18/src/runtime/proc.go:1449

4 0x00000000013017e8 in runtime.stopm

at /usr/local/go1.18/src/runtime/proc.go:2228

5 0x0000000001305e04 in runtime.exitsyscall0

at /usr/local/go1.18/src/runtime/proc.go:3937

6 0x000000000132c0f4 in runtime.mcall

at /usr/local/go1.18/src/runtime/asm_arm64.s:186Then, I switched to the active thread and found the gentraceback function at the bottom of the call stack.

dlv) tr 4649

Switched from 4637 to 4649

(dlv) bt

0 0x0000000001320fa8 in runtime.funcdata

at /usr/local/go1.18/src/runtime/symtab.go:1100

1 0x0000000001320fa8 in runtime.gentraceback

at /usr/local/go1.18/src/runtime/traceback.go:357

2 0x0000000001cbcfdc in github.com/tikv/client-go/v2/txnkv/transaction.(*batchExecutor).process

at :01

3 0x0000000001cb1ee8 in github.com/tikv/client-go/v2/txnkv/transaction.(*twoPhaseCommitter).doActionOnBatches

at /root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220321123529-f4eae62b7ed5/txnkv/transaction/2pc.go:1003

4 0x0000000001cb125c in github.com/tikv/client-go/v2/txnkv/transaction.(*twoPhaseCommitter).doActionOnGroupMutations

at /root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220321123529-f4eae62b7ed5/txnkv/transaction/2pc.go:963

5 0x0000000001caf588 in github.com/tikv/client-go/v2/txnkv/transaction.(*twoPhaseCommitter).doActionOnMutations

at /root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220321123529-f4eae62b7ed5/txnkv/transaction/2pc.go:740

6 0x0000000001cbf758 in github.com/tikv/client-go/v2/txnkv/transaction.(*twoPhaseCommitter).commitMutations

at /root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220321123529-f4eae62b7ed5/txnkv/transaction/commit.go:213

7 0x0000000001cb6f9c in github.com/tikv/client-go/v2/txnkv/transaction.(*twoPhaseCommitter).execute.func2

at /root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220321123529-f4eae62b7ed5/txnkv/transaction/2pc.go:1585

8 0x000000000132e844 in runtime.goexit

at /usr/local/go1.18/src/runtime/asm_arm64.s:1259I ran the next command several times in dlv and found that it was consistently stuck in the gentraceback code block. Actually, the same line repeated multiple times in the corresponding block in Go 1.18 with the same printed parameters. This indicated a dead loop in this code segment.

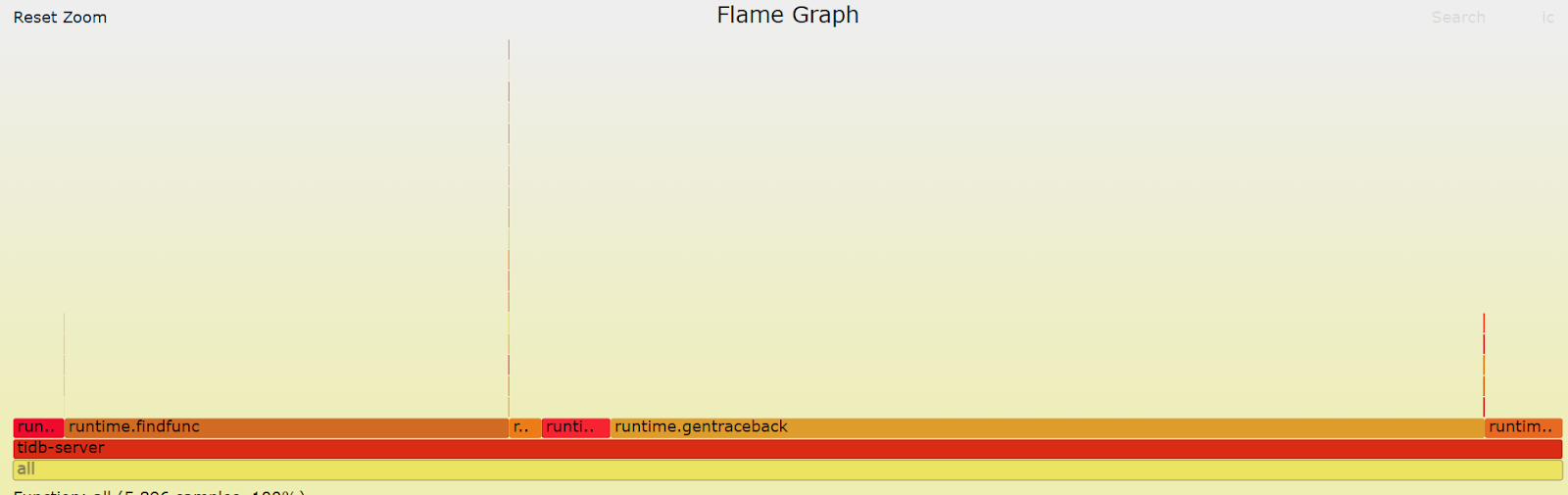

The flame graph of the process also indicated that the execution time was mostly spent in the gentraceback and findfunc functions.

Flame graph of the process

After further analysis, I suspected that we had triggered a Go runtime bug. One of the threads entered into a dead loop in the gentraceback function, which required a global lock. Other threads eventually were executed in the runtime GC-related code, but got stuck while waiting for the lock. In the end, the entire process burned out the CPU on one thread, while all other threads were waiting for the lock.

After locating the dead loop, I tried to modify the Go code to avoid the occurrence by checking its exit condition. As expected, by compiling TiDB with the modified Go code and bypassing the dead loop, our database worked normally. However, we still needed an official fix. We wanted the Go team to address the root cause of the bug, instead of us using a workaround.

Filing an Issue to the Go Team

A colleague of mine reviewed Go’s issue list to see if this was a known bug. We found a similar issue, but it had been fixed in Go 1.16 and Go 1.17. Our issue can be triggered in Go 1.18, so it is new for Go. I submitted the issue on the Go repository. After several rounds of issue reproduction and communications, @cherrymui from the Go community found the root cause and provided a verifiable fix.

Conclusion and Follow-Up Work

The story doesn’t end when we discover a bug and open an issue. As I mentioned earlier, a database is a very complex project. The underlying OS, compiler, hardware may all have bugs that affect its stability and performance.

This Go bug has certain peculiarities. It does not have a stable trigger on TiDB. We also found that different hardware systems with varied TiDB versions might have different levels of difficulties to trigger it. After rounds of testing, we were able to stably reproduce it on the ARM platform.

We have followed the progress of the issue and other people have encountered this problem later. The Go team will backport corresponding fixes in the subsequent versions of 1.18. On the TiDB side, we decided to release the TiDB 5.4.14 binary for the ARM platform based on the patched Go, and the updated version of 6.x based on the officially fixed Go version.

If you are a Go developer who is interested in distributed systems, TiDB could be a great project to start with.

Published at DZone with permission of Arthur Mao. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments