How Checksum Smartly Manages Data Integrity in HDFS

In short, data integrity can be defined as an assurance of the accuracy and consistency of data throughout its entire life cycle.


Ensuring data integrity is a basic necessity, the backbone of any big data processing environment that is expected to produce accurate outcomes. The same applies to any data-moving operation that applications run against traditional data storage systems (RDBMS, document repositories, etc.). Data moves over networks, in device-to-device transfers, through ETL processes, and more. In short, data integrity can be defined as an assurance of the accuracy and consistency of data throughout its entire life cycle.

In a big data processing environment, data at rest is persisted in a distributed manner because of its huge volume, so guaranteeing data integrity on top of it is challenging. The Hadoop Distributed File System (HDFS) is built to store any type of data in a distributed manner as data blocks (it breaks a huge volume of data into a set of individual blocks) while committing to data integrity. Data blocks in HDFS can become corrupt for several reasons, including faulty I/O operations on the system disk and network failures.

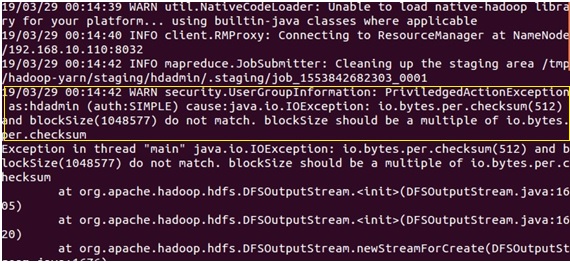

Internally, HDFS relies on checksums for data integrity. A checksum is a small datum derived from a block of digital data for the purpose of detecting errors. HDFS computes checksums for the data in each block and stores them in a separate hidden file alongside the data. By default, one checksum is computed for every 512 bytes of data (the dfs.bytes-per-checksum property). HDFS uses a 32-bit Cyclic Redundancy Check (CRC32) as the default checksum algorithm (recent Hadoop releases default to the CRC32C variant); because each checksum is only 4 bytes long, the storage overhead is less than 1% (4 / 512 ≈ 0.78%). HDFS verifies the checksums again when a client reads data from the data nodes. If the recomputed value does not match the stored one, a ChecksumException is thrown to the client while it retrieves the data for processing.
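The per-chunk scheme described above can be sketched in a few lines of Python. This is only an illustration, not HDFS code: it assumes the 512-byte default is the number of data bytes covered by each 4-byte checksum, it uses the standard CRC-32 from Python's zlib module, and the names compute_checksums, verify, storage_overhead, and ChecksumError are hypothetical.

```python
import zlib
from typing import List

BYTES_PER_CHECKSUM = 512  # assumed meaning of the 512-byte default
CHECKSUM_SIZE = 4         # a CRC32 value is 4 bytes long


class ChecksumError(Exception):
    """Hypothetical stand-in for the exception a client sees on a mismatch."""


def compute_checksums(data: bytes, chunk_size: int = BYTES_PER_CHECKSUM) -> List[int]:
    """Return one CRC32 value per chunk_size bytes of data."""
    return [
        zlib.crc32(data[offset:offset + chunk_size])
        for offset in range(0, len(data), chunk_size)
    ]


def verify(data: bytes, stored: List[int], chunk_size: int = BYTES_PER_CHECKSUM) -> None:
    """Recompute the checksums and raise ChecksumError on the first mismatch."""
    actual = compute_checksums(data, chunk_size)
    if len(actual) != len(stored):
        raise ChecksumError("number of chunks does not match the stored checksums")
    for index, (expected, got) in enumerate(zip(stored, actual)):
        if expected != got:
            raise ChecksumError(
                f"chunk {index}: expected {expected:#010x}, got {got:#010x}"
            )


def storage_overhead(chunk_size: int = BYTES_PER_CHECKSUM,
                     checksum_size: int = CHECKSUM_SIZE) -> float:
    """Fraction of extra storage spent on checksums."""
    return checksum_size / chunk_size


if __name__ == "__main__":
    block = bytes(range(256)) * 8          # 2048 bytes, i.e. four 512-byte chunks
    checksums = compute_checksums(block)   # computed at write time
    verify(block, checksums)               # a clean read passes silently

    damaged = bytearray(block)
    damaged[1000] ^= 0x01                  # flip one bit inside the second chunk
    try:
        verify(bytes(damaged), checksums)
    except ChecksumError as err:
        print("corruption detected:", err)

    print(f"overhead: {storage_overhead():.2%}")
```

Checksumming small chunks rather than a whole block keeps the cost of each check low and narrows a detected error down to a 512-byte range, while the 4-byte checksum per chunk keeps the overhead under 1%.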

When data nodes in a multi-node cluster receive data blocks, they too compute and store the checksum before writing the data to disk. That stored value is what the recomputed checksum is compared against when a client reads the data. In addition, every data node maintains a persistent log of checksum verifications to keep track of when each data block was last verified. Checksum verification can be disabled programmatically when submitting a job to the cluster, for example through FileSystem.setVerifyChecksum(false) in the Java API. With Apache Hadoop 3.1, the checksum of a file stored in HDFS can also be compared with that of a locally stored file; see https://issues.apache.org/jira/browse/HDFS-13056 for details.
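The write, read, and verification-log behavior of a data node can be modeled with a small in-memory toy. Everything here is hypothetical and greatly simplified: ToyDataNode, its methods, and the single CRC32 per block are assumptions made for illustration, not the way HDFS is implemented.

```python
import time
import zlib
from typing import Dict, Tuple


class ToyDataNode:
    """Hypothetical in-memory model of a data node; not the HDFS implementation."""

    def __init__(self) -> None:
        # block id -> (data, checksum stored at write time)
        self._blocks: Dict[str, Tuple[bytes, int]] = {}
        # block id -> timestamp of the last successful verification
        self.verification_log: Dict[str, float] = {}

    def write_block(self, block_id: str, data: bytes) -> None:
        """Compute the checksum and store it together with the data."""
        self._blocks[block_id] = (data, zlib.crc32(data))

    def read_block(self, block_id: str, verify_checksum: bool = True) -> bytes:
        """Return the data, comparing it with the stored checksum unless disabled."""
        data, stored = self._blocks[block_id]
        if verify_checksum:
            if zlib.crc32(data) != stored:
                raise IOError(f"checksum mismatch in block {block_id}")
            self.verification_log[block_id] = time.time()
        return data

    def corrupt(self, block_id: str) -> None:
        """Simulate disk corruption by inverting the first byte of the block."""
        data, stored = self._blocks[block_id]
        self._blocks[block_id] = (bytes([data[0] ^ 0xFF]) + data[1:], stored)


if __name__ == "__main__":
    node = ToyDataNode()
    node.write_block("blk_1", b"hello hdfs")
    node.read_block("blk_1")               # verified; the log is updated
    print("last verified at:", node.verification_log["blk_1"])

    node.corrupt("blk_1")
    try:
        node.read_block("blk_1")
    except IOError as err:
        print("read failed:", err)

    # With verification disabled, the damaged bytes are returned as-is.
    print(node.read_block("blk_1", verify_checksum=False))
```

Skipping verification returns corrupted data without any warning, so it is best reserved for cases such as salvaging what remains of a damaged file.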

Published at DZone with permission of Gautam Goswami, DZone MVB. See the original article here.

