How BERT Enhances the Features of NLP

BERT has enhanced NLP by helping machines understand the context of human language using its bidirectional approach. This blog explores how it achieves this.

Join the DZone community and get the full member experience.

Join For FreeLarge language models have played a catalytic role in how human language is comprehended and processed. NLP has bridged the communication gap between humans and machines, leading to seamless customer experiences.

NLP is great for interpreting simple languages with straightforward intent. But it still has a long way to go when it comes to interpreting ambiguity in text arising from homonyms, synonyms, irony, sarcasm, and more.

Bidirectional Encoder Representations from Transformers (BERT) play a key role in enhancing NLP by helping it comprehend the context and meaning of every word in a given sentence.

Let's read the blog below to understand the workings of BERT and its invaluable role in NLP.

Understanding BERT

BERT is an open-sourced machine learning framework developed by Google for NLP. It enhances NLP by providing proper context to a given text by using a bidirectional approach. It analyzes the text from both ends, i.e., the words preceding and following.

BERT's two-way approach helps it produce precise word representations. By producing deep contextual representations of words, phrases, and sentences, BERT enhances NLP's performance in various tasks.

Working of BERT

BERT's exceptional performance can be attributed to its transformer-based architecture. Transformers are neural network models that perform exceedingly well in tasks dealing with sequential data like language processing.

BERT employs a multi-layer bi-directional transformer encoder for processing words in tandem. The transformer captures complicated relationships in a sentence to understand the nuances of the language.

It also catches minor differences in meaning, which can significantly alter the context of a sentence. This is in contrast to NLP which utilizes a unidirectional approach for predicting the next word in a sequence, hindering contextual learning.

BERT's main aim is to produce a language model, so it only utilizes an encoder mechanism. Tokens are fed sequentially into the transformer encoder. They are initially embedded into vectors and then processed in the neural network.

A series of vectors are produced, each corresponding to an input token, offering contextualized representations. BERT deploys two training strategies to address this issue: Masked Language Model (MLM) and Next Sentence Prediction (NSP).

Masked Language Model (MLM)

This approach involves masking certain portions of the words in each input sequence. The model receives training to predict the original value of the masked words based on the context provided by the surrounding words.

To understand this better, let's look at the example below:

"I am drinking soda."

In the sentence above, what other apt words can be used if the word 'drinking' is removed? The other words that can be used are 'sipping, sharing, slurping, etc.,' but random words like 'eating, chopping' cannot be used.

Hence, the model must understand the language structure to select the right word. The model is provided with model inputs with a word blanked or <MASK> along with the word that should be there. This process creates data by obtaining a text and running over it.

Next Sentence Prediction (NSP)

This process trains a model to determine whether the second sentence complies with the first sentence. BERT predicts whether the second sentence is connected to the first sentence. This is achieved by transforming the output of the [CLS] token into a 2 x 1 shaped vector using a classification layer.)

The probability of the second sentence following the first sentence is calculated through SoftMax. In essence, the BERT model involves training both the above methods together. This results in a robust language model with enhanced features for comprehending context within sentences and the relationships between them.

Role of BERT in NLP

BERT plays an indispensable role in NLP. Its role in diverse NLP tasks are outlined below:

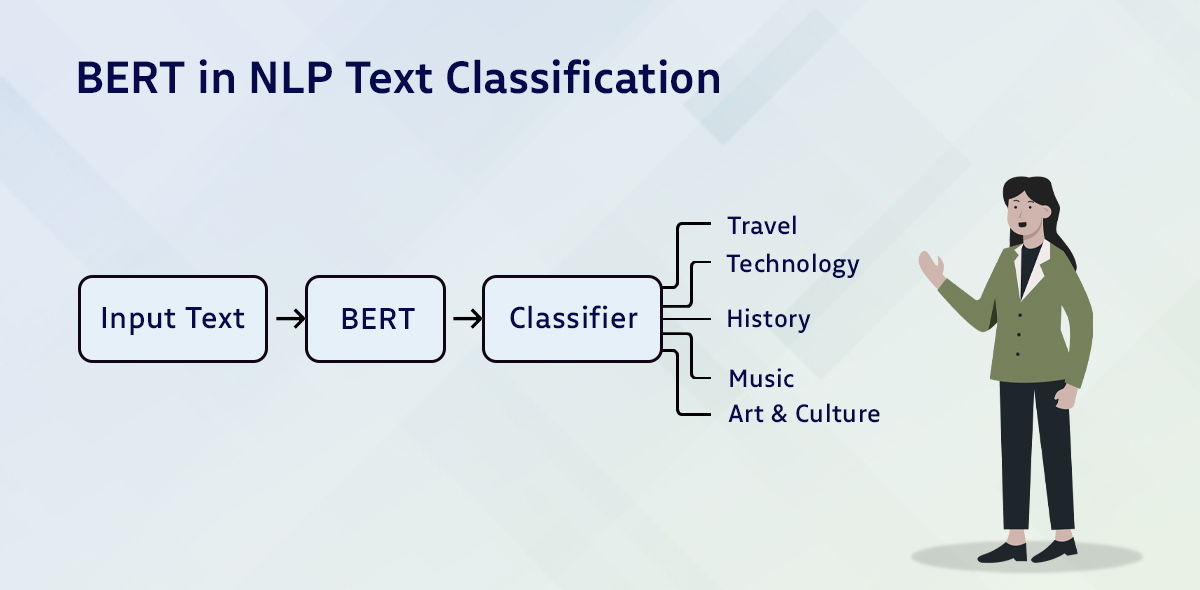

Text Classification

It is used in sentiment analysis to classify text into positive, negative, and neutral. BERT can be used here by adding a classification layer atop the transformer output, i.e., the [CLS] token. This token represents the collected information from the overall input sequence. It can then be used as an input for the classification layer to predict a particular task.

Question Answering

It can be trained to answer questions by acquiring knowledge about two extra vectors that mark the start and end of the answer. BERT is trained with questions and accompanying passages, enabling it to predict the starting and ending positions of the answer in a given passage.

Named Entity Recognition (NER)

NER uses text sequences to identify and classify entities such as person, company, date, etc. The NER model is trained by obtaining the output vector of each token from the transformer, which is then fed into the classification layer.

Language Translation

This is used to translate languages. Key language inputs and associated translated outputs can be used to train the model.

Google Smart Search

BERT utilizes a transformer to research several tokens and sentences simultaneously with self-interest, enabling Google to recognize the goal of seeking text and producing relevant results.

Text Summarization

BERT promotes a popular framework for extractive and abstractive models. The former creates a summary by identifying the most important sentences in a document. In contrast, the latter creates a summary using novel sentences, which involves rephrasing or using new words rather than just extracting key sentences.

Pros and Cons of the BERT NLP Model

As a large language model, BERT has its pros and cons. For clarity, please refer to the points below:

Pros

- Training of BERT in multiple languages makes it great for projects outside of English.

- It is an excellent choice for task-specific models.

- As BERT is trained with a large corpus of data, it becomes easy to use for small, defined tasks.

- BERT can be used promptly after fine-tuning.

- It is highly accurate owing to frequent updates

Cons

- BERT is enormous as it receives training on a large pool of data, which impacts how it predicts and learns from the data.

- It is expensive and requires much more computation, given its size.

- BERT is fine-tuned for downstream tasks, which tend to be fussy.

- It is time-consuming to train BERT as it has a lot of weight, which requires updating.

- BERT is prone to biases in training data, which one needs to be aware of and strive to overcome to create inclusive and ethical AI systems.

Future Trends in the BERT NLP Model

Research is underway to address challenges in NLP, like robustness, interpretability, and ethical considerations. Advances in zero-shot learning, few-shot learning, and commonsense reasoning will be used to develop intelligent learning models.

These are expected to drive innovation in NLP and pave the way for developing far more intelligent language models. These versions will help in unlocking insights and enhance decision-making in specialized domains.

Integrating BERT with AI and ML technologies like computer vision and reinforcement learning is bound to bring in a new era of innovation and possibilities.

Conclusion

Advanced NLP models and techniques are emerging as BERT continues to evolve. BERT will make the future of AI exciting by enhancing its capability to comprehend and process language. The potential of BERT extends further than mundane NLP tasks.

Specialized or domain-specific model versions are being developed for healthcare, finance, and law. BERT model has enabled a contextual understanding of languages. It symbolizes a significant milestone in NLP with breakthroughs in diverse tasks like text classification, named entity recognition, and sentiment analysis.

Opinions expressed by DZone contributors are their own.

Comments