Go Microservices, Part 7: Service Discovery and Load Balancing

Continue on your microservice development journey by learning how to set up service discovery and load balancing in your new service.


This part of the blog series deals with two fundamental pieces of a sound microservice architecture, service discovery and load balancing, and how they facilitate the kind of horizontal scaling we usually state as an important non-functional requirement in 2017.

Introduction

While load balancing is a rather well-known concept, I think service discovery warrants a more in-depth explanation. I'll start with a question:

"How does service A talk to service B without having any knowledge about where to find service B?"

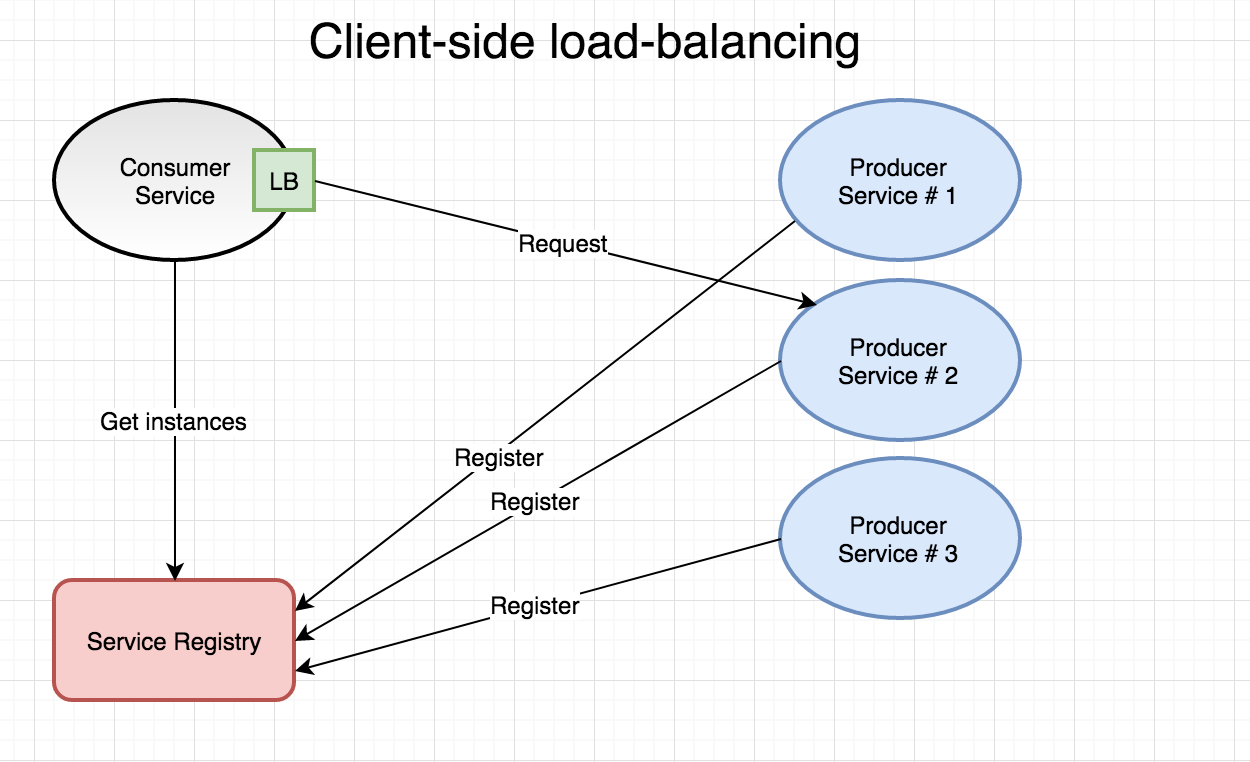

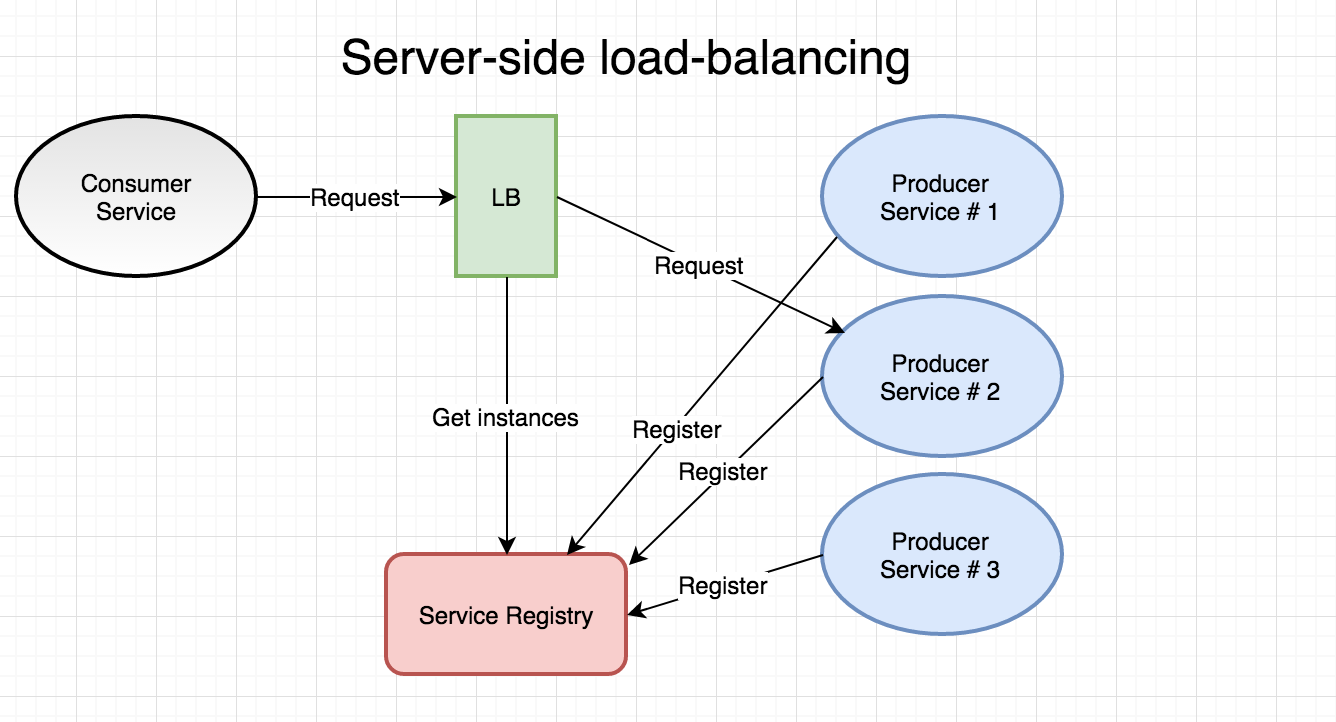

In other words: if we have 10 instances of service B running on an arbitrary number of cluster nodes, someone needs to keep track of all 10 instances. So when service A needs to communicate with service B, either at least one proper IP address or hostname for an instance of service B must be made available to service A (client-side load balancing), or service A must be able to delegate the address resolution and routing to a third party given a known logical name of service B (server-side load balancing). In the continuously changing context of a microservice landscape, either approach requires service discovery to be present. In its simplest form, service discovery is just a registry of running instances for one or many services.
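To make "a registry of running instances" concrete, here is a minimal in-memory sketch. The Registry type and its Register/Deregister/Instances methods are hypothetical names chosen for illustration, not the API of any discovery product. A real registry would also need replication and failure detection.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Registry is a hypothetical, minimal service registry: it maps a logical
// service name to the set of addresses ("ip:port") of its running instances.
type Registry struct {
	mu        sync.RWMutex
	instances map[string]map[string]struct{}
}

func NewRegistry() *Registry {
	return &Registry{instances: make(map[string]map[string]struct{})}
}

// Register records that an instance of the named service is running at addr.
func (r *Registry) Register(service, addr string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	if r.instances[service] == nil {
		r.instances[service] = make(map[string]struct{})
	}
	r.instances[service][addr] = struct{}{}
}

// Deregister removes an instance, e.g. when it shuts down.
// Deleting from a nil map is a no-op, so unknown services are fine.
func (r *Registry) Deregister(service, addr string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	delete(r.instances[service], addr)
}

// Instances returns the known addresses of a service, sorted for stable output.
func (r *Registry) Instances(service string) []string {
	r.mu.RLock()
	defer r.mu.RUnlock()
	addrs := make([]string, 0, len(r.instances[service]))
	for a := range r.instances[service] {
		addrs = append(addrs, a)
	}
	sort.Strings(addrs)
	return addrs
}

func main() {
	reg := NewRegistry()
	reg.Register("serviceb", "10.0.0.5:6767")
	reg.Register("serviceb", "10.0.0.6:6767")
	reg.Deregister("serviceb", "10.0.0.5:6767")
	fmt.Println(reg.Instances("serviceb")) // [10.0.0.6:6767]
}
```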

If this sounds a lot like a DNS service to you, it kind of is. The difference is that service discovery is for use within your internal cluster so your microservices can find each other, while DNS is typically used for more static and external routing so external parties can have requests routed to your service(s). Also, DNS servers and the DNS protocol are typically not well suited to the volatile nature of microservice environments: ever-changing topology with containers and nodes coming and going, clients often not honoring TTL values, failure detection, etc.

Most microservice frameworks provide one or several options for service discovery. By default, Spring Cloud/Netflix OSS uses Netflix Eureka (it also supports Consul, etcd and ZooKeeper), where services register themselves with a known Eureka instance and then intermittently send heartbeats to make sure the Eureka instance(s) know they're still alive. An option (written in Go) that's becoming more popular is Consul, which provides a rich feature set including integrated DNS. Other popular options are distributed and replicable key-value stores such as etcd, where services can register themselves. Apache ZooKeeper should also be mentioned in this crowd.

In this blog post, we'll primarily deal with the mechanisms offered by "Docker Swarm" (i.e. Docker in swarm mode). We'll showcase the service abstraction we explored in part 5 of the blog series and how it actually provides us with both service discovery and server-side load balancing. Additionally, since we'll be doing service-to-service communication, we'll take a look at mocking outgoing HTTP requests in our unit tests using gock.

Note: when referring to "Docker Swarm" in this blog series, I am referring to running Docker 1.12 or later in swarm mode. "Docker Swarm" as a standalone concept was discontinued with the release of Docker 1.12.

Two types of load balancers

In the realm of microservices, one usually differentiates between the two types of load balancing mentioned above:

- Client-side: It's up to the client to query a discovery service to get actual address information (IPs, hostnames, ports) for the services it needs to call. It then picks one of those addresses using a load-balancing strategy such as round-robin or random. To avoid querying the discovery service for each upcoming invocation, each client typically keeps a local cache of endpoints, which has to be kept in reasonable sync with the master info from the discovery service. An example of a client-side load balancer in the Spring Cloud ecosystem is Netflix Ribbon. Something similar, backed by etcd, exists in the go-kit ecosystem. Some advantages of client-side load balancing are resilience, decentralization and no central bottlenecks, since each service consumer keeps its own registry of producer endpoints. Some drawbacks are higher internal service complexity and the risk of local registries containing stale entries.

- Server-side: In this model, the client relies on the load balancer to look up a suitable instance of the service it wants to call, given a logical name for the target service. This mode of operation is often referred to as "proxy", since it functions both as a load balancer and a reverse proxy. I'd say the main advantage here is simplicity. The load balancer and service discovery mechanism are typically built into your container orchestrator, and you don't have to care about installing or managing those components. Also, the client (i.e. our service) doesn't have to be aware of the service registry, because the load balancer takes care of that for us. Being reliant on the load balancer to route all calls arguably decreases resilience, and the load balancer could theoretically become a performance bottleneck.
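In its simplest form, a client-side load balancer is a locally cached endpoint list plus a selection strategy. The sketch below uses round-robin. The RoundRobin type and the idea of refreshing it through an Update call are illustrative assumptions; this is not the API of Ribbon or go-kit.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// RoundRobin is a hypothetical client-side balancer. It holds a local cache of
// endpoints (as obtained from a discovery service) and cycles through them.
type RoundRobin struct {
	mu        sync.Mutex
	endpoints []string
	next      int
}

// Update replaces the cached endpoint list, e.g. after polling the registry.
func (rr *RoundRobin) Update(endpoints []string) {
	rr.mu.Lock()
	defer rr.mu.Unlock()
	rr.endpoints = append([]string(nil), endpoints...)
	rr.next = 0
}

// Pick returns the next endpoint in round-robin order.
func (rr *RoundRobin) Pick() (string, error) {
	rr.mu.Lock()
	defer rr.mu.Unlock()
	if len(rr.endpoints) == 0 {
		return "", errors.New("no endpoints available")
	}
	ep := rr.endpoints[rr.next]
	rr.next = (rr.next + 1) % len(rr.endpoints)
	return ep, nil
}

func main() {
	rr := &RoundRobin{}
	rr.Update([]string{"10.0.0.18:6767", "10.0.0.22:6767", "10.255.0.5:6767"})
	for i := 0; i < 4; i++ {
		ep, _ := rr.Pick()
		fmt.Println(ep)
	}
	// The fourth call wraps around to 10.0.0.18:6767 again.
}
```

The stale-entry drawback mentioned above shows up here directly: between two Update calls, Pick can hand out an address whose instance has already gone away.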

Note that when we use the service abstraction of Docker in swarm mode, the actual registration of producer services in the server-side model above is totally transparent to you as a developer. Our producer services aren't even aware that they are operating in a server-side load-balanced context, or even in the context of a container orchestrator. Docker in swarm mode takes care of the full registration/heartbeat/deregistration for us.

In the example domain we've been working with since part 2 of the blog series, we might want to ask our accountservice to fetch a random quote-of-the-day from the quotes-service. In this blog post, we'll concentrate on using Docker swarm mechanics for service discovery and load balancing. If you're interested in how to integrate a Go-based microservice with Eureka, I wrote a blog post covering that subject in 2016. I've also authored a simplistic and opinionated client-side library for integrating Go apps with Eureka, including basic lifecycle management.

Consuming service information

Let's say you want to build a custom-made monitoring application and need to query the /health endpoint of every instance of every deployed service. How would your monitoring app know which IPs and ports to query? You need to get hold of the actual service discovery details. If you're using Docker swarm as your service discovery and load-balancing provider, Docker swarm is the one keeping that information, so how would you get hold of the IP address of each instance? With a client-side solution such as Eureka, you'd just consume the information using its API. However, when relying on the orchestrator's service discovery mechanisms, this may not be as straightforward. I'd say there's one primary option to pursue, plus a few secondary options one could consider for more specific use cases.

Docker Remote API

Primarily, I would recommend using the Docker Remote API, i.e. using the Docker APIs from within your services to query the swarm manager for service and instance information. After all, if you're using your container orchestrator's built-in service discovery mechanism, that's the source you should be querying. If portability is an issue, one can always write an adapter for your orchestrator of choice. However, using the orchestrator's API has some caveats too. It ties your solution closely to a specific container API, and you'd have to make sure your application can talk to the Docker manager(s). Your services would then be aware of a bit more of the context they're running in, and using the Docker Remote API does increase service complexity somewhat.
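Here is a rough sketch of querying the orchestrator without any third-party SDK. The code talks to the Docker Engine API over the local Unix socket and extracts task IP addresses from the GET /tasks response. Several assumptions are worth flagging:

- It must run on, or be able to reach, a swarm manager.
- The socket path /var/run/docker.sock must be available (e.g. mounted into the container).
- The JSON field names used here (Status.State, NetworksAttachments, Addresses) reflect my understanding of the Engine API's Task object. Check them against the API version you run.

The official Go client, github.com/docker/docker/client, is the more robust option.

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"io"
	"net"
	"net/http"
	"net/url"
)

// task models only the parts of a swarm task we need (assumed field names).
type task struct {
	Status struct {
		State string `json:"State"`
	} `json:"Status"`
	NetworksAttachments []struct {
		Addresses []string `json:"Addresses"` // CIDR notation, e.g. "10.0.0.5/24"
	} `json:"NetworksAttachments"`
}

// runningTaskIPs parses a /tasks response body and returns the IPs of running
// tasks. A task attached to several networks contributes one IP per network.
func runningTaskIPs(body []byte) ([]string, error) {
	var tasks []task
	if err := json.Unmarshal(body, &tasks); err != nil {
		return nil, err
	}
	var ips []string
	for _, t := range tasks {
		if t.Status.State != "running" {
			continue
		}
		for _, na := range t.NetworksAttachments {
			for _, cidr := range na.Addresses {
				if ip, _, err := net.ParseCIDR(cidr); err == nil {
					ips = append(ips, ip.String())
				}
			}
		}
	}
	return ips, nil
}

// dockerClient returns an HTTP client that always dials the Docker daemon's Unix socket.
func dockerClient() *http.Client {
	return &http.Client{Transport: &http.Transport{
		DialContext: func(ctx context.Context, _, _ string) (net.Conn, error) {
			var d net.Dialer
			return d.DialContext(ctx, "unix", "/var/run/docker.sock")
		},
	}}
}

func main() {
	filters := url.QueryEscape(`{"service":["accountservice"]}`)
	// The host part ("docker") is ignored because the transport dials the socket.
	resp, err := dockerClient().Get("http://docker/tasks?filters=" + filters)
	if err != nil {
		fmt.Println("docker API not reachable:", err)
		return
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		fmt.Println("docker API returned", resp.Status)
		return
	}
	body, _ := io.ReadAll(resp.Body)
	ips, err := runningTaskIPs(body)
	fmt.Println(ips, err)
}
```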

Alternatives

- Use an additional, separate service discovery mechanism. That is, run Netflix Eureka, Consul or similar, and make sure microservices that want to be discoverable register/deregister themselves there in addition to the Docker swarm mode mechanics. Then just use the discovery service's API for registering, querying, heartbeating, etc. I dislike this option, as it introduces complexity into services when Docker in swarm mode can handle so much of this for us more or less transparently. I almost consider this option an anti-pattern, so don't do this unless you really have to.

- Application-specific discovery tokens. In this approach, services that want to broadcast their existence periodically post a "discovery token" with IP, service name, etc. on a message topic. Consumers that need to know about instances and their IPs can subscribe to the topic and keep their own registry of service instances up to date. When we look at Netflix Turbine without Eureka in a later blog post, we'll use this mechanism to feed information to a custom Turbine discovery plugin I've created. It lets Hystrix stream producers register themselves with Turbine using discovery tokens. This approach is a bit different, as it doesn't really have to leverage the full service registry: in this particular use case, we only care about a specific set of services.
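A discovery-token consumer can be sketched as follows. A Go channel stands in for the message topic; in practice, this would be a subscription on a message broker. The DiscoveryToken fields, their JSON keys and the "UP"/"DOWN" states are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// DiscoveryToken is a hypothetical message that a service instance
// periodically publishes to announce itself.
type DiscoveryToken struct {
	Service string `json:"service"`
	IP      string `json:"ip"`
	State   string `json:"state"` // "UP" or "DOWN"
}

// consume applies tokens from the topic to a local registry (service -> set of IPs).
func consume(topic <-chan []byte) map[string]map[string]bool {
	registry := make(map[string]map[string]bool)
	for msg := range topic {
		var tok DiscoveryToken
		if err := json.Unmarshal(msg, &tok); err != nil {
			continue // ignore malformed tokens
		}
		if registry[tok.Service] == nil {
			registry[tok.Service] = make(map[string]bool)
		}
		if tok.State == "DOWN" {
			delete(registry[tok.Service], tok.IP)
		} else {
			registry[tok.Service][tok.IP] = true
		}
	}
	return registry
}

func main() {
	topic := make(chan []byte, 3)
	for _, tok := range []DiscoveryToken{
		{"accountservice", "10.0.0.5", "UP"},
		{"accountservice", "10.0.0.6", "UP"},
		{"accountservice", "10.0.0.5", "DOWN"},
	} {
		b, _ := json.Marshal(tok)
		topic <- b
	}
	close(topic)

	var ips []string
	for ip := range consume(topic)["accountservice"] {
		ips = append(ips, ip)
	}
	sort.Strings(ips)
	fmt.Println(ips) // [10.0.0.6]
}
```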

Source code

Feel free to check out the appropriate branch for the completed source code of this part from GitHub:

git checkout p7

Scaling and load balancing

We'll continue this part by scaling our accountservice microservice to run multiple instances, to see whether Docker swarm will automatically load-balance requests to it for us.

In order to know which instance actually served a request, we'll add a new field to the Account struct that we can populate with the IP address of the producing service instance. Open /accountservice/model/account.go:

```go
// Fields must be exported (capitalized) for encoding/json to serialize them.
type Account struct {
	Id   string `json:"id"`
	Name string `json:"name"`

	// NEW
	ServedBy string `json:"servedby"`
}
```

When serving an account in the GetAccount function, we'll now populate the ServedBy field before returning. Open /accountservice/service/handlers.go and add the getIP() function as well as the line of code that populates the ServedBy field on the struct:

```go
func GetAccount(w http.ResponseWriter, r *http.Request) {
	// Read the 'accountId' path parameter from the mux map
	var accountId = mux.Vars(r)["accountId"]

	// Read the account struct from BoltDB
	account, err := DBClient.QueryAccount(accountId)

	account.ServedBy = getIP() // NEW, add this line
	// ... rest of the handler as before
}

// ADD THIS FUNC (requires "net" in the import list of handlers.go)
func getIP() string {
	addrs, err := net.InterfaceAddrs()
	if err != nil {
		return "error"
	}
	for _, address := range addrs {
		// Check the address type and, if it is a non-loopback IPv4 address, return it
		if ipnet, ok := address.(*net.IPNet); ok && !ipnet.IP.IsLoopback() {
			if ipnet.IP.To4() != nil {
				return ipnet.IP.String()
			}
		}
	}
	panic("Unable to determine local IP address (non loopback). Exiting.")
}
```

The getIP() function should go into some "utils" package, since it's reusable whenever we need to determine the non-loopback IP address of a running service.

Rebuild and redeploy our service by running copyall.sh again from $GOPATH/src/github.com/callistaenterprise/goblog:

```
> ./copyall.sh
```

Wait until it's finished and then type:

```
> docker service ls
ID            NAME            REPLICAS  IMAGE
yim6dgzaimpg  accountservice  1/1       someprefix/accountservice
```

Call it using curl:

```
> curl $managerip:6767/accounts/10000
{"id":"10000","name":"person_0","servedby":"10.255.0.5"}
```

Lovely. We see that the response now contains the IP address of the container that served our request. Let's scale the service up a bit:

```
> docker service scale accountservice=3
accountservice scaled to 3
```

Wait a few seconds and run:

```
> docker service ls
ID            NAME            REPLICAS  IMAGE
yim6dgzaimpg  accountservice  3/3       someprefix/accountservice
```

Now it says replicas 3/3. Let's curl a few times and see if we get different IP addresses as servedby.

```
> curl $managerip:6767/accounts/10000
{"id":"10000","name":"person_0","servedby":"10.0.0.22"}
> curl $managerip:6767/accounts/10000
{"id":"10000","name":"person_0","servedby":"10.255.0.5"}
> curl $managerip:6767/accounts/10000
{"id":"10000","name":"person_0","servedby":"10.0.0.18"}
> curl $managerip:6767/accounts/10000
{"id":"10000","name":"person_0","servedby":"10.0.0.22"}
```

We see how our four calls were round-robined over the three instances before 10.0.0.22 got to handle another request. This kind of load balancing, provided by the container orchestrator through the Docker swarm "service" abstraction, is very attractive. It removes the complexity of client-side load balancing such as Netflix Ribbon. It also shows that we can load-balance without relying on a service discovery mechanism to give us a list of possible IP addresses to call. Also, from Docker 1.13 onward, Docker swarm won't route any traffic to containers that don't report themselves as "healthy", provided you have implemented the healthcheck. This is very important when scaling up and down a lot, especially if your services are complex and may take longer to start than the few hundred milliseconds our accountservice currently needs.

Footprint and performance when scaling

It may be interesting to see whether, and how, scaling our accountservice from one to four instances affects latencies and CPU/memory usage. Could there be substantial overhead when the swarm mode load balancer round-robins our requests?

```
> docker service scale accountservice=4
```

Give it a few seconds to start things up.

CPU and memory usage during load test

Running the Gatling test with 1k req/s:

```
CONTAINER                                    CPU %    MEM USAGE / LIMIT
accountservice.3.y8j1imkor57nficq6a2xf5gkc   12.69%   9.336 MiB / 1.955 GiB
accountservice.2.3p8adb2i87918ax3age8ah1qp   11.18%   9.414 MiB / 1.955 GiB
accountservice.4.gzglenb06bmb0wew9hdme4z7t   13.32%   9.488 MiB / 1.955 GiB
accountservice.1.y3yojmtxcvva3wa1q9nrh9asb   11.17%   31.26 MiB / 1.955 GiB
```

Well, well! Our four instances are sharing the workload more or less evenly. We also see that the three "new" instances stay below 10 MB of RAM, given that they should never need to serve more than 250 req/s each.

Performance

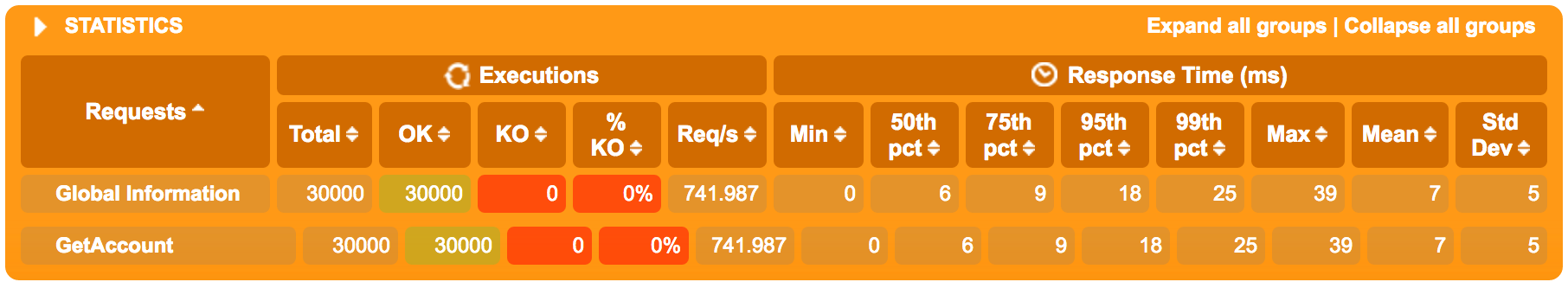

first - the gatling excerpt using one (1) instance:

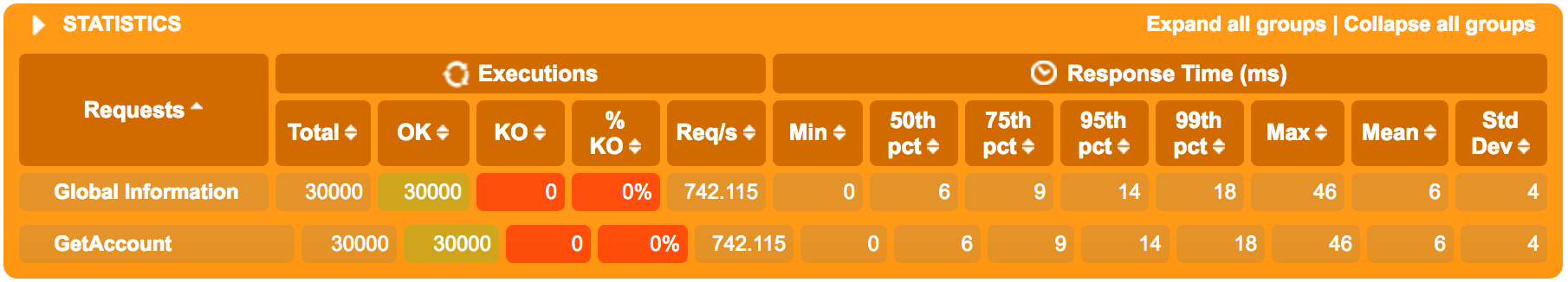

next - from the run with four (4) instances:

next - from the run with four (4) instances:

The difference isn't all that great, and it shouldn't be. All four service instances are, after all, running on the same VirtualBox-hosted Docker swarm node on the same underlying hardware (i.e. my laptop). If we added more virtualized instances to the swarm that could use unused resources from the host OS, we'd probably see a much larger decrease in latencies, since separate logical CPUs etc. would be handling the load. Nevertheless, we do see a slight performance increase in the mean and the 95th/99th percentiles. We can safely conclude that swarm mode load balancing has no negative impact on performance in this particular scenario.

Bring out the quotes!

Remember that Java-based quotes-service we deployed back in part 5? Let's scale it up and then call it from the accountservice using its service name, "quotes-service". The purpose of adding this call is to showcase how transparent service discovery and load balancing become when the only thing we need to know about the service we're calling is its logical service name.

We'll start by editing /goblog/accountservice/model/account.go so our response will contain a quote:

```go
type Account struct {
	Id       string `json:"id"`
	Name     string `json:"name"`
	ServedBy string `json:"servedby"`
	Quote    Quote  `json:"quote"` // NEW
}

// NEW struct
type Quote struct {
	Text     string `json:"quote"`
	ServedBy string `json:"ipaddress"`
	Language string `json:"language"`
}
```

Note that we're using the JSON tags to map the field names that the quotes-service outputs to struct field names of our own: quote to Text, ipaddress to ServedBy, etc.

Continue by editing /goblog/accountservice/service/handlers.go. We'll add a simplistic getQuote function that performs an HTTP call to http://quotes-service:8080/api/quote, whose return value will be used to populate the new Quote struct. We'll call it from the main GetAccount handler function.

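First, we'll deal with a Connection: keep-alive issue that will cause load-balancing problems unless we explicitly configure the Go HTTP client appropriately. By default, Go's HTTP client reuses TCP connections. The swarm load balancer picks an instance when a connection is established, not per request, so every call over a reused connection would end up at the same quotes-service instance. In handlers.go, add the following just above the GetAccount function: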

```go
var client = &http.Client{}

func init() {
	// Disable keep-alives so that every request opens a new connection
	var transport http.RoundTripper = &http.Transport{
		DisableKeepAlives: true,
	}
	client.Transport = transport
}
```

This init function makes sure that any outgoing HTTP request issued by the client instance carries a "Connection: close" header, which makes the Docker swarm-based load balancing work as expected. Next, just below the GetAccount function, add the package-scoped getQuote() function:

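```go
// Requires the encoding/json, fmt and io/ioutil imports in handlers.go
func getQuote() (model.Quote, error) {
	req, _ := http.NewRequest("GET", "http://quotes-service:8080/api/quote?strength=4", nil)
	resp, err := client.Do(req)
	if err != nil {
		return model.Quote{}, fmt.Errorf("calling quotes-service: %v", err)
	}
	defer resp.Body.Close() // always release the response body
	if resp.StatusCode != http.StatusOK {
		return model.Quote{}, fmt.Errorf("quotes-service returned HTTP %d", resp.StatusCode)
	}
	quote := model.Quote{}
	bytes, _ := ioutil.ReadAll(resp.Body)
	if err := json.Unmarshal(bytes, &quote); err != nil {
		return model.Quote{}, err
	}
	return quote, nil
}
```

Nothing special about it. The "?strength=4" argument is a peculiarity of the quotes-service API that can be used to make it consume more or less CPU. If there is some problem with the request, we return an error describing it.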

We'll call the new getQuote func from the GetAccount function, assigning the returned value to the Quote property of the account instance if there was no error:

```go
	// Read the account struct from BoltDB
	account, err := DBClient.QueryAccount(accountId)
	account.ServedBy = getIP()

	// NEW, call the quotes-service. Scoping err to the if statement keeps
	// the error returned by QueryAccount intact.
	if quote, err := getQuote(); err == nil {
		account.Quote = quote
	}
```

(All this error-checking is one of my least favorite things about Go, even though it arguably produces code that is safer and maybe shows the intent of the code more clearly.)

Unit testing with outgoing HTTP requests

If we ran the unit tests in /accountservice/service/handlers_test.go now, they would fail! The GetAccount function under test will now try to make an HTTP request to fetch a famous quote. Since there's no quotes-service running at the specified URL (I guess the hostname won't resolve to anything), the test cannot pass.

We have two strategies to choose from here, given the context of unit testing:

1) Extract the getQuote function into an interface and provide one real and one mock implementation, just like we did for the Bolt client in part 4.

2) Use an HTTP-specific mocking framework that intercepts outgoing requests for us and returns a predetermined answer. The built-in httptest package can start an embedded HTTP server for us to use in unit tests, but I'd like to use the third-party gock framework instead, since it's more concise and perhaps a bit easier to use.
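For comparison, strategy 2 using only the standard library could look like the sketch below. httptest starts a real local server that returns a canned quote, and the quote-fetching code is pointed at its URL. This assumes a hypothetical getQuoteFrom(client, baseURL) variant that takes the base URL as a parameter. The hard-coded http://quotes-service:8080 address in getQuote cannot be redirected to a test server.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

type Quote struct {
	Text     string `json:"quote"`
	ServedBy string `json:"ipaddress"`
	Language string `json:"language"`
}

// getQuoteFrom is a hypothetical, testable variant of getQuote that receives
// the base URL of the quotes-service as a parameter.
func getQuoteFrom(client *http.Client, baseURL string) (Quote, error) {
	resp, err := client.Get(baseURL + "/api/quote?strength=4")
	if err != nil {
		return Quote{}, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return Quote{}, fmt.Errorf("quotes-service returned HTTP %d", resp.StatusCode)
	}
	var q Quote
	err = json.NewDecoder(resp.Body).Decode(&q)
	return q, err
}

// newFakeQuotesService starts a local server that answers like the quotes-service.
func newFakeQuotesService() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/api/quote" || r.URL.Query().Get("strength") != "4" {
			http.NotFound(w, r)
			return
		}
		fmt.Fprint(w, `{"quote":"may the source be with you. always.","ipaddress":"10.0.0.5:8080","language":"en"}`)
	}))
}

func main() {
	srv := newFakeQuotesService()
	defer srv.Close()
	q, err := getQuoteFrom(srv.Client(), srv.URL)
	fmt.Println(q.Text, err)
}
```

The trade-off compared to gock is mostly ergonomic: httptest needs the URL to be injectable, while gock intercepts the client's transport so the production URL can stay hard-coded.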

In /goblog/accountservice/service/handlers_test.go, add an init function above the TestGetAccount(t *testing.T) function. It makes sure our HTTP client instance is intercepted properly by gock:

```go
func init() {
	// Route requests made by our package-level client through gock
	gock.InterceptClient(client)
}
```

The gock DSL provides fine-grained control over expected outgoing HTTP requests and responses. In the example below, we use New(..), Get(..) and MatchParam(..) to tell gock to expect the GET request to http://quotes-service:8080/api/quote?strength=4 and to respond with HTTP 200 and a hard-coded JSON string as body.

At the top of TestGetAccount(t *testing.T), add:

```go
func TestGetAccount(t *testing.T) {
	defer gock.Off()
	gock.New("http://quotes-service:8080").
		Get("/api/quote").
		MatchParam("strength", "4").
		Reply(200).
		BodyString(`{"quote":"may the source be with you. always.","ipaddress":"10.0.0.5:8080","language":"en"}`)

	// ... the existing test code follows here
}
```

defer gock.Off() turns off HTTP interception after the current test finishes. Since gock.New(..) turns interception on, leaving it on could make subsequent tests fail.

Let's assert that the expected quote was returned. In the innermost Convey block of the TestGetAccount test, add a new assertion:

```go
Convey("Then the response should be a 200", func() {
	So(resp.Code, ShouldEqual, 200)

	account := model.Account{}
	json.Unmarshal(resp.Body.Bytes(), &account)
	So(account.Id, ShouldEqual, "123")
	So(account.Name, ShouldEqual, "person_123")

	// NEW!
	So(account.Quote.Text, ShouldEqual, "may the source be with you. always.")
})
```

Run the tests

Try running all tests from the /goblog/accountservice folder:

```
> go test ./...
?   	github.com/callistaenterprise/goblog/accountservice	[no test files]
?   	github.com/callistaenterprise/goblog/accountservice/dbclient	[no test files]
?   	github.com/callistaenterprise/goblog/accountservice/model	[no test files]
ok  	github.com/callistaenterprise/goblog/accountservice/service	0.011s
```

Deploy and run this on the swarm

Rebuild/redeploy using ./copyall.sh and then try calling the accountservice using curl:

```
> curl $managerip:6767/accounts/10000
{"id":"10000","name":"person_0","servedby":"10.255.0.8","quote":
    {"quote":"you, too, brutus?","ipaddress":"461caa3cef02/10.0.0.5:8080","language":"en"}
}
```

Scale the quotes-service to two instances:

```
> docker service scale quotes-service=2
```

Give it some time. It may take 15-30 seconds, since the Spring Boot-based quotes-service starts more slowly than our Go counterparts. Then call it again a few times using curl. The result should be something like:

```
{"id":"10000","name":"person_0","servedby":"10.255.0.15","quote":{"quote":"to be or not to be","ipaddress":"768e4b0794f6/10.0.0.8:8080","language":"en"}}
{"id":"10000","name":"person_0","servedby":"10.255.0.16","quote":{"quote":"bring out the gimp.","ipaddress":"461caa3cef02/10.0.0.5:8080","language":"en"}}
{"id":"10000","name":"person_0","servedby":"10.0.0.9","quote":{"quote":"you, too, brutus?","ipaddress":"768e4b0794f6/10.0.0.8:8080","language":"en"}}
```

We see that our own servedby is nicely cycling through the available accountservice instances. We also see that the ipaddress field of the quote object shows two different IPs. If we hadn't disabled the keep-alive behavior, we'd probably see each accountservice instance keep getting its quotes from the same quotes-service instance.

Summary

In this part, we touched upon the concepts of service discovery and load balancing in the microservice context, and implemented a call to another service using only its logical service name.

In part 8, we'll move on to one of the most important aspects of running microservices at scale: centralized configuration.

Published at DZone with permission of Erik Lupander, DZone MVB. See the original article here.

