Gluster Storage for Kubernetes With Heketi

Running stateful applications on Kubernetes is doable, and we'll use Gluster, Heketi, and Google Cloud Platform to see it in action.


A key point when running containers on Kubernetes is managing stateful applications, such as a database. In this post, we'll use Gluster along with Kubernetes to demonstrate how you can run stateful applications on Kubernetes.

GlusterFS and Heketi

GlusterFS is an open-source, scalable network filesystem that can be built from off-the-shelf hardware. Gluster supports various volume types, such as distributed, replicated, striped, and dispersed, as well as many combinations of these, as described in detail here.

Heketi is a Gluster volume manager that provides a RESTful interface to create and manage Gluster volumes. Heketi makes it easy for cloud services such as Kubernetes, OpenShift, and OpenStack Manila to interact with Gluster clusters, provision volumes, and manage brick layout.

Use Case

The purpose of this post is to demonstrate how to create a GlusterFS cluster, manage it with Heketi to provision volumes, and then install a demo application that uses a Gluster volume.

We will create a 4-node Kubernetes cluster with two unformatted disks on each node. We will then install GlusterFS as a DaemonSet and Heketi as a service to create Gluster volumes, which will be consumed by a Postgres database running as a StatefulSet. Another application will add one entry per second to the Postgres database, and a Flask application will let us view the contents of the database. Finally, we will move the Postgres database from one node to another and still be able to access our data.

Implementation Steps

Prerequisites

- A Google Cloud Platform account with admin privileges.

- The required APIs (at least the Kubernetes Engine API, which is needed to create the GKE cluster) should be enabled on the GCP account.

Kubernetes Cluster Creation and Bootstrapping

- Log on to the Google Cloud Console and open Google Cloud Shell.

- Create a directory called “gluster-heketi” and change into it with “cd gluster-heketi”.

- Clone the Git repo into the current directory using the following command:

git clone https://github.com/infracloudio/gluster-heketi-k8s.git .

- Edit “cluster_and_disks.sh” and change the variable project_id to your GCP project.

- The cluster_name, zone, and node_count variables can be changed if needed.

- Execute the script “cluster_and_disks.sh”.

This creates a 4-node GKE cluster in which each node has 2 unformatted disks attached. The script also generates a topology file, which will be used as a Kubernetes ConfigMap. The topology contains details of the Kubernetes nodes in the cluster and their attached disks.
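For orientation, here is a rough sketch of what such a topology might look like. It assumes Heketi's standard topology JSON format (clusters containing nodes, each node listing its devices), wrapped in a Kubernetes ConfigMap. The ConfigMap name, the `topology.json` key, the node names, the IP addresses, and the device paths `/dev/sdb` and `/dev/sdc` are all hypothetical placeholders; the file that “cluster_and_disks.sh” actually produces may differ.

```yaml
# Hypothetical sketch: a ConfigMap carrying a Heketi topology.
# Names, IPs, and device paths are placeholders, not the script's real output.
apiVersion: v1
kind: ConfigMap
metadata:
  name: heketi-topology            # hypothetical name
data:
  # "manage" is the node's hostname, "storage" is the IP used for Gluster traffic,
  # and "devices" lists the raw, unformatted disks Heketi may use on that node.
  # Only two of the four nodes are shown; the others follow the same pattern.
  topology.json: |
    {
      "clusters": [
        {
          "nodes": [
            {
              "node": {
                "hostnames": {
                  "manage": ["gke-node-1"],
                  "storage": ["10.128.0.2"]
                },
                "zone": 1
              },
              "devices": ["/dev/sdb", "/dev/sdc"]
            },
            {
              "node": {
                "hostnames": {
                  "manage": ["gke-node-2"],
                  "storage": ["10.128.0.3"]
                },
                "zone": 1
              },
              "devices": ["/dev/sdb", "/dev/sdc"]
            }
          ]
        }
      ]
    }
```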

Note: Following these steps will create resources that are chargeable by GCP. Make sure to follow the cleanup steps below to delete all resources once you are done with the demo.

Setting Up Gluster, Heketi, and the Storage Class


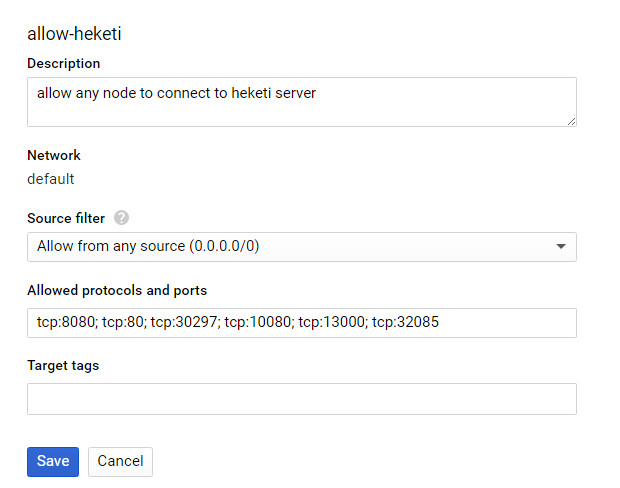

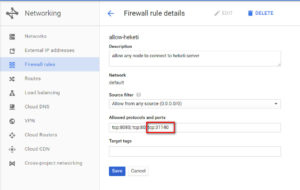

- In the network configuration, create a firewall rule called “allow-heketi” and open the ports as shown below:

- Run the following command to import the ConfigMap into Kubernetes:

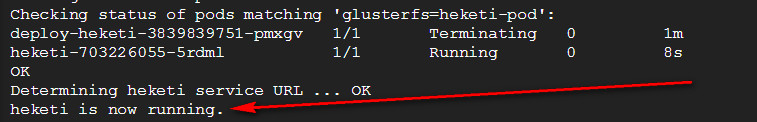

kubectl create -f heketi-turnkey-config.yaml

- Run the following command to start a Gluster DaemonSet and install the Heketi service. This creates a temporary pod based on a container created by Janos Lenart.

kubectl create -f heketi-turnkey.yaml

- The process logs of this pod can be viewed using the command:

kubectl logs heketi-turnkey -f

The YAML file above creates a DaemonSet with the Gluster installation, which takes control of all nodes and devices listed in the topology file. It also installs Heketi as a pod and exposes the Heketi API via a service.
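The Service in front of Heketi is what provides the node port used later when configuring the storage class. A minimal sketch of a NodePort Service for Heketi could look like the following. It assumes the Heketi pod carries the label `app: heketi` (hypothetical) and listens on port 8080, Heketi's default; the Service actually created by “heketi-turnkey.yaml” may use different names and labels.

```yaml
# Hypothetical sketch of a NodePort Service in front of the Heketi pod.
apiVersion: v1
kind: Service
metadata:
  name: heketi                 # hypothetical name
spec:
  type: NodePort               # exposes the API on the same port of every node
  selector:
    app: heketi                # assumed label on the Heketi pod
  ports:
    - name: heketi-api
      port: 8080               # Service port inside the cluster
      targetPort: 8080         # Heketi's default listen port
      # nodePort is omitted, so Kubernetes allocates one from its node port range
```

Because `nodePort` is left unset, the allocated value has to be looked up after creation, which is why the steps below ask you to note it.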


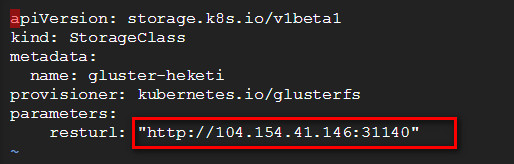

The next step is the creation of our storage class, through which we will allow dynamic creation of Gluster volumes by calling the Heketi service. The following steps need to be done to set up the storage class correctly:


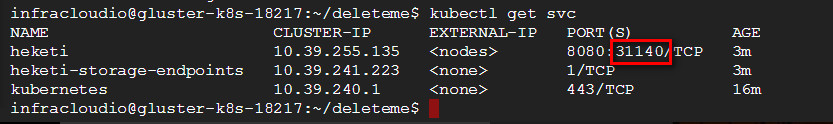

- Note the node port of the Heketi service as shown below:


- Open the firewall for this port in your allow-heketi firewall rule as shown below:

- Note the public IP of one of the Kubernetes nodes, and update the resturl key in “storage_class.yaml” with that public IP and the node port from above. (In a real-world implementation, you might have a domain name for the Kubernetes cluster endpoint.)

- Now run “kubectl create -f storage_class.yaml” so that Kubernetes can talk to Heketi to create volumes as needed.
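A minimal sketch of what “storage_class.yaml” might contain is shown below, assuming the in-tree `kubernetes.io/glusterfs` provisioner, which calls Heketi's REST API at `resturl`. The class name `gluster-heketi` is hypothetical, and `<node-public-ip>` and `<node-port>` stand for the values noted in the steps above. This in-tree provisioner was deprecated in Kubernetes 1.25 and removed in 1.26, so the sketch only applies to clusters older than that.

```yaml
# Hypothetical sketch of storage_class.yaml for dynamic Gluster provisioning via Heketi.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gluster-heketi                     # hypothetical class name
provisioner: kubernetes.io/glusterfs       # in-tree Gluster provisioner
parameters:
  # Heketi REST endpoint: a node's public IP plus the Heketi node port.
  resturl: "http://<node-public-ip>:<node-port>"
  # Ask Heketi for 3-way replicated Gluster volumes.
  volumetype: "replicate:3"
```

Any PersistentVolumeClaim that names this class in `storageClassName` then triggers Heketi to create a matching Gluster volume on demand.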


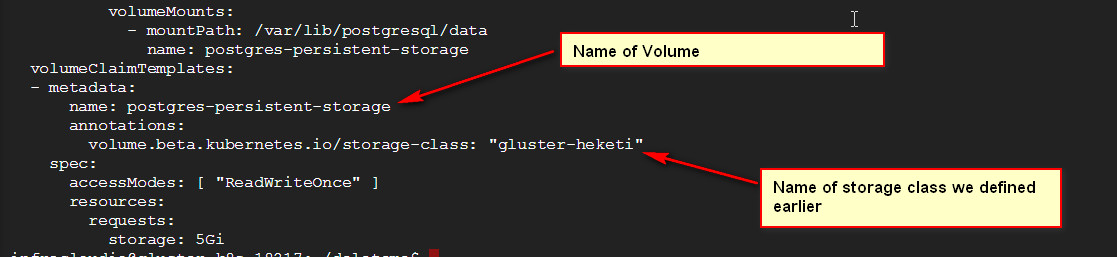

- Next, run “kubectl create -f postgres-srv-ss.yaml” to create a Postgres pod in a StatefulSet. The StatefulSet is backed by a Gluster volume and is exposed by a service named “postgres”.
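One way for a StatefulSet to request a Gluster-backed volume is a `volumeClaimTemplates` entry that references the storage class. The sketch below assumes a storage class named `gluster-heketi` (hypothetical), the stock `postgres` image, a placeholder password, and a 1Gi volume. The Service name “postgres” and the StatefulSet name `postgres-ss` match the names used in this post, which is why the pod is called `postgres-ss-0`.

```yaml
# Hypothetical sketch of postgres-srv-ss.yaml: a headless Service plus a
# single-replica StatefulSet whose data volume is provisioned through Heketi.
apiVersion: v1
kind: Service
metadata:
  name: postgres
spec:
  clusterIP: None              # headless Service that governs the StatefulSet
  selector:
    app: postgres
  ports:
    - port: 5432
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres-ss            # pods are named postgres-ss-0, postgres-ss-1, ...
spec:
  serviceName: postgres
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres      # assumed image; pin a version in practice
          ports:
            - containerPort: 5432
          env:
            - name: POSTGRES_PASSWORD
              value: "changeme"                       # demo-only placeholder
            - name: PGDATA
              value: /var/lib/postgresql/data/pgdata  # data dir in a subdirectory of the mount
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
  volumeClaimTemplates:
    - metadata:
        name: postgres-data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: gluster-heketi   # hypothetical class name
        resources:
          requests:
            storage: 1Gi                   # assumed size
```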

- Run “kubectl get pvc” to check that the volume was created and bound to the pod as expected: the claim's STATUS column should read Bound.

- Run “kubectl create -f pgwriter-deployment.yaml”. This creates a deployment for a pod that writes one entry per second to the Postgres database created above.
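The writer does not need to know which node Postgres runs on; it only needs the “postgres” service name, which cluster DNS resolves to the database pod. A sketch of a deployment built on that idea follows. The image `example/pgwriter:latest` and the environment variable names `DB_HOST` and `DB_PORT` are hypothetical, since the post does not show the real ones.

```yaml
# Hypothetical sketch of pgwriter-deployment.yaml.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pgwriter
spec:
  replicas: 1                            # a single writer adding one row per second
  selector:
    matchLabels:
      app: pgwriter
  template:
    metadata:
      labels:
        app: pgwriter
    spec:
      containers:
        - name: pgwriter
          image: example/pgwriter:latest # hypothetical image
          env:
            - name: DB_HOST              # hypothetical variable name
              value: postgres            # resolved via the "postgres" Service
            - name: DB_PORT              # hypothetical variable name
              value: "5432"              # env values must be strings
```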

- Run “kubectl create -f frontend.yaml”. This creates a deployment of a Flask-based frontend that allows querying the Postgres database. An external load balancer (a Kubernetes service of type LoadBalancer) is also created to front the service.
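The load balancer part of such a frontend could be expressed as a Service of type LoadBalancer, sketched below. It assumes the Flask pods carry the label `app: frontend` and listen on port 5000 (the Flask development server's default); the name, label, and ports are all assumptions.

```yaml
# Hypothetical sketch of the LoadBalancer Service in frontend.yaml.
apiVersion: v1
kind: Service
metadata:
  name: frontend               # hypothetical name
spec:
  type: LoadBalancer           # GKE provisions an external load balancer
  selector:
    app: frontend              # assumed label on the Flask pods
  ports:
    - port: 80                 # port exposed on the external IP
      targetPort: 5000         # assumed Flask listen port
```

Once GKE assigns an address, it appears in the EXTERNAL-IP column of “kubectl get svc”.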


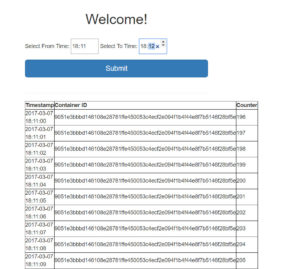

- Enter the load balancer IP in the browser to access the frontend. Enter the previous minute (system time) in hh:mm format and click Submit. A list of entries with timestamps and counter values is shown.


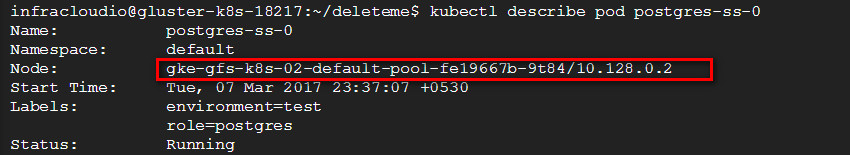

- Run “kubectl describe pod postgres-ss-0” and note the node on which the pod is running.

- Now we will delete the StatefulSet (effectively the database) and create it again. Run the following commands:

kubectl delete -f postgres-srv-ss.yaml

kubectl create -f postgres-srv-ss.yaml


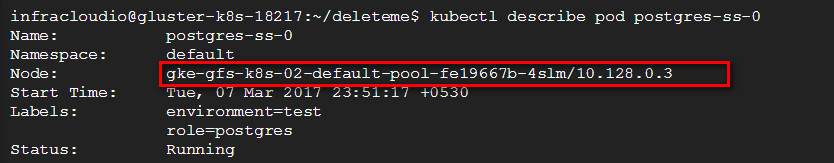

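- Run “kubectl describe pod postgres-ss-0” again and observe that the pod is now assigned to a different node. (The scheduler chooses the node, so the pod can occasionally land on the original node again.)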

- Go back to the frontend and enter the same hh:mm as before. The data is still intact even though the database has moved to another host.
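The data survives because deleting a StatefulSet does not delete the PersistentVolumeClaims created from its `volumeClaimTemplates`, and because a Gluster volume is network storage that any node can mount. Each claim is named `<template name>-<StatefulSet name>-<ordinal>`, so assuming a claim template called `postgres-data` (a hypothetical name), the claim kept for pod `postgres-ss-0` is `postgres-data-postgres-ss-0`. It is roughly equivalent to the standalone claim sketched below; when the StatefulSet is recreated, the new `postgres-ss-0` pod binds to this existing claim, and therefore to the same Gluster volume, wherever it is scheduled. The storage class name and size are also assumptions.

```yaml
# Hypothetical sketch: the claim that remains for postgres-ss-0 after the
# StatefulSet is deleted, and that the recreated pod binds to again.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data-postgres-ss-0   # <template>-<statefulset>-<ordinal>
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: gluster-heketi    # hypothetical class name
  resources:
    requests:
      storage: 1Gi                    # assumed size
```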

Clean Up

- Run the following command to delete the load balancer:

kubectl delete -f frontend.yaml

- Delete the GKE cluster:

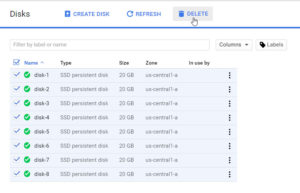

- Once the GKE cluster is deleted, delete the disks that “cluster_and_disks.sh” created for the nodes.

Conclusion

Heketi is a simple and convenient way to create and manage Gluster volumes. It pairs very well with Kubernetes storage classes to provide on-demand volume creation, which can be combined with other Gluster features, such as replication and striping, to handle many complex storage use cases.

Published at DZone with permission of Harshal Shah, DZone MVB. See the original article here.

