GitHub Restore and Disaster Recovery: Better Get Ready

This article covers all the aspects concerning building a reliable and agile Disaster Recovery (DR) strategy.

Join the DZone community and get the full member experience.

Join For FreeFirst, in case you missed it, you may want to read my previous blog post about GitHub backup. I described the potential threats to GitHub environments: human errors (mistakes or malicious actions), outages, and cyber-attacks.

While backup is an essential part of any disaster recovery strategy, the organization should pay special attention to some important aspects. In this blog post, I will cover all the aspects concerning building a reliable and agile Disaster Recovery (DR) strategy that will keep your business continuity with ease, and we will also see three use cases.

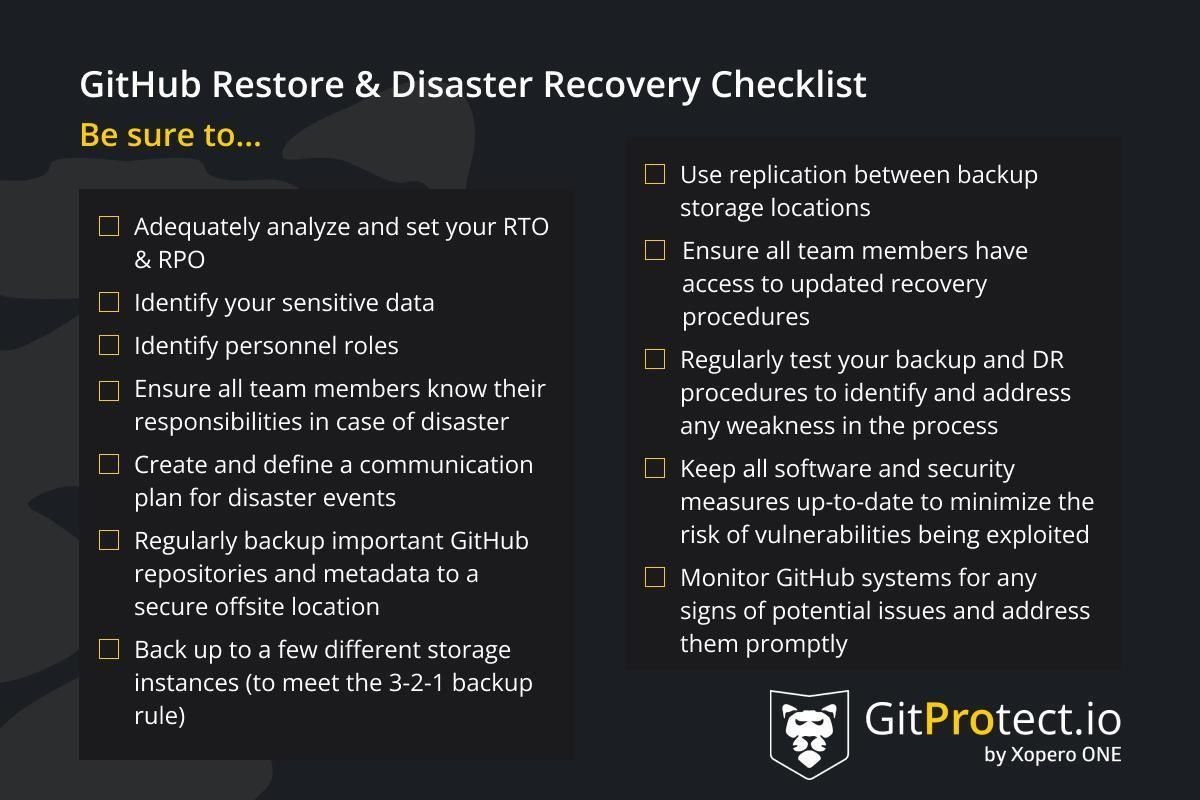

Without further ado, let’s review what you should include in your GitHub disaster recovery strategy.

What Should Your GitHub Disaster Recovery Strategy Include?

Sensitive Data Identification

A good disaster recovery plan should identify what data requires stronger security measures, how often that data should be backed up, and who is authorized to access the original copy and its backups.

It means that the organization should identify its active and important GitHub projects, taking into account metadata as well.

Recovery Point Objective (RPO) and Recovery Time Objective (RTO)

RPO and RTO are two key metrics when it comes to building a recovery strategy: they determine how much data the company can afford to lose and how quickly it should restore systems and processes in the event of failure.

Recovery Point Objective

RPO determines the time between backups and the amount of data the company can lose between those backups. So it’s critical to assess the target frequency of backup. For example, if the company can tolerate 8 hours of data loss (a normal working day) in a disaster, its RPO is 8 hours. In this case, the company can back up its data once a day.

On the other hand, if the company’s RPO is five minutes, the company must back up its data much more frequently.

Recovery Time Objective

RTO is another critical metric, which refers to the maximum amount of time that a business process can be down after a disaster strikes. So, in simple language, it’s the set target time for the recovery of IT and business operations. For example, if the organization sets its RTO to five hours, it means that the organization has to restore all its critical data within those five hours after a disaster. During those five hours, the organization will need to identify and mitigate the cause of downtime and get back to the normal working process within those five hours.

RTO helps to find the balance between cost efficiency and the preparation process in the event of failure. If your RTO is low, it means that you’re highly prepared for a disaster and have a comprehensive Disaster Recovery strategy permitting you to recover the data fast and guaranteeing business continuity. A higher RTO, on the other hand, may appear less expensive to maintain, but when a disaster strikes, your business may finish investing a lot more time and money in data recovery.

To find this tradeoff, it is assumed that high-priority and crucial services need a lower RTO, so they’re restored in the first place and should be backed up with more frequency and replicated between different storages.

How To Check RTO and RPO?

It takes a rigorous examination of all your systems to determine an RTO. To be effective, your RTO must be realistic. You can't just pick a number and put it in your SLA; it should be measured and checked on a regular basis.

To do so, you should review your backup configuration and Disaster Recovery plans regularly. A regular check should include the assessment of backup and recovery processes and the review of backup logs and reports: what’s the backup frequency? Do you use replication? Incremental backups? Granular recovery for instant access to your critical data?

This analysis will permit you to make improvements to your RTO and RPO metrics on time.

At the same time, you shouldn’t forget about regular testing and simulations to ensure that your backup configuration is effective and meets the RTO and RPO objectives you set.

Distribution of Staff Roles

The company’s plan should include the names, contact details, and obligations of team members who are responsible for disaster recovery processes. So there should be people (and they should be instructed thoroughly) who are responsible for declaring a disaster, reporting to management, dealing with customers, third-party vendors, the press, and, of course, those who are in charge of managing the crisis and recovering from it.

Disaster Recovery Procedures

It is crucial for the company to establish and document the set of actions and plans aimed at reducing and minimizing the impact of the catastrophic event. It’s worth remembering that the first few hours are the most critical, so the team must know for sure what to do to restore normal operations as quickly and efficiently as possible.

Thus, CTOs and security leaders should outline all the steps that are crucial to take in the event of a disaster, including data backup and recovery, emergency communication protocols, and activation of contingency plans.

Communication Plans for the Events of Failure

In case of a disaster, a company needs to have a comprehensive communication plan for delivering the information to stakeholders and other affected parties, including management, employees, media, customers, and compliance authorities. In an ideal world, this plan should include the most appropriate communication channels, message templates, and a person or a team who will be responsible for coordinating and disseminating information during the event of failure. This is the best way to keep stakeholders' and customers’ confidence and loyalty.

Moreover, it’s worth continuously evaluating and improving the company’s communication plan according to the feedback from stakeholders and experience from previous disaster events (if they took place).

Must-Have Features for Your GitHub Restore Plan

Now, let's take a look at complete Disaster Recovery options and some GitHub restore features important not just to recover in case of a failure but also for everyday work.

Any-to-Any GitHub Restore: Choose Your Destination

Regardless of whether you use the on-premise or SaaS GitHub version, you should be able to freely restore your data to any instance, from any instance: from self-hosted to cloud and conversely. You should also have the possibility to restore your GitHub data to your local machine or crossover to another git hosting service provider (like Bitbucket or GitLab). To this end, you should be able to restore all repositories in bulk and choose the exact point in time for the copy you want to restore. Thus, you need to have long-term retention. GitHub SLA limits recovery to only up to 90 days after repositories have been deleted.

Granular Recovery: For Daily Operations

Quite often, companies protect their GitHub Enterprise Server by backing up the entire underlying VM. In this case, the company would have to recover the entire virtual machine in case of a failure, which can take a lot of time: all the GitHub data must be recovered before being accessible again. Thus, to bring speed, efficiency, and peace of mind to daily operations, it’s important to have the possibility to recover only chosen individual GitHub repositories and metadata instead. This is what is called granular recovery, and it is a powerful tool against developer errors to ensure quick and immediate recovery of key data and uninterrupted workflow.

GitHub Disaster Recovery Use Cases

When you're analyzing third-party backup tools to protect your GitHub repositories and metadata, ensure that the backup provider you choose can guarantee data recoverability and accessibility under any disaster scenario. Moreover, you should get step-by-step guidance on what recovery option you can use for faster data restoration in any failure event. Let's look at the possible scenarios your GitHub environment can face and how to prevent data loss and assure your DevOps team's workflow continuity.

Use Case 1: GitHub Outage

We have already mentioned that, as with any other git service provider, GitHub can experience an outage. Do you want to stop your working process due to a long-term downtime of the GitHub cloud infrastructure? Surely, not. Then you need to have a few options to overcome that outage:

- Restore your GitHub repositories as a file to your local machine. You can perform that operation from the last copy or just from some appropriate point in time.

- Recover all your critical GitHub data to GitHub on-premise instance. For that reason, you can use the same or a new instance.

- Use crossover recovery. Migrate and restore your GitHub repositories and metadata to other git hosting services, like Bitbucket or GitLab.

Use Case 2:Your Infrastructure Outage.

Ensure that your backup solution is a multi-storage system and allows you to assign many cloud and on-premise data stores. In this case, you can follow the 3-2-1 backup rule and restore GitHub data from a copy once one of your servers is down.

Use Case 3: Your Backup Provider's Infrastructure Outage

Every backup provider lives in security. That's why it is obvious that it should have a Disaster Recovery response to the scenario if its infrastructure goes down. Ensure your backup vendor has a Disaster Recovery plan of its own infrastructure.

And One More Thing...

GitHub disaster recovery and data restoration depend on how well-prepared your GitHub backup is for any potential failure.

Published at DZone with permission of Greg Bak. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments