Generative AI in the Crosshairs: CISOs' Battle for Cybersecurity

ChatGPT and large language models (LLM) are the early signs of how generative AI will shape many business processes.

Join the DZone community and get the full member experience.

Join For FreeChatGPT and large language models (LLM) are the early signs of how generative AI will shape many business processes. Security and risk management leaders, specifically CISOs, and their teams need to secure how their organization builds and consumes generative AI and navigate its impacts on cybersecurity. The level of hype, scale, and speed of adoption of Generative AI (GenAI) has raised end-user awareness of LLMs, leading to uncontrolled uses of LLM applications. It has also opened the floodgates to business experiments and a wave of AI-based startups promising unique value propositions from new LLM and GenAI applications. Many business and IT project teams have already launched GenAI initiatives or will start soon.

In this article, we explore how GenAI impacts CISOs and their strategies in this evolving landscape.

Businesses will embrace generative AI to reimagine future Products, Services, and Operational Excellence, regardless of Security.

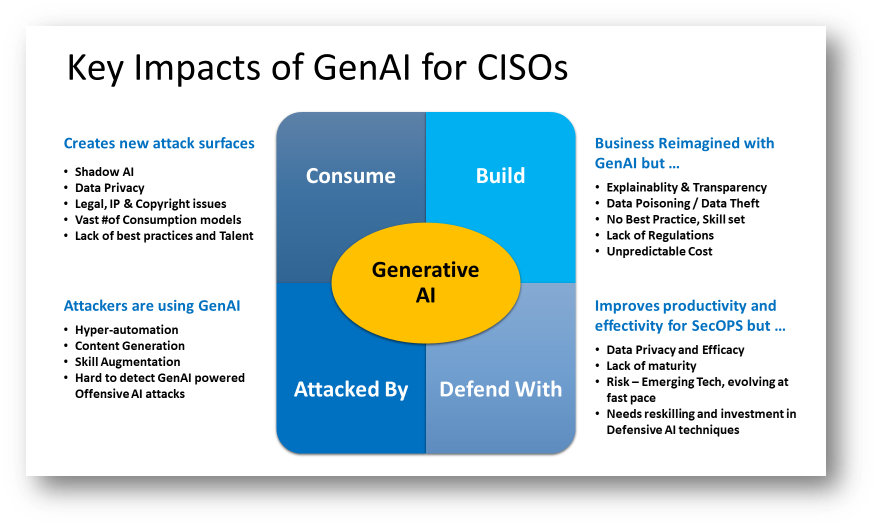

Key Impacts of GenAI on CISO

CISOs and security teams need to prepare for the impacts of generative AI in four different areas.

1. Consume

- Manage and monitor how the organization “consumes” GenAI: ChatGPT was the first example; embedded GenAI assistants in existing applications will be the next. These applications all have unique security requirements that are not fulfilled by legacy security controls.

- Consumption of GenAI applications, such as large language models (LLMs), from business experiments and unmanaged, ad hoc employee adoption creates new attack surfaces and risks on individual privacy, sensitive data, and organizational intellectual property (IP).

2. Build

- Secure enterprise initiatives to “build” GenAI applications: AI applications have an expanded attack surface and pose new potential risks that require adjustments to existing application security practices.

- Many businesses are rushing to capitalize on their IP and develop their own GenAI applications, creating new requirements for AI application security.

3. Defend With

- Generative cybersecurity AI: Receive the mandate to exploit GenAI opportunities to improve security and risk management, optimize resources, defend against emerging attack techniques, or even reduce costs.

- A proliferation of overoptimistic generative AI (GenAI) announcements in the security and risk management markets could still drive promising improvements in productivity and accuracy for security teams but also lead to waste and disappointments.

- Invest in Defensive AI Technique Development

4. Attacked By

- GenAI: Adapt to malicious actors evolving their techniques or even exploiting new attack vectors thanks to the development of GenAI tools and techniques.

- Attackers will use GenAI. They’ve started with the creation of more seemingly authentic content, phishing lures, and impersonating humans at scale. The uncertainty about how successfully they can leverage GenAI for more sophisticated attacks will require more flexible cybersecurity roadmaps.

Recommendations for CISO

To address the various impacts of generative AI on their organizations’ security programs, chief information security officers (CISOs) and their teams must:

By 2027, generative AI will contribute to a 30% reduction in false positive rates for application security testing and threat detection by refining results from other techniques to categorize benign from malicious events.

Through 2025, attacks leveraging generative AI will force security-conscious organizations to lower thresholds for detecting suspicious activity, generating more false alerts and thus requiring more — not less — human response.

1. Invest in Defensive AI Techniques Development

- Interactive threat intelligence: Access to technical documentation, threat intelligence from the security provider, or crowdsourced (using various methods separately or in combination, including prompt engineering, retrieval augmented generation, and querying an LLM model using an API).

- Adaptive Risk scoring and profiling

- Security engineering automation: Generate security automation code and playbooks on demand, leveraging the conversational prompt.

- BAS

- GenAI-powered Penetration Testing

2. Embrace Secure Application Development Assistants

Code generation tools (e.g., GitHub Copilot, Open AI Codex, Amazon CodeWhisperer) are embedding security features, and application security tools are already leveraging LLM applications. Some examples of use cases for these secure code assistants include:

Application Security Teams

- Vulnerability detection: Highlights issues in code snippets entered in the prompts or by performing a scan of the source code.

- False positive reduction: Used as a confirmation layer for other code analysis techniques, the feature analyzes the alert and the related code and indicates in conversational language why it might be a false positive.

- Auto-Remediation assistant: Suggests updates in the code to fix identified vulnerabilities as part of the finding summary that is generated.

Create re-skilling and update DevSecOPS processes to include BAS and GenAI-powered Penetration Testing.

Software Engineering/App Dev Teams

- Code generation: Creates script/code from developers' input, including natural language comments or instructions, or acts as an advanced code completion tool. Some tools can also indicate whether the generated code resembles an open-source project or can help validate that code (generated or human-written) complies with standard industry security practices.

- Unit test creation: Suggests a series of tests for a submitted function to ensure its behavior is as expected and is resistant to malicious inputs.

- Code documentation: Generates explanations of what a piece of code does or the impact of a code change.

While the use cases are easy to identify, it is still really early to get relevant qualitative measurements of these assistants.

3. GenAI in Security Operations and Security Operation Toolset

- Prioritize security resource involvement for use cases with direct financial and brand impacts, such as code automation, customer-facing content generation, and customer-facing teams, such as support centers.

- Assess third-party security products for Non-AI-related controls (such as IAM, data governance, and SSE functions) and AI-specific security (such as monitoring, controlling, and managing LLM inputs.)

- Prepare to evaluate emerging products enabling zero-code customization of prompts.

- Test emerging products that inspect and review outputs for potential misinformation, hallucinations, factual errors, bias, copyright violations, and other illegitimate or unwanted information that the technology might generate, which might lead to unintended or harmful outcomes. Rather, implement an interim manual review process.

- Progressively deploy automated action only with pre-established accuracy tracking metrics. Ensure that any automated action can quickly and easily be reverted.

- Include LLM model transparency requirements when evaluating third-party applications. First, tools don’t include necessary visibility of users’ actions.

- Consider the security advantages of private hosting of smaller or domain-specific LLMs but work with the infrastructure and application teams to evaluate infrastructural and operational challenges.

- Initiate experiments of “generative cybersecurity AI,” starting with chat assistants for security operations centers (SOCs) and application security. GenAI utilities have made their way into a number of security operations tools from vendors like Microsoft, Google, Sentinel One, and CrowdStrike. These utilities have the potential to improve the productivity and skill set of the average administrator and improve security outcomes and communication.

- The first wave of GenAI implementation within SOC tools (e.g., Microsoft Security CoPilot) consists of conversational prompts replacing existing query-based search features and as the front end for the users. This new interface reduces the skill requirements to use the tool, reducing the length of the learning curve and enabling more users to benefit from the tools. These GenAI features will be increasingly embedded in existing security tools to improve operator proficiency and productivity. The first implementations of these prompts support threat analysis and threat hunting workflows:

- Alert enrichment: Automatically add contextual information to an alert, including threat intelligence or categorization in known frameworks.

- Alert/risk scoring explanation: Refines existing scoring mechanisms to identify false positives or contribute to an existing risk-scoring engine.

- Attack surface/threat summarization: Aggregate multiple alerts and available telemetry information to summarize the content according to the target reader use case.

- Mitigation assistants: Suggest changes in security controls and new or improved detection rules.

- Documentation: Develop, manage, and maintain cohesive security policy documentation and best practices policies and procedures.

Security operation chatbots make it easier to surface insights from SOC tools. But, experienced analysts are still needed to assess the quality of the outputs, detect potential hallucinations, and take appropriate actions according to the organization’s requirements.

Note: Most of these tools are in their early days (both from capability and pricing perspective); they look promising, but watch out!! - The novelty might reside mostly in interactivity, which has value, but the search and analysis capabilities are already available.

4. Develop a Responsible and Trustworthy AI Framework (RTAF)

5. Take an integrated approach to Risk and Security Management (RSM)

6. Re-define and Re-enforce Governance Workflows

- Work with organizational counterparts who have active interests in GenAI, such as those in legal and compliance and lines of business, to formulate user policies, training, and guidance. This will help minimize unsanctioned uses of GenAI and reduce privacy and copyright infringement risks.

- Mandate using RTAF and RSM when developing new first-party or consuming new third-party applications leveraging LLMs and GenAI.

- Build a corporate policy that clearly lists governance rules, includes all necessary workflows, and leverages existing data classification to restrict the uses of sensitive data in prompts and third-party applications.

- Define workflows to inventory, approve and manage consumptions of GenAI. Include “generative AI as a feature” included during a software update of existing products.

- Classify use cases with the highest potential business impacts and identify the teams more likely to initiate a project quickly.

- Define new vendor risk management requirements for providers leveraging GenAI.

- Obtain and verify hosting vendors’ data governance and protection assurances that confidential enterprise information transmitted to its large language model (LLM) — for example, in the form of stored prompts — is not compromised. These assurances are gained through contractual license agreements as confidentiality verification tools that run in hosted environments are not yet available.

- Reinforce methods for how they assess exposure to unpredictable threats and measure changes in the efficacy of their controls, as they cannot guess if and how malicious actors might use GenAI.

- Formulate user policies, training, and guidance to minimize unsanctioned uses of GenAI, privacy, and copyright infringement risks.

- Plan for the necessary impact assessments demanded by privacy and AI regulations, such as the EU’s General Data Protection Regulation (GDPR) and the upcoming Artificial Intelligence Act.

Key Challenges and Recommendations When Embracing Generative Cybersecurity AI

- Generative cybersecurity AI will impact security and risk management teams, but security leaders should also prepare for the “external/indirect” impact of GenAI on security programs, such as assisted RFP analysis, code annotation, and various other content generation and automation affecting compliance, HR and many other teams.

- Privacy and third-party dependencies: As providers rush to release features, many of them leverage a third-party LLM, using an API to interact with a GenAI provider or use third-party libraries or models directly. This new dependency might create privacy issues and third-party risk management challenges.

- Short-term staff productivity: Will the alert enrichment reduce diagnosis fatigue or make it much worse by adding generated content? Junior staff might only get fatigued by the amount of data because they can’t really determine whether it makes sense.

- Costs: Many of the new generative cybersecurity AI features and products are currently in private beta or preview. There is little information on the impact these features will have on security solution prices. Commercial models are generally priced based on the volume of tokens used, and security providers are likely to make their clients pay for them. Training and developing a model is also expensive. The cost of using GenAI might be much higher than the cost of other techniques addressing the same use case.

- Quality: For most of the early implementations of generative cybersecurity AI applications, organizations will aim at “good enough” and basic skill augmentation. In most of my tests/experimentation evaluations of the secure code assistant outputs, quality gives mixed results (50-60% success). Threat intelligence (TI) and alert scoring features might be biased by the model’s training set or impacted by hallucination (fabricated inaccurate outputs).

- Regression to the mean versus state of the art: For specialized use cases, such as incident response against advanced attacks, the quality of the outputs issued by GenAI might not be up to the standard of the most experienced teams. This is because its outputs partially come from crowdsourced training datasets issued from lower maturity practices.

Risks From Unsanctioned Use of LLM Applications

- Sensitive data exposure: Rules on what providers do with the data sent in the prompt will vary per provider. Enterprises have no method to verify if their prompt data remains private, according to vendor claims. They must rely on vendor license agreements to enforce data confidentiality.

- Potential copyright / IP violations: The responsibility for copyright violation coming from the generated outputs (based on training data that organizations can’t identify) falls back on the users and enterprises using it. There might also be rules of usage for the generated output.

- Biased, incomplete, or wrong responses: The risk of “AI hallucinations” (fabricated answers) is real!! Guess what, answers might also be wrong (e.g., practically, technically, syntax, etc.) due to relying on biased, fragmented, or obsolete training datasets.

- LLM content input and output policy violations: Enterprises can control prompt inputs using legacy security controls, but they need another layer of controls to ensure the crafted prompt meets their policy guidelines — for example, around the transmission of questions that violate preset ethical guidelines. LLM outputs must also be monitored and controlled so that they, too, meet company policies — for example, around domain-specific harmful content. Legacy security controls do not provide this type of domain-policy-specific content monitoring.

- Brand / Reputation damage: Beyond the clumsiness of “regenerate response” or “as an AI language model” found in customer-facing content, customers of your organization are likely to expect some level of transparency.

Four Patterns of Consumption of GenAI Applications

1. 3rd applications or agents: Such as the web-based or mobile app versions of ChatGPT (out of the box).

2. Generative AI embedded in enterprise applications: Organizations can directly use commercial applications that have GenAI capabilities embedded within them. An example of this would be using an established software application (see AI Design Patterns for Large Language Models) that now includes LLMs (like Microsoft 365 Copilot or image-generation capabilities like Adobe Firefly).

3. Embed model APIs into custom applications: Enterprises can build their own applications, integrating GenAI via foundation model APIs. Most closed-source GenAI models, such as GPT-3, GPT-4, and PaLM, are available for deployment via cloud APIs. This approach can be further refined by prompt engineering — this could include templates, examples, or the organization’s own data — to better inform the LLM output. An example would be searching a private document database to find relevant data to add to the foundation model’s prompt, augmenting its response with this additional relevant, similar information.

4. Extend GenAI via fine-tuning: Fine-tuning takes an LLM and further trains it on a smaller dataset for a specific use case. For instance, a bank could fine-tune a foundation model with its own terms and policies, customer insights, and risk exposure knowledge into the model and improve its performance on specific use cases. Prompt engineering approaches are limited by the context window of the models, whereas fine-tuning enables a larger corpus of data to be incorporated.

The integration of third-party models and fine-tuning blur the lines between consumption and building your own GenAI application.

Not all LLMs can be fine-tuned.

Custom code to integrate or privately host third-party models requires security teams to expand their controls beyond those required for consumption of third-party AI services, applications, or agents by adding the infrastructure and internal application life cycle attack surfaces.

Seven tactics to gain better control of GenAI consumption

The ability to prevent unsanctioned uses is limited, especially since employees can access GenAI applications and commercial or open-source LLMs from unmanaged devices. Security leaders must acknowledge that blocking known domains and applications is not a sustainable or comprehensive option. It will also trigger “user bypasses,” where employees would share corporate data with unmonitored personal devices to get access to the tools. Many organizations have already shifted from blocking to an “approval” page with a link to the organization’s policy and a form to submit an access request. Organizations can leverage seven tactics to gain better control of GenAI consumption.

1. Develop a Responsible and Trustworthy AI Framework (RTAF)

2. Take an integrated approach to Risk and Security Management (RSM)

3. Define a governance entity and workflow: The immediate objective is to establish an inventory for all businesses and projects and to define acceptable use cases and policy requirements. GenAI applications might require a specific approval workflow and continual usage monitoring where possible, but also periodic user attestations that actual usage conforms to preset intentions.

4. Monitor and block: Organizations should plan to block access to OpenAI domains or, apply some level of data leakage prevention, leveraging existing security controls, or deploy security service edge (SSE) solutions that can intercept web traffic to known applications.

5. Continuous communication on short acceptable use policy: Often, a one- or two-page policy document can be used to share internal contact for approval, highlight the risks of the applications, forbid the use of client data, and request documentation and traceability of outputs generated by these applications.

6. Investigate and Embrace Observability for prompt engineering and API integrations: Intercepting inputs and outputs with a custom-made prompt might improve results but also enable more security controls. Larger organizations might investigate prompt augmentation techniques, such as Retrieval Augmented Generation (RAG).

7. Prefer private hosting options when available, which afford extra security and privacy controls.

Generative AI Consumption Is New Attack Surface

As with any innovation or emerging technology (e.g., Metaverse, cryptocurrency as two recent examples), malicious actors will seek creative methods to exploit the immaturity of GenAI security practices and awareness in novel ways.

The biggest risk of generative AI will be its potential to rapidly create believable fake content to influence popular opinion and provide seemingly legitimate content to add a layer of authenticity to scams.

When securing GenAI consumption, CISOs, and their teams should anticipate the following changes in the attack surface:

- Adversarial prompting: The application of direct and indirect prompts is a prominent attack surface. The notions of “prompt injections” and “adversarial prompting” emerge as threats when consuming third-party GenAI applications or when building your own. Early research work shows how to exploit application prompts. Security teams need to consider this new interface as a potential attack surface.

“Prompt injection” is an adversarial prompting technique. It describes the ability to insert hidden instructions or context in the application’s conversational or system prompt interfaces or in the generated.

- Generative AI as a lure: The popularity of GenAI will result in significant use of the topic as a lure to attract victims and as a new form of potential fraud. Expect counterfeit GenAI apps, browser plug-ins, applications and websites.

- Digital supply chain: Attackers will exploit any weakness in GenAI components that will be widely deployed as microservices in popular business applications. These subroutines could be impacted by several machine learning (ML) attack techniques, such as training data manipulation and other attacks to manipulate the response.

- Regulations Will Impact Generative AI Consumption - Upcoming regulations are a latent threat for organizations that are consuming (and building) AI applications. As laws might change requirements for the providers, organizations from heavily regulated industries or in regions with more stringent privacy laws that are also actively seeking to enact AI regulations might need to put a hold on or revert the consumption of LLM applications. The level of detail might differ depending on the sensitivity of the content. The applications and services might include logging, but organizations might need to implement their own process for the most sensitive content, such as code, rules, and scripts deployed in production.

AI Adds New Attack Surface to Existing Applications

Recommendations for the Security and Safety of AI Applications

At a high level, the Security and Safety aspects of AI applications can be categorized into five categories:

- Explainability and model monitoring

- ModelOps

- AI application security

- Privacy

- Secure Foundation for AI Application Platform

Implementing controls for GenAI applications will heavily depend on the AI model implementation. The scope of security controls will depend on the pattern of AI usage like if an application includes:

- Adapt to hybrid development models, such as front-end wrapping (e.g., prompt engineering), private hosting of third-party models, and custom GenAI applications with in-house model design and fine-tuning.

- Consider the data security options when training and fine-tuning models, notably the potential impact on accuracy or additional costs. This must be weighed against requirements in modern privacy laws, which often include the ability for individuals to request that organizations delete their data.

- Upskill your security champions, software engineering champions, and DevOps champions on 'Secure GenAI coding' practices.

- Update Security by Design, Secure Software Development Lifecycle (Secure SDLC) guidance

- Update Security by Default and Privacy By Design Guidance

- Apply a Responsible and Trustworthy AI Framework (RTAF) and Integrated approach to Risk and Security Management (IRSM) principles.

- Augment Testing Policies to add requirements for testing against adversarial prompts and prompt injections.

- Evaluate and Deploy the ‘Monitor model operations’ toolset.

The Promise of Fully Automated Defense

The primary hurdle in embracing automated responses lies not in technical capabilities but in the accountability of those involved. The opacity surrounding the inner workings and data sources of GenAI raises concerns among security leaders about directly automating actions based on the outputs of generative cybersecurity AI applications.

In the coming 6-9 months, many security providers will introduce features that explore the possibilities of composite AI—integrating multiple AI models and complementing them with other techniques. For instance, a GenAI module might generate remediation strategies upon detecting new malware, and various autonomous agents would execute these actions, which could involve isolating the affected host, removing unopened emails, or sharing indicators of compromise (IOCs) with other organizations. The allure of automated actions to prevent attacks, restrict lateral movement, or dynamically adjust policies is a compelling proposition for enterprises and technology providers, provided it proves effective.

However, the challenge arises when it comes to explaining and documenting the automated responses. This limitation, particularly in critical operations or when dealing with first-line personnel, may hinder but not entirely halt response automation. Mandatory approval workflows and comprehensive documentation will be essential until the organization establishes enough trust in the system to gradually expand the degree of automation. Effective explainability may even emerge as a distinguishing factor for security providers or a legal requirement in certain jurisdictions.

Published at DZone with permission of Gaurav Agarwaal. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments