Effective Bulk Data Import into Neo4j (Part 3)

Here we are, the finale! Today, we take a look at LOAD JSON, a piece of the import puzzle that lets you work with JSON directly instead of converting it to CSV first.


Welcome back to the third part of our series. In part one, we looked at importing a dataset from Stack Overflow and at LOAD CSV, which we used to process the JSON data once it had been converted to CSV. Part two focused on ways to get more out of LOAD CSV. Here, we'll examine the final procedures to complete the import process.

LOAD JSON

One example procedure is LOAD JSON, which uses Java instead of jq to handle the JSON. It takes just two lines of Java to fetch the JSON from a URL, turn it into rows of maps or a single map, and return it to Cypher, after which you can work with the results just as you would with rows from LOAD CSV:

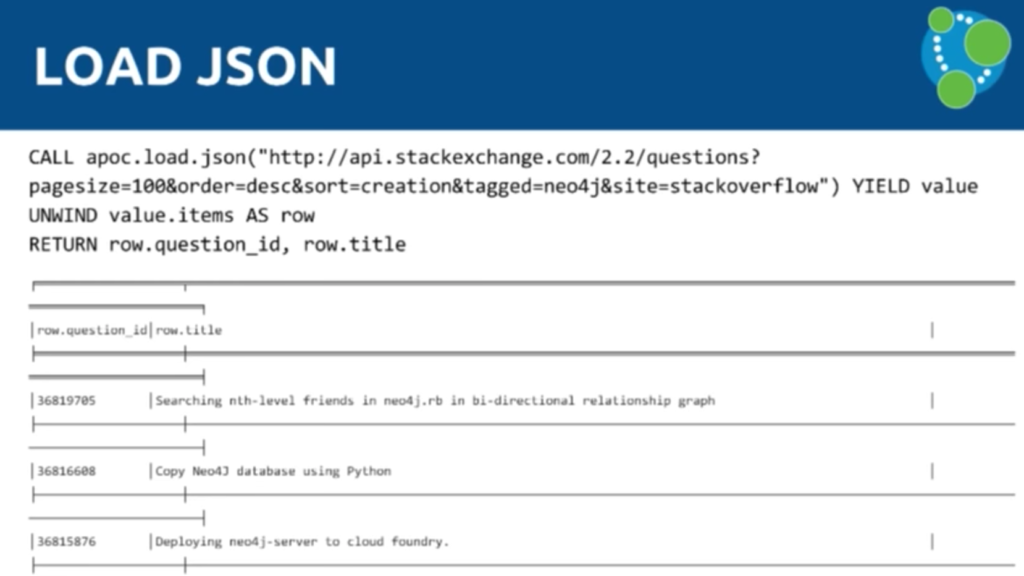
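To make the idea concrete, here is a rough Python analogue of what such a procedure does. It is not the Java code from the screenshot: it parses a JSON document and hands back either a single map or one map per array element, as rows. The helper names `json_to_rows` and `load_json` are hypothetical, and wrapping non-object values as `{"value": ...}` is a choice made only for this sketch.

```python
import json
import urllib.request
from typing import Any, Dict, Iterator


def json_to_rows(text: str) -> Iterator[Dict[str, Any]]:
    """Turn a JSON document into rows of maps, roughly as LOAD JSON does.

    A top-level object becomes a single row; a top-level array becomes one
    row per element. Non-object values are wrapped as {"value": ...} so every
    row is a map (a choice made for this sketch).
    """
    data = json.loads(text)
    items = data if isinstance(data, list) else [data]
    for item in items:
        yield item if isinstance(item, dict) else {"value": item}


def load_json(url: str) -> Iterator[Dict[str, Any]]:
    """Fetch JSON from a URL and yield it as rows (hypothetical helper)."""
    with urllib.request.urlopen(url) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        text = resp.read().decode(charset)
    yield from json_to_rows(text)


if __name__ == "__main__":
    # Each element of the array becomes one row, like one row of LOAD CSV.
    for row in json_to_rows('[{"id": 1, "title": "a"}, {"id": 2, "title": "b"}]'):
        print(row["id"], row["title"])
```

Because each row is already a map, the Cypher that follows can treat it exactly like a row read from a CSV file with headers.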

Next, we call the Stack Overflow API. We pass the URI straight to LOAD JSON, and because this API doesn't need a key, we can simply ask for the questions. It sends back 100 questions tagged neo4j, and the parsed response comes back as value by default. We YIELD that value and use UNWIND to split out the individual items, returning each question's ID and title:
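The same call can be approximated outside the database. In APOC the corresponding Cypher is roughly `CALL apoc.load.json(url) YIELD value UNWIND value.items AS item RETURN item.question_id, item.title`; the Python sketch below mirrors those steps. The endpoint version (`2.2`) and query parameters are assumptions, as is the handling of compression: the Stack Exchange API compresses its responses, so the sketch gunzips the body when it starts with the gzip magic bytes. The `items`, `question_id`, and `title` names are that API's field names.

```python
import gzip
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List, Tuple

# Assumed endpoint; adjust the API version if needed.
API = "https://api.stackexchange.com/2.2/questions"


def questions_url(tag: str = "neo4j", page_size: int = 100) -> str:
    """Build the (assumed) Stack Exchange questions URL for one tag."""
    params = {
        "pagesize": page_size,
        "order": "desc",
        "sort": "creation",
        "tagged": tag,
        "site": "stackoverflow",
    }
    return API + "?" + urllib.parse.urlencode(params)


def fetch_value(url: str) -> Dict[str, Any]:
    """Fetch and parse the response; plays the role of YIELD value."""
    with urllib.request.urlopen(url) as resp:
        body = resp.read()
    # Stack Exchange compresses responses; gzip data starts with 0x1f 0x8b.
    if body[:2] == b"\x1f\x8b":
        body = gzip.decompress(body)
    return json.loads(body.decode("utf-8"))


def unwind_items(value: Dict[str, Any]) -> List[Tuple[int, str]]:
    """Like UNWIND value.items AS item RETURN item.question_id, item.title."""
    return [(item["question_id"], item["title"]) for item in value.get("items", [])]
```

With network access, `unwind_items(fetch_value(questions_url()))` would return the (question ID, title) pairs for the most recent neo4j-tagged questions.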

So instead of LOAD CSV, you can use LOAD JSON, and we end up in exactly the same place: rather than going through a CSV file, we can parse the JSON directly.

And you can apply all the previous tips related to LOAD CSV to LOAD JSON as well.

Now let's explore how to bulk import the initial dataset.

We took the initial dataset, which consists of gigantic XML files, and wrote Java code to turn it into CSV files. Neo4j comes with a bulk data import tool that makes full use of your CPUs and disk I/O to ingest the CSV files as quickly as your machine(s) will allow. On a big box with many CPUs, it can saturate both the CPUs and the disks while importing the data.

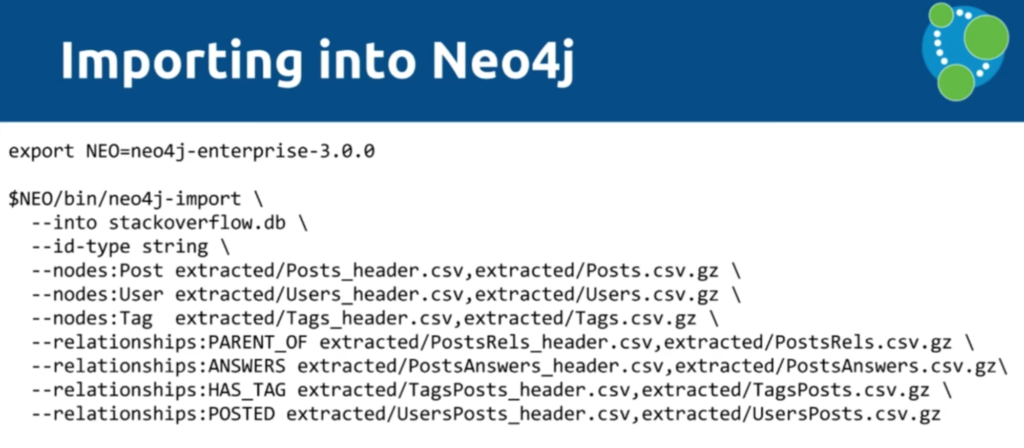

When you download Neo4j 3.0, its bin directory contains a tool called neo4j-import, which lets you build a database offline. As mentioned, it skips the transactional layer and writes the database's store files directly. You can see below which CSV files we're processing:

While this is extremely fast, you first have to get the data into the right format. In the above example, the header for each kind of data, such as posts, lives in its own separate header file.

Often when you get a Hadoop dump, the data is spread across many part files, and you don't want to add a header to each of them. With this tool, you don't have to: you simply specify which file holds the header and which files hold the data for each set of nodes and relationships. Then you tell the tool which folder to create the graph in.
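A minimal sketch of that idea, assuming Hadoop-style output files named `part-00000`, `part-00001`, and so on, plus one separately written header file. The `files_for` and `import_arg` helpers and the naming pattern are hypothetical; they return the header followed by the headerless part files in order, joined with commas the way a single file-list argument is written.

```python
import tempfile
from pathlib import Path
from typing import List


def files_for(header: Path, parts_dir: Path, pattern: str = "part-*") -> List[str]:
    """Return the header file followed by every matching part file, sorted.

    Only the separate header file carries column names; the part files
    stay exactly as Hadoop wrote them.
    """
    parts = sorted(p for p in parts_dir.glob(pattern) if p.is_file())
    if not parts:
        raise FileNotFoundError(f"no files matching {pattern!r} in {parts_dir}")
    return [str(header)] + [str(p) for p in parts]


def import_arg(header: Path, parts_dir: Path) -> str:
    """Comma-separated file list for a --nodes or --relationships option."""
    return ",".join(files_for(header, parts_dir))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "posts").mkdir()
        # _SUCCESS is a Hadoop marker file and is skipped by the pattern.
        for name in ("part-00001", "part-00000", "_SUCCESS"):
            (root / "posts" / name).write_text("1,Hello\n")
        header = root / "posts_header.csv"
        header.write_text("postId:ID(Post),title\n")
        print(import_arg(header, root / "posts"))
```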

We're running this for the whole of the Stack Overflow dataset — all the metadata of Stack Overflow is going to be in a Neo4j database once this script is run.
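As a hedged illustration of how such a run is put together, the helper below builds an argument list for Neo4j 3.0's `neo4j-import` using its `--into`, `--nodes:<Label>`, and `--relationships:<TYPE>` options, each followed by a comma-separated file list with the header file first. The labels, relationship types, file names, and the `build_import_command` helper are hypothetical stand-ins, not the article's actual script.

```python
import shlex
from typing import Dict, List, Sequence


def build_import_command(store_dir: str,
                         nodes: Dict[str, Sequence[str]],
                         relationships: Dict[str, Sequence[str]],
                         tool: str = "bin/neo4j-import") -> List[str]:
    """Assemble a neo4j-import argument list using Neo4j 3.0-style options.

    Each label or relationship type maps to its files, header file first;
    the files are joined with commas into a single argument.
    """
    args = [tool, "--into", store_dir]
    for label, files in nodes.items():
        args += [f"--nodes:{label}", ",".join(files)]
    for rel_type, files in relationships.items():
        args += [f"--relationships:{rel_type}", ",".join(files)]
    return args


if __name__ == "__main__":
    # Hypothetical labels, types and file names for a Stack Overflow-style graph.
    cmd = build_import_command(
        "stackoverflow.db",
        nodes={"Post": ["posts_header.csv", "posts.csv"],
               "User": ["users_header.csv", "users.csv"]},
        relationships={"POSTED": ["posted_header.csv", "posted.csv"]},
    )
    print(shlex.join(cmd))  # subprocess.run(cmd, check=True) would execute it
```

Building a list rather than a single shell string keeps paths with spaces or special characters from being mangled when the command is run.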

Below is what the files look like. This is the format you need in order to define the mapping from CSV columns to the graph: you can declare properties, ID keys, and the start and end IDs of relationships:
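For reference, here is a sketch that writes files in the neo4j-import header format: node headers that declare an ID column in a named ID space (`postId:ID(Post)`) alongside plain and typed properties (`score:int`), and a relationship header with `:START_ID(User)` and `:END_ID(Post)` columns. The column names, ID spaces, and sample rows are invented for illustration and are not the article's Stack Overflow files.

```python
import csv
import tempfile
from pathlib import Path
from typing import Iterable, Sequence


def write_with_header(directory: Path, name: str, header: Sequence[str],
                      rows: Iterable[Sequence[object]]) -> None:
    """Write <name>_header.csv (a single line) and <name>.csv (data only)."""
    with open(directory / f"{name}_header.csv", "w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerow(header)
    with open(directory / f"{name}.csv", "w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)


def write_sample(directory: Path) -> None:
    # Node files: an ID column in a named ID space, plus plain and typed properties.
    write_with_header(directory, "users", ["userId:ID(User)", "name"],
                      [[10, "alice"], [11, "bob"]])
    write_with_header(directory, "posts", ["postId:ID(Post)", "title", "score:int"],
                      [[1, "How do I import CSV?", 5], [2, "LOAD JSON vs jq", 3]])
    # Relationship file: start node from the User ID space, end node from Post.
    write_with_header(directory, "posted", [":START_ID(User)", ":END_ID(Post)"],
                      [[10, 1], [11, 2]])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        write_sample(Path(d))
        for path in sorted(Path(d).iterdir()):
            print(path.name, "->", path.read_text(encoding="utf-8").splitlines()[0])
```

Named ID spaces let a post and a user share the same numeric ID without colliding, which is why the relationship header names the space for each end.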

So the data has gone from SQL Server to CSV, then to XML, and now back to a variant of CSV, using the following Java program (the magic import dust):
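The article's converter is written in Java. As a rough Python analogue, and not the author's program, the sketch below streams a Stack Overflow-style `Posts.xml` with `xml.etree.ElementTree.iterparse` and writes headerless CSV rows. It assumes each post is a `<row>` element with attributes such as `Id`, `Title`, and `Score` (names used in the public Stack Exchange data dump), with output columns chosen to match a separate header like `postId:ID(Post),title,score:int`. Clearing each element after it is written keeps memory use low even for very large files.

```python
import csv
import io
import xml.etree.ElementTree as ET
from typing import IO, Sequence

# Assumed attribute names from a Stack Exchange data dump <row> element.
COLUMNS: Sequence[str] = ("Id", "Title", "Score")


def posts_xml_to_csv(xml_in: IO[bytes], csv_out: IO[str],
                     columns: Sequence[str] = COLUMNS) -> int:
    """Stream <row .../> elements into CSV rows; returns the row count."""
    writer = csv.writer(csv_out)
    count = 0
    for _event, elem in ET.iterparse(xml_in, events=("end",)):
        if elem.tag == "row":
            # Missing attributes (answers have no Title) become empty fields.
            writer.writerow([elem.get(c, "") for c in columns])
            count += 1
            elem.clear()  # release the processed row's attributes
    return count


if __name__ == "__main__":
    sample = b'<posts><row Id="1" Title="Graphs, briefly" Score="7"/></posts>'
    buf = io.StringIO()
    posts_xml_to_csv(io.BytesIO(sample), buf)
    print(buf.getvalue(), end="")
```

For a real dump you would open `Posts.xml` in binary mode and the output file with `newline=""`, then pass both to `posts_xml_to_csv`.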

This is what the generated Posts file looks like:

And below is the output of our Neo4j import script: 30 million nodes, 78 million relationships, and 280 million properties imported in three minutes and eight seconds:

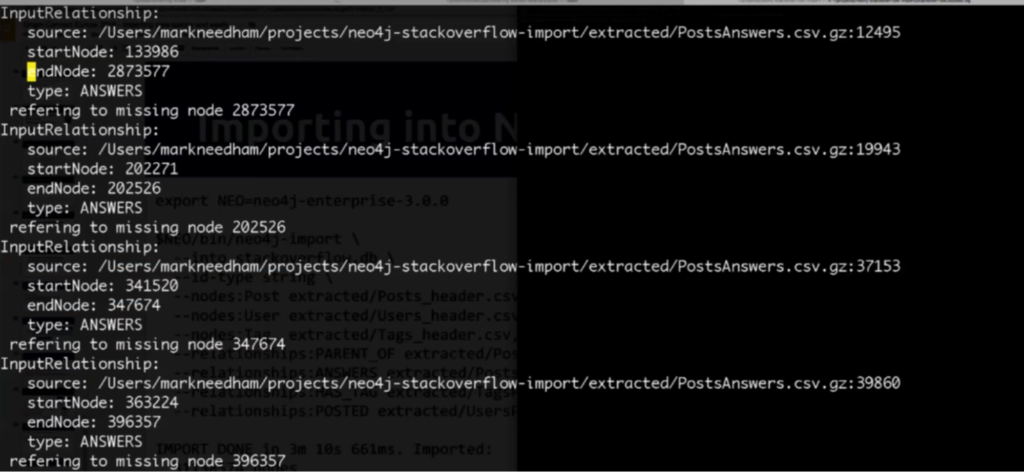
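To put those numbers in perspective, three minutes and eight seconds is 188 seconds, so averaged over the whole run the import handled roughly 160,000 nodes, 415,000 relationships, and 1.5 million properties per second. The small calculation below only reproduces that arithmetic from the figures quoted above; the tool works in stages, so instantaneous rates will differ from these averages.

```python
from typing import Dict

# Figures quoted in the article for the full Stack Overflow import.
ELAPSED_SECONDS = 3 * 60 + 8  # 3 min 8 s = 188 s
COUNTS = {
    "nodes": 30_000_000,
    "relationships": 78_000_000,
    "properties": 280_000_000,
}


def rates_per_second(counts: Dict[str, int], seconds: float) -> Dict[str, float]:
    """Average number of items imported per second for each kind of entity."""
    return {kind: n / seconds for kind, n in counts.items()}


if __name__ == "__main__":
    for kind, rate in rates_per_second(COUNTS, ELAPSED_SECONDS).items():
        print(f"{kind:>13}: {rate:,.0f}/s")
```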

Another nice touch is that the tool writes a file listing anything it couldn't create, which is essentially a report of potentially bad data:
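The tool produces its report during the import itself. As a complementary pre-flight check, which is not a feature of neo4j-import, the hypothetical sketch below scans headerless node and relationship CSV rows and lists duplicate node IDs and relationships whose start or end ID has no matching node, the kind of problem such a report tends to surface. It assumes the ID is the first column of each node file and that start and end IDs are the first two columns of a relationship file.

```python
import csv
import io
from typing import Iterable, List, Sequence, Set, Tuple


def node_ids(rows: Iterable[Sequence[str]]) -> Tuple[Set[str], List[str]]:
    """Collect node IDs from the first column; also report duplicates."""
    seen: Set[str] = set()
    duplicates: List[str] = []
    for row in rows:
        if not row:
            continue  # ignore blank lines
        if row[0] in seen:
            duplicates.append(row[0])
        seen.add(row[0])
    return seen, duplicates


def dangling(rels: Iterable[Sequence[str]], start_ids: Set[str],
             end_ids: Set[str]) -> List[Tuple[int, str, str]]:
    """Return (row number, start, end) for relationships with a missing endpoint."""
    bad: List[Tuple[int, str, str]] = []
    for row_no, row in enumerate(rels, start=1):
        if not row:
            continue
        start, end = row[0], row[1]
        if start not in start_ids or end not in end_ids:
            bad.append((row_no, start, end))
    return bad


if __name__ == "__main__":
    # Hypothetical headerless data files, inlined for the example.
    user_ids, dup_users = node_ids(csv.reader(io.StringIO("10,alice\n11,bob\n10,carol\n")))
    post_ids, _ = node_ids(csv.reader(io.StringIO("1,Hello\n2,World\n")))
    posted = csv.reader(io.StringIO("10,1\n12,2\n11,3\n"))
    print("duplicate user IDs:", dup_users)
    print("dangling POSTED rows:", dangling(posted, user_ids, post_ids))
```

Running a check like this before the import lets you fix the source data rather than discovering the problems in the report afterwards.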

Summary

Remember that the quality of your data is incredibly important:

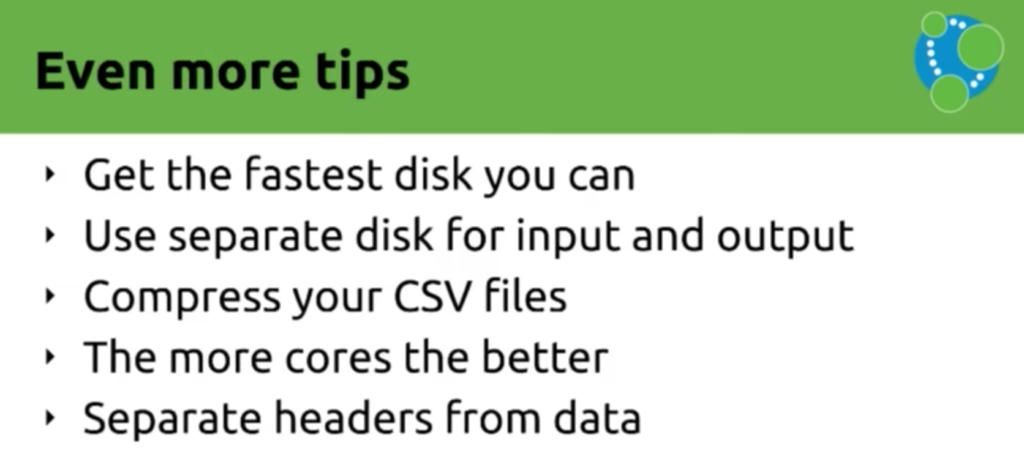

And some final tips to leave you with:

Published at DZone with permission of Mark Needham, DZone MVB. See the original article here.

