Docker Containers and Kubernetes: An Architectural Perspective

You've likely heard of both Docker and Kubernetes in the containerization space. Take a look at how the architectures of the two work together.


Understanding both Docker and Kubernetes is essential if we want to build and run a modern cloud infrastructure. It's even more important to understand how solution architects and developers can use them when designing different cloud-based solutions. Both VMs and containers aim to improve server utilization and to reduce physical server sprawl and the IT department's total cost of ownership. However, adoption of container-based cloud deployment has grown rapidly over the last few years.

Fundamentals of VM and Docker Concepts

Virtual machines provide the ability to emulate a separate OS (the guest), and therefore a separate computer, from right within your existing OS (the host). A virtual machine is made up of the userspace plus the kernel space of an operating system. VMs run on top of a physical machine using a “hypervisor,” also called a virtual machine monitor (VMM), which is a program that allows multiple operating systems to share a single hardware host.

There are two main modes of CPU operation: user mode and kernel mode. When we start a user-mode application, the OS creates a process with its own private virtual address space. Many user-space processes can run at once, and they can be isolated from one another so that if one process crashes, the others are unharmed. However, user processes often need special services from the OS (e.g., I/O or creating a child process). They request these by making system calls, which temporarily switch the process into kernel mode through a mechanism called trapping. Instructions that can only be executed in kernel mode are called privileged instructions; if a process tries to execute one in user mode, the CPU traps to the kernel instead. Sensitive instructions are those that read or change privileged machine state, such as memory-mapping or I/O configuration; a hypervisor can only intercept them if they also trap when executed in user mode.
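The kernel accounts separately for the CPU time a process spends in each mode, which makes the user/kernel split easy to observe. The sketch below is a minimal illustration, assuming a Unix-like system where Python's standard resource module is available; the function name cpu_times_after_syscalls is hypothetical. It issues many small write(2) system calls and reports how much CPU time the process spent in user mode versus kernel mode during that loop.

```python
import os
import resource
import tempfile


def cpu_times_after_syscalls(n_calls=20_000):
    """Return (user_seconds, kernel_seconds) of CPU time used while making n_calls writes.

    ru_utime counts CPU time spent in user mode; ru_stime counts time the kernel
    spent in kernel mode on this process's behalf, e.g. servicing system calls.
    """
    before = resource.getrusage(resource.RUSAGE_SELF)
    with tempfile.TemporaryFile() as f:
        fd = f.fileno()
        for _ in range(n_calls):
            # os.write() is a thin wrapper around the write(2) system call,
            # so each iteration traps into kernel mode and then returns.
            os.write(fd, b"x")
    after = resource.getrusage(resource.RUSAGE_SELF)
    return (after.ru_utime - before.ru_utime, after.ru_stime - before.ru_stime)


if __name__ == "__main__":
    user_s, kernel_s = cpu_times_after_syscalls()
    print(f"user mode: {user_s:.3f}s  kernel mode: {kernel_s:.3f}s")
```

Exact numbers vary by machine and file system; the point is that the time spent inside the system calls is charged to kernel mode, not user mode.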

Containers are a way of packaging software: an application's code, libraries, and dependencies. They provide a lightweight virtual environment that groups and isolates a set of processes and resources such as memory, CPU, and disk from the host and from any other containers. The isolation is designed so that processes inside the container cannot see processes or resources outside it. A container provides operating system-level virtualization by abstracting the userspace. Containers also provide most of the features of virtual machines, such as IP addresses, volume mounting, resource management (CPU, memory, disk), remote shell access (through exec, much as SSH gives access to a VM), OS images, and container images. However, containers typically do not run a full init system, because they are designed to run a single process. Containers generally rely on Linux kernel features, namely namespaces (ipc, uts, mount, pid, network, and user) and cgroups, to provide an abstraction layer on top of an existing kernel instance and create isolated environments similar to virtual machines.

Figure 1: Difference between VMs and Containers

Namespace: A Linux kernel feature that wraps a global system resource, such as the network stack, the mount table, or the set of process IDs, so that the processes inside a namespace see their own isolated instance of that resource. For example, if a filesystem is mounted only in mount namespace “A,” processes running in mount namespace “B” can't see or access it. Similarly, processes in PID namespace “A” can't see or signal processes in PID namespace “B.” The kernel knows which namespaces each process belongs to and, when the process makes a system call, resolves resources relative to those namespaces, so a process can only access resources in its own namespaces. Docker combines several of these namespaces to isolate each container, and this is the first step in creating a container.
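The util-linux unshare command asks the kernel for new namespaces through the same facility (the unshare(2) and clone(2) system calls) that container runtimes such as runc rely on, so it is a convenient way to experiment with namespaces by hand. The sketch below only builds the command line; the helper name unshare_command is hypothetical, while the flags are those of util-linux unshare. Actually running the command requires Linux and either root or unprivileged user namespaces being enabled.

```python
import shlex

# util-linux `unshare` flags for the namespace types Docker uses.
UNSHARE_FLAGS = {
    "mnt": "--mount",
    "uts": "--uts",
    "ipc": "--ipc",
    "net": "--net",
    "pid": "--pid",
    "user": "--user",
}


def unshare_command(namespaces, command):
    """Build an argv list that runs `command` inside new instances of `namespaces`."""
    argv = ["unshare"] + [UNSHARE_FLAGS[ns] for ns in namespaces]
    if "pid" in namespaces:
        # A new PID namespace applies to children, so fork first, and mount a
        # fresh /proc so tools like ps show only the namespace's own processes.
        argv += ["--fork", "--mount-proc"]
    if "user" in namespaces:
        # Map the calling user to root (UID 0) inside the new user namespace.
        argv.append("--map-root-user")
    return argv + list(command)


if __name__ == "__main__":
    argv = unshare_command(
        ["user", "uts", "pid"],
        ["sh", "-c", "hostname demo && hostname && ps aux"],
    )
    print(shlex.join(argv))
    # To actually run it (Linux only; needs root or unprivileged user namespaces):
    # import subprocess; subprocess.run(argv, check=True)
```

When the printed command does run, it would typically report the hostname demo and list only a handful of processes, while the host's own hostname stays unchanged.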

There are several different types of namespaces in a kernel that Docker makes use of, for example:

NET: It provides the container with its own view of the system's network stack (e.g., network interfaces, IP addresses, routing tables, and port numbers).

PID (Process ID): The PID namespace gives each container its own view of process IDs (the PID column of “ps aux” output), including its own init process with PID 1, which is the ancestor of all other processes in the container.

MNT: It provides a container its own view of the “mounts” on the system. So the processes in different mount namespaces have different views of the filesystem hierarchy.

UTS (UNIX Timesharing System): It isolates system identifiers such as the hostname and NIS domain name, so each container can have its own hostname and NIS domain name, independent of other containers and the host system.

IPC (Interprocess Communication): The IPC namespace isolates IPC resources, such as System V IPC objects and POSIX message queues, between processes running in different containers.

USER: This namespace isolates user and group IDs within each container. A process's UID (user ID) and GID (group ID) can differ inside and outside a user namespace, so a process can run as root inside a container while being mapped to an unprivileged user outside it.
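On Linux, each process's namespace memberships are exposed as symbolic links under /proc/<pid>/ns, whose targets look like pid:[4026531836]. Two processes on the same host are in the same namespace when these links show the same inode number (strictly, the device ID must match as well). The sketch below reads and parses those links; the helper names parse_ns_link and namespaces_of are hypothetical.

```python
import os
import re

# Targets of /proc/<pid>/ns/* links look like "pid:[4026531836]".
_NS_LINK = re.compile(r"^(?P<type>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a namespace link target into (namespace type, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link target: {target!r}")
    return match.group("type"), int(match.group("inode"))


def namespaces_of(pid="self"):
    """Map each entry in /proc/<pid>/ns to its namespace inode number (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        result[entry] = inode
    return result
```

For example, comparing namespaces_of() for a shell on the host with namespaces_of(pid) run as root for a container's main process (whose host PID is printed by docker inspect --format '{{.State.Pid}}' <container>) should show different numbers for net, pid, mnt, uts, and ipc.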

Control groups (cgroups): A Linux kernel feature that limits, accounts for, and isolates the consumption of resources such as CPU, memory, disk I/O, and network. Cgroups also ensure that a single container can't exhaust one of those resources and bring the entire system down.
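With cgroup v2, limits are applied by writing values into files under /sys/fs/cgroup/<group>/ and adding process IDs to that group's cgroup.procs file. The sketch below only computes the values for two of those files, cpu.max (a quota and a period in microseconds) and memory.max (a byte count); the helper names are hypothetical, and the 100 ms default period is the one Docker's --cpus option assumes.

```python
# Default CFS period (100 ms, in microseconds) used when translating a CPU count.
DEFAULT_PERIOD_US = 100_000


def cpu_max(cpus=None, period_us=DEFAULT_PERIOD_US):
    """Value for a cgroup v2 'cpu.max' file: '<quota> <period>' in microseconds.

    A quota of cpus * period lets the group use `cpus` CPUs' worth of time in
    every period; the special quota 'max' means no limit.
    """
    if cpus is None:
        return f"max {period_us}"
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return f"{round(cpus * period_us)} {period_us}"


def memory_max(limit_bytes=None):
    """Value for a cgroup v2 'memory.max' file: a byte count, or 'max' for no limit."""
    return "max" if limit_bytes is None else str(int(limit_bytes))
```

For instance, a container started with docker run --cpus=1.5 --memory=256m on a cgroup v2 host would be expected to end up with cpu.max set to 150000 100000 and memory.max set to 268435456.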

Docker Containers

Docker is a container-based technology, and containers are, in essence, the user space of the operating system. At the low level, a container is just a set of processes that are isolated from the rest of the system, running from a distinct image that provides all the files necessary to support those processes. It is built for running applications. Docker containers running on a host share the host OS kernel. Docker is an open-source project based on Linux containers: it uses Linux kernel features such as namespaces and control groups to create containers on top of an operating system. Docker wraps an application's software in an isolated box together with everything it needs to run: OS user-space files (but not a kernel), application code, runtime, system tools, and libraries. Docker containers are built from Docker images, and images are read-only, so Docker adds a read-write filesystem layer on top of the image's read-only filesystem to create a container. Docker is currently the most popular container platform; the following sections describe its architecture components and key use cases.
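The layering rules can be modeled with Python's collections.ChainMap, which looks a key up in a sequence of mappings in order and sends all writes to the first one. The toy ContainerFS class below (a hypothetical name) treats each layer as a dict from path to contents: reads fall through from the container's read-write layer to the read-only image layers, writes land only in the read-write layer, and deletions record a whiteout marker (the term overlayfs uses) instead of touching the image. It is a conceptual sketch, not how storage drivers such as overlay2 are implemented.

```python
from collections import ChainMap

_WHITEOUT = object()  # marks a file deleted in the read-write layer
_MISSING = object()


class ContainerFS:
    """Toy model: one writable layer stacked over read-only image layers."""

    def __init__(self, *image_layers):
        # Lookups search the writable layer first, then image layers top-down.
        self.rw_layer = {}
        self._view = ChainMap(self.rw_layer, *image_layers)

    def read(self, path):
        value = self._view.get(path, _MISSING)
        if value is _MISSING or value is _WHITEOUT:
            raise FileNotFoundError(path)
        return value

    def write(self, path, data):
        # ChainMap writes always go to the first mapping, so the image layers
        # are never modified (copy-on-write at whole-file granularity).
        self._view[path] = data

    def delete(self, path):
        self.read(path)  # raises FileNotFoundError if the file isn't visible
        self.rw_layer[path] = _WHITEOUT  # hide any copies in lower layers

    def listdir(self):
        return sorted(p for p, v in self._view.items() if v is not _WHITEOUT)
```

Because image layers are never modified, many containers can share the same image layers while each keeps its own small read-write layer.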

Components of Docker:

Docker is composed of six different components namely:

Docker Client

Docker Daemon

Docker Images

Docker Registries

Containers

Docker Engine

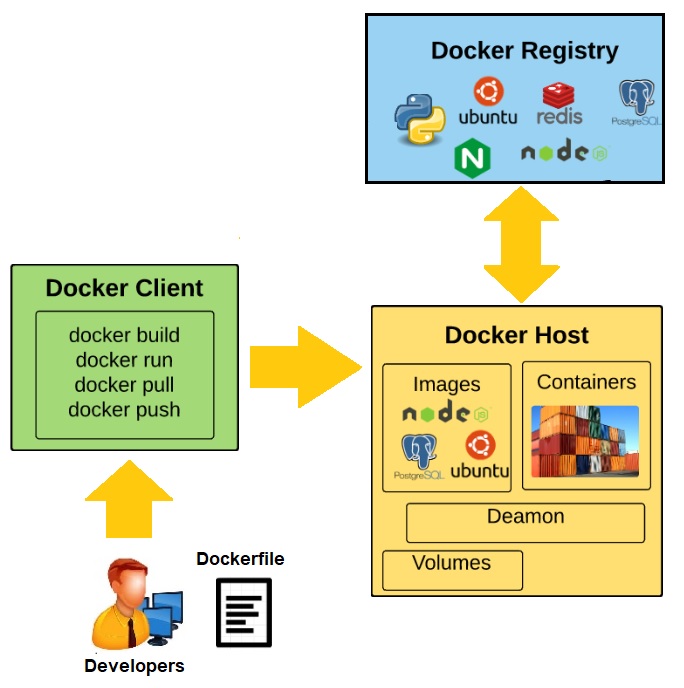

Figure 2: High-Level Components of Docker Container

Docker Client: This is the CLI (Command Line Interface) tool used to configure and interact with Docker. Whenever developers or users run commands such as docker run, the client sends them to the Docker daemon (dockerd), which carries them out. The docker command uses the Docker API, and the Docker client can communicate with more than one daemon.
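By default the Docker daemon listens on the Unix socket /var/run/docker.sock, and the Docker Engine API is plain HTTP over that socket. The sketch below uses only the Python standard library to send a GET request the way the docker client does; UnixHTTPConnection and docker_api_get are hypothetical helper names, and the calling process needs permission to open the socket (typically root or membership in the docker group).

```python
import http.client
import json
import socket

DEFAULT_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=10):
        # "localhost" is only used to fill in the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path, socket_path=DEFAULT_SOCKET):
    """Send GET <path> to the Docker daemon and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"{resp.status} {resp.reason}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, docker_api_get("/version") returns the daemon's version information and docker_api_get("/containers/json") lists running containers, the same data that docker version and docker ps display.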

Docker Daemon: This is the Docker server which runs as the daemon. This daemon listens to API requests and manages Docker objects (images, containers, networks, and volumes). A daemon can also communicate with other daemons to manage Docker services.

Images: An image is the read-only template (snapshot) used to create a Docker container, and images can be pushed to and pulled from public or private repositories. Images are the build component of Docker. They are typically much smaller and faster to start than virtual machine images, because they do not include an OS kernel.

Dockerfile: A text file of instructions used to build images.
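Because images and their layers are immutable, registries and the Docker daemon identify them by content digest: the string sha256: followed by the hex SHA-256 hash of the content. The sketch below (hypothetical helper names) computes and checks such a digest, which is what lets a client verify that the blob it pulled is exactly the one it asked for.

```python
import hashlib


def content_digest(blob):
    """Return an OCI-style digest: 'sha256:' followed by the hex SHA-256 of the bytes."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_blob(blob, expected_digest):
    """True if downloaded bytes match the digest they were requested by."""
    return content_digest(blob) == expected_digest
```

Pulling by digest, for example docker pull ubuntu@sha256:<digest>, therefore always yields the same image content, whereas a tag such as latest can be moved to point at a new image.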

Containers: Applications run in containers, and a container is a running instance of an image. We can create, run, stop, move, or delete a container using the CLI, and we can also connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.
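Each of these CLI operations is a call to the Docker Engine API. The sketch below lists the requests behind a typical create, start, stop, commit, and remove sequence as (method, path, JSON body) tuples; the endpoint paths come from the Engine API, while the helper name lifecycle_requests is hypothetical and nothing is actually sent. Container endpoints accept either a container ID or a container name.

```python
from urllib.parse import urlencode


def lifecycle_requests(image, name, new_repo):
    """Engine API calls behind docker create/start/stop/commit/rm, in order."""
    return [
        # docker create --name <name> <image>
        ("POST", "/containers/create?" + urlencode({"name": name}), {"Image": image}),
        # docker start <name>
        ("POST", f"/containers/{name}/start", None),
        # docker stop <name>
        ("POST", f"/containers/{name}/stop", None),
        # docker commit <name> <new_repo>: a new image from the container's current state
        ("POST", "/commit?" + urlencode({"container": name, "repo": new_repo}), None),
        # docker rm <name>
        ("DELETE", f"/containers/{name}", None),
    ]
```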

Docker Registries: This is the distribution component of Docker: a central repository that stores Docker images. Docker Hub and Docker Cloud are public registries that anyone can use, and Docker is configured to look for images on Docker Hub by default. When we use the docker pull or docker run commands, the required images are pulled from our configured registry. When we use the docker push command, our image is pushed to our configured registry. To upgrade an application, we can pull the new version of its image and redeploy the containers.
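Short image names are expanded before the client contacts a registry, which is how a bare name such as nginx ends up being pulled from Docker Hub. The sketch below (hypothetical normalize_reference) applies a simplified version of those rules; it does not validate names and omits edge cases that the real reference parser handles.

```python
DEFAULT_REGISTRY = "docker.io"


def normalize_reference(ref):
    """Expand a short image reference to registry/repository[:tag][@digest].

    Simplified rules: the first path component is a registry host only if it
    contains '.' or ':' or is 'localhost'; otherwise Docker Hub is assumed and
    single-component names live under 'library/'. With neither a tag nor a
    digest, the tag defaults to 'latest'.
    """
    name, _, digest = ref.partition("@")
    # A ':' after the last '/' starts the tag; one before it is a registry port.
    last_slash, colon = name.rfind("/"), name.rfind(":")
    if colon > last_slash:
        name, tag = name[:colon], name[colon + 1:]
    else:
        tag = ""
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = DEFAULT_REGISTRY, name
        if "/" not in repo:
            repo = "library/" + repo
    if not tag and not digest:
        tag = "latest"
    result = f"{registry}/{repo}"
    if tag:
        result += ":" + tag
    if digest:
        result += "@" + digest
    return result
```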

Docker Engine: The combination of the Docker daemon, the REST API, and the CLI client.

Advantages of Docker

Easy to move apps between cloud platforms

Distribute and share content

Accelerate developer onboarding

Empower developer creativity

Eliminate environment inconsistencies

Ship software faster

Easy to scale an application

Remediate issues efficiently

Lightweight

Fast to start (a few seconds)

Easy to migrate

Very little OS maintenance

Docker Key Use Cases

Modernize Traditional Apps

DevOps (CI/CD)

Hybrid Cloud

Microservices

Infrastructure Optimization

Big Data

Docker Container Orchestration Using Kubernetes

In a Docker cluster environment, many tasks need to be managed, such as scheduling, communication, and scaling. Any of the orchestration tools on the market can take care of these, but two industry-recognized ones are Docker Swarm, Docker's native orchestration tool, and Kubernetes, one of the highest-velocity projects in open-source history.

Kubernetes is an open-source container management (orchestration) tool. Its container management responsibilities include deploying containers, scaling them up and down, and load balancing across them. Managed, cloud-based Kubernetes services, sometimes called KaaS (Kubernetes as a Service), are also available, such as AWS EKS, Azure AKS, and GCP GKE; AWS ECS, by contrast, is Amazon's own container orchestrator rather than a Kubernetes service.
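In Kubernetes, deployment and scaling are usually expressed declaratively with a Deployment object. The sketch below builds a minimal apps/v1 Deployment as a Python dict and prints it as JSON, which kubectl accepts as well as YAML; the helper name deployment_manifest and the values (web, nginx:1.25, three replicas, port 80) are illustrative assumptions.

```python
import json


def deployment_manifest(name, image, replicas=3, container_port=80):
    """Build a minimal apps/v1 Deployment as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": container_port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```

Saved to web.json, the output could be applied with kubectl apply -f web.json, scaled with kubectl scale deployment/web --replicas=5, and load-balanced behind a Service created with kubectl expose deployment web --port=80.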

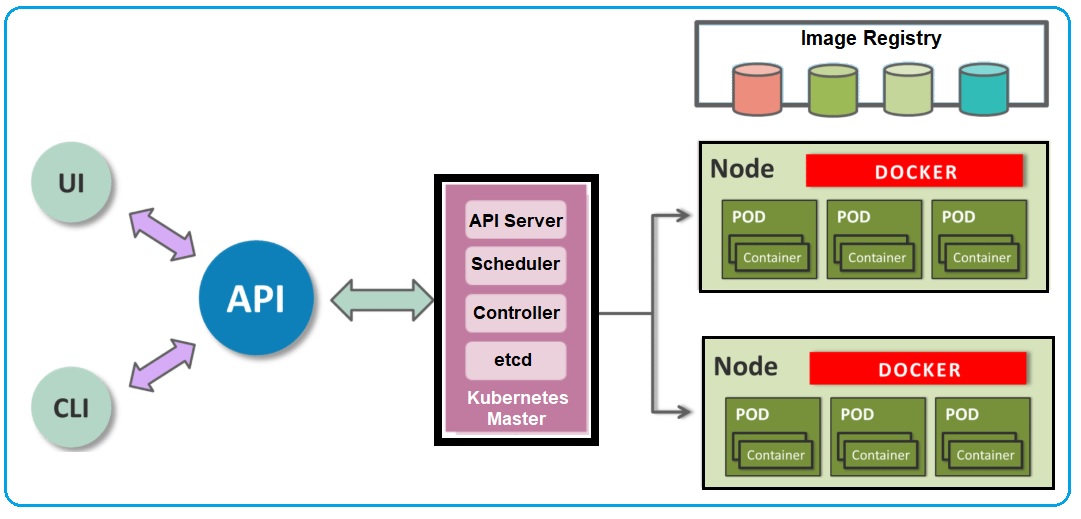

Figure 3: High-Level view of Kubernetes Architecture

Master Components: Master components provide the cluster's control plane and are responsible for global decisions about the cluster (such as scheduling) and for detecting and responding to cluster events.

- API Server: Exposes the Kubernetes API and is the front end of the Kubernetes control plane. The API server is the entry point for all the REST commands used to control the cluster. It processes REST requests, validates them, and executes the bound business logic, persisting the resulting state in etcd.

- etcd: A simple, distributed, consistent key-value store in which all cluster data is stored. In general, etcd is used for shared configuration and service discovery. It exposes an API for CRUD and watch operations (gRPC in etcd v3, with an HTTP/JSON gateway; etcd v2 offered a REST API).

- Controller-manager: Runs the controllers that handle routine tasks in the cluster, e.g., the Replication Controller and the Endpoints Controller. Each controller uses the API server to watch the shared state of the cluster and makes corrective changes to move the current state toward the desired state.

- Scheduler: The scheduler knows the resources available on each node in the cluster. It watches for newly created pods that have no node assigned and selects a node for each of them to run on.
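The controller and scheduler roles described in this list can be sketched as two small functions: a ReplicaSet-style reconcile step that compares the desired replica count with the observed pods, and a scheduling step that first filters out nodes that cannot fit a pod's requests and then scores the rest. This is a hypothetical, heavily simplified model; the real kube-scheduler runs many filter and score plugins, and real controllers choose which pods to delete far more carefully.

```python
def reconcile(desired_replicas, current_pods):
    """One pass of a ReplicaSet-style control loop.

    Compares the desired replica count with the names of the pods that exist
    and returns the corrective actions needed to close the gap.
    """
    missing = desired_replicas - len(current_pods)
    if missing > 0:
        return [("create", None)] * missing
    # Too many (or exactly enough): delete the surplus, if any. This sketch just
    # sorts names; real controllers rank pods before choosing victims.
    return [("delete", name) for name in sorted(current_pods)[desired_replicas:]]


def pick_node(cpu_millicores, memory_mib, nodes):
    """Choose a node for a pod. `nodes` maps name -> (free millicores, free MiB).

    Filter: drop nodes that cannot fit the pod's requests.
    Score: prefer the node with the most CPU left after placement.
    Returns None when no node fits (the pod would stay Pending).
    """
    feasible = {
        name: (cpu, mem)
        for name, (cpu, mem) in nodes.items()
        if cpu >= cpu_millicores and mem >= memory_mib
    }
    if not feasible:
        return None
    return max(feasible, key=lambda name: feasible[name][0] - cpu_millicores)
```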

Node Components: Node components run on every node, maintain running pods and provide them the Kubernetes runtime environment.

- kubelet: The primary node agent. It watches for pods that have been assigned to its node and performs the actions that keep them healthy and functioning, e.g., mounting pod volumes, downloading pod secrets, running containers, and performing health checks. The kubelet gets each pod's configuration from the API server and ensures that the described containers are up and running. It is the worker service responsible for communicating with the master node, and it reports node and pod status back through the API server. It does not talk to etcd directly; only the API server reads from and writes to etcd.

- kube-proxy: Enables the Kubernetes service abstraction by maintaining network rules on the host and performing connection forwarding. kube-proxy acts as a network proxy and a load balancer for a service on a single worker node. It takes care of the network routing for TCP and UDP packets.

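- Docker: The container runtime that actually runs containers. Docker runs on each worker node, runs the containers of the pods assigned to it, and takes care of downloading images and starting containers. The kubelet drives the runtime through the Container Runtime Interface (CRI); since Kubernetes v1.24 removed the built-in Docker integration (dockershim), CRI runtimes such as containerd or CRI-O are typically used, and images built with Docker still run on them.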
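In its iptables mode, kube-proxy spreads new connections for a Service across its endpoints using a chain of rules with the iptables statistic match in random mode: with n endpoints, the first rule matches with probability 1/n, the next matches 1/(n-1) of the remaining traffic, and so on until the last rule, which always matches. The sketch below (hypothetical helper names) computes those probabilities with exact fractions and checks that each endpoint ends up with an equal share.

```python
from fractions import Fraction


def iptables_probabilities(n_endpoints):
    """Per-rule match probabilities for spreading traffic across n endpoints.

    Rules are evaluated in order; rule i matches with probability 1/(n - i),
    so the last rule always matches.
    """
    return [Fraction(1, n_endpoints - i) for i in range(n_endpoints)]


def effective_shares(probabilities):
    """Overall chance that each rule ends up handling a new connection."""
    shares, remaining = [], Fraction(1)
    for p in probabilities:
        shares.append(remaining * p)  # reached this rule and matched it
        remaining *= 1 - p            # reached this rule but fell through
    return shares
```

Using decreasing per-rule probabilities rather than a flat 1/n per rule is what makes the overall distribution uniform, since each later rule only sees the traffic the earlier rules let through.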

Opinions expressed by DZone contributors are their own.
