Building the World's Most Resilient To-Do List Application With Node.js, K8s, and Distributed SQL

This article demonstrates how you can build cloud-native Node.js applications with Kubernetes (K8s) and distributed SQL.

Developing scalable and reliable applications is a labor of love. A cloud-native system might consist of unit tests, integration tests, build tests, and a full pipeline for building and deploying applications at the click of a button.

A number of intermediary steps might be required to ship a robust product. As distributed, containerized applications have flooded the market, container orchestration tools like Kubernetes have proliferated alongside them. Kubernetes allows us to run distributed applications across a cluster of nodes with fault tolerance, self-healing, and load balancing, plus many other features.

Let’s explore some of these tools by building a distributed to-do list application in Node.js, backed by the YugabyteDB distributed SQL database.

Getting Started

A production deployment will likely involve setting up a full CI/CD pipeline to push containerized builds to the Google Container Registry to run on Google Kubernetes Engine or similar cloud services.

For demonstration purposes, let’s focus on running a similar stack locally. We’ll develop a simple Node.js server, build it as a Docker image, and run it on Kubernetes on our own machine.

We’ll use this Node.js server to connect to a YugabyteDB distributed SQL cluster and return records from a REST endpoint.

Installing Dependencies

We begin by installing some dependencies for building and running our application.

- Docker: used to build container images, which we’ll host locally.
- Minikube: creates a local Kubernetes cluster for running our distributed application.

YugabyteDB Managed

Next, we create a YugabyteDB Managed account and spin up a cluster in the cloud. YugabyteDB is PostgreSQL-compatible, so you can also run a PostgreSQL database elsewhere or run YugabyteDB locally if desired.

For high availability, I’ve created a 3-node database cluster running on AWS, but for demonstration purposes, a free single-node cluster works fine.
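Why three nodes? YugabyteDB replicates data with the Raft consensus protocol, so a write must be acknowledged by a majority (a quorum) of replicas. The small sketch below, assuming the cluster's replication factor equals its node count (3 for my cluster, 1 for the free tier), shows how many node failures each configuration can tolerate. The toleratedFailures helper is hypothetical and only does the arithmetic.

```javascript
// Hypothetical helper: how many replicas a Raft group of size `rf`
// can lose while still holding a majority (quorum) for writes.
function toleratedFailures(rf) {
  if (!Number.isInteger(rf) || rf < 1) {
    throw new RangeError("replication factor must be a positive integer");
  }
  const quorum = Math.floor(rf / 2) + 1; // majority needed to commit a write
  return rf - quorum;
}

for (const rf of [1, 3, 5]) {
  console.log(`RF=${rf}: tolerates ${toleratedFailures(rf)} node failure(s)`);
}
```

A single-node cluster tolerates zero failures, which is fine for a demo; a three-node cluster keeps serving reads and writes with one node down.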

Seeding Our Database

Once our database is up and running in the cloud, it’s time to create some tables and records. YugabyteDB Managed has a cloud shell that can be used to connect via the web browser, but I’ve chosen to use the YugabyteDB client shell on my local machine.

Before connecting, we need to download the root certificate from the cloud console.

I’ve created a SQL script, db.sql, that creates a todos table and inserts some records.

```sql
CREATE TYPE todo_status AS ENUM ('complete', 'in-progress', 'incomplete');

CREATE TABLE todos (
  id serial PRIMARY KEY,
  description varchar(255),
  status todo_status
);

INSERT INTO
  todos (description, status)
VALUES
  ('Learn how to connect services with Kubernetes', 'incomplete'),
  ('Build container images with Docker', 'incomplete'),
  ('Provision multi-region distributed SQL database', 'incomplete');
```

We can use this script to seed our database.

```shell
> ./ysqlsh "user=admin \
  host=<DATABASE_HOST> \
  sslmode=verify-full \
  sslrootcert=$PWD/root.crt" -f db.sql
```

With our database seeded, we’re ready to connect to it via Node.js.
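If you would rather seed from Node.js than from ysqlsh, the same rows can go in through a single parameterized INSERT using the numbered placeholders ($1, $2, …) that node-postgres accepts, which keeps values out of the SQL string. The buildInsert helper below is a hypothetical sketch, not part of either driver’s API; it only builds the query text and the values array.

```javascript
// Hypothetical helper: build one multi-row INSERT for the todos table
// with numbered placeholders ($1, $2, ...) as used by node-postgres.
function buildInsert(todos) {
  if (todos.length === 0) {
    throw new Error("at least one todo is required");
  }
  const values = [];
  const rows = todos.map(({ description, status }) => {
    values.push(description, status);
    const n = values.length;
    return `($${n - 1}, $${n})`; // placeholders for this row's two values
  });
  return {
    text: `INSERT INTO todos (description, status) VALUES ${rows.join(", ")}`,
    values,
  };
}

const seed = buildInsert([
  { description: "Build container images with Docker", status: "incomplete" },
]);
console.log(seed.text); // INSERT INTO todos (description, status) VALUES ($1, $2)
```

With the Pool created in index.js, the result would be passed as pool.query(seed.text, seed.values); PostgreSQL infers the todo_status type for the status parameters from the column.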

Build a Node.js Server

It’s simple to connect to our database with the node-postgres driver. YugabyteDB has built on top of this library with the YugabyteDB Node.js Smart Driver, which comes with additional features that unlock the power of distributed SQL, including load balancing and topology awareness.

Install Express and the Smart Driver:

```shell
> npm install express
> npm install @yugabytedb/pg
```

Then create index.js with a single /todos endpoint:

```javascript
const express = require("express");
const fs = require("fs");
const { Pool } = require("@yugabytedb/pg");

const App = express();

// Connection settings for the YugabyteDB cluster (YSQL listens on port 5433).
const config = {
  user: "admin",
  host: "<DATABASE_HOST>",
  password: "<DATABASE_PASSWORD>",
  port: 5433,
  database: "yugabyte",
  min: 5,
  max: 10,
  idleTimeoutMillis: 5000,
  connectionTimeoutMillis: 5000,
  // Verify the server certificate against the root certificate downloaded earlier.
  ssl: {
    rejectUnauthorized: true,
    ca: fs.readFileSync("./root.crt").toString(),
    servername: "<DATABASE_HOST>",
  },
};

const pool = new Pool(config);

// Return every row in the todos table as JSON.
App.get("/todos", async (req, res) => {
  try {
    const data = await pool.query("select * from todos");
    res.json({ status: "OK", data: data?.rows });
  } catch (e) {
    console.log("error in selecting todos from db", e);
    // A failed query is a server-side error: respond with 500 and only the message.
    res.status(500).json({ status: "ERROR", error: e.message });
  }
});

App.listen(8000, () => {
  console.log("App listening on port 8000");
});
```

Containerizing Our Node.js Application

To run our Node.js application in Kubernetes, we first need to build a container image. Create a Dockerfile in the same directory.

```dockerfile
FROM node:latest
WORKDIR /app
COPY . .
RUN npm install
EXPOSE 8000
ENTRYPOINT [ "npm", "start" ]
```

All of our server dependencies will be built into the container image. To run our application using the npm start command, update your package.json file with the start script.

```
…
"scripts": {
  "start": "node index.js"
}
…
```

Now, we’re ready to build our image with Docker.

```
> docker build -t todo-list-app .
Sending build context to Docker daemon 458.4MB
Step 1/6 : FROM node:latest
---> 344462c86129
Step 2/6 : WORKDIR /app
---> Using cache
---> 49f210e25bbb
Step 3/6 : COPY . .
---> Using cache
---> 1af02b568d4f
Step 4/6 : RUN npm install
---> Using cache
---> d14416ffcdd4
Step 5/6 : EXPOSE 8000
---> Using cache
---> e0524327827e
Step 6/6 : ENTRYPOINT [ "npm", "start" ]
---> Using cache
---> 09e7c61855b2
Successfully built 09e7c61855b2
Successfully tagged todo-list-app:latest
```

Our application is now packaged and ready to run in Kubernetes.
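One thing to notice: index.js hardcodes the database host and password, so they are baked into the image at build time. A common alternative is to read them from environment variables, which a Kubernetes Deployment can set in its env section (for example, from a Secret). Here is a minimal sketch; the variable names DB_HOST, DB_PASSWORD, DB_USER, DB_PORT, DB_NAME, and DB_CA_PATH are hypothetical and not used by the original app.

```javascript
const fs = require("fs");

// Hypothetical variant of the pool config in index.js that reads connection
// settings from the environment instead of hardcoding them.
// `readFile` is injectable so the function can be exercised without a real cert.
function buildConfig(env = process.env, readFile = fs.readFileSync) {
  const required = ["DB_HOST", "DB_PASSWORD"];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`missing environment variables: ${missing.join(", ")}`);
  }
  return {
    user: env.DB_USER || "admin",
    host: env.DB_HOST,
    password: env.DB_PASSWORD,
    port: Number(env.DB_PORT || 5433),
    database: env.DB_NAME || "yugabyte",
    min: 5,
    max: 10,
    idleTimeoutMillis: 5000,
    connectionTimeoutMillis: 5000,
    ssl: {
      rejectUnauthorized: true,
      ca: readFile(env.DB_CA_PATH || "./root.crt").toString(),
      servername: env.DB_HOST,
    },
  };
}
```

In index.js this would replace the literal object, as in new Pool(buildConfig()), and failing fast on missing variables makes a misconfigured pod crash visibly instead of serving errors.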

Running Kubernetes Locally With Minikube

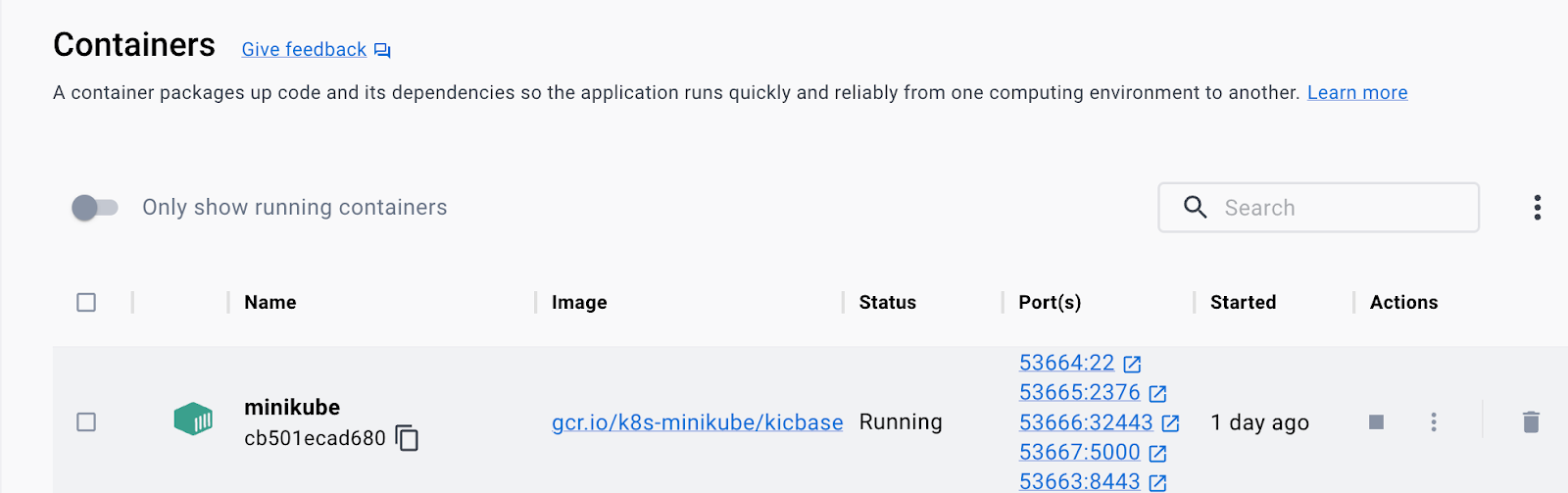

To run a Kubernetes environment locally, we’ll run Minikube, which creates a Kubernetes cluster inside of a Docker container running on our machine.

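```shell
> minikube start
```

That was easy! Minikube runs its own container runtime, so it can’t see the todo-list-app image we built with our local Docker daemon until we load it with `minikube image load todo-list-app` (alternatively, run `eval $(minikube docker-env)` and rebuild the image against Minikube’s Docker daemon). Now we can use the kubectl command-line tool to deploy our application from a Kubernetes configuration file.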

Deploying to Kubernetes

First, we create a configuration file called kubeConfig.yaml, which will define the components of our cluster. Kubernetes Deployments keep a specified number of pods running and up to date. Here we’re running three replicas of the todo-list-app image that we built with Docker; imagePullPolicy: Never tells Kubernetes to use the local image rather than pulling it from a registry.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: todo-app-deployment
  labels:
    app: todo-app
spec:
  selector:
    matchLabels:
      app: todo-app
  replicas: 3
  template:
    metadata:
      labels:
        app: todo-app
    spec:
      containers:
        - name: todo-server
          image: todo-list-app
          ports:
            - containerPort: 8000
          imagePullPolicy: Never
```

In the same file, we’ll create a Kubernetes Service, which sets the networking rules for our application and exposes it to clients.

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: todo-app-service
spec:
  type: NodePort
  selector:
    app: todo-app
  ports:
    - name: todo-app-service-port
      protocol: TCP
      port: 8000
      targetPort: 8000
      nodePort: 30100
```

Let’s use our configuration file to create our todo-app-deployment and todo-app-service. This gives us three replicas behind a single service, with Kubernetes routing traffic to them and replacing any pod that fails!

```shell
> kubectl create -f kubeConfig.yaml
```

Accessing Our Application in Minikube

```
> minikube service todo-app-service --url
Starting tunnel for service todo-app-service.
Because you are using a Docker driver on darwin, the terminal needs to be open to run it.
```

We can find the tunnel port by executing the following command.

```
> ps -ef | grep docker@127.0.0.1
503 2363 2349 0 9:34PM ttys003 0:00.01 ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no -N docker@127.0.0.1 -p 53664 -i /Users/bhoyer/.minikube/machines/minikube/id_rsa -L 63650:10.107.158.206:8000
```

The -L 63650:10.107.158.206:8000 argument shows that our tunnel forwards local port 63650 to port 8000 on 10.107.158.206 inside the cluster. We can access our /todos endpoint at http://127.0.0.1:63650/todos in the browser or via a client.
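Rather than reading the port off the ps output by eye, you can pull it out of the SSH -L local_port:host:host_port forwarding argument. A small sketch; parseTunnelPort is a hypothetical helper, and it assumes the ps line has the same shape as the one above (an IPv4 host, as in this example).

```javascript
// Hypothetical helper: extract the local port from an ssh "-L local:host:remote"
// port-forwarding argument, such as the one Minikube's tunnel uses.
function parseTunnelPort(psLine) {
  const match = psLine.match(/-L\s+(\d+):[^:\s]+:(\d+)/);
  if (!match) {
    return null; // no port-forwarding argument found
  }
  return Number(match[1]);
}

const line =
  "503 2363 2349 0 9:34PM ttys003 0:00.01 ssh -o UserKnownHostsFile=/dev/null " +
  "-o StrictHostKeyChecking=no -N docker@127.0.0.1 -p 53664 " +
  "-i /Users/bhoyer/.minikube/machines/minikube/id_rsa -L 63650:10.107.158.206:8000";

console.log(`http://127.0.0.1:${parseTunnelPort(line)}/todos`); // prints http://127.0.0.1:63650/todos
```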

```
> curl -X GET http://127.0.0.1:63650/todos -H 'Content-Type: application/json'
{"status":"OK","data":[{"id":1,"description":"Learn how to connect services with Kubernetes","status":"incomplete"},{"id":2,"description":"Build container images with Docker","status":"incomplete"},{"id":3,"description":"Provision multi-region distributed SQL database","status":"incomplete"}]}
```

Wrapping Up

With distributed infrastructure in place across our application and database tiers, we’ve developed a system built to scale and to survive node failures.

I know, I know, I promised you the most resilient to-do app the world has ever seen and didn’t provide a user interface. Well, that’s your job! Extend the API service we’ve developed in Node.js to serve the HTML required to display our list.
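As a starting point for that exercise, here is a minimal sketch of turning the rows returned by /todos into an HTML list. The escapeHtml and renderTodos helpers are hypothetical; descriptions are escaped because they come from the database and shouldn’t be interpreted as markup.

```javascript
// Hypothetical helper: escape the characters that are significant in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;") // must run first so later entities aren't double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical helper: render todo rows ({ id, description, status }) as a list.
function renderTodos(todos) {
  const items = todos
    .map((t) => `  <li data-status="${escapeHtml(t.status)}">${escapeHtml(t.description)}</li>`)
    .join("\n");
  return `<ul>\n${items}\n</ul>`;
}
```

In index.js, a new route could query the pool as /todos does and respond with res.send(renderTodos(data.rows)).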

Look out for more from me on Node.js and distributed SQL — until then, keep on coding!

Opinions expressed by DZone contributors are their own.
