Distributed Lock Implementation With Redis

Many libraries use Redis for distributed locking, but some of these good libraries haven't considered all of the pitfalls that may arise in a distributed environment.

Join the DZone community and get the full member experience.

Join For FreeUses of Distributed Locks

Distributed Mutual Exclusion

There are several resources in a system that mustn't be used simultaneously by multiple processes if the program operation must be correct. For example, a file mustn't be simultaneously updated by multiple processes or the use of printers must be restricted to a single process simultaneously. Therefore, exclusive access to such a shared resource by a process must be ensured. This exclusiveness of access is called mutual exclusion between processes. The sections of a program that need exclusive access to shared resources are referred to as critical sections. Solutions are needed to grant mutual exclusive access by processes. We can use distributed locking for mutually exclusive access to resources.

Features of Distributed Locks

A distributed lock service should satisfy the following properties:

Mutual exclusion: Only one client can hold a lock at a given moment. This is an essential property of a distributed lock.

Deadlock free: Every request for a lock must be eventually granted; even clients that hold the lock crash or encounter an exception.

Different Implementations

Many distributed lock implementations are based on the distributed consensus algorithms (Paxos, Raft, ZAB, Pacifica) like Chubby based on Paxos, Zookeeper based on ZAB, etc., based on Raft, and Consul based on Raft. There is also a proposed distributed lock by Redis creator named RedLock.

Many libraries use Redis for distributed locking, but some of these good libraries haven't considered all of the pitfalls that may arise in a distributed environment.

In the following section, I show how to implement a distributed lock step by step based on Redis, and at every step, I try to solve a problem that may happen in a distributed system. Please note that I used a leased-based lock, which means we set a key in Redis with an expiration time (leased-time); after that, the key will automatically be removed, and the lock will be free, provided that the client doesn't refresh the lock. Complete source code is available on the GitHub repository: https://github.com/siahsang/red-utils

First Attempt: Single Instance of Redis

For simplicity, assume we have two clients and only one Redis instance. A plain implementation would be:

xxxxxxxxxx

/**

* @param lockName name of the lock

* @param leaseTime the duration we need for having the lock

* @param operationCallBack the operation that should be performed when we successfully get the lock

*

* @return true if the lock can be acquired, false otherwise

*/

boolean tryAcquire(String lockName, long leaseTime, OperationCallBack operationCallBack) {

boolean getLockSuccessfully = getLock(lockName, leaseTime);

if (getLockSuccessfully) {

try {

operationCallBack.doOperation();

} finally {

releaseLock(lockName);

}

return true;

} else {

return false;

}

}

boolean getLock(String lockName, long expirationTimeMillis) {

// Create a unique lock value for current thread

String lockValue = createUniqueLockValue();

try {

// Check if key 'lockName' is set before,

// If not then put it with expiration time 'expirationTimeMillis'.

String response = storeLockInRedis(lockName, lockValue, expirationTimeMillis);

return response.equalsIgnoreCase("OK");

} catch (Exception exception) {

releaseLock(lockName);

throw exception;

}

}

void releaseLock(String lockName) {

// This is important in order to avoid removing a lock

// that was created by another client.

String lockValue = createUniqueLockValue();

// Remove the key 'lockName' if it have value 'lockValue'

removeLockFromRedis(lockName, lockValue);

}

But what is wrong with that?

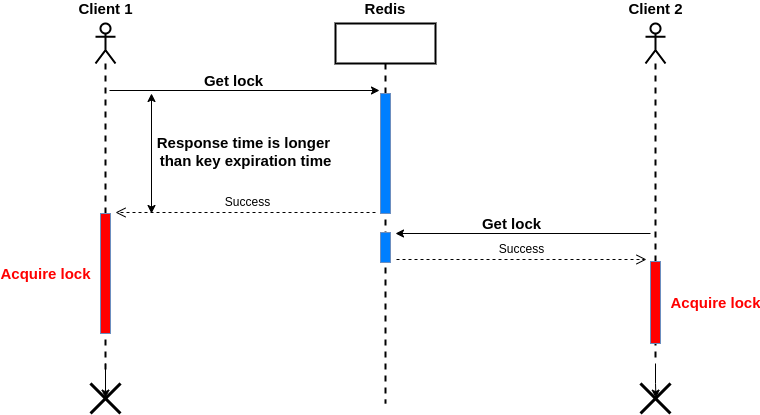

Suppose the first client requests to get a lock, but the server response is longer than the lease time; as a result, the client uses the expired key, and at the same time, another client could get the same key, now both of them have the same key simultaneously! It violet the mutual exclusion. The following diagram illustrates this situation:

To solve this problem, we can set a timeout for Redis clients, and it should be less than the lease time. But there is another problem, what would happen if Redis restarted (due to a crash or power outage) before it can persist data on the disk? We consider it in the next section.

Second Scenario: Single Instance of Redis and Node Outage

As you know, Redis persist in-memory data on disk in two ways:

Redis Database (RDB): performs point-in-time snapshots of your dataset at specified intervals and store on the disk.

Append-only File (AOF): logs every write operation received by the server, that will be played again at server startup, reconstructing the original dataset.

By default, only RDB is enabled with the following configuration (for more information please check https://download.redis.io/redis-stable/redis.conf):

save 900 1

save 300 10

save 60 10000

For example, the first line means if we have one write operation in 900 seconds (15 minutes), then It should be saved on the disk.

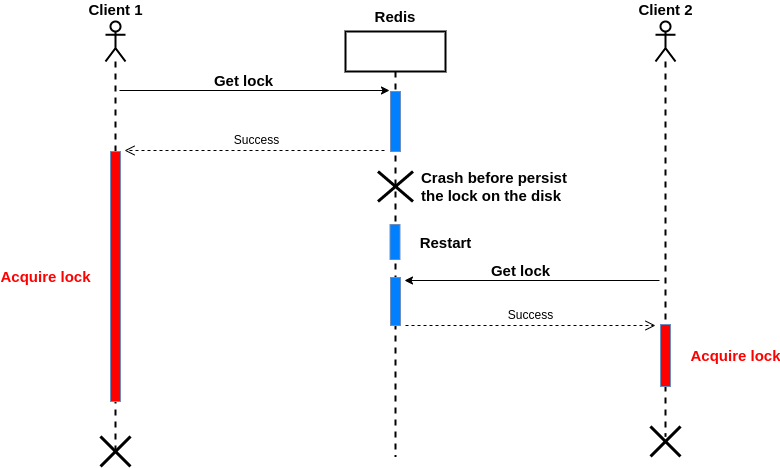

So in the worst case, it takes 15 minutes to save a key change. If Redis restarted (crashed, powered down, I mean without a graceful shutdown) at this duration, we lose data in memory so other clients can get the same lock:

To solve this issue, we must enable AOF with the fsync=always option before setting the key in Redis. Note that enabling this option has some performance impact on Redis, but we need this option for strong consistency.

In the next section, I will show how we can extend this solution when having a master-replica.

Third Scenario: Master-Replica

In this configuration, we have one or more instances (usually referred to as the slaves or replica) that are an exact copy of the master.

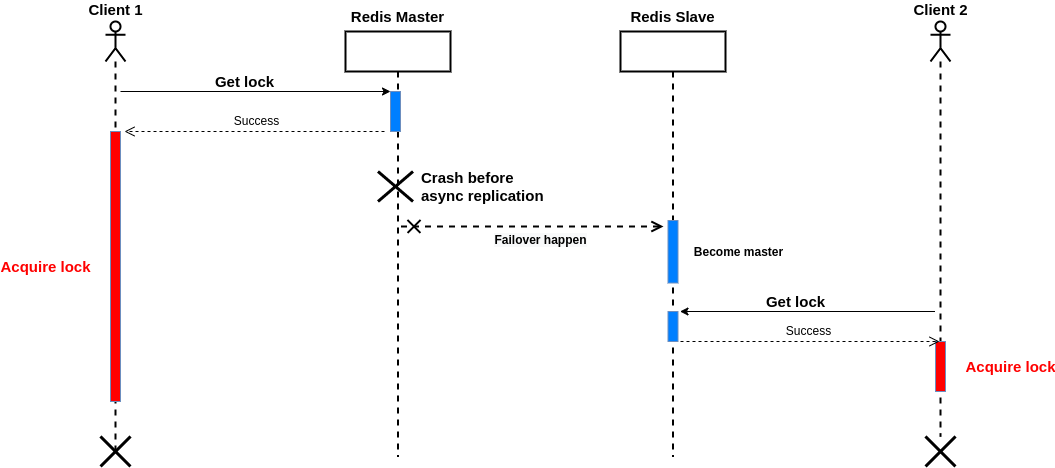

By default, replication in Redis works asynchronously; this means the master does not wait for the commands to be processed by replicas and replies to the client before. The problem is before the replication occurs, the master may be failed, and failover happens; after that, if another client requests to get the lock, it will succeed! Or suppose there is a temporary network problem, so one of the replicas does not receive the command, the network becomes stable, and failover happens shortly; the node that didn't receive the command becomes the master. Eventually, the key will be removed from all instances! The following picture illustrates this situation:

As a solution, there is a WAIT command that waits for specified numbers of acknowledgments from replicas and returns the number of replicas that acknowledged the write commands sent before the WAIT command, both in the case where the specified number of replicas is reached or when the timeout is reached. For example, if we have two replicas, the following command waits at most 1 second (1000 milliseconds) to get acknowledgment from two replicas and return:

WAIT 2 1000

So far, so good, but there is another problem; replicas may lose writing (because of a faulty environment). For example, a replica failed before the save operation was completed, and at the same time master failed, and the failover operation chose the restarted replica as the new master. After synching with the new master, all replicas and the new master do not have the key that was in the old master!

To make all slaves and the master fully consistent, we should enable AOF with fsync=always for all Redis instances before getting the lock.

Note: Again in this approach, we are scarifying availability for the sake of strong consistency.

x

boolean tryAcquire(String lockName, long leaseTime, OperationCallBack operationCallBack) {

// same as before

}

boolean getLock(String lockName, long expirationTimeMillis) {

// Create a unique lock value for current thread

String lockValue = createUniqueLockValue();

try {

// Check if key 'lockName' is set before,

// If not then put it with expiration time 'expirationTimeMillis'.

String response = storeLockInRedis(lockName, lockValue, expirationTimeMillis);

if (!response.equalsIgnoreCase("OK")){

return false;

}

// wait until we get acknowledge from other replicas or throws exception otherwise

waitForReplicaResponse();

return true;

} catch (Exception exception) {

releaseLock(lockName);

throw exception;

}

}

void releaseLock(String lockName) {

// same as before

}

In the last section of this article I want to show how clients can extend the lock, I mean a client gets the lock as long as it wants.

Fourth Scenario: Auto-Refreshing The Lock

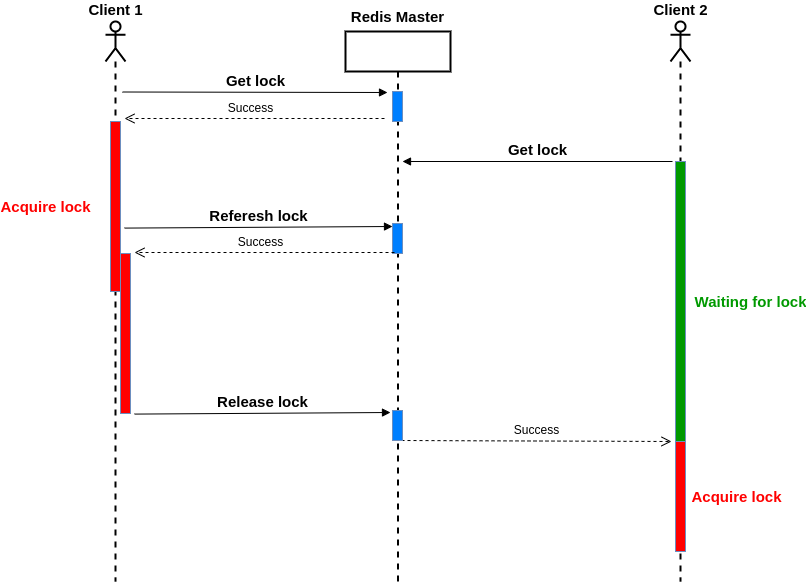

In this scenario, a lock that is acquired can be held as long as the client is alive and the connection is OK. We need a mechanism to refresh the lock before the lease expiration. We also should consider the case where we cannot refresh the lock; in this situation, we must immediately exit (perhaps with an exception).

Besides, other clients should be able to wait for getting the lock and entering the critical section as soon the holder of the lock released the lock:

Here is the pseudocode; for implementation, please refer to the GitHub repository:

https://github.com/siahsang/red-utils

xxxxxxxxxx

REQUIREMENTS:

1. ANY MODIFICATION MADE BY THIS ALGORITHM MUST HAPPEN WHEN AOF=FULLSYNC ON ALL REDIS INSTANCES

2. WE MUST WAIT FOR GETTING ACKNOWLEDGEMENT FOR ALL MODIFICATION COMMANDS

3. WHEN IT CAN NOT REFRESH THE LOCK(FOR EXAMPLE REDIS CRASHED OR IS SHUTTING DOWN INCORRECTLY) WE MUST IMMEDIATELY RETURN FROM CURRENT EXECUTION

4. WE MUST SET A DEFAULT RESPONSE TIMEOUT WHICH IS MUCH LESS THAN THE LOCK EXPIRE TIME OF LOCK, FOR EXAMPLE, 2 SECONDS

ALGORITHM:

UUID = GENERATE NEW UUID

LEASE_TIME = 30 SECONDS

FUNCTION ACQUIRE(LOCK_NAME)

GET_LOCKED_SUCCESSFULLY = GET_LOCK(LOCK_NAME, LEASE_TIME)

IF(GET_LOCKED_SUCCESSFULLY == FALSE)

SUBSCRIBE FOR CHANNEL 'LOCK_NAME'

// THIS IS BECAUSE THE CLIENT THAT HOLDS THE

// LOCK MAY HAVE DIED BEFORE INFORM OTHERS.

// ALSO THERE MAY BE RACE CONDITIONS THAT CLIENTS MISS SUBSCRIPTION SIGNAL

WHILE(GET_LOCKED_SUCCESSFULLY == FALSE)

TTL = GET TTL FOR KEY 'LOCK_NAME'

IF(TTL>=0)

WAIT CURRENT THREAD FOR TTL SECONDS

ELSE

GET_LOCKED_SUCCESSFULLY = GET_LOCK(LOCK_NAME , LEASE_TIME)

END IF

END WHILE

// AT THIS POINT WE GET LOCK SUCCESSFULLY

UNSUBSCRIBE FOR CHANNEL 'LOCK_NAME'

END IF

IF(TIMER IS NOT STARTED FOR THIS LOCK)

CREATE A TIMER TO REFRESH LOCK EVERY 10 SECONDS

EXECUTE OPERATION

STOP TIMER

RELEAS_LOCK(LOCK_NAME)

PUSH MESSAGE TO SUBSCRIBERS FOR CHANNEL 'LOCK_NAME'

END FUNCTION

FUNCTION GET_LOCK(LOCK_NAME, LEASE_TIME)

THREAD_ID = GET CURRENT THREAD_ID

LOCK_VALUE = THREAD_ID + ':' + UUID

RESULT = NOT_OK

IF(KEY 'LOCK_NAME' DO NOT EXIST IN REDIS)

CREATE KEY 'LOCK_NAME', VALUE 'LOCK_VALUE', EXPIRE_TIME 'LEASE_TIME'

RESULT = OK

// IN THIS CASE THE SAME THREAD IS REQUESTING TO GET THE LOCK

ELSE IF(KEY 'LOCK_NAME' AND VALUE 'LOCK_VALUE' EXIST IN REDIS)

INCREASE EXPIRE_TIME FOR LOCK_NAME TIME

RESULT = OK

ELSE

RESULT = NOT_OK

END IF

WAIT FOR ALL REPLICAS TO PERSIST DATA

RETURN RESULT

END FUNCTION

FUNCTION RELEAS_LOCK(LOCK_NAME)

THREAD_ID = GET CURRENT THREAD_ID

LOCK_VALUE = THREAD_ID + ':' + UUID

DELETE KEY 'LOCK_NAME' WITH VALUE 'LOCK_VALUE'

END FUNCTION

Conclusion

We have implemented a distributed lock step by step, and after every step, we solve a new issue. But some important issues that are not solved and I want to point here; please refer to the resource section for exploring more about these topics:

I assume clocks are synchronized between different nodes; for more information about clock drift between nodes, please refer to the resources section.

I assume there aren't any long thread pause or process pause after getting lock but before using it.

Getting locks is not fair; for example, a client may wait a long time to get the lock, and at the same time, another client gets the lock immediately.

Many libraries use Redis for providing distributed lock service. It is worth being aware of how they are working and the issues that may happen, and we should decide about the trade-off between their correctness and performance.

Thank you for reading!

Resources

Distributed Operating Systems: Concepts and Design, Pradeep K. Sinha

Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems, Martin Kleppmann

https://curator.apache.org/curator-recipes/shared-reentrant-lock.html

https://redis.io/topics/distlock

https://www.consul.io/commands/lock

https://etcd.io/docs/current/dev-guide/api_concurrency_reference_v3

https://martin.kleppmann.com/2016/02/08/how-to-do-distributed-locking.html

Opinions expressed by DZone contributors are their own.

Comments