DevOps Practices for PowerShell Programming

In this article, I’ll use a working example to show how to program in PowerShell (not just script) with all the goodness of DevOps practices.


PowerShell is a powerful scripting language, yet I have seen a lot of developers and administrators miss out on all the goodness of DevOps practices such as versioning, test automation, artifact versioning, and CI/CD.

In this blog, I’ll use a working example to explain how to program in PowerShell (not just script) with a predetermined module structure, ensure quality with unit tests, and deliver code in a reliable and repeatable way using continuous integration and continuous delivery pipelines.

Build Quality Software and Deliver it Right

Mature software development teams rely on strong engineering practices to deliver their software incrementally. However, these practices are not fully used by operations teams, where PowerShell is widely used. This blog explains how to structure code using Plaster, version control it with Git, build it with psake, test modules with Pester, version and share artifacts as NuGet packages via Azure Artifacts, and create a release pipeline with Azure DevOps pipelines.

Why Bother?

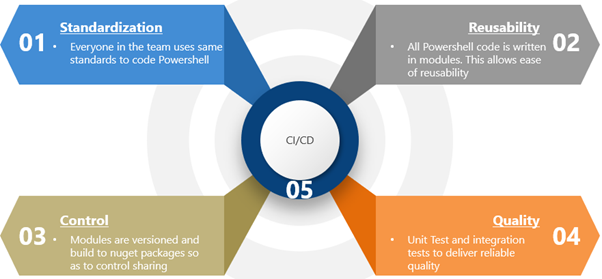

We can simply write a bunch of PowerShell scripts to meet the need, so why bother with all these DevOps practices? Why should anyone care about them? I think the benefits broadly fall into the five categories below.

Standardization of module design: When multiple people in a team work with PowerShell, it is paramount to have standards for how PowerShell modules are developed. Implementing and enforcing development standards reduces the complexity and overhead of code deviations and also helps create a mindset of collective code ownership in the team.

I’ve used Plaster templates to create standard modules. Plaster templates allow teams to customize how a module or a PowerShell script file is structured. A team can define multiple Plaster templates depending on the need.

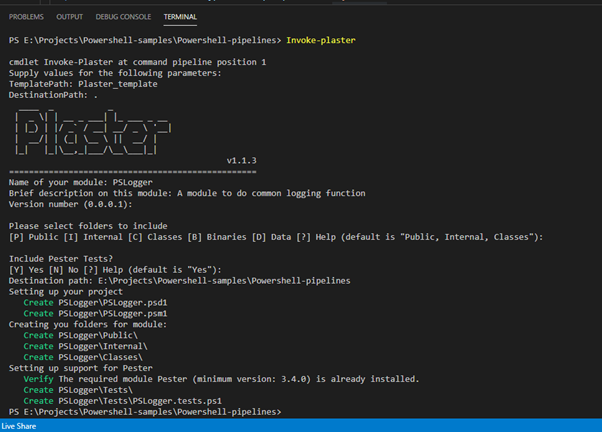

In the sample project, I have created a Plaster template to create a module. This template creates a module along with a set of default folders (Public, Internal, Classes, Tests) and a default Pester test case.

Reusability: The ability to share a PowerShell module with other teams has obvious benefits. For example, if a team writes a custom module to log data to Splunk, it might be beneficial to share this module with other teams. But the question is, how can we reliably share a module via a private repository (like a private PowerShell Gallery)?

In my code example, I've used Azure DevOps Artifacts to store and version my PowerShell module. This allows us to share a module, and control who can consume it, based on the version (beta, prerelease, release).

Control: In large enterprises (and in most small organizations too), traceability is an important factor. There is a need to be in control of when, what, why, and by whom the code was changed. There is a need to have controls in place from planning (via user stories) through code creation all the way to deployment in production. Mature DevOps teams have tools and processes to ensure the right level of traceability in the code promotion process.

In this example, I have used a Git workflow where the master branch is protected. To make changes to master, a pull request is created and then approved by a fellow team member. In the release pipeline, there are also controls to ensure only people with certain roles can push code to the production environment. In this example, this is achieved by configuring pre-deployment approvals in the release pipeline.

Quality: Mature DevOps teams have a “quality first” mindset. Quality is ensured via a set of automated tests such as unit, integration, and functional tests.

In this example, I’ve used Pester to write sample unit and integration tests. Unit tests are run in the build pipeline, and integration tests are run during release pipeline execution.
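To give a feel for what such a unit test looks like, here is a minimal Pester sketch. The function `Format-LogMessage` and the file name are hypothetical and are not taken from the PSLogger project; the function is defined inline only so that the example is self-contained. It assumes Pester 4 or later is installed.

```powershell
# PSLogger.Tests.ps1 (hypothetical file name)
Describe 'Format-LogMessage' {
    BeforeAll {
        # Hypothetical function under test, defined inline for illustration.
        # In a real module it would be imported from the module instead.
        function Format-LogMessage {
            param (
                [string]$Level,
                [string]$Message
            )
            '[{0}] {1}' -f $Level.ToUpper(), $Message
        }
    }

    It 'prefixes the message with the upper-cased level' {
        Format-LogMessage -Level 'info' -Message 'started' | Should -Be '[INFO] started'
    }
}
```

Running `Invoke-Pester` against a file like this reports each `It` block as a passed or failed test.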

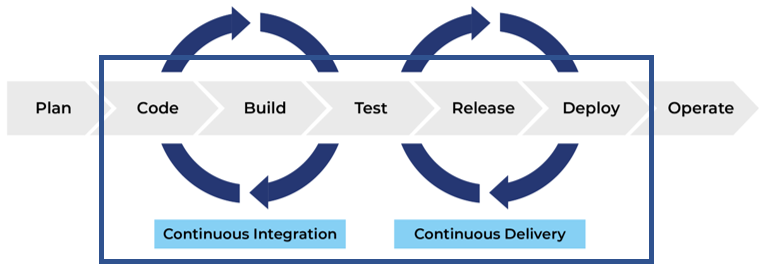

CI/CD: Continuous integration and continuous delivery are cornerstone practices for mature DevOps teams. Continuous integration gives the team faster feedback on their code changes. Continuous delivery offers a reliable and repeatable way of deploying changes across the DTAP (Development, Test, Acceptance, Production) street.

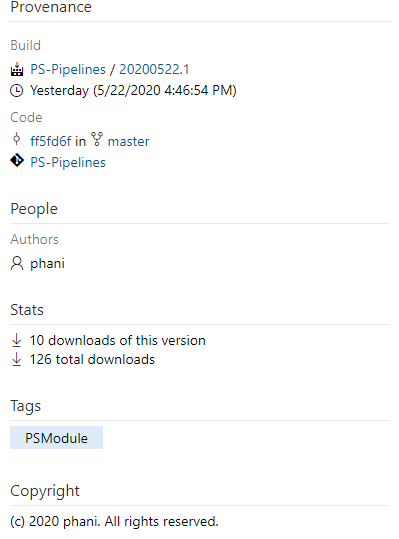

In my example, I am using a YAML-based build pipeline and a release pipeline to deploy to DTAP. The continuous integration pipeline builds the module, increments the version number, runs Pester unit tests, and publishes test and code coverage results. The release pipeline pulls the latest artifact from the Azure Artifacts feed, runs integration tests, and promotes the artifact to the next view.

Working Example

To explain practices such as versioning and CI/CD, I’ll use a sample (dummy) module, “PSLogger”. I’ll explain the individual components of the pipeline and how to implement a CI/CD pipeline for PowerShell. I’ve made this project public, so you can have a look at the code as well as the release pipeline and a custom dashboard I created to give an overview of the release process.

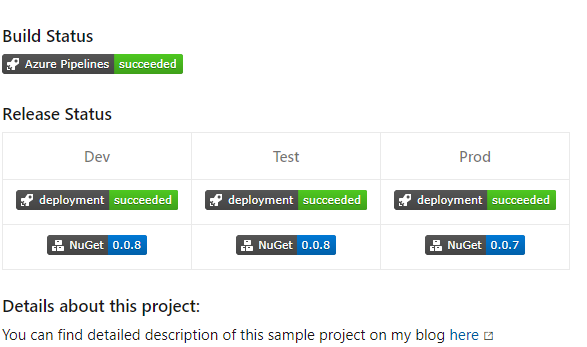

Dashboard: As Dr. Covey says, “Begin with the end in mind”, so I want to start with the result of implementing the DevOps practices described above. This dashboard is a one-stop view of build trends, test results, open pull requests, and release status. You can access this dashboard here.

Version Control: I use Git to version control my codebase, hosted in Azure Repos. I’m a big proponent of trunk-based development (TBD). In a nutshell, TBD is about having a single branch without any other long-lived branches such as development or release branches. TBD not only ensures faster feedback but also avoids waste by avoiding (or reducing) merge conflicts. You can read more about TBD here.

Code standardization: I use Plaster templates to structure PowerShell modules. You can find more information on how to use Plaster here. In the example project, you can use the Plaster template to create a PowerShell module by running the following command.

Invoke-Plaster
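Run without arguments, `Invoke-Plaster` prompts for its mandatory parameters. As a rough sketch, a non-interactive call could look like the following. Both folder names are hypothetical placeholders, not the paths used in the example project; `-TemplatePath` and `-DestinationPath` are the two parameters Plaster needs in order to locate the template and write the scaffolded module.

```powershell
# Hypothetical paths: adjust both to your own repository layout.
$plasterParams = @{
    # Folder that contains the template's plasterManifest.xml
    TemplatePath    = Join-Path -Path $PWD -ChildPath 'PlasterTemplate'
    # Folder in which the new module is scaffolded
    DestinationPath = Join-Path -Path $PWD -ChildPath 'PSLogger'
}

# Only scaffold when Plaster is installed and the template folder exists.
if ((Get-Module -Name Plaster -ListAvailable) -and (Test-Path -Path $plasterParams.TemplatePath)) {
    Invoke-Plaster @plasterParams
}
else {
    Write-Warning 'Plaster or the template folder was not found; nothing was scaffolded.'
}
```

Any parameters defined in the template manifest are prompted for interactively unless they are also passed on the command line.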

CI pipeline: I use psake to build the PowerShell module. I also use the BuildHelpers module in the build process. A CI pipeline is set up to trigger a build on every check-in. I use a YAML-based multi-stage pipeline defined in azure-pipelines.yml. The CI pipeline has two stages, “Build” and “publishArtifacts”, with the following tasks.

"Install Dependencies and initialize": I use the PSDepend module to make sure the build dependencies are installed. PSDepend uses build.dependencies.psd1 to resolve dependencies.

    - powershell: |
        .\Build_Release\build.ps1 -ResolveDependency -TaskList 'Initialize'
      displayName: "Install Dependencies and initialize"
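For readers who have not used PSDepend, a requirements file in its simple syntax is just a hashtable of module names and versions. The sketch below shows what a build.dependencies.psd1 could contain. The module list is an assumption based on the tools named in this article, and the actual file in the project may differ.

```powershell
# build.dependencies.psd1 (illustrative content, not copied from the project)
@{
    # Module name = version to install ('latest' or a specific version string)
    psake        = 'latest'
    Pester       = 'latest'
    BuildHelpers = 'latest'
}
```

Pinning explicit versions instead of 'latest' makes builds more repeatable, at the cost of having to update the file by hand.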

"Nuget tool Install and NugetAuthenticate": The PowerShell module version is updated based on the latest module version available in my NuGet Artifacts feed, so the build needs to read the latest module information from that feed. To make this possible, ensure NuGet is available on the build agent by installing it.

    - task: NuGetToolInstaller@1
      inputs:
        versionSpec:
    - task: NuGetAuthenticate@0
      inputs:
        forceReinstallCredentialProvider: true

“Build Module”: This task is responsible for updating the version number of the module and removing the “Tests” folder. The latest module version number is fetched from the NuGet feed and incremented. If no module is available yet (as with the first publish to the NuGet feed), the default version 0.0.1 is used. This task accepts a token, a NuGet feed name, and a URL, which are set as pipeline variables.

    - powershell: |
        .\Build_Release\build.ps1 -TaskList 'BuildModules' -Parameters @{ADOPat='$(ADOPAT)';NugetFeed='$(NugetFeed)';ADOArtifactFeedName='$(ADOArtifactFeedName)'}
      displayName: "Building modules"
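The version-bump rule described for this task (increment the latest published version, or fall back to 0.0.1 when nothing has been published) can be sketched as a small function. `Get-NextModuleVersion` is a hypothetical name used only for illustration; the actual build script may implement this differently, and bumping the third version component is an assumption.

```powershell
function Get-NextModuleVersion {
    [CmdletBinding()]
    [OutputType([version])]
    param (
        # Latest version found in the feed; empty when nothing has been published yet.
        [string]$LatestVersion
    )

    # First publish: no version in the feed, so start at the default.
    if ([string]::IsNullOrWhiteSpace($LatestVersion)) {
        return [version]'0.0.1'
    }

    $current = [version]$LatestVersion

    # Build is -1 when only Major.Minor was supplied; treat that as 0.
    $build = [Math]::Max($current.Build, 0)

    # Increment the third component and drop any fourth (revision) component.
    return [version]::new($current.Major, $current.Minor, $build + 1)
}

# Usage
Get-NextModuleVersion -LatestVersion '1.4.9'   # 1.4.10
Get-NextModuleVersion                          # 0.0.1
```

Casting to `[version]` rather than splitting the string keeps the comparison numeric, so 1.4.9 is followed by 1.4.10 instead of a string-sorted surprise.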

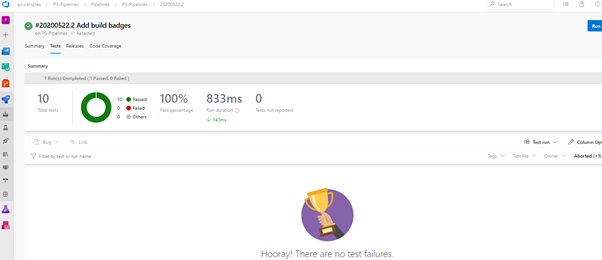

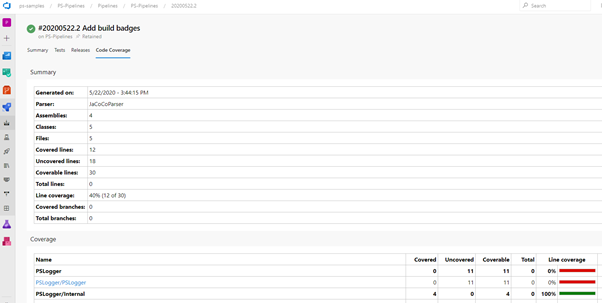

“Test”: In the test step, all unit tests are run by Pester, and the test results and code coverage results are published. A “Test” task in build.psake.ps1 is responsible for running the tests and creating the test and coverage result files.

    Task 'Test' {
        $testScriptsPath = "$ENV:BHModulePath"
        $testResultsFile = Join-Path -Path $ArtifactFolder -ChildPath 'TestResults.Pester.xml'
        $codeCoverageFile = Join-Path -Path $ArtifactFolder -ChildPath 'CodeCoverage.xml'
        $codeFiles = (Get-ChildItem $testScriptsPath -Recurse -Exclude "*.tests.ps1" -Include ("*.ps1", "*.psm1")).FullName

        # Load modules to prep Tokenizer tests
        Import-Module -Name $ENV:BHPSModulePath

        if (Test-Path $testScriptsPath) {
            $pester = @{
                Script                       = $testScriptsPath
                # Make sure NUnitXML is the output format
                OutputFormat                 = 'NUnitXml' # !!!
                OutputFile                   = $testResultsFile
                PassThru                     = $true # To get the output of invoke-pester as an object
                CodeCoverage                 = $codeFiles
                ExcludeTag                   = 'Incomplete'
                CodeCoverageOutputFileFormat = 'JaCoCo'
                CodeCoverageOutputFile       = $codeCoverageFile
            }
            $result = Invoke-Pester @pester
        }
    }
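The task above captures the Pester result in `$result` but, as shown, does not act on it. If you want the build itself to fail when a test fails, one possible approach is a small guard like the sketch below. `Assert-PesterPassed` is a hypothetical helper, not part of the example project; it relies only on the `FailedCount` property of the object that `Invoke-Pester -PassThru` returns.

```powershell
function Assert-PesterPassed {
    [CmdletBinding()]
    param (
        # Object returned by Invoke-Pester when -PassThru is used.
        [Parameter(Mandatory)]
        [psobject]$PesterResult
    )

    # Throwing makes the calling psake task, and therefore the pipeline step, fail.
    if ($PesterResult.FailedCount -gt 0) {
        throw "$($PesterResult.FailedCount) Pester test(s) failed."
    }
}

# Usage inside the task, after Invoke-Pester:
# Assert-PesterPassed -PesterResult $result
```

Whether to fail here or to let a later pipeline step evaluate the published test results is a design choice; failing early gives faster feedback, while failing later lets the test report be published first.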

A sample test report and a code coverage report are shown below.

“PublishArtifacts”: This stage is responsible for publishing artifacts (the PowerShell module as a NuGet package) to the Azure Artifacts feed. As a best practice, I publish to only one feed and use different views (@PreRelease and @Release) to promote artifacts across environments. The NuGet feed is registered via helper.registerfeed.ps1, and the module is published via publish.ADOFeed.ps1.

    # helper.registerfeed.ps1
    [CmdletBinding()]
    param (
        [string]$ADOArtifactFeedName,
        [string]$FeedSourceUrl,
        [string]$ADOPat
    )

    # Locate NuGet.exe; fall back to the PowerShellGet location when it is not on the PATH.
    # (Calling Test-Path on a missing command's Source would fail because the value is $null.)
    $nugetCommand = Get-Command -Name NuGet.exe -ErrorAction SilentlyContinue
    if ($nugetCommand) {
        $nugetPath = $nugetCommand.Source
    }
    else {
        $nugetPath = Join-Path -Path $env:LOCALAPPDATA -ChildPath 'Microsoft\Windows\PowerShell\PowerShellGet\NuGet.exe'
    }

    # Create credentials (the PAT is used as both user name and password)
    $password = ConvertTo-SecureString -String $ADOPat -AsPlainText -Force
    $credential = New-Object System.Management.Automation.PSCredential ($ADOPat, $password)

    Get-PackageProvider -Name 'NuGet' -ForceBootstrap | Format-List *

    $registerParams = @{
        Name                      = $ADOArtifactFeedName
        SourceLocation            = $FeedSourceUrl
        PublishLocation           = $FeedSourceUrl
        InstallationPolicy        = 'Trusted'
        PackageManagementProvider = 'Nuget'
        Credential                = $credential
        Verbose                   = $true
    }

    Register-PSRepository @registerParams
    Write-Host "Feed registered"
    Get-PSRepository -Name $ADOArtifactFeedName

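    # publish.ADOFeed.ps1
    [CmdletBinding()]
    param (
        [string]$ADOArtifactFeedName,
        [string]$FeedSourceUrl,
        [string]$ADOPat,
        [string]$ModuleFolderPath
    )

    if (-Not $PSBoundParameters.ContainsKey('ModuleFolderPath')) {
        # $(Pipeline.Workspace) is only expanded in inline pipeline scripts; in a script file,
        # read the PIPELINE_WORKSPACE environment variable that Azure Pipelines sets instead.
        $ModuleFolderPath = Join-Path -Path $env:PIPELINE_WORKSPACE -ChildPath 'Staging'
    }

    # Locate NuGet.exe; fall back to the PowerShellGet location when it is not on the PATH
    $nugetCommand = Get-Command -Name NuGet.exe -ErrorAction SilentlyContinue
    if ($nugetCommand) {
        $nugetPath = $nugetCommand.Source
    }
    else {
        $nugetPath = Join-Path -Path $env:LOCALAPPDATA -ChildPath 'Microsoft\Windows\PowerShell\PowerShellGet\NuGet.exe'
    }

    # Dot-source the helper so that $credential is available in this scope
    . $PSScriptRoot\helper.registerfeed.ps1 -ADOArtifactFeedName $ADOArtifactFeedName -FeedSourceUrl $FeedSourceUrl -ADOPat $ADOPat

    $module = (Get-ChildItem -Path $ModuleFolderPath -Directory).FullName

    $publishParams = @{
        Path        = $module
        Repository  = $ADOArtifactFeedName
        NugetApiKey = $ADOPat
        Force       = $true
        Verbose     = $true
        # Note: this suppresses publish errors, so a failed publish will not fail the step
        ErrorAction = 'SilentlyContinue'
    }

    Write-Host "Publishing Module"
    Publish-Module @publishParams -Credential $credential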

Status: Build and deployment status is reported in the dashboard (above) and also as build badges. I’ve added badges to the README.md file.

Release Pipeline

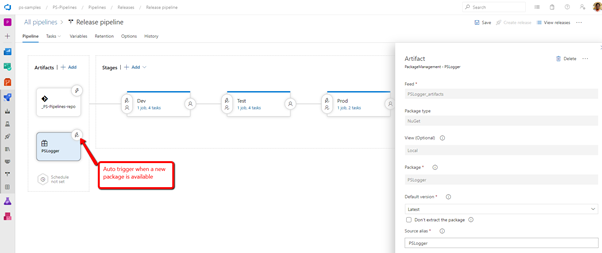

The release pipeline is responsible for promoting the artifact (PowerShell module) from Dev to Test and then to Prod. Here is a high-level overview of the pipeline. A new release is triggered when a new version of the artifact is available in Azure Artifacts.

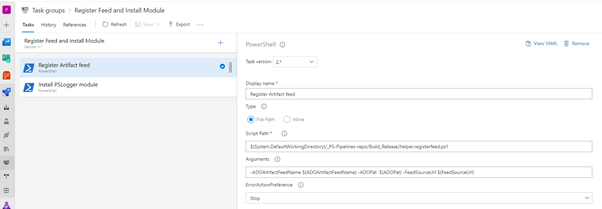

Stages: There are three stages in the pipeline: Dev, Test, and Prod. All three stages broadly do the following.

- Register the NuGet feed as a PSRepository. I invoke helper.registerfeed.ps1 for this.

- Install the latest module from the feed.
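The install step can be sketched roughly as follows, assuming the feed has already been registered as a PSRepository by the first step. `Install-LatestFeedModule` is a hypothetical helper name, and installing into the `CurrentUser` scope is an assumption; the release pipeline in the example project may do this differently.

```powershell
function Install-LatestFeedModule {
    [CmdletBinding()]
    param (
        [Parameter(Mandatory)]
        [string]$ModuleName,

        # Name under which the feed was registered with Register-PSRepository
        [Parameter(Mandatory)]
        [string]$RepositoryName,

        # Credential for the private feed (for example, built from a PAT)
        [Parameter(Mandatory)]
        [pscredential]$Credential
    )

    $installParams = @{
        Name       = $ModuleName
        Repository = $RepositoryName
        Credential = $Credential
        Scope      = 'CurrentUser'
        Force      = $true
    }

    # Without -RequiredVersion, Install-Module picks the latest stable version in the repository.
    Install-Module @installParams

    # Return the newest installed version so the caller can log or verify it.
    Get-Module -Name $ModuleName -ListAvailable |
        Sort-Object -Property Version -Descending |
        Select-Object -First 1
}

# Usage (hypothetical values):
# Install-LatestFeedModule -ModuleName 'PSLogger' -RepositoryName 'PSLogger_artifacts' -Credential $credential
```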

Both the Dev and Test stages also run integration tests before they promote the package to the next stage. I use the “Promotes a package to a Release View in VSTS Package Management” extension to achieve this.

Azure Artifacts:

I’ve created a feed, “PSLogger_artifacts”, in Azure Artifacts. Every successful CI pipeline run creates a new artifact with the default (@Local) view.

The release pipeline is responsible for promoting the artifact to the next view based on the quality gates defined in the Dev and Test stages.

I hope this blog gives you detailed insights into how to set up a pipeline and ensure quality standards and release gates for PowerShell development. I'm curious to know what you think about the various DevOps practices mentioned in this blog.

Published at DZone with permission of Phani Bhushan. See the original article here.
