DevOps Midwest: A Community Event Full of DevSecOps Best Practices

DevOps Midwest 2023 brought together experts in scale, availability, and security best practices. Read some of the highlights from this DevSecOps-focused event.

If you know anything about St. Louis, you probably know it as the home of the Gateway Arch, the Cardinals, and St. Louis-style BBQ. But it is also home to DevOps Midwest, a DevOps event that featured some fresh perspectives on scaling, migrating legacy apps to the cloud, and how to think about value when it comes to your applications and environments.

The quality of the conversations was notable, as this event drew experts and attendees who were working on interesting enterprise problems of scale, availability, and security. The speakers covered a wide range of DevOps topics, but throughout the day, a couple of themes kept showing up: DevSecOps and secrets management. Here are just a few highlights from this amazing event.

Lessons Learned From Helping Agencies Adopt DevSecOps

In his session "Getting from 'DevSecOps' to DevSecOps: What Has Worked and What Hasn't - Yet," Gene Gotimer shared stories from his work helping multiple US government agencies understand and adopt DevOps.

Show Value From the Start, Don't Wait for Them to 'Get It'

While working for DISA, the Defense Information Systems Agency, he helped the agency evaluate how to move from manual releases to a more automated process. The challenge he faced was getting them to see the value of CI/CD. He focused on adding automated testing earlier in their process, which is at the heart of the DevSecOps 'shift left' strategy. He met unexpected resistance: the teams did not 'just get it' and see the long-term benefits of the new approach, mainly because they were unfamiliar with testing best practices. As a result, they made implementation decisions that lengthened their overall testing cycles.

His biggest takeaway from the experience was that the established team was not going to have an 'aha' moment where everyone suddenly saw the value. Value needs to be clearly defined in a project's goals. Ultimately, you need to show that the new path is not just 'good' but better overall, in terms the existing team understands.

Never Let a Crisis Go to Waste: Be Ready

In another engagement, this time with the TSA, the Transportation Security Administration, he built a highly secure DevOps pipeline based on Chef that could automatically update dependencies on hundreds of systems. After a year of work, he was still limited to showing demos of his tools, restricted to a sandbox. Fear of a new approach and reluctance to change meant the new tooling would be rolled out only as a last resort. That moment came when a new vulnerability created a deadline that updates done the old way could not meet, so they finally gave his tooling a chance.

After successfully updating all the systems in a few short hours, the department lead was joyous that the 'last year of work meant they could update so fast.' But the reality is it took them a year to hit a crisis point that forced the change. The tool had been ready for months. It was only after the whole team saw the new approach in action that resistance disappeared.

The larger lesson he took away was that being ready paid off. If the team had come to him and he had not been ready to meet the moment, they would never have experienced the benefits of an automated approach.

Carrots Not Sticks

The final story Gene shared was about his time working for ICE, U.S. Immigration and Customs Enforcement, where he worked to improve security in AWS GovCloud. Although the team was practicing DevOps, their build process had over 150 security issues and took over 20 minutes to complete. He and his team reduced those security issues to fewer than 20 overall while tuning the whole process to take around six minutes.

Unfortunately, the new system was not approved or adopted for many months. Gene's security team had been trying to sell its security benefits, which never became a priority for the DevOps team. Even with administrative buy-in, the new process was not adopted until a different, smaller team realized it was 3x faster than their own approach; to them, security was a side benefit. In the end, as other teams adopted the faster process, overall security improved.

Gene stressed that they could have gone to the CIO and demanded that the new approach be used for security reasons, but they knew that would only create more resistance. What ended up working was showing that the new way was better, easier, and faster.

Gene ended his talk by reminding us to keep building interesting things and to keep learning and innovating. He left us with a quote from Andrew Clay Shafer, a DevOps pioneer:

"You’re either building a learning organization, or you are losing to someone who is."

Secrets Management in DevOps

Three talks at DevOps Midwest dealt explicitly with secrets security in DevOps. Two discussed it in the context of migrating applications to the cloud, and one covered the problem of secrets in code and how git can help keep you safe.

Picking a (Safe) Cloud Migration Strategy

In his talk "You're Not Just the Apps Guy Anymore; Embracing Cloud DevOps," John Richards of Paladin Cloud covered why moving to the cloud matters, as well as the challenges and unique opportunities that a migration brings.

He laid out three migration strategies.

1. Lift and shift

2. Re-architecture

3. Rebuild with cloud-native tools

In "lift and shift," you simply take the existing application and drop it into a cloud environment. This can bring the ability to scale on demand, but it also means you are not reaping the full benefits of the cloud. While this is the fastest and least costly method, you still need to spend time figuring out how to "connect the plumbing." Part of that plumbing is figuring out how the application retrieves its secrets in the cloud. Most likely, while the application lived on an on-prem server, its secrets were stored on that same machine. Setting and using the cloud platform's built-in environment variables is a good short-term step for teams crunched for time. John laid out better secrets management approaches for the other migration paths.
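For the short-term environment-variable approach, a lifted-and-shifted application's startup script can read its credential from the environment instead of from a file on the old server. The following is a minimal POSIX shell sketch, assuming the cloud platform injects the secret as an exported environment variable; the function name `require_env` and the variable `DB_PASSWORD` are hypothetical, chosen only for illustration.

```shell
#!/bin/sh
# Minimal sketch: read a secret that the cloud platform injects as an
# environment variable, and fail fast if it is missing.
# The function name require_env and the variable DB_PASSWORD are hypothetical.

# require_env NAME: print the value of environment variable NAME,
# or print an error and return 1 if it is unset or empty.
require_env() {
  value=$(printenv "$1")
  if [ -z "$value" ]; then
    echo "error: required environment variable $1 is not set" >&2
    return 1
  fi
  printf '%s\n' "$value"
}
```

A startup script might call it as `DB_PASSWORD=$(require_env DB_PASSWORD) || exit 1`. Failing at startup, rather than at the first database call, makes a missing secret obvious right after the move.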

In a re-architecture, you start with a 'lift and shift' migration and build onto it, gradually changing the application to take advantage of the scale and performance gains the cloud offers. This path is flexible but requires a higher overall investment; done correctly, it lets the team maximize value while building for the future. This is a good time to adopt more robust secrets management, especially as more third-party services need authentication and authorization. Tools like Vault or the built-in cloud services can be rolled out as the application evolves.
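One way to roll out a secrets manager gradually, as this path suggests, is a small lookup function that prefers Vault when it is configured and otherwise falls back to the environment variables used during the lift and shift. The sketch below assumes the HashiCorp Vault CLI is installed and already authenticated whenever `VAULT_ADDR` is set; the function name `get_secret`, the path `secret/bbs`, and the field `password` are hypothetical.

```shell
#!/bin/sh
# Minimal sketch of a gradual migration from environment variables to Vault.
# Assumes the vault CLI is installed and already authenticated whenever
# VAULT_ADDR is set. get_secret, secret/bbs, and "password" are hypothetical.

# get_secret ENV_NAME VAULT_PATH VAULT_FIELD
get_secret() {
  if [ -n "${VAULT_ADDR:-}" ] && command -v vault >/dev/null 2>&1; then
    # Read a single field from a key/value secret stored in Vault
    vault kv get -field="$3" "$2"
  else
    # Fall back to the environment variable used during lift and shift
    printenv "$1"
  fi
}
```

A caller would write `DB_PASSWORD=$(get_secret DB_PASSWORD secret/bbs password)`. Because callers only go through `get_secret`, each service can switch to Vault by setting `VAULT_ADDR` without changing the calling code.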

The third path is completely rebuilding the application with cloud-native tools. It is the most expensive migration path but brings the greatest benefits, letting you innovate with edge computing and other technology that simply was not available when the legacy application was first created. It also means adopting new secrets management tools immediately, across the whole team at once, so this approach requires the highest level of buy-in from all teams involved.

John also talked about the cloud shared responsibility model. For teams used to controlling and locking down on-premises servers, it will be an adjustment to partner with cloud providers to defend their applications. Living in a world of dynamic attack surfaces makes defense-in-depth a necessity, not a nice-to-have; secrets detection and vulnerability scanning are mandatory parts of this approach. Your cloud provider can only protect you so much: misconfigurations or keys left out in the open will lead to bad things.

How To Migrate to the Cloud Safely

While John's talk took a high-level view of possible migration paths, Andrew Kirkpatrick from StackAdapt gave us a very granular view of how to actually perform a migration in his session "Containerizing Legacy Apps with Dynamic File-Based Configurations and Secrets." Andrew walked us through taking an old PHP-based BBS running on a single legacy server and moving it to containers, making it highly scalable and highly available in the process. He also made it more secure along the way.

He argued that every company has some legacy code still running in production that someone has to maintain but nobody wants to touch. The older the code, the more likely it is that patches will introduce new bugs. Andrew said that the sooner you move it to containers and the cloud, the better off everyone will be and the more value you can extract from that application. While lift and shift might not seem like the best use of advanced tools like Docker Swarm or Helm, in reality, "you can use fancy new tech to run terrible, ancient software, and the tech doesn't care."

He warned that most tutorials make assumptions you have to account for. While they might get you to a containerized app, most do not factor in scale or security concerns. For example, a tutorial that just says to download an image does not tell you to check for open issues with that image on Docker Hub. If you downloaded the Alpine Linux Docker image during the three years that its unlocked root account issue went unsolved, your tutorial likely did not account for that.

Once he got the BBS software running in a new container, he addressed the need to connect it to the legacy database. He laid out a few options for managing the needed credentials, but by far the safest is a secrets manager like HashiCorp Vault or Doppler.

He also suggested a novel use for these tools: storing configuration values. Secrets managers are designed to store credentials safely and let you retrieve them programmatically, but the stored values are all just arbitrary strings. There is no reason you could not store settings alongside your secrets and retrieve them programmatically when you are building a container.
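Andrew's idea can be sketched as a step that writes both secrets and ordinary settings into one configuration file for the container. This assumes a secrets manager has already exported the values as environment variables (for example, through Doppler's `doppler run -- <command>` wrapper); the function name `render_config` and all key names are hypothetical, and the output is a simple `KEY='value'` file rather than whatever format the BBS actually reads.

```shell
#!/bin/sh
# Minimal sketch: render secrets and plain settings, both stored in a
# secrets manager and exported as environment variables, into a file that
# a POSIX shell can source. render_config and all key names are hypothetical.

# render_config KEY...: print KEY='value' for each key, or fail if one is missing.
render_config() {
  for key in "$@"; do
    value=$(printenv "$key") || {
      echo "error: $key is not set" >&2
      return 1
    }
    # Escape embedded single quotes as '\'' so the output stays valid sh
    escaped=$(printf '%s' "$value" | sed "s/'/'\\\\''/g")
    printf "%s='%s'\n" "$key" "$escaped"
  done
}
```

For example, `render_config DB_HOST DB_PASSWORD SITE_NAME > /app/config/bbs.env`, where `SITE_NAME` is an ordinary setting stored next to the database credentials. Running this at container start, rather than during the image build, keeps the secret values out of the image layers.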

Leveraging Git to Keep Your Secrets Secret

The final talk that mentioned keeping your secrets out of source code was presented by me, the author of this article. I was extremely happy to be part of the event and present an extended version of my talk, "Stop Committing Your Secrets - Git Hooks To The Rescue!"

In this session, I laid out some of the findings of the State of Secrets Sprawl report:

- 10M secrets found exposed in 2022 in public GitHub repositories.

- That is a more than 67% increase compared to the six million found in 2021.

- On average, 5.5 commits out of 1,000 exposed at least one secret.

- That is a more than 50% increase compared to 2021.

At the heart of this research is git, the most widely used version control system on earth and the defacto transportation mechanism for modern DevOps. In a perfect world, we would all be using secret managers throughout our work, as well as built-in tools like `.gitignore` to keep our credentials out of our tracked code histories. But even in organizations where those tools and workflows are in place, human error still happens.

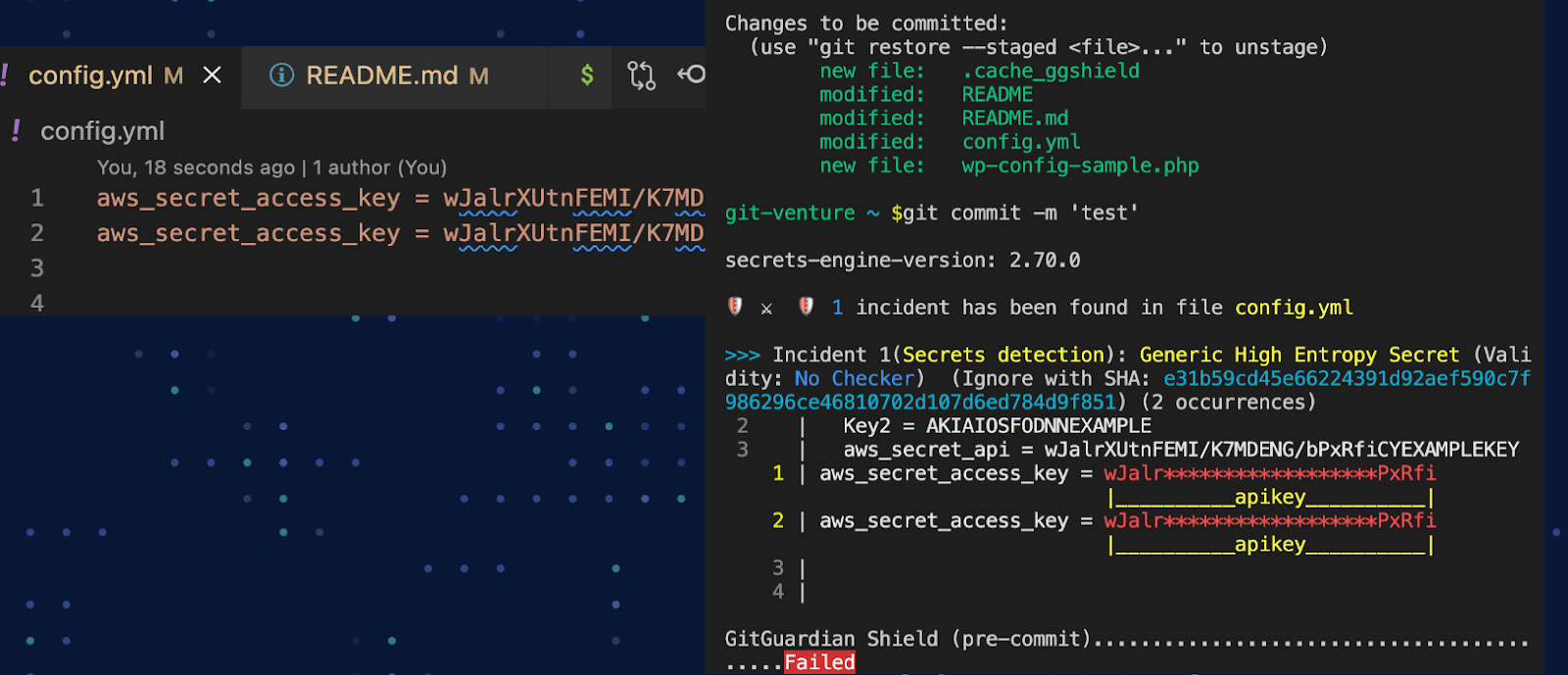

What we need is automation that stops us from making a git commit if a secret has been left in our code. Fortunately, git hooks give us a way to run a check on every attempt to commit. Any executable script stored in the git hooks folder (`.git/hooks` by default) and named exactly after one of the 17 available git hooks will be run by git when the corresponding event is triggered. For example, a script called `pre-commit` will execute when `git commit` is called from the terminal.
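To make the mechanism concrete, here is a deliberately minimal, hand-rolled `pre-commit` hook. It is an illustration only and not a substitute for a dedicated secrets scanner: the two patterns (an AWS access key ID shape and a PEM private key header) are assumptions chosen for the example, and the function names are hypothetical. Saved as `.git/hooks/pre-commit` and made executable, it scans only the lines being added in the staged changes.

```shell
#!/bin/sh
# Minimal illustrative pre-commit hook. Save as .git/hooks/pre-commit and
# run `chmod +x .git/hooks/pre-commit`. The patterns below are examples only.

# scan_for_secrets: read text on stdin and print any lines that look like
# secrets. Returns 1 if something suspicious was found, 0 otherwise.
scan_for_secrets() {
  if grep -E -e 'AKIA[0-9A-Z]{16}' -e '-----BEGIN [A-Z ]*PRIVATE KEY-----'; then
    return 1
  fi
  return 0
}

# staged_additions: print the lines added in the index, without the leading
# "+" and without the "+++ b/file" headers.
staged_additions() {
  git diff --cached --unified=0 --no-color | sed -n -e '/^+++ /d' -e 's/^+//p'
}

# A non-zero exit status from a pre-commit hook makes git abort the commit.
if ! staged_additions | scan_for_secrets; then
  echo "pre-commit: possible secret in staged changes; commit aborted." >&2
  exit 1
fi
```

To try it, stage a file containing a line such as `AKIAABCDEFGHIJKLMNOP` and run `git commit`; the hook prints the matching line and the commit is aborted.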

GitGuardian makes it very easy to enable this kind of pre-commit check with ggshield, its CLI tool. With just three quick commands, you can install the tool, authenticate it, and set up the needed git hook at a global level, meaning all your local repositories will run the same pre-commit check.

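```shell
# Install the ggshield CLI
pip install ggshield

# Authenticate ggshield with your GitGuardian account
ggshield auth login

# Install the pre-commit hook globally, for all local repositories
ggshield install --mode global
```

This free CLI tool can also scan your repositories whenever you want; there is no need to wait for your next commit.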

After you set up the pre-commit hook, each time you run `git commit`, ggshield will scan the index. If it finds a secret, it stops the commit, tells you exactly where the secret is and what kind of secret is involved, and gives you some helpful tips on remediation.

DevSecOps Is a Global Community: Including the Midwest

While many of the participants at DevOps Midwest were, predictably, from the St. Louis area, everyone at the event is part of a larger global community, one that is not defined by geographic boundaries but united by a common vision.

The DevOps community believes that we can make a better world by embracing continuous feedback loops to improve collaboration between teams and users. We believe that if repetitive and time-consuming tasks can be automated, they should be automated. We believe that high availability and scalability go hand-in-hand with improved security.

No matter what approach you take to migrate to the cloud or what specific platforms and tools you end up selecting, keeping your secrets safe should be a priority. Our historical scanning can help you understand the state of secrets sprawl in your legacy applications as you prepare to move, and our real-time scanning can keep you safe as the application runs in its new home. And with ggshield, you can keep any secrets that do slip into your work out of your shared repos.

Published at DZone with permission of Dwayne McDaniel.

Opinions expressed by DZone contributors are their own.
