Develop XR With Oracle Cloud, Database on HoloLens, Ep 1: Spatial, AI/ML, Kubernetes, and OpenTelemetry

This is the first piece in a series on developing XR applications and experiences using Oracle. Here, explore Spatial, AI/ML, Kubernetes, and OpenTelemetry.

This is the first piece in a series on developing XR applications and experiences using Oracle. Specifically, I will show applications running with the following:

- Oracle database and cloud technologies

- HoloLens 2 (Microsoft Mixed Reality Headset)

- MRTK (Mixed Reality Toolkit) APIs (v2.7.2)

- Unity (v2021.1.20f) platform (leading software for creating and operating interactive, real-time 3D content)

Throughout the blog, I will reference a corresponding workshop video that you can view here:

Extended Reality (XR) and HoloLens

XR (extended reality) is the umbrella term for VR, AR, and MR. The fourth evolution, the metaverse (omniverse, mesh, etc.), essentially refers to the inevitable integration of XR, to one extent or another, into everyday life, analogous to how smartphones are integrated today.

While the concepts shown can be applied to one extent or another in different flavors of XR and devices, the focus is on what will be the most common, everyday usage of XR in the future: the eventual existence of XR glasses. This being the case, the HoloLens is used for developing and demonstrating, as it is the most advanced technology that currently exists to that end. The HoloLens presents holograms and sounds spatially to the wearer, who can interact with them via hands, speech, and eye gaze. There is much more to the HoloLens than this basic definition, of course, and much more ahead in this space in general.

The XR HoloLens applications are developed using the MRTK, which provides an extensive array of APIs that are portable across devices. The degree of portability varies with the nature and capability of the device, but the approach is in line with Apple ARKit, Google ARCore, etc. It is also forward-looking, such that it will be applicable to future devices (glasses, etc.) from Apple and other vendors.

Demonstrations

I will start the series by demonstrating an XR version of a popular Oracle LiveLabs workshop, "Simplify Microservices with converged Oracle Database." It demonstrates a number of different areas of modern app dev, as well as DevSecOps, including Kubernetes, microservices and related data patterns, Spatial, Maps, AI/ML/OML, Observability (in particular tracing), basic Graph, etc.

Future installments of this series will continue to give examples and explain XR-enablement of Oracle Database functionality such as Graph, IoT, Event Mesh, Sagas, ML, Unified Observability, Chaos testing, and more. Additionally, future installments will address industry use cases as well as Oracle AI cloud offerings such as computer vision, speech recognition, and text semantics that work in tandem with the database. At the same time, I will show more aspects and use cases of XR/MR with these services.

Video 1: “GrabDish” (Online Store/Food Delivery) Frontend

The microservices workshop and the XR version of it use a “GrabDish” food delivery service application to show the concepts mentioned.

The video and this blog are broken into two demos. For each, I will list the Oracle technologies used, followed by the MRTK/HoloLens features that use them. Unity is the development tool that brings these two together (Unreal Engine is an alternative).

- Food options (sushi, burger, pizza) are shown as 3D images. These images are loaded from the cloud object store via the Oracle database that fronts them.

- Users can select any object with either hand, voice/speech, or eye gaze (simply by staring at it).

- Users can then bring the object closer by making a hand gesture or saying "come to me." Once the image is close, they can rotate it by simply staring at one side or the other, or they can grab it and move it by hand.

- Once the food is selected, a suggestive sale for a drink is generated via AI/ML/OML (in the original workshop this is a food and wine pairing and in the XR version this is a tea pairing for the sushi selected).

- "Dwell" refers to a prolonged eye gaze at a particular location. This is demonstrated when the user dwells their gaze on a tea and, as a result, hears the name of the tea spoken. This audio is also retrieved via the database.

- The items selected for the order are placed on a new MRTK resource called a dock. This is a very useful construct (serving as a container and categorization mechanism) that allows a number of items to be placed on it and resized so that they all fit.

- A dwell button with volumetric UI is then selected to place the order. The order is inserted into the Oracle database in JSON format and the appropriate inventory is reduced using relational SQL. This demonstrates two of the many data types supported by the Oracle converged database.

- Another data model supported in the database is spatial. This is demonstrated when the "Deliver Order" button is selected. The restaurant and delivery addresses (in this case, Rittenhouse Square in Philadelphia) are sent to the Oracle spatial cloud service backed by the Oracle database, where a routing API is used to geocode the addresses, compute the driving path between them, and return the result as GeoJSON. This is then fed to the Map API (Google, Bing Maps, Mapbox, etc.), where it is plotted on a 3D map. A car follows this traced route between the points on the 3D map in the HoloLens. Again, this map can be manipulated via hand, speech, and eye gaze.

- These data types can be accessed in the Oracle database via any number of languages and also via REST endpoints.
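As a rough illustration of the last step of the delivery flow, the sketch below parses a GeoJSON LineString and finds the point a car would occupy at a given fraction of the route. It is not the workshop's code (the actual application is built in Unity), and the function names, the sample route, and its coordinates are all made up for this example. It assumes the routing result is a single GeoJSON LineString, optionally wrapped in a Feature, with coordinates in longitude/latitude order.

```python
import json
import math

EARTH_RADIUS_M = 6371000.0

def haversine_m(a, b):
    """Great-circle distance in meters between two (lon, lat) points."""
    lon1, lat1, lon2, lat2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))

def route_coordinates(geojson_text):
    """Extract (lon, lat) pairs from a LineString or a Feature wrapping one."""
    doc = json.loads(geojson_text)
    geometry = doc["geometry"] if doc.get("type") == "Feature" else doc
    if geometry.get("type") != "LineString":
        raise ValueError("expected a GeoJSON LineString")
    return [(float(c[0]), float(c[1])) for c in geometry["coordinates"]]

def position_along(coords, fraction):
    """Return the (lon, lat) point at `fraction` (0..1) of the route length."""
    fraction = min(max(fraction, 0.0), 1.0)
    segments = list(zip(coords, coords[1:]))
    lengths = [haversine_m(p, q) for p, q in segments]
    total = sum(lengths)
    if total == 0:
        return coords[0]
    target = fraction * total
    for (p, q), length in zip(segments, lengths):
        if length > 0 and target <= length:
            t = target / length
            # Linear interpolation inside a short segment is close enough here.
            return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))
        target -= length
    return coords[-1]

# Hypothetical routing response (made-up coordinates, not real service output).
SAMPLE_ROUTE = json.dumps({
    "type": "Feature",
    "properties": {},
    "geometry": {
        "type": "LineString",
        "coordinates": [[-75.0, 40.0], [-75.0, 40.1], [-75.0, 40.2]],
    },
})

route = route_coordinates(SAMPLE_ROUTE)
halfway = position_along(route, 0.5)
```

In a render loop, the fraction would typically advance with elapsed time, and the resulting longitude/latitude would be converted to map-local coordinates by whichever map SDK is in use.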

Video 2: "GrabDish" DevOps (Kubernetes, Health Probes, Tracing/OpenTelemetry, etc.)

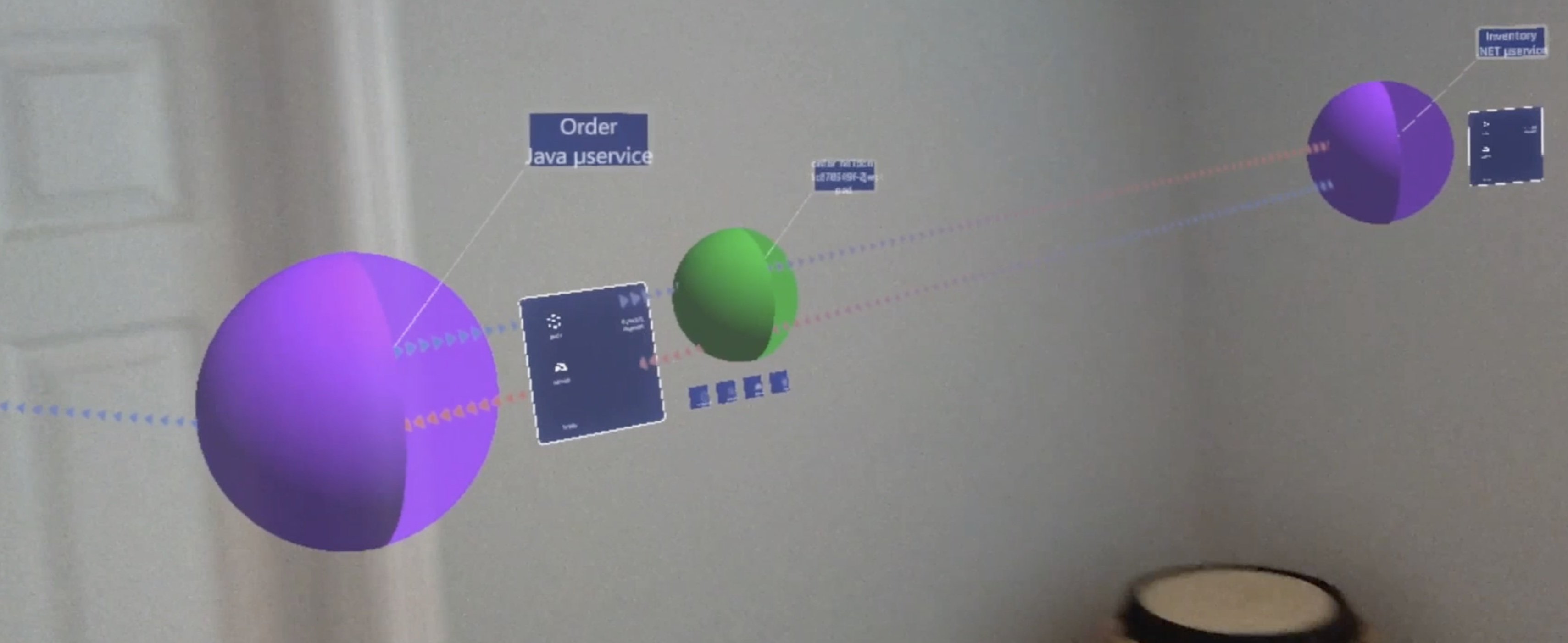

- A 3D visual representation of a live Kubernetes cluster and its related resources, including namespaces, deployments, pods (fronted/dispatched to by services), etc., is shown in the same hierarchical relationship as in Kubernetes. Resources/objects can be grabbed, rotated, moved, scaled, etc. in a relational and proportionate manner.

- The tags for these resources re-orient themselves so that they are always facing the user. Because the HoloLens is constantly spatially mapping the mesh of the environment, the placement of the objects remains consistent even across restarts. This can, of course, be extended to incorporate remote and GPS aspects, and the mesh can be shared for digital double and remote placement (e.g., advanced remote assist) use cases.

- Each pod has a menu with various options/actions, including the ability to select and view that pod’s logs. The logs can be read either by scrolling with the hand or by simply reading them as eye-tracking is used to scroll and advance the page.

- The original workshop is opened in a browser in order to set the health advertised by the Order service to "down". This is done to trigger the Kubernetes health probes to restart the service. This is reflected in the XR application by the pod turning red and an audible alert about the health status. The sound is also spatially mapped: its source is the pod object, so if the pod is across the room, the sound comes from across the room.

- Visual tracing is then demonstrated by placing an order. OpenTracing/OpenTelemetry is used to trace the flow of information through this system. This is translated and mapped to the visual representation of the Kubernetes objects and database involved. Specific order and saga tags are selected in order to identify a particular trace, and these labels can be visually observed flowing through the graph of nodes. This, of course, has greater potential in graph analytics.
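To make the cluster visualization a little more concrete, here is a minimal sketch of how flat pod records could be grouped into the namespace, deployment, pod hierarchy described above, with each pod node carrying a display color and an alert flag. This is an illustration only, not the application's code: the record fields, the names in the sample data, and the green color for healthy pods are all assumptions (the demo only states that an unhealthy pod turns red and triggers an audible alert).

```python
from collections import defaultdict

UNHEALTHY_COLOR = "red"    # from the demo: an unhealthy pod turns red
HEALTHY_COLOR = "green"    # assumption: the healthy color is not stated

def build_scene_tree(pods):
    """Group flat pod records into namespace -> deployment -> [pod nodes]."""
    tree = defaultdict(lambda: defaultdict(list))
    for pod in pods:
        node = {
            "name": pod["name"],
            "color": HEALTHY_COLOR if pod["ready"] else UNHEALTHY_COLOR,
            # The renderer would play a spatialized alert from this node.
            "alert": not pod["ready"],
        }
        tree[pod["namespace"]][pod["deployment"]].append(node)
    # Convert to plain dicts so lookups of missing keys fail loudly.
    return {ns: dict(deployments) for ns, deployments in tree.items()}

# Hypothetical pod records, e.g. as summarized from a cluster API response.
pods = [
    {"namespace": "msdataworkshop", "deployment": "order",
     "name": "order-abc12", "ready": False},
    {"namespace": "msdataworkshop", "deployment": "inventory",
     "name": "inventory-def34", "ready": True},
    {"namespace": "kube-system", "deployment": "coredns",
     "name": "coredns-xyz99", "ready": True},
]

scene = build_scene_tree(pods)
alerts = [node["name"]
          for deployments in scene.values()
          for nodes in deployments.values()
          for node in nodes if node["alert"]]
```

Keeping the hierarchy as nested containers mirrors the parent/child layout of the 3D scene, so moving or scaling a namespace node can carry its deployments and pods with it.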

Additional Thoughts

Please see the blogs I publish for more information on Oracle converged database as well as various topics around microservices, observability, transaction processing, etc.

Also, please feel free to contact me with any questions or suggestions for new blogs and videos as I am very open to suggestions. Thanks for reading and watching.

Content thanks go to the wonderful Ruirui Hou for Chinese audio, Hiromu Kato for Japanese audio, Chaosmonger Studio for the car graphic, Altaer-lite for the sushi graphic, and Gian Marco Rizzo for the hamburger graphic.
