Deploying Your Microservices

Ready to deploy your awesome microservices? Read on for one architect's advice on how to make it happen.

You have evaluated your requirements, discussed the options with your team, and determined that the best approach for building your application is to leverage microservices. You look forward to the improved productivity, speed, and scalability promised by the approach. You've successfully written a few services and are ready to deploy.

Working with monolithic applications is not easy, but while a lot can go wrong, deployment is relatively straightforward. Deploying a monolith typically means running the application on a server (physical or virtual), configuring that server properly, and, where necessary, replicating that server setup X times behind a load balancer.

Your new microservices approach calls for a large number of separate applications, and that number is very likely to grow. Services may be RESTful, scheduled jobs, event-based, and so on. Each has its own server configuration and scaling requirements. Even with all of this complexity, microservices need to be created and deployed quickly, often automatically as part of the CI/CD pipeline.

Here are some of the deployment options:

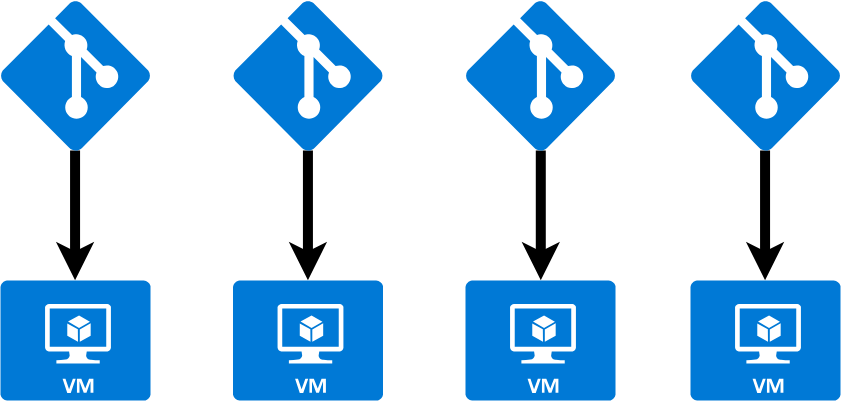

Virtual Machines

This architectural pattern is probably the easiest to understand for someone transitioning from monolithic applications. In this setup, every microservice gets its own virtual machine.

The services are easy to reason about because each one is isolated with its own CPU, memory, and server configuration. You can log in to a server and debug a service in the same way you would with a traditional monolith. You leverage the same infrastructure that has been available for years and has proven to be a solid choice. A service can be scaled by increasing or decreasing the size of its VM, and many IaaS hosts offer a mechanism for auto-scaling.

Creating these machines can be a difficult process, but there are a handful of tools to help. Netflix created an open-source application, Aminator, to simplify the process of building machine images for EC2 instances. It leverages a base AMI and a package link that installs the application. Other options include HashiCorp's Packer and Boxfuse.

This pattern is shrinking in popularity due to its many drawbacks. The first is that it's expensive. Each service now requires an entire operating system, which increases the necessary size and power of each VM. If auto-scaling is available, it tends to be very slow, so the usual practice is to over-provision resources to be ready for any peak traffic. Services like EC2 offer a fixed set of configurations to choose from, which may not fit your needs. All of this means paying for a large amount of unused compute resources.

VMs are also slow to launch. The machines need to be built and initialized before they can begin handling traffic. This will slow down your CI/CD pipeline and make adding new services a slow and potentially painful process.

Finally, this architecture will create a lot of responsibility for you or someone on your operations team, from configuring each machine to monitoring its uptime and handling errors. This pattern is not fault-tolerant on its own, so a major error or bug could take down any individual service and cause errors for its callers.

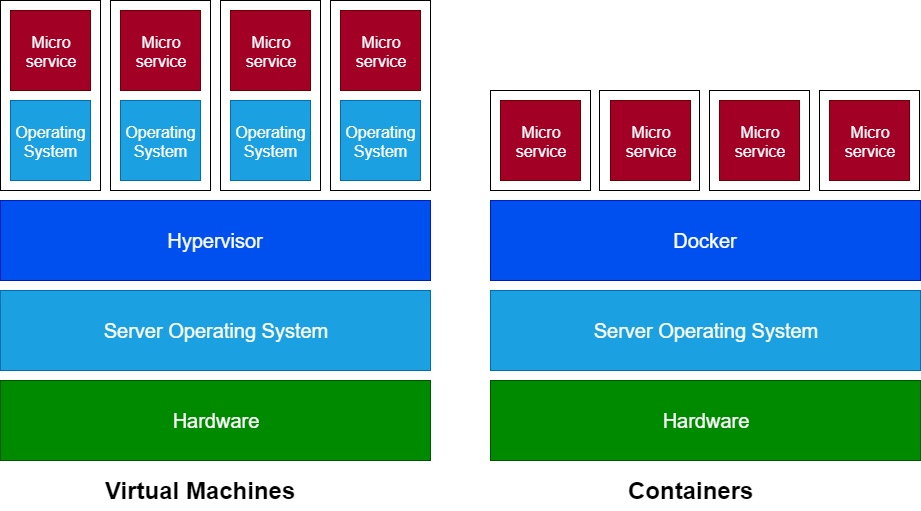

Containers

Containers and virtual machines share similar use cases. Both provide resource isolation and allocation benefits, but where virtual machines virtualize the underlying hardware, containers virtualize the operating system. Removing the need to copy and configure an entire operating system makes containers portable and efficient.

Since containers are abstractions at the application level, they leverage the host operating system and share that kernel with all other containers. While containers run as isolated processes, they require fewer resources and less disk space because there is no operating system to duplicate. This makes containers an ideal option for developing and deploying applications. Docker was released in 2013 and quickly became the de facto standard for containerizing an application.

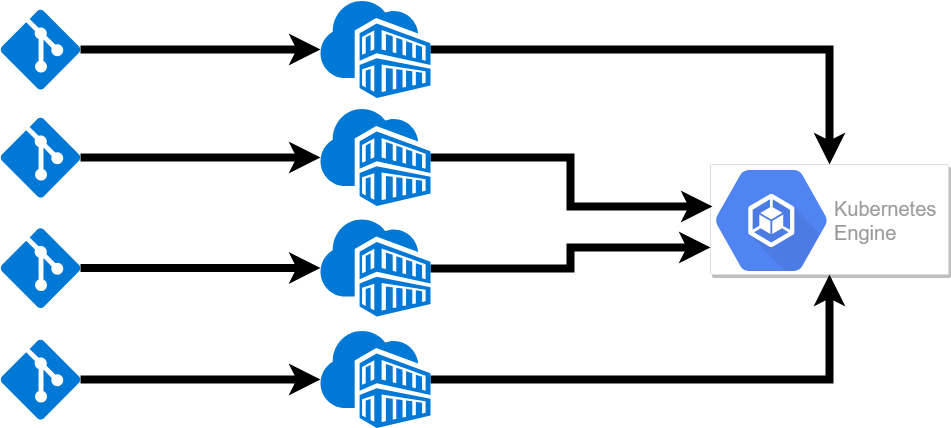

Container Orchestration

Having your application in well-packaged, standalone containers is the first step in managing multiple microservices. The next is managing all of the containers, allowing them to share resources, and setting configuration for scaling. This is where a container orchestration platform comes in.

When you use a container orchestration tool, you typically describe the configuration in a YAML or JSON file. This file specifies where to download the container image, how networking between containers should be handled, how to handle storage, where to push logs, and any other necessary configuration. Since this configuration lives in a text file, you can add it to source control to track changes and easily include it in your CI/CD pipeline.
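As a rough illustration of what such a file contains, the sketch below builds a Kubernetes-style Deployment description as a Python dictionary and prints it as JSON. The service name `orders-service`, the image URL, and the port are hypothetical placeholders, and real configurations usually carry more settings (storage, logging, resource limits) than shown here.

```python
import json


def build_deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Return a minimal Kubernetes-style Deployment description."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector tells the orchestrator which containers belong
            # to this deployment; it must match the template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # where to download the image
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical values, for illustration only.
manifest = build_deployment(
    name="orders-service",
    image="registry.example.com/orders-service:1.0.0",
    replicas=3,
    port=8080,
)

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
```

Because the output is plain text, it can be committed alongside the service's code and reviewed like any other change.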

Once containers are configured and deployed, the orchestration tool manages their lifecycle. This includes starting and stopping a service, scaling it up or down by launching or removing replicas, and restarting containers after failure. This greatly reduces the amount of management needed.
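The lifecycle management described here can be pictured as a loop that compares the desired number of replicas with what is actually running and corrects any difference. The following is a minimal simulation of that idea, not the implementation of any particular orchestrator; the `Service` class and the `cart` service are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Desired and observed state for one service (hypothetical model)."""
    name: str
    desired_replicas: int
    running: list = field(default_factory=list)  # IDs of healthy containers
    next_id: int = 0


def reconcile(service: Service) -> list:
    """Bring the running set in line with the desired replica count.

    Returns a log of the actions taken, for illustration.
    """
    actions = []
    # Start containers until the desired count is reached.
    while len(service.running) < service.desired_replicas:
        container_id = f"{service.name}-{service.next_id}"
        service.next_id += 1
        service.running.append(container_id)
        actions.append(f"start {container_id}")
    # Stop surplus containers after a scale-down.
    while len(service.running) > service.desired_replicas:
        actions.append(f"stop {service.running.pop()}")
    return actions


svc = Service(name="cart", desired_replicas=3)
first = reconcile(svc)            # initial rollout: starts three containers

svc.running.remove("cart-1")      # simulate a crashed container
after_crash = reconcile(svc)      # a replacement is started

svc.desired_replicas = 1          # scale down
after_scale_down = reconcile(svc)
```

The same function handles the initial rollout, recovery from failure, and scaling, which is why declaring the desired state is usually enough.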

Containers also benefit from being supported on all operating systems and most cloud platforms. This allows you to move between AWS, GCP, and Azure without rewriting your code.

There are many orchestration tools available, including Amazon's ECS, Docker Swarm, Apache Mesos, and the most popular, Kubernetes. Kubernetes was created at Google and has since been released as open source. It has become so popular that both Azure (AKS) and AWS (EKS) offer managed support for it. While containers are relatively new compared to VMs, they are quickly evolving and becoming a standard for microservices deployment.

A standard implementation is to package each microservice as a container and then deploy those containers to a container orchestration service like EKS.

If you would like a deeper understanding of leveraging containers and Kubernetes for your architecture, you can download the commercetools Blueprint Architecture for Modern Commerce.

Depending on the service you choose, you may still have to provision the underlying infrastructure. Your containers will only be able to scale to the size of your Kubernetes cluster. You will also be paying for idle resources, since each service has a minimum capacity set. While this saves money compared to VMs, you are still being charged for unused resources.

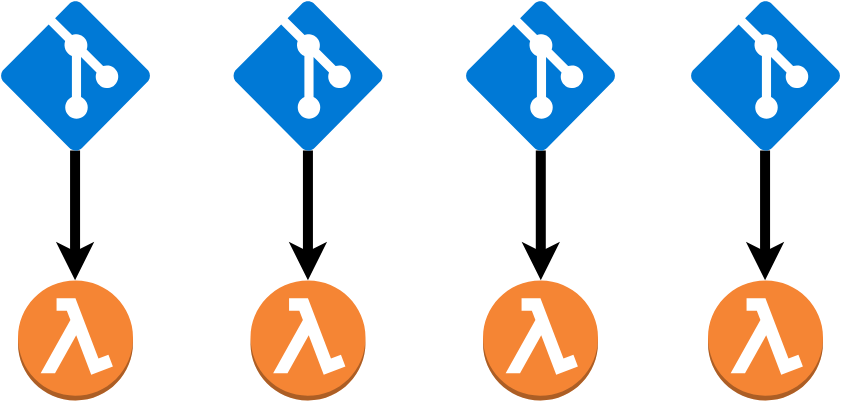

Functions as a Service

Functions as a Service (FaaS), or serverless, is a new and fast-growing option. Instead of virtualizing the hardware (VMs) or virtualizing the operating system (containers), serverless deploys the smallest possible component: a function or package of code. In this setup, each microservice is deployed to a FaaS platform like AWS Lambda.

There are plenty of memes pointing out that serverless is just someone else's servers. The goal of serverless is not to remove servers completely, but instead to allow you to never think about them. You have no access to the underlying infrastructure, no insight on the hardware, no idea what operating system is being used. The only thing you know is that your code is running.

Amazon pioneered the concept with AWS Lambda. Since then, competitors have launched, including Google Cloud Functions and Azure Functions. Lambda is still the clear leader with faster start times, additional integrations (e.g. Step Functions), and a larger ecosystem.

Lambda functions are stateless. The code package is uploaded to AWS and executed in response to a specific trigger:

- Directly through a CLI request.

- HTTP request routed through an API Gateway.

- Schedule (CRON Job).

- Cloud Event from another service like S3, Simple Email Service, DynamoDB, etc.
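To make the triggers listed above concrete, here is a minimal sketch of a Python function in the `handler(event, context)` shape that Lambda invokes. The way it tells triggers apart is a simplification based on a few well-known event fields (`httpMethod` for API Gateway REST proxy events, `source` for scheduled events, `Records` for events from services such as S3); real events contain many more fields, and the response payloads here are hypothetical.

```python
import json


def handler(event, context):
    """Entry point in the shape AWS Lambda expects for Python functions."""
    # API Gateway (REST proxy integration) events carry an HTTP method.
    if "httpMethod" in event:
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"message": "hello", "path": event.get("path")}),
        }

    # Scheduled (cron-style) events originate from the events service.
    if event.get("source") == "aws.events":
        return {"handled": "schedule"}

    # Events from services such as S3 arrive as a list of records.
    if "Records" in event:
        sources = [record.get("eventSource") for record in event["Records"]]
        return {"handled": "cloud-event", "sources": sources}

    # Anything else is treated as a direct invocation (for example, from a CLI).
    return {"handled": "direct", "echo": event}
```

In practice, each trigger is often given its own small function rather than one handler that branches, which keeps every deployment unit focused on a single job.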

There are multiple options to streamline deployment, including AWS SAM (Serverless Application Model), Claudia.js, Apex, Architect, and the poorly named Serverless Framework. You can also use a tool like Terraform or CloudFormation to define your FaaS infrastructure.

FaaS comes with many advantages, one of the biggest being price. You only pay for the resources used. There is no idle time or underlying infrastructure to pay for. As a result, cloud hosting bills can often be 1/10 the price of more traditional deployments.
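The pricing difference comes down to simple arithmetic: an always-on server is billed for every hour of the month, while a function is billed per request and per unit of compute actually consumed. The sketch below shows the calculation under assumed inputs. Every rate and traffic figure in it is hypothetical and chosen only to show the shape of the comparison, not to reflect any provider's current prices.

```python
HOURS_PER_MONTH = 730  # average hours in a month


def always_on_cost(hourly_rate: float, instances: int = 1) -> float:
    """Monthly cost of servers that are billed whether or not they are busy."""
    return hourly_rate * HOURS_PER_MONTH * instances


def faas_cost(requests: int, avg_seconds: float, memory_gb: float,
              price_per_gb_second: float,
              price_per_million_requests: float) -> float:
    """Monthly cost when billing is per request and per unit of compute used."""
    compute = requests * avg_seconds * memory_gb * price_per_gb_second
    invocations = requests / 1_000_000 * price_per_million_requests
    return compute + invocations


# All figures below are hypothetical, chosen only to show the calculation.
vm_monthly = always_on_cost(hourly_rate=0.10)
faas_monthly = faas_cost(
    requests=2_000_000,
    avg_seconds=0.2,
    memory_gb=0.5,
    price_per_gb_second=0.0000167,
    price_per_million_requests=0.20,
)

if __name__ == "__main__":
    print(f"always-on: ${vm_monthly:.2f}/month")
    print(f"FaaS:      ${faas_monthly:.2f}/month")
```

With sustained high traffic the comparison can shift, so it is worth rerunning the numbers with your own prices and request volumes.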

FaaS also scales immediately with no need to monitor or configure the service. As events increase, new copies of your code are started automatically. Each instance stops once the code has finished executing.

With reduced configuration, lower prices, and almost infinite capacity, FaaS may seem like the perfect option for every microservices deployment. Unfortunately, it comes with some significant drawbacks that can be a deal breaker depending on your requirements.

FaaS is not intended for long-running processes. Each provider sets its own time limit, typically between 5 and 15 minutes of total execution time. Each service must be completely stateless, as a new instance can be spawned for each request. There are limitations on language support, and there is a fear of lock-in because functions typically integrate tightly with the provider's other offerings such as Step Functions, CloudWatch, and S3. You are also restricted in CPU power and total memory.
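The stateless requirement is easy to underestimate, so here is a small simulation of why it matters. `FunctionInstance` is a hypothetical stand-in for one short-lived copy of a function, and the plain dictionary stands in for an external database or cache; neither is a real platform API.

```python
class FunctionInstance:
    """Stands in for one short-lived copy of a deployed function."""

    def __init__(self, store: dict):
        self.local_count = 0   # lives only as long as this instance
        self.store = store     # stand-in for an external database or cache

    def handle_stateful(self) -> int:
        # Anti-pattern: relies on memory that vanishes with the instance.
        self.local_count += 1
        return self.local_count

    def handle_stateless(self) -> int:
        # State is read from and written to the shared external store.
        self.store["count"] = self.store.get("count", 0) + 1
        return self.store["count"]


external_store = {}

# Simulate three requests, each served by a freshly spawned instance.
stateful_results = [
    FunctionInstance(external_store).handle_stateful() for _ in range(3)
]
stateless_results = [
    FunctionInstance(external_store).handle_stateless() for _ in range(3)
]
```

The in-memory counter never gets past 1 because every request sees a new instance, while the externally stored counter advances as expected. A real external store would also need an atomic increment to stay correct under concurrent requests.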

The biggest issue for customer-facing services is the cold start. A cold start is the latency experienced when a function is triggered and no running instance is available: the time needed to load the function into memory before actually executing the request. To reduce average response time, AWS keeps idle containers available for functions that are called frequently. According to Chris Munns, Senior Developer Advocate for Serverless at AWS, cold starts account for less than 0.2% of all function invocations.

Many people try to avoid cold starts by keeping their functions "warm." They call the function at regular intervals to keep that idle container in memory. While this approach works for sequential requests, a burst of concurrent requests will cause AWS to spawn new instances, each incurring an initial cold start. Depending on language, framework, and code size, a cold start can take up to two seconds. While this is a non-issue for event-triggered executions, if you use FaaS for HTTP requests, a two-second load time can feel like an eternity and will have a significant impact on your conversion rate.
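A toy model helps show why keeping one function warm does not protect against bursts. The sketch below assumes that each instance serves one request at a time and stays warm after use; it ignores idle instances being reclaimed, and the request patterns are hypothetical.

```python
def count_cold_starts(batches, warm_instances=1):
    """Count cold starts for a sequence of request batches.

    Each entry in `batches` is the number of requests arriving at the same
    time. An instance serves one request at a time and stays warm afterwards.
    This simplified model ignores idle instances being reclaimed.
    """
    cold_starts = 0
    for concurrent_requests in batches:
        if concurrent_requests > warm_instances:
            # Extra instances must be started; each one pays a cold start.
            cold_starts += concurrent_requests - warm_instances
            warm_instances = concurrent_requests
    return cold_starts


sequential = count_cold_starts([1, 1, 1, 1, 1])   # one request at a time
burst = count_cold_starts([1, 1, 10, 1])          # a burst of ten at once
```

In this model, the sequential pattern never triggers a cold start because the single warm instance is always free, while the burst of ten forces nine new instances to start, regardless of how diligently the first one was kept warm.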

This infrequent cold start may be enough to push you away from FaaS. If not, you can take solace in the knowledge that cold starts continue to decrease in time as Lambda evolves and improves.

Summary

Deploying a microservices application is difficult. While you gain simplicity in development, scaling, and team size, your infrastructure and deployment now have added complexity. There can be hundreds of different services, each with its own requirements from configuration to scaling. While there are services to simplify deployment, each has drawbacks and benefits that must be weighed before making a decision. In the end, there is no single right solution, only the one that best fits your business.

Often the best architecture for a company will involve a hybrid approach, combining tools like serverless for background processes and Kubernetes for time-critical services. This hybrid approach can lower costs while keeping your ecommerce application responsive.
