Demystifying Virtual Thread Performance: Unveiling the Truth Beyond the Buzz

Virtual threads can provide high enough performance and resource efficiency for your concurrency goals while keeping the development model simple.


In the previous articles, you learned about virtual threads in Java 21 in terms of their history, benefits, and pitfalls. You also saw how Quarkus can help you avoid those pitfalls and how it continues to integrate virtual threads into as many Java libraries as possible.

In this article, you will learn how virtual threads perform in concurrent applications in terms of response time, throughput, and resident set size (RSS), compared with traditional blocking services and reactive programming. Many developers and IT Ops teams also wonder whether it is worth moving existing business applications in production to virtual threads for high-concurrency workloads.

Performance Applications

I ran the benchmark tests with a Todo application built on Quarkus, implementing three types of services: imperative (blocking), reactive (non-blocking), and virtual thread. The Todo application implements CRUD functionality on a relational database (e.g., PostgreSQL) and exposes it through REST APIs.

Take a look at the following code snippets for each service to see how Quarkus enables developers to implement the getAll() method, which retrieves all data from the Todo entity (table) in the database. Find the solution code in this repository.

Imperative (Blocking) Application

In Quarkus applications, you can make methods and classes blocking with the @Blocking annotation or by using a non-stream return type (e.g., String, List).

    @GET
    public List<Todo> getAll() {
        return Todo.listAll(Sort.by("order"));
    }

Virtual Threads Application

It’s quite simple to turn a blocking application into a virtual thread application. As the following code snippet shows, you just need to add the @RunOnVirtualThread annotation to the blocking service's getAll() method.

    @GET
    @RunOnVirtualThread
    public List<Todo> getAll() {
        return Todo.listAll(Sort.by("order"));
    }

Reactive (Non-Blocking) Application

Writing a reactive application can be a big challenge for Java developers because they need to understand the reactive programming model as well as continuations and event stream handlers. Quarkus allows developers to implement both non-reactive and reactive code in the same class because Quarkus is built on reactive engines such as Netty and Vert.x. To make an asynchronous reactive application in Quarkus, you can add the @NonBlocking annotation or use a Uni or Multi return type from the SmallRye Mutiny project, as in the getAll() method below.

    @GET
    public Uni<List<Todo>> getAll() {
        return Panache.withTransaction(() -> Todo.findAll(Sort.by("order")).list());
    }

Benchmark Scenario

To keep the test results fair and comparable, we followed the TechEmpower guidelines, such as conducting multiple scenarios and running both on bare metal and in containers on Kubernetes.

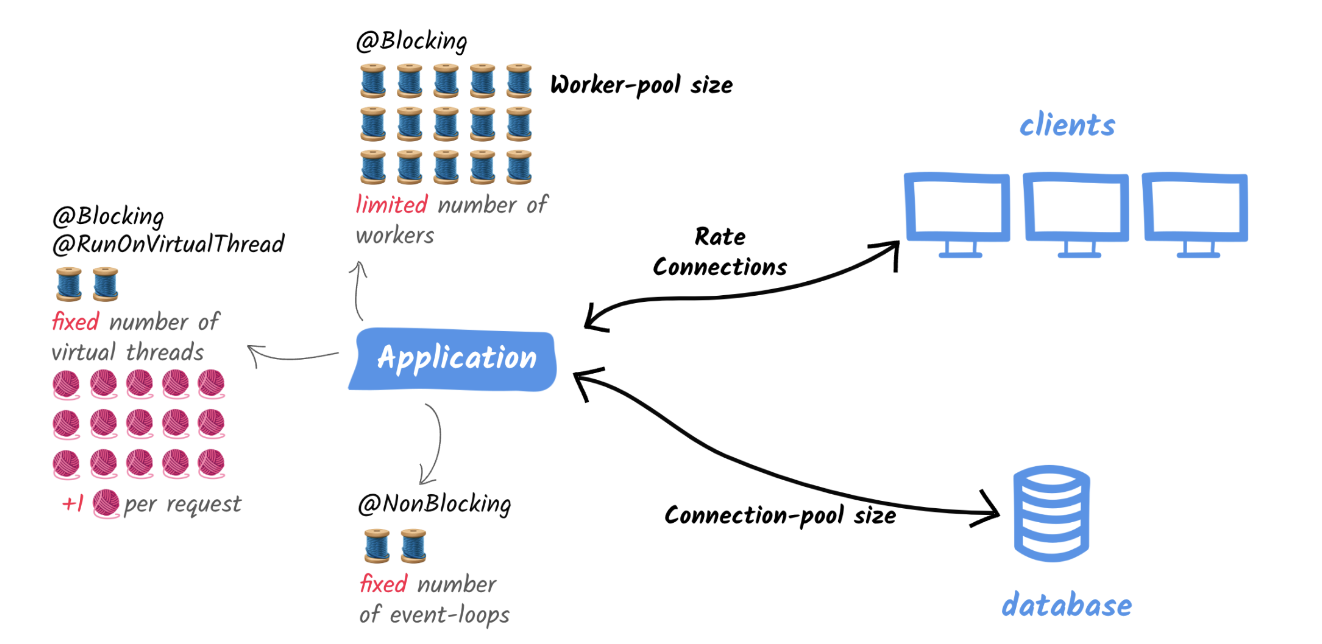

All three applications (blocking, reactive, and virtual threads) ran the same test scenario, as shown in Figure 1:

- Fetch all rows from a DB (quotes)

- Add one quote to the returned list

- Sort the list

- Return the list as JSON

Figure 1: Performance test architecture
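
The four steps of the scenario can be sketched in plain Java as follows. This is only an illustration, not the benchmark code: the `Quote` record, the in-memory `fetchAll()` stand-in for the database, and the hand-rolled JSON serializer are all assumptions made to keep the example self-contained.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

public class QuoteScenario {

    // Hypothetical row type; the real benchmark entity is not shown in the article.
    record Quote(int id, String text) {}

    // Stand-in for "fetch all rows from a DB": returns a fixed in-memory list.
    static List<Quote> fetchAll() {
        return List.of(new Quote(2, "Simplicity scales"), new Quote(1, "Measure first"));
    }

    // Minimal escaping for backslashes and double quotes only.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Hand-rolled JSON array; a real service would use a JSON library.
    static String toJson(List<Quote> quotes) {
        return quotes.stream()
                .map(q -> "{\"id\":" + q.id() + ",\"text\":\"" + escape(q.text()) + "\"}")
                .collect(Collectors.joining(",", "[", "]"));
    }

    static String handleRequest() {
        List<Quote> quotes = new ArrayList<>(fetchAll());    // 1. fetch all rows
        quotes.add(new Quote(0, "Added at request time"));   // 2. add one quote
        quotes.sort(Comparator.comparing(Quote::text));      // 3. sort the list
        return toJson(quotes);                               // 4. return it as JSON
    }

    public static void main(String[] args) {
        System.out.println(handleRequest());
    }
}
```

The work per request is deliberately small, so differences between the three services come mostly from how each threading model handles waiting on the database rather than from the business logic.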

Response Time and Throughput

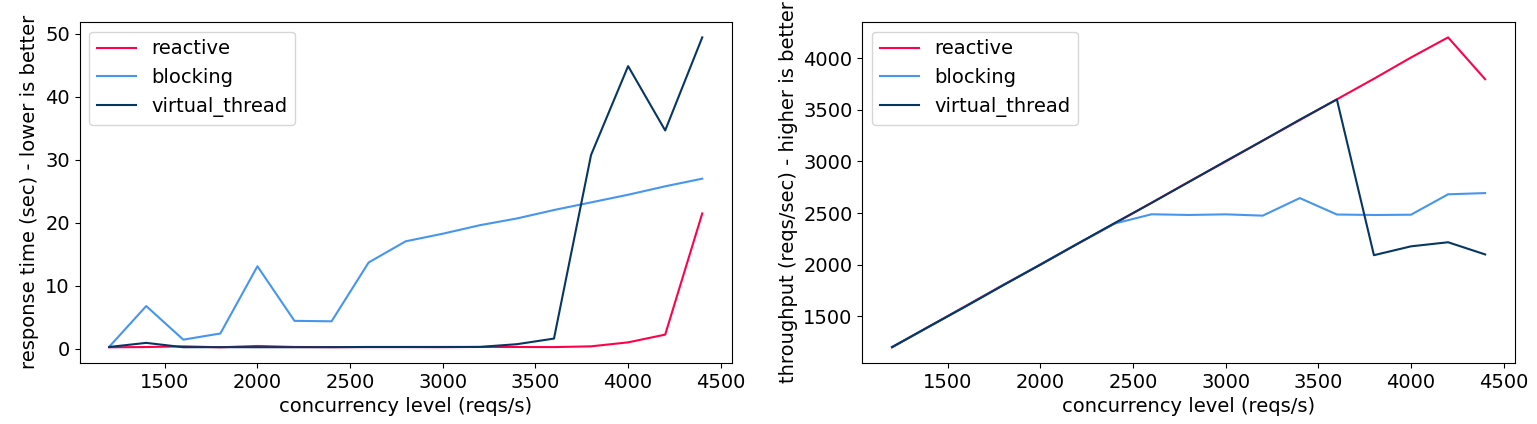

During the performance test, we increased the concurrency level from 1200 to 4400 requests per second. As you might expect, the virtual threads scaled better than the worker threads (traditional blocking services) in terms of response time and throughput. More importantly, they did not outperform the reactive service at any point. When the concurrency level reached 3500 requests per second, the virtual threads became much slower, with lower throughput, than the worker threads.

Figure 2: Response time and throughput
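
To get a feel for why virtual threads can scale better than a fixed worker pool when tasks block, here is a minimal sketch using only the JDK 21 API. It is not the benchmark described above: it sends no HTTP requests, and the 20 ms sleep, the pool size of 50, and the 1,000 tasks are arbitrary assumptions chosen for illustration.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ThreadModelComparison {

    // Simulates a blocking call (for example, a database query) by sleeping.
    static int blockingCall(int id) throws InterruptedException {
        Thread.sleep(Duration.ofMillis(20));
        return id;
    }

    // Submits `tasks` blocking calls, waits for all of them, and returns how many completed.
    static int runAll(ExecutorService executor, int tasks) throws Exception {
        List<Future<Integer>> futures = new ArrayList<>();
        for (int i = 0; i < tasks; i++) {
            final int id = i;
            Callable<Integer> task = () -> blockingCall(id);
            futures.add(executor.submit(task));
        }
        int completed = 0;
        for (Future<Integer> future : futures) {
            future.get();
            completed++;
        }
        return completed;
    }

    // Runs the workload on the given executor, closes it, and returns the elapsed milliseconds.
    static long timeMillis(ExecutorService executor, int tasks) throws Exception {
        try (executor) {
            Instant start = Instant.now();
            runAll(executor, tasks);
            return Duration.between(start, Instant.now()).toMillis();
        }
    }

    public static void main(String[] args) throws Exception {
        int tasks = 1_000;
        long pooled = timeMillis(Executors.newFixedThreadPool(50), tasks);
        long virtual = timeMillis(Executors.newVirtualThreadPerTaskExecutor(), tasks);
        System.out.println("Fixed pool of 50 platform threads: " + pooled + " ms");
        System.out.println("One virtual thread per task:       " + virtual + " ms");
    }
}
```

A toy like this only exercises pure waiting. It says nothing about the point in the benchmark where the virtual threads fell behind the worker threads, which is why measuring a real service under realistic load matters.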

Resource Usage (CPU and RSS)

When you design a concurrent application, whether or not it is deployed to the cloud, you or your IT Ops team need to estimate resource utilization and capacity along with scalability requirements. CPU and RSS (resident set size) usage are key metrics for measuring resource utilization. When the concurrency level reached 2000 requests per second, the CPU and memory usage of the virtual threads rose rapidly above that of the worker threads.

Figure 3: Resource usage (CPU and RSS)
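
If you want to sample RSS from inside a JVM while running your own tests, one option on Linux is to read the `VmRSS` field of `/proc/self/status`. The sketch below assumes a Linux host and returns an empty result elsewhere; the class and method names are hypothetical, and this is not how the figures in this article were collected.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.OptionalLong;

public class RssProbe {

    // Extracts the VmRSS value (in kB) from the text of a Linux /proc/<pid>/status file.
    static OptionalLong parseVmRssKb(String status) {
        for (String line : status.split("\n")) {
            if (line.startsWith("VmRSS:")) {
                // Line shape: "VmRSS:" followed by whitespace, a number, and "kB"
                String[] parts = line.trim().split("\\s+");
                return OptionalLong.of(Long.parseLong(parts[1]));
            }
        }
        return OptionalLong.empty();
    }

    // Reads the current process RSS; empty when /proc is unavailable (for example, not on Linux).
    static OptionalLong currentRssKb() {
        Path status = Path.of("/proc/self/status");
        if (!Files.isReadable(status)) {
            return OptionalLong.empty();
        }
        try {
            return parseVmRssKb(Files.readString(status));
        } catch (IOException e) {
            return OptionalLong.empty();
        }
    }

    public static void main(String[] args) {
        System.out.println("RSS (kB): " + currentRssKb());
        System.out.println("CPUs visible to the JVM: " + Runtime.getRuntime().availableProcessors());
    }
}
```

RSS covers the whole process (heap, thread stacks, metaspace, and native allocations), so it is a better capacity signal than heap usage alone when comparing threading models.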

Memory Usage: Container

Container platforms (e.g., Kubernetes) are essential for running concurrent applications with high scalability, resiliency, and elasticity in the cloud. Inside the resource-limited container environment, the virtual threads used less memory than the worker threads.

Figure 4: Memory usage - Container

Conclusion

You learned how virtual threads performed in multiple environments in terms of response time, throughput, resource usage, and container runtimes. At first glance, the virtual threads seem to be better than the blocking services on worker threads all the time. But when you look at the performance metrics carefully, their measured performance dropped below that of the blocking services at some concurrency levels. On the other hand, the reactive services on the event loops always performed better than both the virtual and worker threads.

Thus, virtual threads can provide high enough performance and resource efficiency, depending on your concurrency goal. And virtual threads still make it quite simple to develop concurrent applications, without the steep learning curve of reactive programming.

Opinions expressed by DZone contributors are their own.
