Deep Learning for Signal Processing: What You Need to Know

Speech, autonomous driving, image processing, wearable technology, and communication systems all work thanks to signal processing. Now, it's making waves in deep learning.

Using Deep Learning for Signal Processing

According to the Institute of Electrical and Electronics Engineers (IEEE), signal processing pervades our daily lives, usually without our noticing. Computers, radios, video, and mobile phones are all enabled by signal processing. Signal processing is a branch of electrical engineering that models and analyzes data representations of physical events. It is at the core of the digital world. Speech and audio, autonomous driving, image processing, wearable technology, and communication systems all work thanks to signal processing. And now, signal processing is starting to make waves in deep learning.

What Is a Signal?

A signal is the physical support of information. As the diagram shows, signal processing sits at the intersection of mathematics, informatics, and physical stimuli. Signals include almost all forms of data that can be digitized, such as images, video, audio, and sensor data. The physical world generates the signals, mathematics is needed to analyze them, and informatics enables the implementation.

Deep Learning for Signal Data

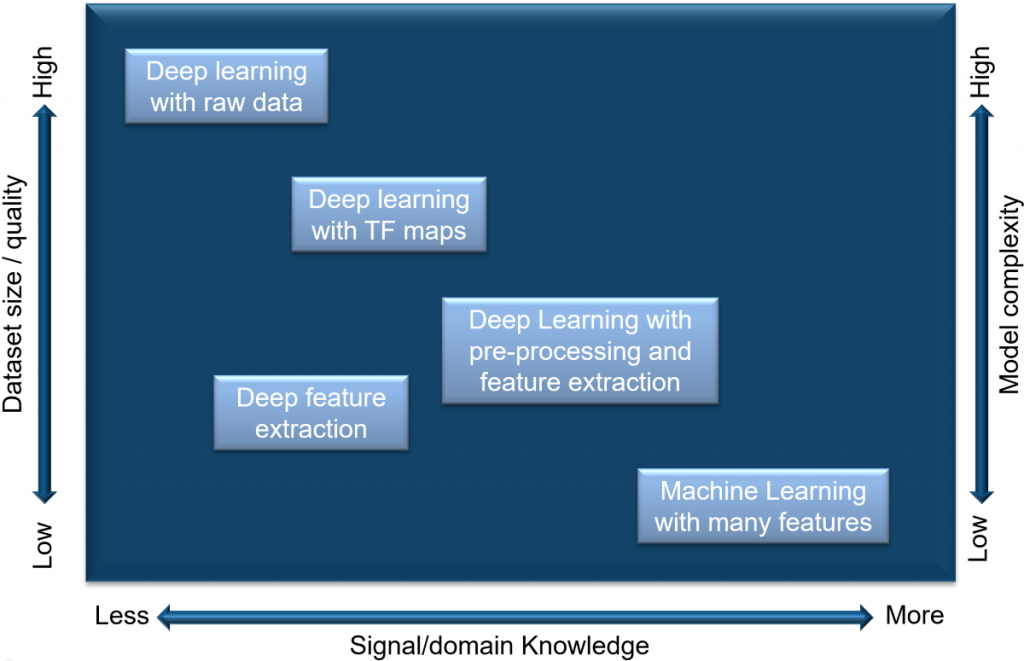

Deep learning for signal data requires extra steps compared with applying deep learning or machine learning to other data sets. Good-quality signal data is hard to obtain, and what is available tends to be noisy and highly variable. Wideband noise, jitter, and distortion are just a few of the unwanted characteristics found in most signal data.

As with all deep learning projects, and especially for signal data, your success will almost always depend on how much data you have and the computational power of your machine, so a good deep learning workstation is highly recommended.

If you want to avoid deep learning, you will need a thorough understanding of signal data and signal processing in order to apply machine learning techniques that rely on less data than deep learning does.

Deep Learning Workflow

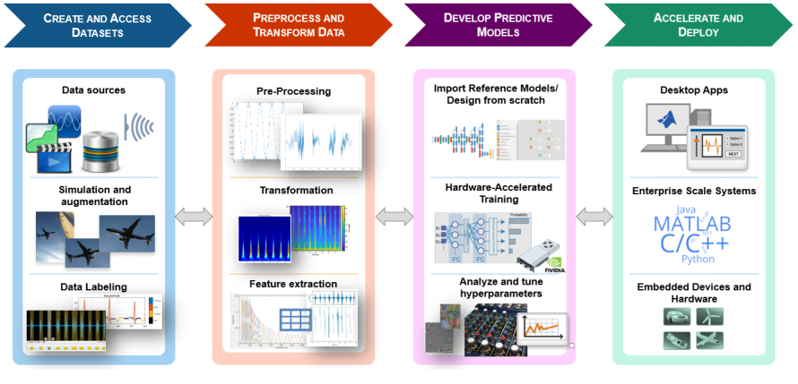

#1: The process starts with storing, reading, and pre-processing the data. This also involves extracting and transforming features and splitting the data into training and test sets. If you plan to use a supervised learning algorithm, the data will need to be labeled.

#2: Visualizing the data is key to identifying which pre-processing and feature extraction techniques are required. For signal processing, the data should be visualized in the time, frequency, and time-frequency domains for proper exploration.

#3: Once the data has been visualized, the next step is to transform it and extract features such as peaks, change points, and signal patterns.
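The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the signal is synthetic (a noisy sine wave standing in for real sensor data), the moving average is just one possible pre-processing choice, and the helper names (`make_signal`, `moving_average`, `find_peaks`, `train_test_split`) are hypothetical. It uses only the standard library so that it runs anywhere.

```python
import math
import random


def make_signal(n=200, freq=5.0, fs=100.0, noise=0.2, seed=0):
    """Synthetic stand-in for sensor data: a sine wave plus Gaussian noise."""
    rng = random.Random(seed)
    return [math.sin(2 * math.pi * freq * i / fs) + rng.gauss(0.0, noise)
            for i in range(n)]


def moving_average(x, k=5):
    """Pre-processing: smooth with a centered window of up to k samples."""
    half = k // 2
    out = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def find_peaks(x, min_height=0.5):
    """Feature extraction: indices of local maxima above min_height."""
    return [i for i in range(1, len(x) - 1)
            if x[i] > x[i - 1] and x[i] >= x[i + 1] and x[i] > min_height]


def train_test_split(x, train_fraction=0.8):
    """Chronological split, so the test set lies strictly later in time."""
    cut = int(len(x) * train_fraction)
    return x[:cut], x[cut:]


raw = make_signal()                      # step 1: read the data
smooth = moving_average(raw)             # step 1: pre-process
train, test = train_test_split(smooth)   # step 1: split
peaks = find_peaks(train)                # step 3: extract peak features
```

The split is chronological rather than shuffled because neighboring samples of a signal are strongly correlated, and a random split would leak information from the test set into the training set.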

Before the advent of machine learning and deep learning, signals were analyzed with classical time series techniques, because a signal is inherently a function of time.

- Classical Time Series Analysis: visual inspection of the time series, looking at change over time and inspecting peaks and troughs.

- Frequency Domain Analysis: according to MathWorks, frequency domain analysis is one of the key aspects of signal processing. It is used in areas such as communications, geology, remote sensing, and image processing. Time domain analysis shows how a signal changes over time, while a frequency domain representation shows how the signal’s energy is distributed across frequencies. It also includes the phase shift that must be applied to each frequency component in order to recover the original time signal by combining all the individual frequency components. A signal is converted between the time and frequency domains using a mathematical operator called a “transform”. The best-known example is the Discrete Fourier Transform (DFT), which is usually computed with the Fast Fourier Transform (FFT) algorithm.
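To make the time and frequency domain relationship concrete, here is a minimal sketch of the DFT and its inverse written directly from the definition. The test signal, the sampling rate `fs`, and the function names `dft` and `idft` are assumptions made for illustration. The direct form costs O(N²); in practice an FFT routine such as `numpy.fft.fft` computes the same result much faster.

```python
import cmath
import math


def dft(x):
    """Discrete Fourier Transform, computed directly from the definition."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse DFT: recombine all frequency components into the time signal."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


fs = 64   # assumed sampling rate in Hz
n = 64    # one second of samples, so bin k corresponds to k Hz

# Assumed test signal: a 4 Hz sine plus a weaker 10 Hz cosine.
signal = [math.sin(2 * math.pi * 4 * t / fs) + 0.5 * math.cos(2 * math.pi * 10 * t / fs)
          for t in range(n)]

spectrum = dft(signal)
magnitude = [abs(c) for c in spectrum[: n // 2]]       # strength of each frequency
phase = [cmath.phase(c) for c in spectrum[: n // 2]]   # phase shift of each frequency
dominant_hz = max(range(n // 2), key=lambda k: magnitude[k]) * fs / n
recovered = [c.real for c in idft(spectrum)]           # back to the time domain
```

Here `magnitude` peaks at the two frequencies present in the signal, `phase` holds the shift for each component, and `recovered` matches the original samples up to floating-point error, which is the round trip the paragraph above describes.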

Long Short-Term Memory Models (LSTMs) for Human Activity Recognition (HAR)

Human Activity Recognition (HAR) has been gaining traction in recent years as human-computer interaction has advanced. It has real-world applications in industries including healthcare, fitness, gaming, the military, and navigation. There are two types of HAR:

- Sensor-based HAR: wearable sensors are attached to the human body, and human activity is translated into specific sensor signal patterns that can be segmented and identified. Most research has shifted to a sensor-based approach because of advances in sensor technology and its low cost.

- External Device HAR

Deep learning techniques have been used to overcome the shortcomings of machine learning techniques that rely on heuristics handcrafted by the user. Because deep learning methods extract features automatically, they scale better to more complex tasks. Sensor data is growing at a rapid pace (e.g., Apple Watch, Fitbit, pedestrian tracking), and the amount of data generated is sufficient for deep learning methods to learn and generate more accurate results.

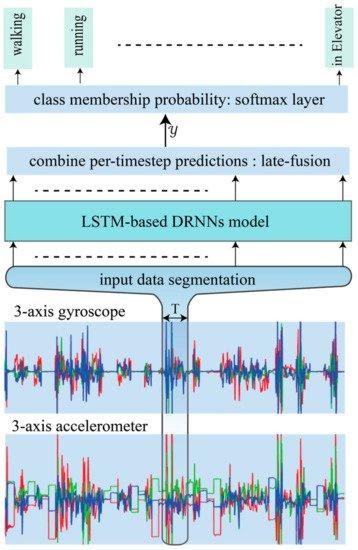

Recurrent neural networks (RNNs) are a suitable choice for signal data because it inherently has a time component and is therefore sequential. The paper “Deep Recurrent Neural Networks for Human Activity Recognition” outlines several LSTM based deep RNNs for building HAR models that classify activities mapped from variable-length input sequences.

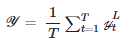

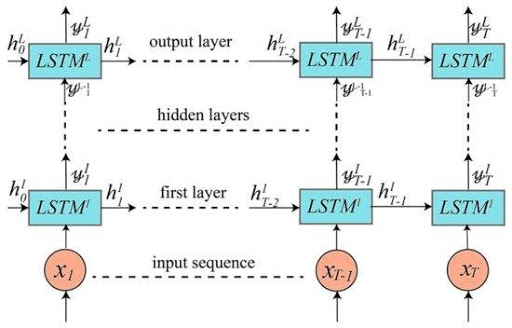

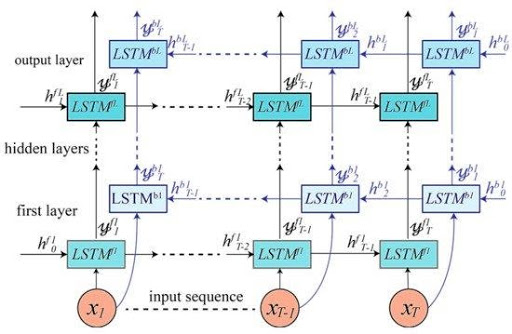

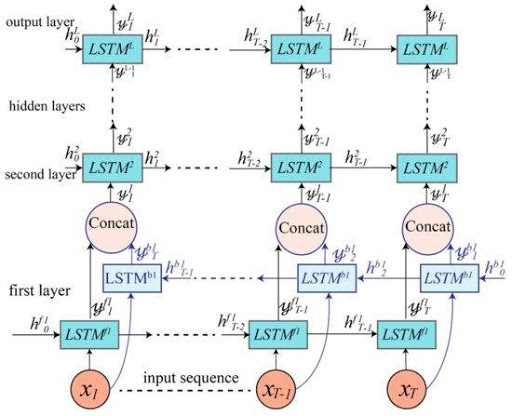

The above figure shows a proposed architecture for using LSTM based Deep RNNs for HAR. The inputs will be raw signals obtained from multi-modal sensors, segmented into windows of length T, and fed into the LSTM based DRNN model. The model will then output class prediction scores for each timestep, which are then merged via late-fusion and fed into the softmax layer to determine class membership probability.

The proposed model performs a direct end-to-end mapping from raw multimodal sensor inputs to activity label classifications. The input is a discrete sequence of equally spaced samples, where each sample $x_t$ is a vector of the values observed by the sensors at time $t$. These samples are segmented into windows with a maximum time index $T$ and fed into an LSTM based DRNN model. The output of the model is a sequence of scores representing activity label predictions, with one prediction per time step, $(y_1^L, y_2^L, \ldots, y_T^L)$, where $y_t^L \in \mathbb{R}^C$ is a vector of scores representing the prediction for a given input sample $x_t$ and $C$ is the number of activity classes.
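The windowing step described above can be sketched as follows. This is a minimal illustration, not the paper's code: the function name `segment_windows` is hypothetical, the optional `step` parameter for overlapping windows is an assumption that the text does not mention, and dropping a trailing remainder shorter than T is one choice among several.

```python
def segment_windows(samples, T, step=None):
    """Split a sequence of per-time-step sensor vectors into windows of length T.

    step defaults to T (non-overlapping windows); a smaller step gives
    overlapping windows. A trailing remainder shorter than T is dropped.
    """
    if T <= 0:
        raise ValueError("T must be positive")
    step = T if step is None else step
    if step <= 0:
        raise ValueError("step must be positive")
    return [samples[start:start + T]
            for start in range(0, len(samples) - T + 1, step)]


# Ten time steps of a made-up 3-channel sensor reading (e.g. x, y, z axes).
samples = [(t, 2 * t, 3 * t) for t in range(10)]

windows = segment_windows(samples, T=4)               # starts at t = 0 and 4
overlapping = segment_windows(samples, T=4, step=2)   # starts at t = 0, 2, 4, 6
```

Each window is a list of T sample vectors, which is the shape a sequence model consumes one time step at a time.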

There will be a score for each time step predicting the type of activity occurring at time t. The prediction for the entire window of length T is obtained by merging the individual scores into a single prediction. The model uses a late-fusion technique in which the classification decisions from individual samples are combined into the overall prediction for a window.
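A minimal sketch of late fusion is shown below. It assumes the per-time-step scores are merged by summing them per class before the softmax. The text only says the scores are merged and passed to a softmax layer, so the sum rule, the function names, and the example scores are illustrative assumptions.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(step_scores):
    """Merge per-time-step score vectors (T x C) into one window prediction.

    Assumption: scores are summed per class across time steps, then softmaxed.
    Returns the predicted class index and the class probabilities.
    """
    num_classes = len(step_scores[0])
    fused = [sum(step[c] for step in step_scores) for c in range(num_classes)]
    probs = softmax(fused)
    label = max(range(num_classes), key=lambda c: probs[c])
    return label, probs


# Made-up scores for a window of T = 4 time steps and C = 3 activity classes.
step_scores = [
    [2.0, 0.5, 0.1],
    [1.5, 1.0, 0.2],
    [0.2, 2.5, 0.1],   # this single step favors class 1
    [1.8, 0.4, 0.3],
]
label, probs = late_fusion(step_scores)
```

In this example the third time step on its own favors class 1, but the fused scores favor class 0, which shows how combining decisions over the window can smooth out an isolated misleading step.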

The paper suggests 3 Deep RNN (DRNN) models for this process:

#1 Unidirectional LSTM Based DRNN Model

#2 Bidirectional LSTM Based DRNN Model

#3 Cascaded Bidirectional and Unidirectional LSTM Based DRNN Model

Signal Processing on GPUs

If you aren’t already using a GPU based workstation, you might want to consider switching from a slower CPU-only computer. Depending on the workload, you can see performance gains of 30x or more, and several libraries will help with signal processing on GPU based systems. These include:

- NVIDIA Performance Primitives (NPP) – Provides GPU-accelerated image, video, and signal processing functions.

- ArrayFire – GPU-accelerated open-source library for matrix, signal, and image processing.

- IMSL Fortran Numerical Library – Commercial Fortran library with GPU-accelerated functions for math, statistics, signal, and image processing.

Signal Processing on FPGAs

An alternative to using a GPU based system for signal processing is looking into an FPGA (field-programmable gate array) solution.

FPGAs can be programmed after manufacturing, even if the hardware is already in the “field”. FPGAs often work in tandem with CPUs to accelerate throughput for targeted functions in compute- and data-intensive workloads. This allows you to offload repetitive processing functions in workloads to boost the performance of applications.

Deciding whether to go with a GPU or FPGA solution depends on what you’re trying to do, so it’s important to discuss your use case with a sales engineer to determine benefits vs. cost of each.

Signal Processing is Coming to the Forefront of Data Analysis

The physical world is full of signals. The human body, the earth’s environment, outer space, and even animals all emit signals that can be analyzed and understood using mathematical and statistical models. Signal processing has been applied to the human brain, diseases, audio, images, financial data, and more. It is slowly coming into the mainstream of data analysis as new deep learning models are developed to analyze signal data.

Published at DZone with permission of Kevin Vu. See the original article here.
