Data Testing: The Missing Component of Data Quality

Data quality in production is incomplete without proper data testing. In this article, you will learn the basics of data testing and how to get started.

Data Quality is crucial for systems such as data warehouses, MDM, CRM, and other data-centric projects. However, DQ is often ignored in development until the system is fully operational in production. This results in a large gap in data quality as there was minimal or no testing done during the development phase of the project.

Data is like a product, and the data system is like a factory that produces it. In a factory, quality is split into two components: quality assurance and quality control. Let’s dive deeper and understand these concepts and how they apply to data quality.

![Data quality]()

Quality Assurance (QA) vs. Quality Control (QC)

QA: The quality of the raw materials and the processing methods are verified by QA processes during product manufacturing to ensure minimum defects in the final product.

QC: Even after QA, there will still be some gaps in manufacturing that are not caught by the QA processes, and that is why there is a final process of QC that checks the final product.

|

|

QUALITY ASSURANCE |

QUALITY CONTROL |

Focus on processing to prevent defects in the product. |

Identifying defects in the product before delivery. |

|

|

|

|

|

|

|

How do the QA and QC concepts from manufacturing apply to the data domain? What are the shortcomings of the traditional data quality methods where data testing is often ignored?

Limitation of Legacy Data Quality Approach

The above comparison shows that DQ in the postproduction phase is QC, which is just 50% of the DQ process. The other 50% is Quality Assurance or Data Testing in development is missing. Here are a few shortcomings of traditional data quality:

- ETL Testing: Data quality tools are designed to test the data and not for ETL testing.

- Too Little, Too Late: Data Quality processes are only applied when the final data is delivered in production. By this time, the defective data system is already in production.

- Garbage In, Garbage Out: The raw data that was used during development was never tested. So, the developer was not aware of all the permutations and combinations of data that were possible.

- Bad Process Results in Bad Data: The data processes that transform the data itself might be incorrect, and that will result in poor-quality data.

- Incorrect Orchestration of Data Processes: Usually, data processing is the execution of various data processes in a particular order and time. This is required to integrate data from multiple sources into a single unified view.

- Incorrect Data Schema: If the data model has issues such as incorrect data types, wrong data type lengths, precisions, or missing constraints, then many data issues will later pop up in production data.

Remember, these issues can only be dealt with during the development phase of the project by implementing proper QA or data testing.

Understanding Data Testing

Data Testing is a method to test and certify the data and the data processes before deploying the code in production. It consists of raw data testing, ETL testing, and process orchestration.

Data testing consists of the following activities.

|

Testing raw data to ensure the data is as expected.

|

|

Testing the ETL process to ensure it is coded correctly and transforming the data as per the requirements.

|

|

Business validation of the output of the data generated by the ETL process.

|

Getting Started With Data Testing

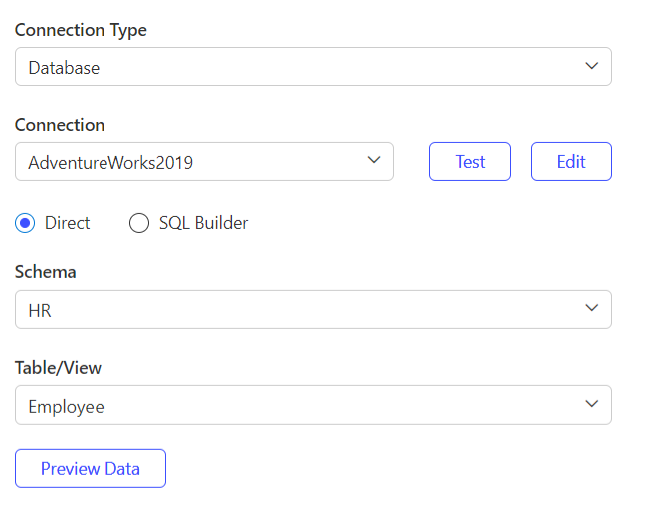

A major part of data testing involves testing ETL processes. In iceDQ, this is done by implementing the following steps:

1. Identify the Process for Testing

- Identify the data process that is tested. In this case, we are taking the “LOAD_EMP_DATA” process.

- Find the source database. Here, it is “ADVENTURE WORKS DB,” and the schema is “HR”

- Find the destination database “EDW_DB.”

2. Find the Source and Target Tables Used by the Data Process

- The source table is “EMPLOYEE.”

- The destination table is “EMPLOYEE_DIM.”

3. Connecting to Databases

- Create a connection to the source database from where the data is read by the process.

- Create another connection for the target database where the processed data is loaded.

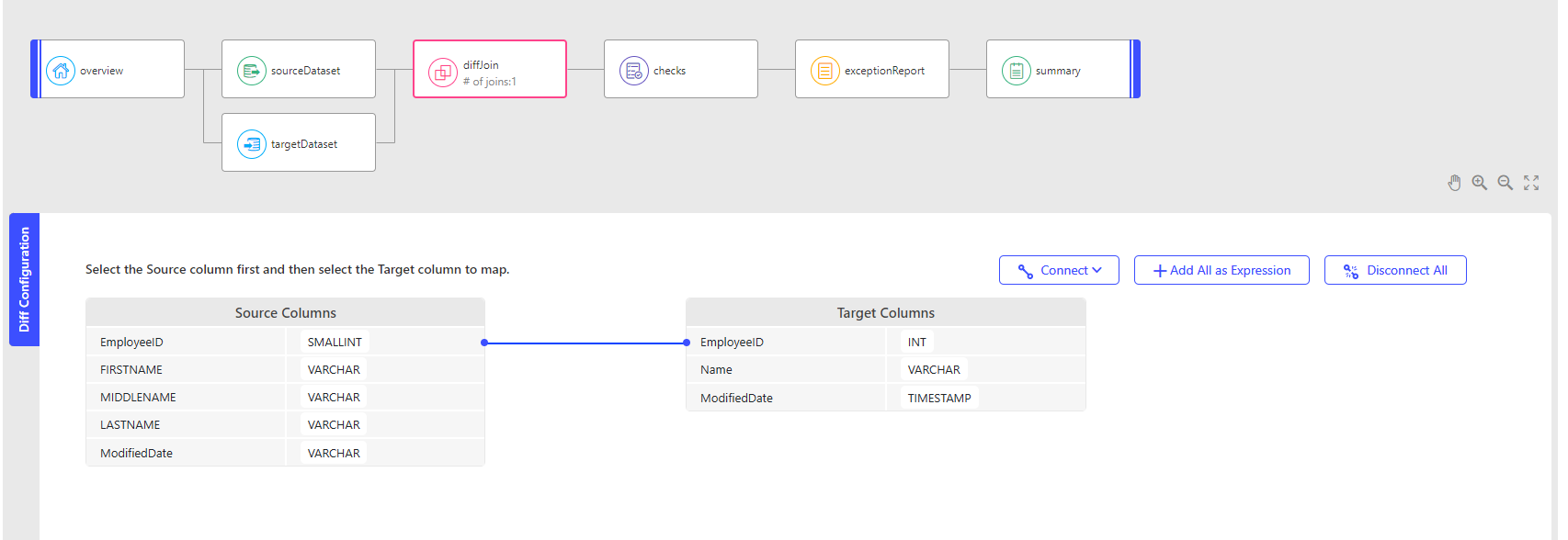

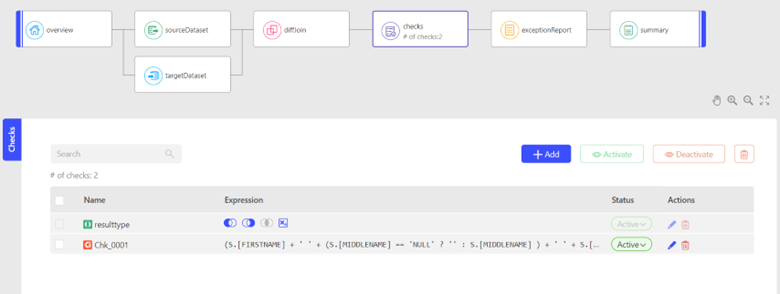

4. Creating and Executing Data Testing Rules

- In this case, most of the data transformation is happening for the employee’s name.

- The source has a “first name” and “last name” as columns.

- However, the destination has a complete name.

- The data transformation combines the first and last names to provide the complete name.

- Hence, a check is created to reconcile the data transformation.

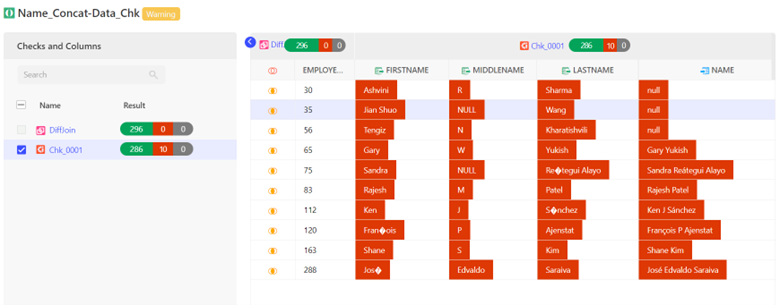

5. Reviewing the Output of Data

- Once the test is executed, iceDQ will generate data exceptions that show the defects in data transformations, as highlighted below.

- Based on the defects, the data process is certified or rejected.

Conclusion

We at iceDQ believe that data quality is not just a production concept, but equal or more efforts are put into data testing during development. A combined approach of Data Testing during development and Data Monitoring in production will provide the best outcome for data quality, and that too in a very effective way.

Comments