Creating a Positive Developer Experience for Container-Based Applications: From Soup to Nuts

Take a look at this article from our recently-released Containers Guide that answers some of your pressing questions about adopting Kubernetes.


This article is featured in the new DZone Guide to Containers: Development and Management. Get your free copy for more insightful articles, industry statistics, and more!

Nearly every engineering team working on a web-based application realizes that it would benefit from a deployment and runtime platform, and ideally some kind of self-service platform (much like a PaaS), but as the joke goes, the only requirement is that “it has to be built by them.” Open-source and commercial PaaSes are now coming of age, particularly with the emergence of Kubernetes as the de facto abstraction layer over low-level compute and network fabric. Commercially packaged versions of the Cloud Foundry Foundation’s Cloud Foundry distribution (such as that by Pivotal), alongside Red Hat’s OpenShift, are growing in popularity across the industry. Not every team wants to work with such fully featured PaaSes, though, and you can now also find a variety of pluggable components that remove much of the pain of assembling your own bespoke platform. One topic that often gets overlooked when assembling your own platform, however, is the associated developer workflow and developer experience (DevEx), which should drive the selection of tooling. This article explores this topic in more detail.

Infrastructure, Platform and Workflow

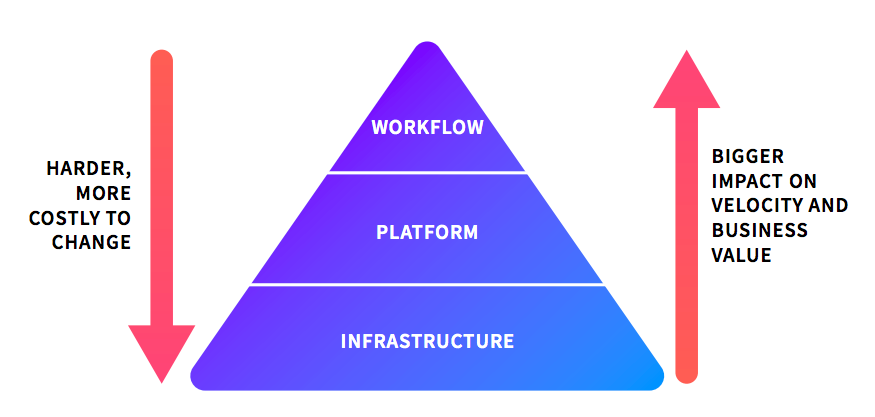

In a previous article for The New Stack, Kubernetes and PaaS: The Force of Developer Experience and Workflow, I introduced how the Datawire team often talks with customers about three high-level foundational concepts of modern software delivery (infrastructure, platform, and workflow) and how these impact both technical platform decisions and the delivery of value to stakeholders.

If Kubernetes Is the Platform, What’s the Workflow?

One of the many advantages of deploying systems using Kubernetes is that it provides just enough platform features to abstract away most of the infrastructure, at least from the development team’s point of view. Ideally, you will have a dedicated platform team to manage the infrastructure and Kubernetes cluster, or perhaps use a fully managed offering like Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), or Amazon Elastic Kubernetes Service (EKS). Developers will still benefit from learning about the underlying infrastructure and how the platform interfaces with it (cultivating “mechanical sympathy”), but fundamentally, their interaction with the platform should largely be driven by self-service dashboards, tooling, and SDKs.

Self-service is not only about reducing the development friction between an idea and delivered (deployed and observed) value. It’s also about allowing different parts of the organization to pick and choose their workflow and tooling, and ultimately make an informed trade-off between velocity and stability (or increase both velocity and stability). There are several key areas of the software delivery life cycle where this applies:

Structuring code and automating (container) build and deployment.

Local development, potentially against a remote cluster, due to local resource constraints or the need to interface with remote services.

Post-production testing and validation, such as shadowing traffic and canary testing (and the associated creation of metrics to support hypothesis testing).

Several tools and frameworks are emerging within this space, and they each offer various opinions and present trade-offs that you must consider.

Emerging Developer Workflow Frameworks

There is a lot of activity in the space of Kubernetes developer workflow tooling. Shahidh K. Muhammed recently wrote an excellent Medium post, Draft vs. Gitkube vs. Helm vs. Ksonnet vs. Metaparticle vs. Skaffold, which offered a comparison of tools that help developers build and deploy their apps on Kubernetes (although he did miss Forge!). Matt Farina has also written a very useful blog post for engineers looking to understand application artifacts, package management, and deployment options within this space: Kubernetes: Where Helm and Related Tools Sit.

Learning from these sources is essential, but it is also often worth looking a little bit deeper into your development process itself, and then selecting appropriate tooling. Some questions to explore include:

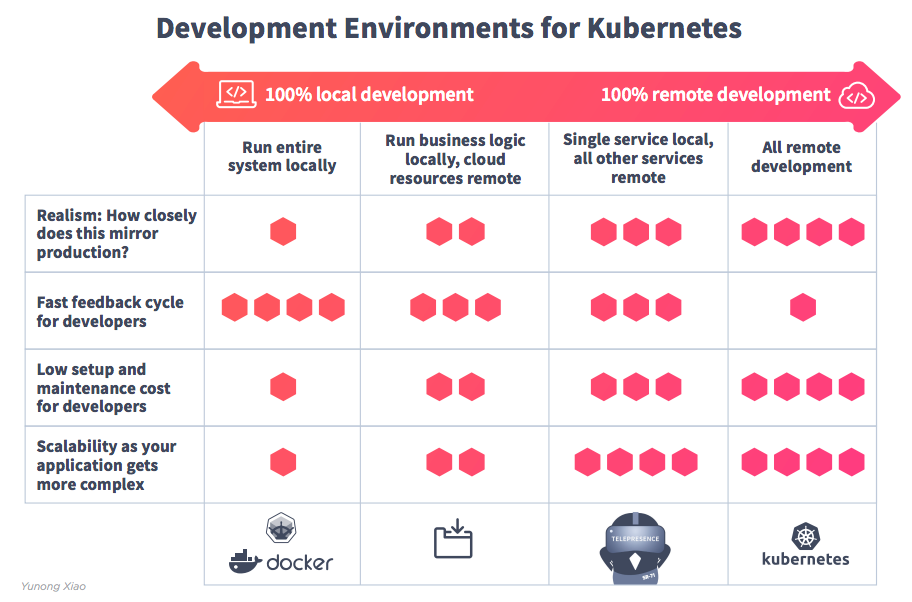

Do you want to develop and test services locally, or within the cluster?

Working locally has many advantages. I’m definitely an advocate of techniques such as BDD and TDD that promote rapid code and test cycles, and these approaches do require a workflow and platform that enable fast development iterations. There is also no denying that there are many benefits — such as iteration speed and the limited reliance on third-party services — that come from being able to work on a standalone local instance of a service during development. Finally, there is an argument that your system may not be adhering to the architectural best practices of high cohesion and loose coupling if you find that you cannot work on a service locally in isolation.

Some teams want to maintain minimal (thin client) development environments locally and manage all of their tooling in the cloud. The rise in popularity of cloud-based IDEs, such as AWS Cloud9 and Google Cloud Shell Editor, can be seen as a validation of this. Although this option typically increases your reliance on a third-party cloud vendor, the near-unlimited resources of the cloud mean that you can spin up a lot more hardware and test against real cloud services and data stores.

Using local/remote container development and debugging tools like Telepresence and Squash allows you to implement a hybrid approach where the majority of your system (or perhaps specific environments, like staging and pre-live) can be hosted remotely in a Kubernetes cluster, but you can code and diagnose using all of your locally installed tooling.

Do you have an opinion on code repository structure?

Using a monorepo can bring many benefits and challenges. Coordination of integration and testing across services is generally easier, and so is service dependency management. For example, developer workflow tooling such as Forge can automatically re-deploy dependent services when a code change is made to another related service. However, one of the challenges associated with using a monorepo is developing the workflow discipline to avoid code “merge hell” across service boundaries.
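The idea of re-deploying dependent services after a change can be sketched as a small graph traversal. This is only an illustration of the concept, not a description of how Forge or any other tool implements it; the service names and the `DEPENDS_ON` mapping are hypothetical.

```python
from collections import defaultdict, deque


def services_to_redeploy(depends_on, changed):
    """Return the changed service plus every service that depends on it,
    directly or transitively, in breadth-first order."""
    # Invert the graph: for each service, record which services depend on it.
    dependents = defaultdict(set)
    for service, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(service)

    order = [changed]
    seen = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        # Sort so the result is deterministic.
        for svc in sorted(dependents[current]):
            if svc not in seen:
                seen.add(svc)
                order.append(svc)
                queue.append(svc)
    return order


# Hypothetical monorepo layout: service -> services it depends on.
DEPENDS_ON = {
    "frontend": ["orders", "users"],
    "orders": ["users", "payments"],
    "payments": [],
    "users": [],
}

if __name__ == "__main__":
    # A change to "users" affects everything that calls it.
    print(services_to_redeploy(DEPENDS_ON, "users"))
```

In a monorepo, a mapping like this can usually be derived from the repository itself, which is part of why cross-service coordination tends to be easier there.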

The multi-repo VCS option also has pros and cons. There can be clearer ownership, and it is often easier to initialize and orchestrate services for local running and debugging. However, ensuring code-level standardization (and understandability) across repos can be challenging, as can managing integration and coordinating deployment to environments. Consumer-driven contract tooling such as Pact and Spring Cloud Contract provide options for testing integration at the interface level, and frameworks like Helm (and Draft for a slicker developer experience) and Ksonnet can be used to manage service dependencies across your system.
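To make the idea of testing integration at the interface level more concrete, here is a minimal sketch of a consumer-driven contract check. It deliberately does not use the Pact or Spring Cloud Contract APIs; the contract format, the function name, and the example services are all assumptions made for illustration.

```python
def contract_violations(contract, response):
    """List the ways a provider response fails a consumer's expectations.

    `contract` maps each field the consumer relies on to its expected type.
    Extra fields in the response are allowed, because the consumer ignores them.
    """
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    return problems


# Hypothetical contract: what an "orders" service expects from a "users" API.
ORDERS_EXPECTS_FROM_USERS = {"id": int, "email": str}

if __name__ == "__main__":
    ok = {"id": 7, "email": "a@example.com", "nickname": "al"}
    broken = {"id": "7"}
    print(contract_violations(ORDERS_EXPECTS_FROM_USERS, ok))
    print(contract_violations(ORDERS_EXPECTS_FROM_USERS, broken))
```

The point of the pattern is that the consumer's repo owns the contract while the provider's build runs the check, so a breaking change is caught before the two services are deployed together.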

Do you want to implement “guide rails” for your development teams?

Larger teams and enterprises often want to provide comprehensive guide rails for development teams; these constrain the workflow and toolset being used. Doing this has many advantages: it reduces friction when moving engineers across projects, and it makes integrated debug tooling and auditing easier to create. The key trade-off is limited flexibility when exceptional circumstances call for a different workflow, such as when a project requires a custom build and deployment or differing test tooling. Red Hat’s OpenShift and Pivotal Cloud Foundry offer PaaSes that are popular within many enterprise organizations.

Startups and small/medium enterprises (SMEs) may instead value team independence, where each team chooses the most appropriate workflow and developer tooling for them. My colleague, Rafael Schloming, has spoken about the associated benefits and challenges at QCon San Francisco: Patterns for Microservice Developer Workflows and Deployment. Teams embracing this approach often operate a Kubernetes cluster via a cloud vendor, such as Google’s GKE or Azure’s AKS, and utilize a combination of vendor services and open-source tooling.

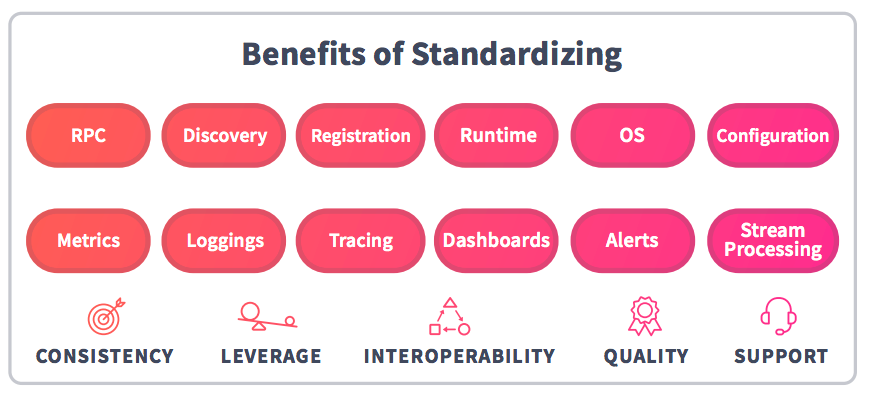

A hybrid approach, such as that espoused by Netflix, is to provide a centralized platform team and approved/managed tooling, but allow any service team the freedom to implement their own workflow and associated tooling that they will also have the responsibility for managing. My summary of Yunong Xiao’s QCon New York talk provides more insight into these ideas: The “Paved Road” PaaS for Microservices at Netflix. This hybrid approach is the style we favor at Datawire, and we are building open-source tooling to support this.

How comfortable (and capable) is the team in regard to automation?

One of the many benefits of platforms like Kubernetes is the API-driven approach to deploying and operating applications. Teams that are comfortable with automating workflows with tooling and APIs can take advantage of increased release velocity and stability via automated testing in production through the use of traffic shadowing and canary testing (and the associated observability, monitoring, and logging tooling).
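As a rough sketch of what automating a canary test involves, the two functions below split traffic deterministically and then compare error rates to decide whether the canary looks healthy. The hashing scheme, the function names, and the one-percentage-point threshold are assumptions chosen for illustration; in practice this logic usually lives in an ingress, API gateway, or service mesh rather than in application code.

```python
import hashlib


def route(request_id, canary_percent):
    """Deterministically send roughly canary_percent% of requests to the canary."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


def canary_is_healthy(stable_errors, stable_total, canary_errors, canary_total,
                      max_extra_error_rate=0.01):
    """True if the canary's error rate is at most max_extra_error_rate above
    the stable version's error rate."""
    if stable_total == 0 or canary_total == 0:
        return False  # not enough data to support a decision
    stable_rate = stable_errors / stable_total
    canary_rate = canary_errors / canary_total
    return canary_rate - stable_rate <= max_extra_error_rate


if __name__ == "__main__":
    # Route 10,000 hypothetical request IDs with a 10% canary weight.
    hits = sum(route(f"req-{i}", 10) == "canary" for i in range(10_000))
    print(f"canary received {hits} of 10000 requests")
    print(canary_is_healthy(10, 1000, 5, 100))
```

Hashing the request ID (rather than choosing randomly per request) keeps routing repeatable, which makes it easier to correlate a given request with the metrics used for the hypothesis test.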

Using container technology offers many other benefits in addition to the provision of management APIs. However, if existing workflows, practices, and tooling are kept while embracing this new technology, then limited benefits may be seen.

Conclusions

This article has provided several questions that you and your team must ask when adopting Kubernetes as your platform of choice. Kubernetes and container technology offer a fantastic opportunity, but to take full advantage of it, you will most likely need to change your workflow. Every software development organization needs a platform and associated workflow and developer experience, but the key question is: How much of this do you want to build yourself? Any technical leader will benefit from understanding the value proposition of PaaS and the trade-offs and benefits of assembling key components of a platform yourself.


